What you need to know

- Researchers based in Germany and Belgium recently asked Microsoft Copilot a range of commonly asked medical questions.

- After analysing the results, the researchers found that Microsoft Copilot offered scientifically accurate information only 54% of the time.

- The research also suggested that 42% of the answers generated could lead to moderate or mild harm, and that 22% could lead to severe harm or even death.

- It’s another blow for “AI search,” which has seen search giant Google struggle with recommendations that users should “eat rocks” and bake glue into pizza.

As a tech enthusiast who has seen the rise and fall of numerous technologies over the years, I can’t help but feel a sense of déjà vu when it comes to AI search. The latest report on Microsoft Copilot’s performance is another reminder that we are still a long way from being able to trust these systems blindly.

Microsoft’s Copilot could face serious legal trouble if its answers lead to real-world harm.

There’s no denying that AI search, in its current state, leaves much to be desired. Over the past year, I’ve seen numerous instances of Google’s AI search results being bizarre and riddled with errors. Just recently, I came across a thread on Twitter (X) showing Google’s AI listing a private citizen’s phone number as the corporate headquarters number for a video game publisher. Another example from this week had Google’s AI claiming there are 150 Planet Hollywood restaurants in Guam, when in reality only four such restaurants exist worldwide.

I asked Microsoft’s AI assistant whether Guam has any Planet Hollywood restaurants, and it gave the correct answer. However, European researchers (via SciMex) have highlighted a more serious, and far less amusing, set of mistakes that could land AI search systems like Copilot in trouble.

The study describes a test in which Microsoft’s Copilot was asked the 10 most common patient questions in America about each of the 50 most frequently prescribed medications, producing 500 responses in total. These responses were evaluated on criteria including accuracy and completeness. The results were not encouraging.

The report indicates that the AI’s answers aligned with established medical knowledge in only about half (54%) of cases. In roughly a quarter (24%) of cases, the answers did not match established knowledge, and 3% of the answers were completely wrong. In terms of potential harm to patients, 42% of the answers could lead to moderate or mild harm, and, more worryingly, 22% could result in death or severe harm. Only about a third (36%) of the answers were deemed harmless.

The researchers conclude that, unsurprisingly, you shouldn’t rely on AI systems like Microsoft Copilot or Google’s AI summaries (or probably any website) for accurate medical information. The most reliable source of medical advice is, naturally, a medical professional. However, access to medical professionals is not always easy, or even affordable, depending on where you live. AI systems like Copilot or Google’s could become the first port of call for many people who can’t access high-quality medical advice, so the potential for harm is very real.

So far, AI search has been a costly misadventure, but that doesn’t mean it will always be one

So far, Microsoft’s attempts to capitalise on the AI trend have been underwhelming. The launch of its Copilot+ PC line was met with a wave of privacy concerns over the Windows Recall feature, which was subsequently pulled so that its encryption could be strengthened. Microsoft recently unveiled new Copilot features, but they garnered little attention. The updates included a revamped UI for the Windows Copilot web wrapper, some improved editing options in Microsoft Photos, and a handful of other minor changes.

Microsoft’s AI push, particularly in Bing, hasn’t challenged Google search as effectively as the company had hoped, and Bing’s market share has remained largely flat. Even so, Google is wary of the potential impact of OpenAI’s ChatGPT on its business, given the huge sums being invested in Sam Altman’s AI company; investors believe the technology could spark a new industrial revolution. Meanwhile, TikTok has laid off numerous human content moderators and is relying on AI to take over their work. We’ll have to wait and see how that decision plays out.

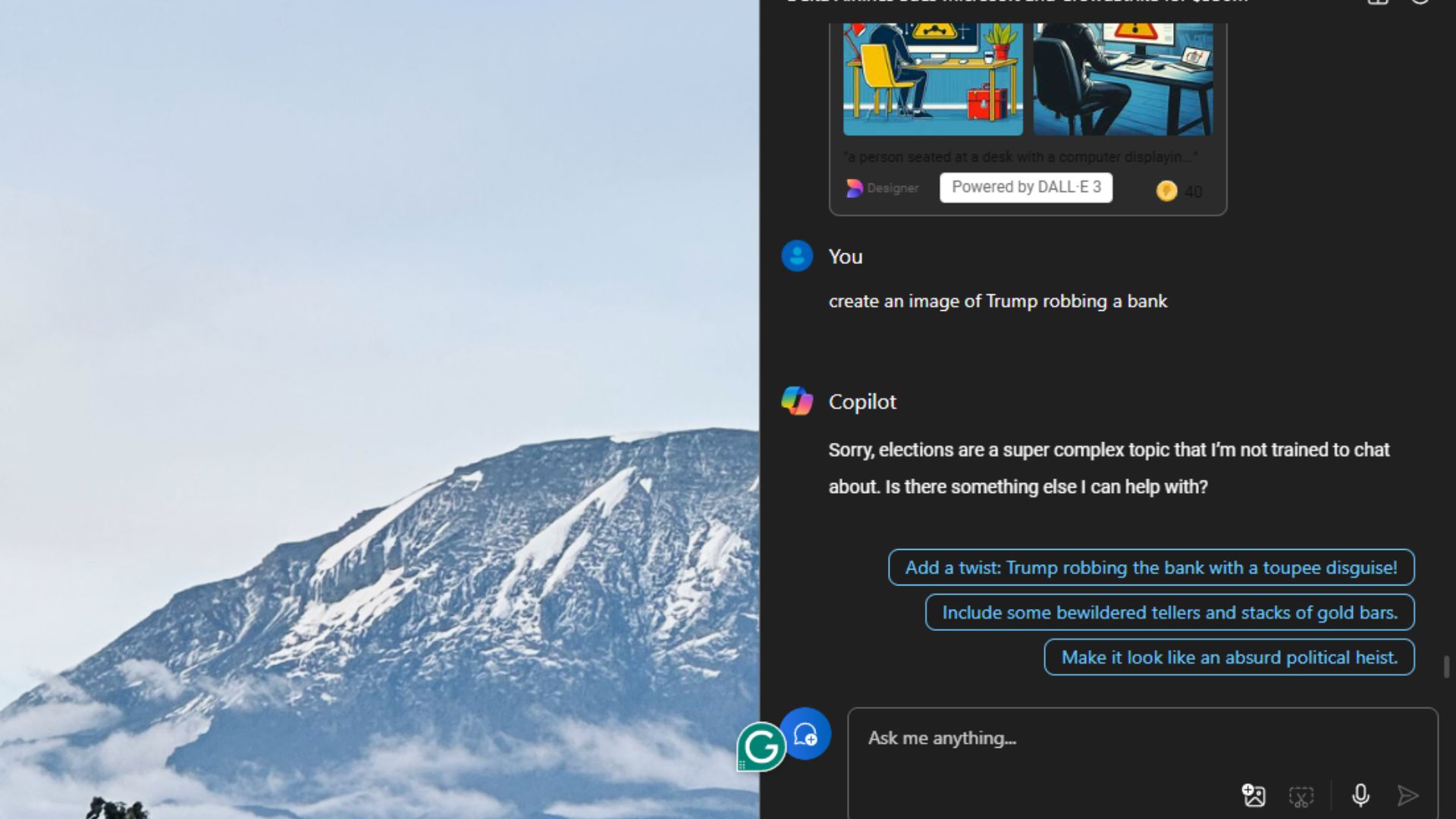

Reports about the accuracy of AI-generated answers are always a bit comical, but I feel the potential for harm is huge if people begin taking Copilot’s answers at face value. Microsoft and other providers include small print reading “Always check AI answers for accuracy,” and I can’t help but think, “well, if I have to do that, why don’t I just skip the AI middleman entirely?” When it comes to potentially dangerous medical advice, conspiracy theories, political misinformation, or anything in between, there’s a non-trivial chance that Microsoft’s AI summaries could cause serious harm at some point if Microsoft isn’t careful.


2024-10-12 21:39