As a seasoned analyst with decades of experience observing the rapid evolution of technology, I find myself increasingly concerned about the impending advent of Artificial General Intelligence (AGI). The recent comments from Eric Schmidt and other prominent figures in the field only underscore this apprehension.

Beyond safety and privacy issues, there is an ongoing debate about whether advanced generative AI could pose a threat to human existence as the technology continues to evolve rapidly. Notably, Roman Yampolskiy, an AI safety researcher and director of the Cyber Security Laboratory at the University of Louisville, who puts the probability that AI will eventually end humanity at 99.99999%, has suggested that achieving Artificial General Intelligence (AGI) is no longer tied to a specific timeline. Instead, he believes it is simply a matter of who can amass enough money to buy sufficient computing power and data centers.

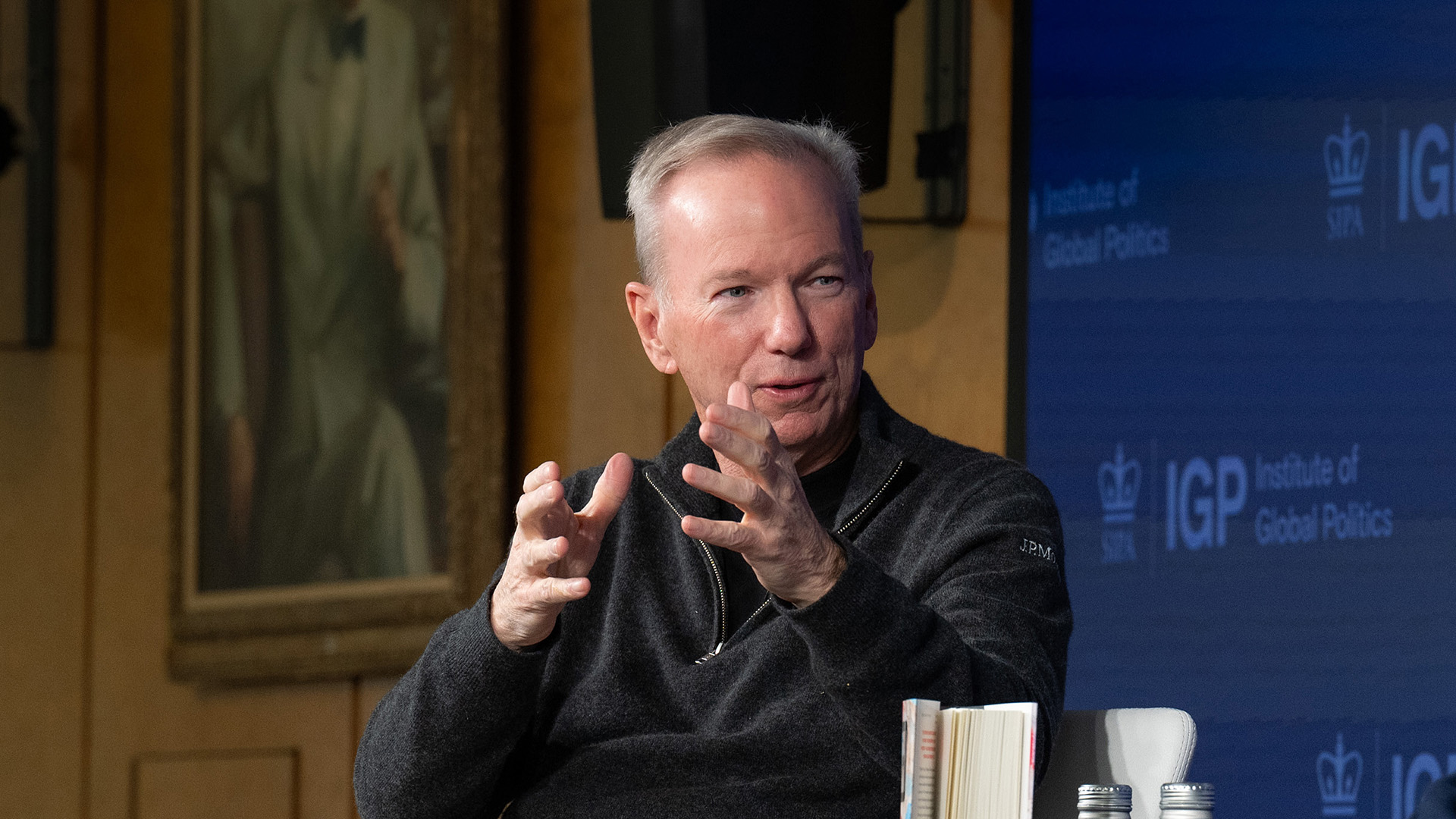

Sam Altman, CEO of OpenAI, and Dario Amodei of Anthropic both foresee artificial general intelligence (AGI), meaning AI systems that exceed human cognitive abilities across a range of tasks, being developed within the next three years. While Altman thinks this could happen sooner than expected using existing hardware, Eric Schmidt, former CEO of Google, suggests we might need to reconsider continuing AI development once these systems start improving themselves on their own (as reported by Fortune).

In a recent interview on ABC News, Schmidt made his position clear: if a system becomes capable of improving itself, we should think seriously about shutting it down. He added that doing so would be extremely difficult and that maintaining that balance would take great effort.

Schmidt’s remarks on the swift advancement of AI come at a crucial moment: multiple reports suggest that OpenAI may have already attained AGI with the public launch of its o1 reasoning model. Moreover, OpenAI CEO Sam Altman has hinted that we might be just a few thousand days from reaching superintelligence.

Is Artificial General Intelligence (AGI) safe?

A former OpenAI employee has warned that OpenAI could soon reach the highly sought-after AGI milestone, but that the company behind ChatGPT may struggle to control an AI system that exceeds human cognitive abilities.

According to Sam Altman, the safety concerns raised about the AGI milestone may not materialize at the moment AGI is achieved. Instead, he suggests that AGI might arrive with minimal societal impact, followed by a prolonged phase of development extending into 2025 and beyond. During this period, he expects AI agents and systems to surpass human abilities in most tasks.


2024-12-23 13:39