As artificial intelligence becomes more widely used and more capable, security is a growing concern for many users. In 2023, a study from Microsoft found that cybercriminals were using AI tools such as Microsoft Copilot and OpenAI’s ChatGPT to run phishing attacks on unsuspecting users.

Bad actors are also getting more inventive, devising clever ways to bypass security measures and gain unauthorized access to confidential information. In the past few weeks, there have been several reports of users crafting convincing ruses that tricked ChatGPT into lowering its guardrails and generating genuine Windows 10 activation keys.

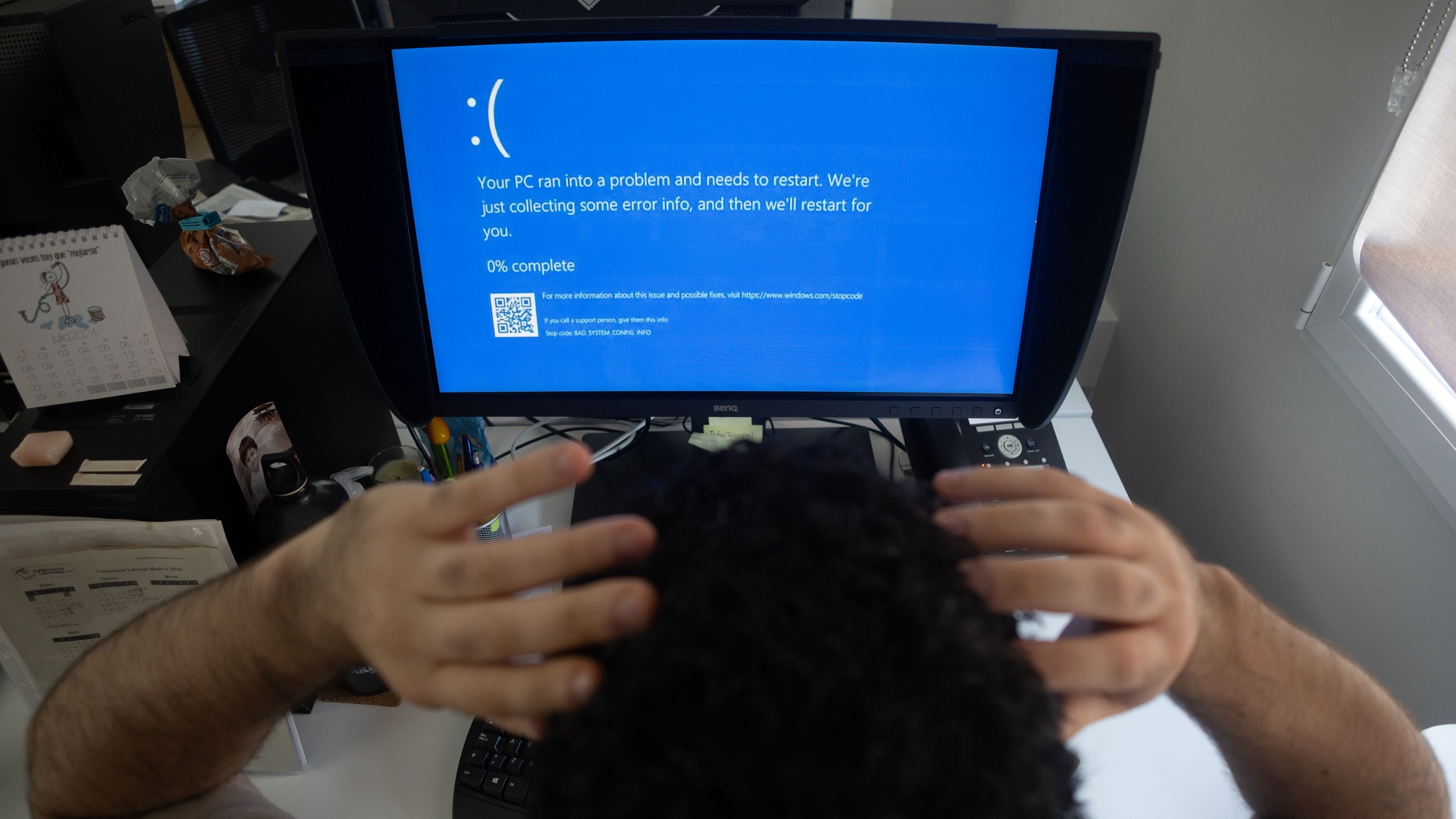

Windows users may recognize Microsoft Defender, the security platform Microsoft developed to protect them against attacks devised by cybercriminals.

According to a Dark Reading report (via Tom’s Hardware), researchers at Outflank plan to unveil new AI malware at the Black Hat 2025 cybersecurity conference in August. The malware is said to be able to bypass Microsoft Defender for Endpoint’s security measures.

The researcher behind the project, Avery, told Dark Reading that he spent about three months creating and training the AI malware. He spent around $1,500 to $1,600 training the Qwen 2.5 LLM specifically to get past Microsoft Defender’s security measures.

The researcher also drew some comparisons between OpenAI’s GPT models and its newer reasoning models such as o1. He acknowledges that GPT-4 was a significant improvement over GPT-3.5, but he notes that the o1 reasoning model is exceptionally proficient in areas like coding and mathematics.

AI and Reinforcement Learning forge a perfect hacker’s paradise

However, according to the researcher, those gains seemed to come at the expense of the model’s writing ability. He reached that conclusion after the release of DeepSeek’s open-source R1 model, which was accompanied by a research paper explaining how it was developed.

Avery explains that DeepSeek utilized reinforcement learning to enhance its model’s versatility in various areas, like programming. Consequently, he employed this concept while designing the AI malware to elude Microsoft Defender’s protective measures.

The researcher acknowledges that the task was challenging because most large language models (LLMs) are trained on internet data, which contains comparatively little conventional malware for a model to learn from.

In this scenario, Reinforcement Learning proved to be particularly useful. The researcher placed the Qwen 2.5 LLM in a simulated environment alongside Microsoft Defender for Endpoint. Subsequently, he devised a program to evaluate how closely the AI model approached generating an evasion tool.

According to Avery:

Clearly, it can’t do this out of the box. Once in a while, by chance, it might generate functional malware, though nothing that evades detection. When it does, it can be rewarded for producing working malware.

Through repeated adjustments, it increasingly produces functional results, not due to being shown specific examples, but by becoming progressively better at following the type of reasoning that resulted in operational malware.
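The feedback loop Avery describes (reward the rare useful output, repeat, and watch useful outputs become more common) can be illustrated with a deliberately abstract toy. The sketch below is not his training setup, which the article does not detail. It assumes a REINFORCE-style policy-gradient update, three hypothetical outcome labels (`fails`, `partial`, `target`) standing in for "broken", "works but is detected", and "works and goes undetected", and made-up reward values.

```python
import math
import random

# Hypothetical outcome categories and graded rewards (illustrative values only).
OUTCOMES = ["fails", "partial", "target"]
REWARDS = {"fails": 0.0, "partial": 0.3, "target": 1.0}


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=20000, lr=0.5, seed=0):
    """Run a toy REINFORCE loop and return the final outcome probabilities."""
    rng = random.Random(seed)
    # The starting policy mostly fails and only rarely hits the target.
    logits = [2.0, 0.0, -1.0]
    baseline = 0.0  # running average reward, used to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        choice = rng.choices(range(len(OUTCOMES)), weights=probs)[0]
        reward = REWARDS[OUTCOMES[choice]]
        advantage = reward - baseline
        # For a softmax policy, d log p(choice) / d logit_k = 1[k == choice] - p_k.
        for k in range(len(logits)):
            grad = (1.0 if k == choice else 0.0) - probs[k]
            logits[k] += lr * advantage * grad
        baseline += 0.05 * (reward - baseline)
    return softmax(logits)


if __name__ == "__main__":
    start = softmax([2.0, 0.0, -1.0])
    final = train()
    for name, before, after in zip(OUTCOMES, start, final):
        print(f"{name}: {before:.3f} -> {after:.3f}")
```

No example of a good output is ever shown to the policy. It only receives a score after each attempt, and the partial credit for `partial` gives it a gradient to follow before it reliably reaches `target`, which mirrors the point made in the quote.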

Finally, Avery plugged in an API that made it easier to query and retrieve the alerts Microsoft Defender generated. That feedback made it simpler for the model to produce malware that was less likely to trigger the security software’s alerts.

In the end, the researcher’s model was able to generate malware that could bypass Microsoft Defender’s security systems about 8% of the time.
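To put a per-attempt rate like that in perspective, the short calculation below shows how it compounds over repeated tries. It assumes each attempt is independent with the same success rate, which the article does not state; the function name `p_at_least_one` is made up for this illustration.

```python
def p_at_least_one(rate: float, attempts: int) -> float:
    """Chance of at least one success in `attempts` independent tries."""
    return 1.0 - (1.0 - rate) ** attempts


rate = 0.08  # "about 8% of the time", per the article

# Mean of a geometric distribution: about 12.5 tries per success on average.
expected_attempts = 1 / rate

if __name__ == "__main__":
    print(f"expected attempts per success: {expected_attempts:.1f}")
    for n in (1, 5, 10, 25, 50):
        print(f"{n:>2} attempts: {p_at_least_one(rate, n):.3f}")
```

Under that independence assumption, a rate that looks low for a single attempt still makes at least one success more likely than not within ten tries.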

By comparison, Anthropic’s Claude AI matched those results less than 1% of the time, while DeepSeek’s R1 did so 1.1% of the time. It will be interesting to see whether companies like Microsoft strengthen their security measures as AI-driven scams and malware grow more sophisticated. At this point, they essentially have no other option.

2025-07-15 13:09