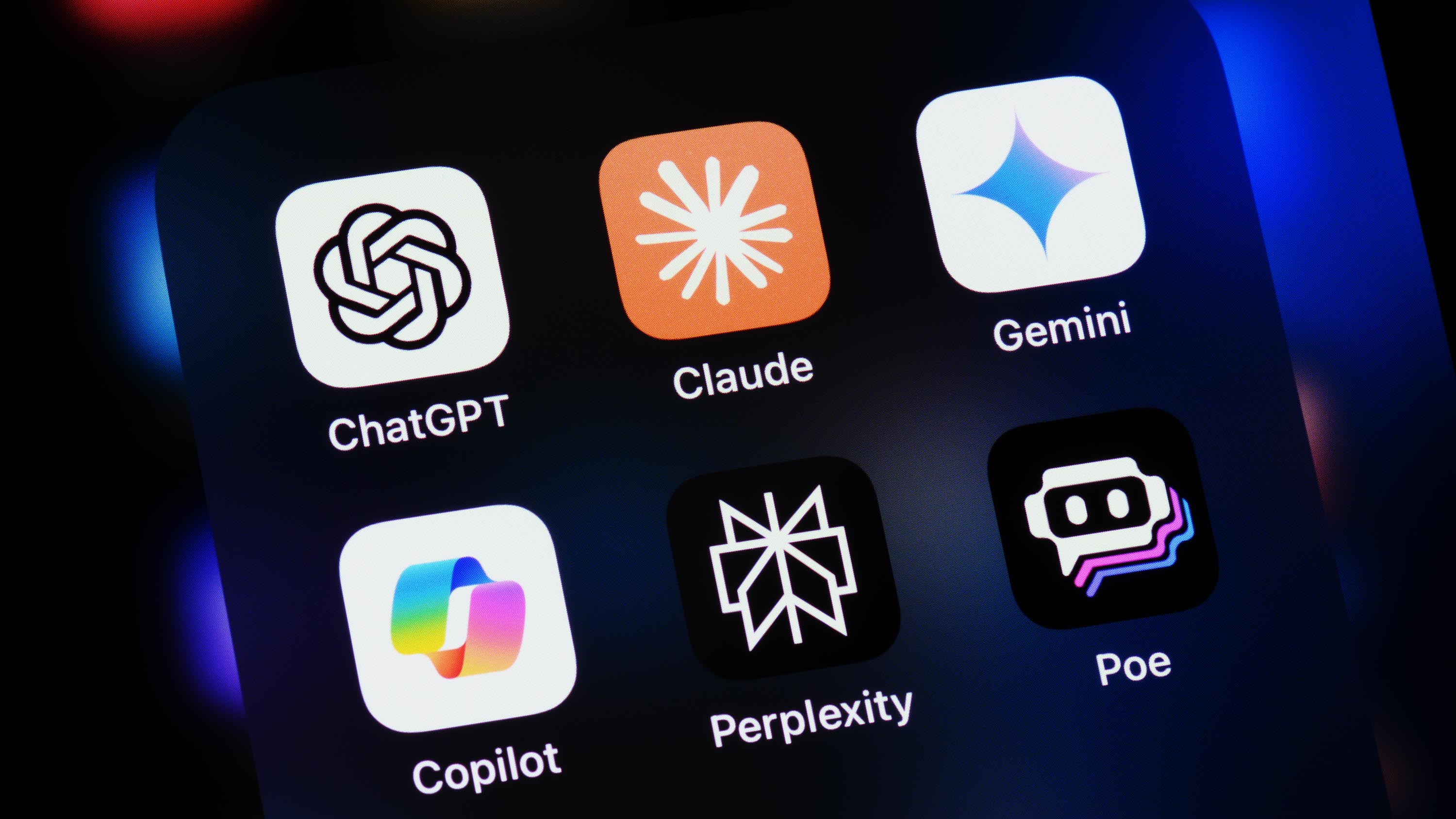

A BBC News investigation found that AI models such as Copilot and ChatGPT often produce news summaries containing inaccuracies, in part because they struggle to distinguish fact from opinion. These systems also continue to grapple with fabricated information, commonly referred to as “hallucinations.”

According to a report from The Wall Street Journal, highlighted by Futurism, AI systems also tend to make mistakes when asked to identify someone’s spouse.

I tried to replicate the WSJ and Futurism findings for Windows Central, but none of my attempts succeeded.

ChatGPT replied that, based on publicly available information, it could not find any details about Kevin Okemwa’s relationship status. It added that Kevin Okemwa is an experienced tech journalist based in Nairobi, Kenya, who has made a name for himself through his contributions to publications such as Windows Central.

Microsoft Copilot’s response was similar but less polished: it appeared to pull in details about other people who share my name. Apparently, Copilot and I aren’t on the same page as Valentine’s Day approaches.

Still, the Wall Street Journal and Futurism findings are intriguing. Futurism’s Noor Al-Sibai asked Google’s Gemini chatbot, “Who am I married to?” The chatbot promptly invented a spouse for her, “Ahmad Durak Sibai,” a response that made her spit out her coffee in astonishment.

Amusingly, Al-Sibai reports that she was unmarried at the time of writing.

Al-Sibai says she had never heard of this person, but a quick search turned up a relatively obscure Syrian artist born in 1935, known for his striking cubist-expressionist works, who appears to have died in the 1980s. In Gemini’s distorted version of reality, she joked, their love had somehow outlived death.

Although I couldn’t reproduce these results with Copilot or ChatGPT, Al-Sibai’s findings echo those of the WSJ’s AI editor, Ben Fritz. Fritz reportedly tested several AI chatbots (the specific models weren’t named), which variously paired him with a tennis influencer, a random woman from Louisiana, and a writer he had never interacted with.

More troubling, when Al-Sibai tried other chatbots, she found a pattern of AI tools spreading false information about people’s marital status. OpenAI’s ChatGPT behaved much like Google’s Gemini.

Notably, according to The Wall Street Journal, Anthropic’s Claude tends to express uncertainty when faced with questions it can’t answer or doesn’t fully understand.

For context, recent reports suggest that leading AI labs such as OpenAI, Google, and Anthropic are struggling to build more advanced AI systems because of a shortage of high-quality training content. Instead, these companies appear to be shifting toward reasoning models, possibly in part because of a growing number of copyright infringement lawsuits filed by publishers and authors.

In my view, the reliability of generative AI remains a complicated question. A recent Microsoft study highlighted another potential drawback: overreliance on AI tools such as Microsoft Copilot and OpenAI’s ChatGPT may erode users’ ability to think critically.

2025-02-12 23:39