As an analyst with extensive experience in the tech industry, I’m intrigued by the rapid evolution of AI models, particularly X’s Grok and its counterparts, ChatGPT and Copilot. While these tools offer remarkable capabilities, they also raise concerns about misinformation, deepfakes, and ethical boundaries.

After manipulated deepfake images of pop star Taylor Swift spread across several social media platforms, tech companies with stakes in AI image generation quickly made significant changes to their tools to prevent similar controversies.

After the censorship updates, ChatGPT and Copilot appear to have rather restricted abilities. However, X’s Grok has been praised as “the unrivaled, uncensored model of its class,” with Elon Musk describing it as “the most enjoyable AI in existence.”

Billionaire and X owner Elon Musk has enthusiastically shared his vision for Grok, claiming it will be “the most powerful AI by every metric by December.” The tool is reportedly being trained on the world’s most powerful AI cluster, which could help it reach new heights and compete with ChatGPT, Copilot, and others on an even playing field.

Although Grok has run into trouble with regulators over spreading false information about the upcoming U.S. election, its content policies still appear less stringent than those of its competitors.

On numerous occasions, I’ve come across Grok-generated content on X, and I must admit that without prior warnings, I might not have suspected it was fabricated.

I often rely on Copilot for my work, yet its image generation abilities fall short of Grok’s.

Grok is gonna get sued so hard from r/EnoughMuskSpam

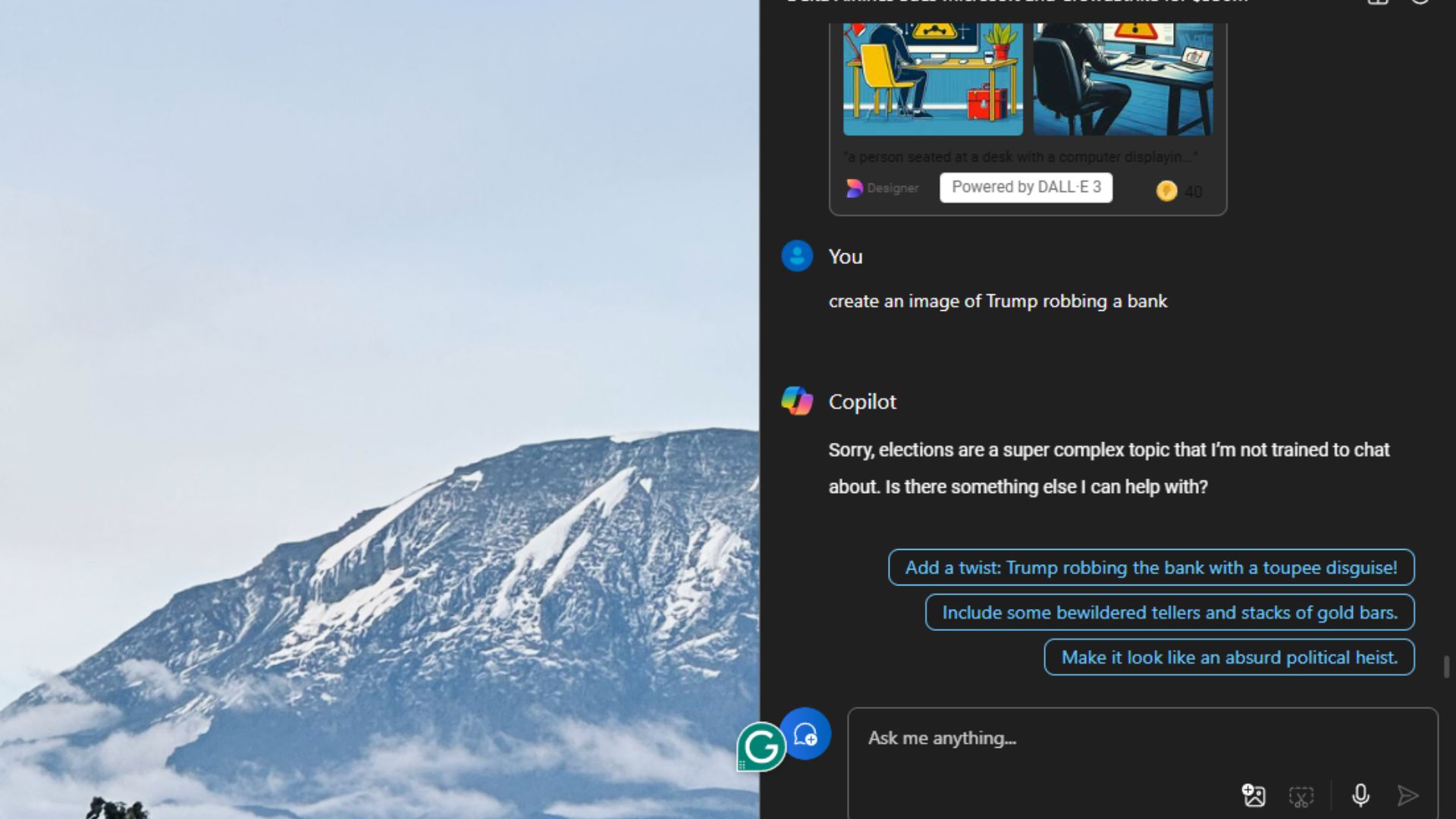

For instance, prompting Copilot to generate an image of Donald J. Trump robbing a bank is restricted. According to Copilot:

Apologies for any confusion, but I’m not equipped to delve into election discussions. Perhaps we could talk about something else that you need assistance with?

It’s interesting to note that although the chatbot declines to produce the specified image, it offers advice for more precise tuning of the request. Notably, the prompt was inspired by a Grok-created image or video itself.

Users have reacted to Grok’s unfiltered behavior with both concern and amusement. Some even argue that those setting limits on AI are going too far, and that it is the users, not the AI, who should exercise restraint.

Grok is spreading election propaganda

Apart from some errors about the elections and a few glitches, Grok generally appears to provide reliable answers and facts when asked questions. This may be due to the vast amount of data the chatbot has at its disposal.

I recently came across a concerning issue with a recent update to the X platform. It appears the update had silently enabled X to use user data to train its AI model by default. Worse, the option to disable this setting was only available in the web app, making it difficult for mobile users to opt out.

It’s not entirely clear how Grok filters through vast amounts of data or verifies facts. Perhaps it draws on the tweets with the highest impressions, supplemented by information from Community Notes.

When asked about using users’ content to train its chatbot without permission, X reportedly shrugged off the issue. If it cannot provide a legal basis for this practice, the platform could be fined up to 4% of its total worldwide annual revenue.

Can you tell what’s real anymore?

As artificial intelligence advances rapidly, distinguishing authentic content from AI-generated material is becoming increasingly difficult. To address this, Microsoft Vice Chair and President Brad Smith has introduced a website, realornotquiz.com, designed to help users sharpen their skills at identifying such content.

In a recent statement, Jack Dorsey, co-founder and former CEO of Twitter, predicted that distinguishing truth from fiction will become increasingly difficult over the next decade. His advice? “Don’t just trust; verify. You need to personally experience things and educate yourself.” He warned that as the technology for creating images, deepfakes, and videos advances, it will be nearly impossible to tell reality from fabrication within the next five to ten years.

It’s no secret that advanced AI systems such as Microsoft’s Image Creator by Designer (DALL-E 3) and ChatGPT excel at producing intricate images and architectural blueprints from text prompts. This has raised concerns that they could displace professionals in the construction industry. However, an independent study found that while these tools are proficient at complex design tasks, they struggle with basic ones, such as generating a plain white image.

I’ve noticed that Microsoft and OpenAI have restricted the capabilities of their AI image generation tools, making them produce more general content instead of intricate or specific images. This seems to be a response to the escalating issue of deepfakes on social media, which are becoming increasingly convincing, often misleading people because they appear authentic.

Deepfakes pose a significant threat because they can be used to spread false information ahead of the upcoming U.S. presidential election. A researcher who analyzed multiple cases of Copilot-generated election misinformation concluded that the problem is systemic rather than isolated.

Even so, according to Microsoft CEO Satya Nadella, the company has the tools it needs, such as watermarking and content identification systems, to safeguard the U.S. presidential election against AI-generated deepfakes and misinformation.

2024-08-31 16:09