What you need to know

- LinkedIn quietly shipped a new feature called Data for Generative AI Improvement. It uses your data from the employment-focused social network to train AI models belonging to Microsoft and its affiliates.

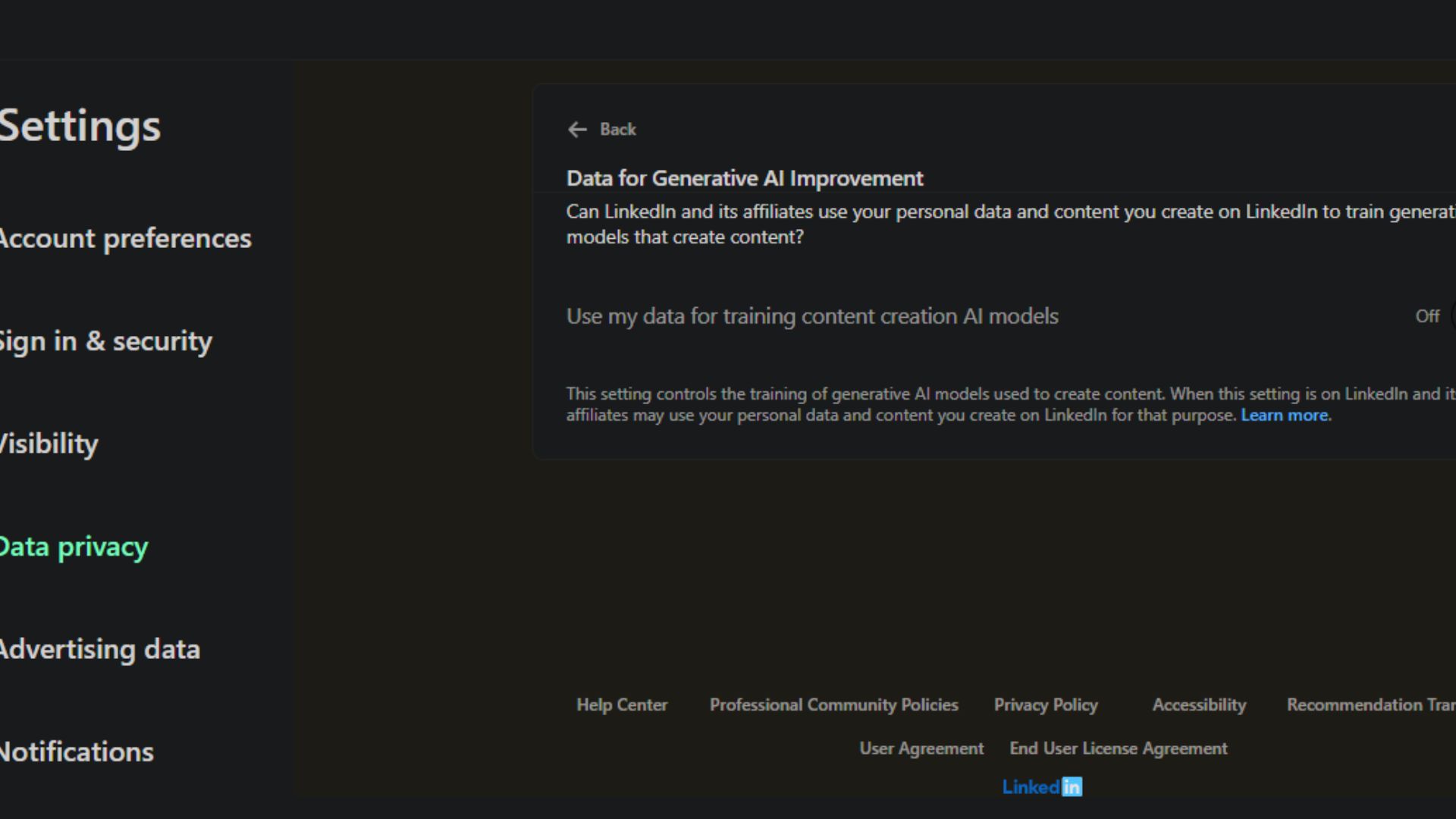

- The feature is enabled by default, but you can turn it off via the Data Privacy settings on the web and mobile.

- EU, EEA, and Switzerland users have been exempted from the controversial feature, presumably because of the EU AI Act’s stringent rules.

As a long-time LinkedIn user and tech enthusiast, I'm increasingly concerned about the platform's new Data for Generative AI Improvement feature. My data appears to be used to train AI models without my explicit consent, which is troubling given the ongoing copyright infringement lawsuits against other AI firms.

Microsoft and OpenAI face numerous lawsuits alleging copyright infringement. Writers and publishers object to AI models being trained on content scraped from the web.

Responding to one of the many copyright lawsuits against OpenAI, CEO Sam Altman said it would be impossible to build tools like ChatGPT without copyrighted material. He also argued that existing copyright law does not prohibit using copyrighted content to train AI systems.

LinkedIn is not the only company under scrutiny for how it sources AI training data. Anthropic was sued by a group of authors over the use of their work to train its models, and LinkedIn's use of member data for AI training raises similar questions about consent and compensation.

LinkedIn's new Data for Generative AI Improvement setting automatically allows AI systems to learn from your data, and it is turned on by default. As a result, Microsoft and its affiliates can now use the data of LinkedIn's 134.5 million daily active users for AI training without explicit user consent.

A LinkedIn spokesperson told PCMag that "affiliates" refers to any company owned by Microsoft. Notably, LinkedIn is not passing the collected data on to OpenAI.

Users in the EU, EEA, and Switzerland are notably excluded from the feature. This may be due to the EU AI Act, which imposes strict requirements on the development, testing, and validation of high-risk AI systems.

LinkedIn's terms and conditions state:

When developing our AI models on LinkedIn, we strive to limit the inclusion of personal information within the datasets we use for training. This includes employing confidentiality measures such as redaction or elimination of personal data in the training data.

It's unclear whether the feature covers only posts on LinkedIn or extends to other content such as direct messages. LinkedIn says that when you use its platform, it collects and uses data about your usage, which can include personal data. This may include AI-generated content, your posts and articles, how often you use LinkedIn, your preferred language, and any feedback you've given its teams.

How to disable LinkedIn’s Data for Generative AI Improvement feature

To stop LinkedIn from using your data to improve its generative AI models:

- Open the Settings tab.

- Select Data Privacy.

- Find Data for Generative AI Improvement and switch the toggle off.

In July, X drew criticism for training Elon Musk's Grok AI on user data without consent, much like LinkedIn's controversial new feature. As with LinkedIn, the training setting was enabled by default. Worse, the option to disable it was only available on the web, making it difficult for mobile users to opt out.

X aims to make Grok the top-performing AI across all benchmarks by December this year, but its data practices have drawn close scrutiny from regulators. If X cannot establish a legitimate basis for using user data to train its chatbot without explicit consent, it could face fines of up to 4% of its worldwide annual revenue under the General Data Protection Regulation (GDPR).

2024-09-19 20:39