NVIDIA has recently faced criticism for equipping some of its newly launched RTX 50-series graphics processing units (GPUs) with only 8GB of video random access memory (VRAM).

Specifically, the RTX 5060 Ti comes in both 8GB and 16GB versions, while the non-Ti RTX 5060 is available only with 8GB. As for the RTX 5050, it has more pressing issues than its 8GB VRAM capacity.

At its CES 2025 keynote, before the Blackwell-based lineup reached the market, NVIDIA touted lower VRAM requirements for its new GPUs. Even so, some users have run into problems running specific games at high settings on an 8GB GPU.

A resolution to the 8GB VRAM debate may be on the way, provided the preliminary tests by X user @opinali (as reported by Wccftech) hold up. The tests use NVIDIA’s latest Neural Texture Compression (NTC) together with Microsoft’s DirectX Raytracing (DXR) 1.2 Cooperative Vector.

In a post on X dated July 15, 2025 (https://t.co/szgX1jVtcY), @opinali explained that using NVIDIA’s Neural Texture Compression with DXR 1.2 Cooperative Vector first required installing a preview driver (version 590.26). After installation, the screen was corrupted at first and only started working after several hard resets.

@opinali paired a preview version (590.26) of NVIDIA’s driver with a beta version of the RTX NTC SDK from GitHub, then ran some rendering tests to evaluate the newly integrated NTC and DXR Cooperative Vectors functionality.

These tests are early and rudimentary, but on an NVIDIA RTX 5080 GPU, they’re certainly promising.

Besides improving rendering performance by almost 80%, the combination of NVIDIA NTC and DXR 1.2 Cooperative Vectors significantly reduces VRAM use, potentially by as much as 90%. Yes, that is a reduction of up to 90%.

How well does it run? With v-sync turned off on an RTX 5080, starting at the demo position, @opinali reported the following frame rate and memory figures for each setting:

* Default: 2,350 frames per second (fps) / 9.20MB

* Without FP8: 2,160 fps / 9.20MB

* Without Int8: 2,350 fps / 9.20MB

* DP4A: 1,030 fps / 9.14MB

* Transcoded: 2,600 fps / 79.38MB

These figures were posted on July 15, 2025.
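To make the trade-offs in the list above easier to read, the short Python sketch below computes percentage differences between modes. It is only arithmetic on the reported numbers; the names `results`, `percent_change`, and `compare` are made up for this illustration, and treating the second figure as the demo's memory footprint in MB is an assumption based on how the list is presented.

```python
# Figures as reported in the list above: mode -> (frames per second, memory in MB).
results = {
    "Default": (2350, 9.20),
    "Without FP8": (2160, 9.20),
    "Without Int8": (2350, 9.20),
    "DP4A": (1030, 9.14),
    "Transcoded": (2600, 79.38),
}


def percent_change(new: float, old: float) -> float:
    """Signed percentage change going from old to new."""
    return (new - old) / old * 100.0


def compare(mode: str, baseline: str) -> tuple[float, float]:
    """Return (fps change %, memory change %) of mode relative to baseline."""
    fps, mem = results[mode]
    base_fps, base_mem = results[baseline]
    return percent_change(fps, base_fps), percent_change(mem, base_mem)


for baseline in ("Transcoded", "DP4A"):
    fps_delta, mem_delta = compare("Default", baseline)
    print(f"Default vs {baseline}: fps {fps_delta:+.1f}%, memory {mem_delta:+.1f}%")
```

On these numbers, the default mode uses roughly 88% less memory than the transcoded mode at a frame rate about 10% lower, and runs at about 2.3 times the frame rate of DP4A with nearly the same memory footprint.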

It’s true that a GPU’s VRAM is not solely responsible for handling textures, but it’s worth noting that textures tend to consume a significant amount of its memory.

According to Opinali, textures often consume 50% to 70% of the VRAM used by games. That matters because it also affects bandwidth, GPU copy costs, and cache efficiency. I suspect that NTC will also improve performance and frames per second (FPS) as a result.
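As a rough illustration of why the texture share matters, the sketch below estimates total VRAM use if only the texture portion shrank. Every input is an assumption: a hypothetical game that fills an 8GB card, the 50% to 70% texture share quoted above, and the best-case "up to 90%" reduction from the demo. It is a back-of-the-envelope estimate, not a measurement of any real game.

```python
# Back-of-the-envelope estimate only; every input is an assumption taken from
# figures quoted in this article, not a measurement of any real game.
VRAM_BUDGET_GB = 8.0                 # hypothetical game that fills an 8GB card
TEXTURE_SHARE_RANGE = (0.50, 0.70)   # texture share of VRAM quoted above
NTC_REDUCTION = 0.90                 # best-case "up to 90%" figure from the demo


def vram_after_ntc(total_gb: float, texture_share: float, reduction: float) -> float:
    """Total VRAM use if only the texture portion shrinks by `reduction`."""
    textures = total_gb * texture_share
    other = total_gb - textures
    return other + textures * (1.0 - reduction)


for share in TEXTURE_SHARE_RANGE:
    remaining = vram_after_ntc(VRAM_BUDGET_GB, share, NTC_REDUCTION)
    print(f"texture share {share:.0%}: {VRAM_BUDGET_GB:.1f} GB -> {remaining:.2f} GB")
```

Under these assumptions the hypothetical 8GB workload would drop to somewhere between about 3GB and 4.4GB. Real games may see smaller savings, since the demo figures are early and not every texture may compress equally well.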

Opinali’s initial trials show a notable boost in overall rendering speed: from approximately 1,030 frames per second (FPS) with DP4A (standard) to 2,350 FPS with the default NTC and Cooperative Vectors setting, roughly 2.3 times as fast.

This technology looks genuinely impressive. If these performance improvements reach consumers, the debate over whether 8GB of VRAM is enough might become irrelevant.

What is Neural Rendering and what are Cooperative Vectors?

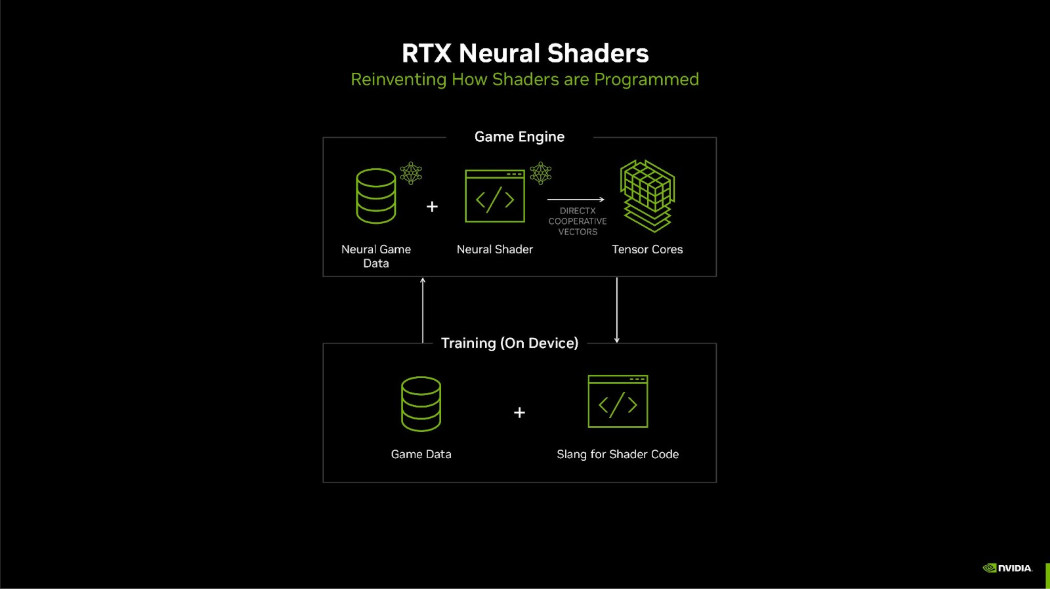

To step back for a moment: in January 2025, Microsoft announced that it was working with NVIDIA to bring neural rendering techniques to DirectX through Cooperative Vectors.

NVIDIA’s Neural Shaders are small neural networks embedded in programmable shaders. According to NVIDIA, the technology can cut VRAM requirements by more than seven times compared to conventional texture compression methods.

Neural Materials, according to NVIDIA, are designed to handle complex shaders and can process them up to five times faster. In addition, the Neural Radiance Cache is reported to make path-traced indirect lighting more efficient.

Cooperative Vectors in Microsoft’s DirectX 12 allow these neural networks to run in real time on AI-specific hardware, such as the Tensor Cores in NVIDIA graphics processors (GPUs).
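To give a feel for what "a small neural network in a shader" means, here is a minimal Python sketch of decoding one texel from a compact feature vector with a tiny two-layer network. This is not NVIDIA's NTC network or the DirectX Cooperative Vectors API; the layer sizes, the random untrained weights, and the names `matvec` and `decode_texel` are all hypothetical. The point is only that the work reduces to small matrix-vector multiplications, which is the kind of operation Cooperative Vectors are meant to accelerate.

```python
import math
import random


def matvec(matrix, vector):
    """Matrix-vector product, the kind of operation AI hardware accelerates."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(values):
    """Clamp negative activations to zero."""
    return [max(0.0, v) for v in values]


def sigmoid(values):
    """Squash each value into the open interval (0, 1)."""
    return [1.0 / (1.0 + math.exp(-v)) for v in values]


def decode_texel(latent, w1, w2):
    """Decode one texel: latent features -> hidden layer -> RGB in (0, 1)."""
    hidden = relu(matvec(w1, latent))
    return sigmoid(matvec(w2, hidden))


# Hypothetical sizes and random (untrained) weights, for illustration only.
rng = random.Random(0)
LATENT_DIM, HIDDEN_DIM = 4, 8
w1 = [[rng.uniform(-1, 1) for _ in range(LATENT_DIM)] for _ in range(HIDDEN_DIM)]
w2 = [[rng.uniform(-1, 1) for _ in range(HIDDEN_DIM)] for _ in range(3)]

# Compact per-texel features that would be stored instead of raw RGB values.
latent = [0.2, -0.5, 0.9, 0.1]
print(decode_texel(latent, w1, w2))
```

In a real renderer this decode would run for every sampled texel, which is why running it efficiently on dedicated hardware matters so much for frame rate.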

We are getting our first glimpse into what the collaboration between NVIDIA and Microsoft might bring, and it seems quite promising indeed.

What about AMD and Intel GPUs?

Opinali later returned to X with details on how AMD’s RDNA 4 performs with Neural Texture Compression, tested on an AMD Radeon RX 9070 XT graphics card.

Since the DXR 1.2 driver for AMD graphics cards hasn’t been released yet, Opinali could only run the rendering test in DP4A mode. Even so, the early results look positive.

In the Vulkan tests, the 9070 XT outperformed NVIDIA’s RTX 5080 by approximately 10%. Under DirectX 12 with DP4A rendering, the lead grew to roughly 110%, or about double the performance. It’s worth noting that NVIDIA’s current preview driver may not be well optimized, which could explain the large gap under DirectX 12.

In these initial trials, both AMD’s RDNA 4 GPUs and NVIDIA’s Blackwell hardware are off to a promising start.


2025-07-16 22:09