It’s understandable that when people think about AI, they picture ChatGPT or other popular online chatbots such as Copilot, Google Gemini, and Perplexity, which have been making waves in the news. But AI covers a much broader range of technologies than chatbots alone.

There are plenty of situations where AI is useful without relying on cloud-based tools: the on-device features of Copilot+ PCs, video editing, audio transcription, running local language models, or enhancing Microsoft Teams meetings.

Here are five compelling reasons to consider using local AI instead of relying on online alternatives.


But first, a caveat

Before we begin, one important factor deserves attention: the hardware. Unless your hardware meets the requirements, you won’t be able to run some of the newest local large language models (LLMs), such as the gpt-oss models discussed below.

Copilot+ PCs offer a variety of built-in capabilities you can use, thanks in part to the Neural Processing Unit (NPU). Not every AI tool needs an NPU, but the computational power has to come from somewhere. Before diving into any local AI tool, make sure you understand its requirements.
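As a rough way to sanity-check requirements before downloading a model, the sketch below estimates how much memory a model’s weights need from its parameter count and the number of bits stored per weight. The function names and the 20% overhead allowance are assumptions made for illustration, not published requirements, so treat the result as a ballpark and check each tool’s own documentation.

```python
def estimate_model_memory_gb(params_billion: float,
                             bits_per_weight: float,
                             overhead_fraction: float = 0.2) -> float:
    """Ballpark memory (in GB) needed to load a model.

    params_billion:    parameter count in billions (e.g. 20 for a 20B model)
    bits_per_weight:   storage per weight after quantization (e.g. 4, 8, 16)
    overhead_fraction: assumed extra room for context and runtime buffers
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead_fraction) / 1e9


def fits_in_memory(params_billion: float,
                   bits_per_weight: float,
                   available_gb: float) -> bool:
    """True if the ballpark estimate fits in the given VRAM or RAM budget."""
    return estimate_model_memory_gb(params_billion, bits_per_weight) <= available_gb


# Hypothetical example: compare two model sizes against a 16GB graphics card.
for size in (20, 120):
    needed = estimate_model_memory_gb(size, bits_per_weight=4)
    verdict = "fits" if fits_in_memory(size, 4, available_gb=16) else "does not fit"
    print(f"{size}B model at 4-bit: about {needed:.0f} GB, {verdict} in 16 GB")
```

Under these assumptions, a 20-billion-parameter model at 4 bits per weight lands within reach of a 16GB card, while a 120-billion-parameter model does not. Real-world figures vary with the model format and how much context you use.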

1. You don’t have to be online

The obvious one first: local AI runs directly on your PC. The Copilot app may be integrated with Windows 11, but like ChatGPT it needs a continuous internet connection to work.

A local model such as OpenAI’s gpt-oss:20b, by contrast, can be used right from your computer without any internet connection. It may not be as fast as the latest GPT-5, and it is not the newest generation of model, but you can use it anytime and anywhere.

The same holds for other software, such as image generators. Online tools require a connection to create images, whereas Stable Diffusion can run offline on your own computer. The AI-powered tools in the DaVinci Resolve video editor also work offline, using your local system’s resources.

Running AI locally gives you complete portability and more control over what you use. You’re no longer dependent on server capacity, usage limits, or terms of service. You’re also insulated from changes to a company’s models, including losing access to older ones you might prefer. The shift to GPT-5 sparked exactly this discussion for many users.
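To make “local” concrete, here is a minimal sketch of sending a prompt to a model served by Ollama on the same machine. It assumes Ollama is installed and running on its default port (11434) and that a model tagged `gpt-oss:20b` has already been downloaded; the helper names `build_request` and `ask_local_model` are made up for this example. The request never leaves `localhost`, so it works with the network unplugged.

```python
import json
import urllib.request

# Ollama's default local endpoint; adjust if your setup differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "gpt-oss:20b") -> urllib.request.Request:
    """Build a POST request for Ollama's generate endpoint."""
    payload = {
        "model": model,    # assumes this model tag is already pulled
        "prompt": prompt,
        "stream": False,   # ask for one complete JSON reply instead of chunks
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "gpt-oss:20b",
                    timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


# Usage (requires the Ollama server to be running locally):
# print(ask_local_model("Explain what an NPU is in one sentence."))
```

The generous timeout is deliberate: on modest hardware a local model can take a while to load and answer.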

2. Better privacy controls offline

This point expands on the first and deserves its own emphasis. When you use an online tool, you share your data with a powerful computer in the cloud. A recent example: ChatGPT conversations were reportedly indexed and shown in Google Search results under specific circumstances.

With an online AI tool, you give up a degree of control over your data that you keep with a local AI on your device. Local AI keeps your data on your machine, which matters most if you handle confidential or sensitive information.

Although ChatGPT offers an incognito-like feature, your data still leaves your device. Local AI, on the other hand, can operate entirely offline, keeping all information on your system. That makes it simpler to comply with data sovereignty laws and regional data protection rules, since less data is shared or stored externally.

Remember this: if you upload an LLM from your device to a platform like Ollama, any changes you’ve made will be shared. Similarly, enabling web search for local models such as gpt-oss:20b or gpt-oss:120b means sacrificing some privacy.

3. Cost and environmental impact

Running large language models (LLMs) requires a substantial amount of energy, whether at home or on a platform like ChatGPT. But it’s easier to manage both your costs and your environmental footprint when you run them at home.

Even the free version of ChatGPT isn’t really free. Behind the scenes, a large data center handles your interactions, consuming vast amounts of electricity, which carries an environmental toll. As AI’s energy use grows and its impact becomes more apparent, finding solutions will remain a pressing concern.

Running an LLM locally, by contrast, puts you in control. In a perfect scenario, my dream home would have solar panels charging a large battery that powers multiple computers and gaming hardware. I don’t own such a home today, but I could, and it illustrates the kind of control and self-sufficiency that local AI allows.

The financial cost is easier to see. Free versions of online AI tools are useful, but they don’t offer the most advanced features, which is why OpenAI, Microsoft, and Google all sell premium tiers. ChatGPT Pro, for instance, costs $200 per month, or $2,400 a year, for access to its top-tier services. If you use something like the OpenAI API, you’re charged based on usage.

Instead, consider running an LLM on your existing gaming PC, as I do. I’m fortunate to own a system with an RTX 5080 and 16GB of VRAM. When I’m not playing games, I can use the same graphics card for AI tasks with a free, open-source LLM. If you have the hardware, why not make the most of it instead of spending extra money?
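To put rough numbers on that comparison, the sketch below sets the yearly cost of a subscription against the electricity cost of running a model on hardware you already own. Only the $200 monthly price comes from the text above; the power draw, daily usage, and electricity price are hypothetical placeholders to replace with your own figures, and the calculation ignores the purchase price of the hardware.

```python
def annual_subscription_cost(monthly_price: float) -> float:
    """Yearly cost of a flat monthly subscription."""
    return monthly_price * 12


def annual_electricity_cost(watts: float,
                            hours_per_day: float,
                            price_per_kwh: float) -> float:
    """Yearly electricity cost of a PC drawing `watts` while running AI tasks."""
    kwh_per_year = watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


# $200 per month is the ChatGPT Pro price quoted in the article.
subscription = annual_subscription_cost(200)

# Hypothetical local usage: 400 W under load, 2 hours a day, $0.15 per kWh.
local = annual_electricity_cost(watts=400, hours_per_day=2, price_per_kwh=0.15)

print(f"Subscription: ${subscription:,.2f} per year")
print(f"Local electricity: ${local:,.2f} per year")
```

The gap narrows if you need to buy a new graphics card to run the model, which is why the argument is strongest when you already own capable hardware.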

4. Integrating LLMs with your workflow

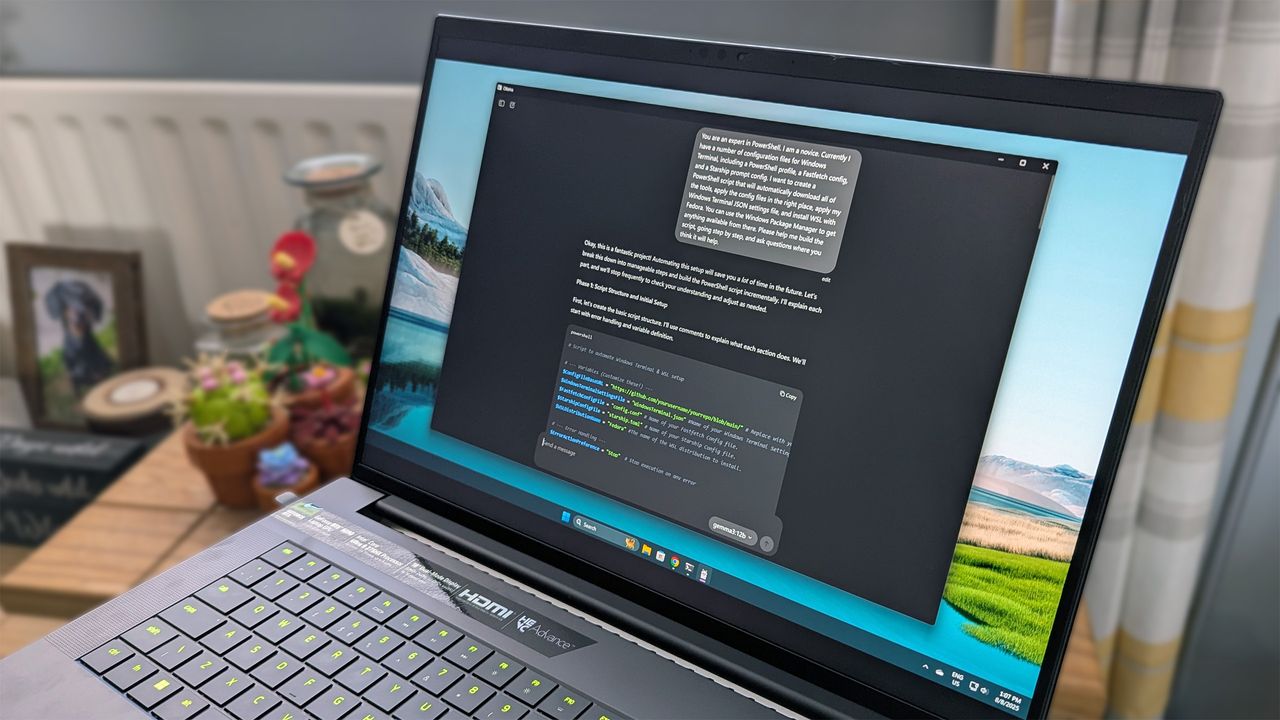

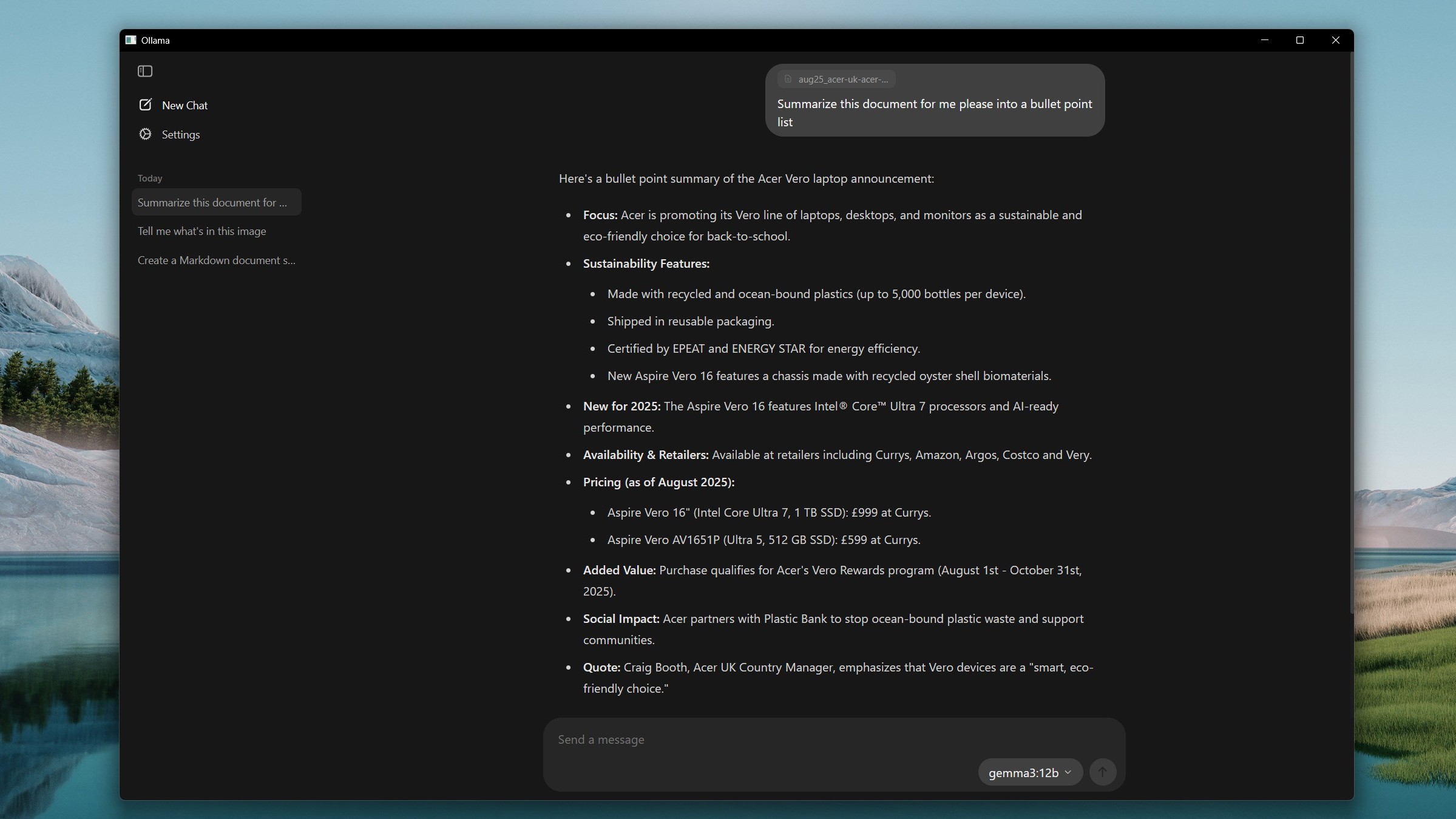

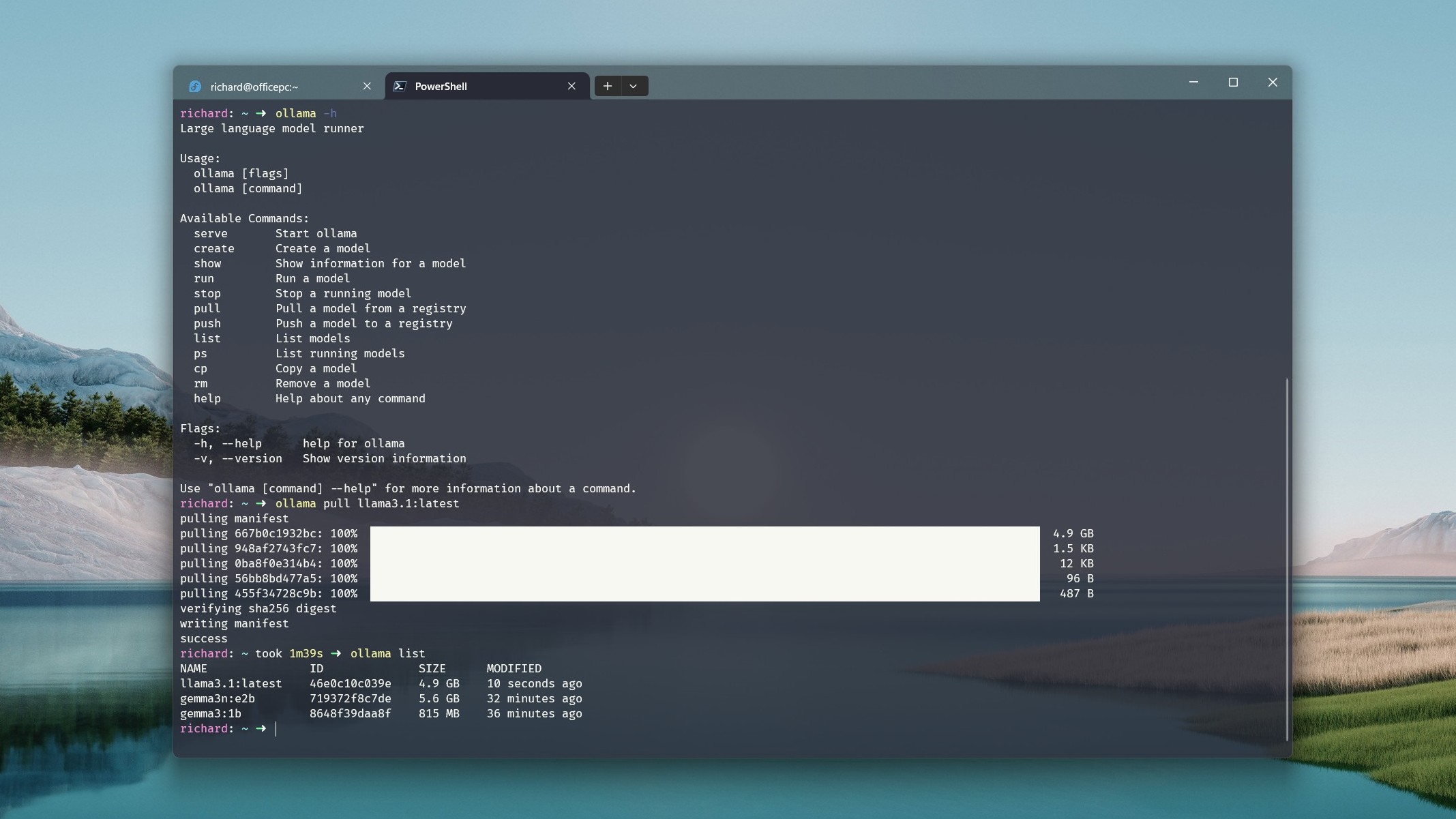

I’ve only just started exploring this, as I’m still very new to coding. But by installing Ollama on my PC along with an open-source large language model (LLM), I can set up an AI pair programmer that works with VS Code on Windows 11, all running locally.

There’s some overlap with other points on this list. GitHub Copilot has a free tier, but it’s limited compared with the paid version. GitHub Copilot, regular Copilot, ChatGPT, and Gemini also all need an internet connection. A local LLM sidesteps these limitations and, because it doesn’t depend on a connection, can potentially fit into your workflow more effectively.

The same goes for AI tools that aren’t chatbots, and this is where Copilot+ PCs come in. I’ve been skeptical about whether their current capabilities justify the excitement, but the goal is to use your own computer to build AI seamlessly into everyday work tasks.

Local AI gives you more freedom to choose the tools that suit you best. Different LLMs excel in different areas, such as coding versus other tasks. With an online chatbot, you use the preset models the provider offers rather than one tailored to a specific purpose. With a local LLM, you can also customize the model to your own needs.

Ultimately, with local tools you can build your own workflow, tailored to your specific needs.

5. Education

I don’t mean classroom learning here. I mean teaching yourself about AI, which you can do at home on your own hardware, gaining a deeper understanding of AI and how it applies to your life.

There’s something extraordinary about ChatGPT: it can accomplish so much from a few words typed into a box in an app or browser. It’s incredible, there’s no denying that. But there’s a lot to be said for understanding how the technology works: its hardware and resource requirements, building your own AI server, or fine-tuning an open-source LLM.

AI is not going anywhere, so it’s worth creating your own space to explore it. Whether you’re an enthusiast or a professional, running these tools locally gives you the freedom to experiment. You can use your own data without relying on one model, being tied to one company’s cloud, or being bound by a subscription.

There are drawbacks, too. Smaller models such as Gemma, Llama, or Mistral can perform excellently on local hardware, but larger open-source models like OpenAI’s new gpt-oss:120b may not run well even on today’s top gaming PCs. Unless you have exceptionally powerful hardware, these larger models will struggle to deliver satisfactory performance.

The same applies to some extent to gpt-oss:20b, which benefits from its reasoning abilities but may run more slowly as a result.

You also won’t have access to the most advanced models, such as GPT-5, at home for now. There are exceptions, such as Llama 4, which you can download, but running it requires substantial hardware until scaled-down versions become available. Older models also come with earlier knowledge cutoff dates.

Even so, there are plenty of persuasive reasons to try local AI rather than relying solely on web-based options. If your hardware is capable, why not give it a try and see the results for yourself?


2025-08-09 23:41