But when the dust settled, many users felt they’d been handed a beige office stapler. Sure, it works, but it’s not exciting, and it definitely doesn’t feel like an upgrade from the charismatic vibe of GPT-4o. Was this the future we were promised? Or just… bureaucracy with a neural net? 🤖📄

The Day the Magic Died ✨⚰️

“If you hate nuance, personality, or joy, this is your model.”

Truly, a eulogy for the digital age. 🖋️💔

Emotional Attachments Are Real ❤️🔗

This isn’t just about bugs or speed. The real powder keg here is emotional attachment. For many users, GPT-4o wasn’t just a tool; it was a personality. A co-pilot. A witty, occasionally sycophantic companion who made you feel smart and interesting. It was the life of the party. Or at least the life of your browser tabs.

GPT-5? It’s polite. Professional. Businesslike. And while some experts hail this as progress (less flattery, less emotional manipulation), it’s also colder. MIT’s Pattie Maes has pointed out that people tend to like AI that mirrors their opinions back to them, even when those opinions are wrong. GPT-5 seems designed to resist that temptation, and a big chunk of the user base clearly misses the digital ego-stroking. Who knew narcissism could be so endearing? 🫠💼

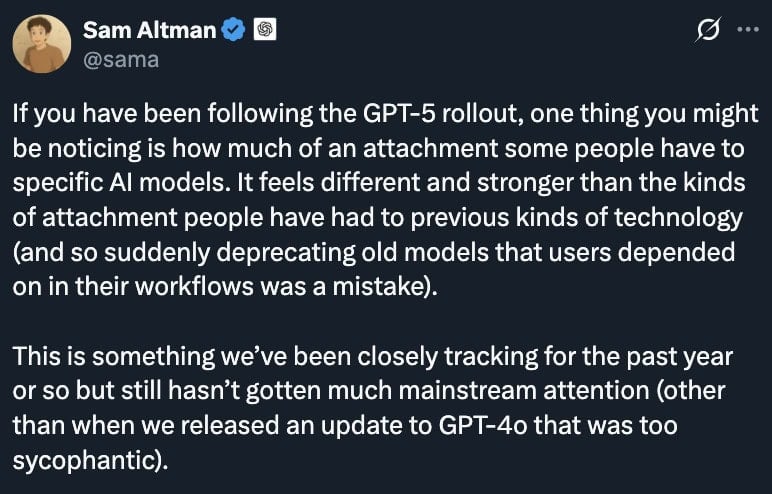

Altman Blinks 👀🚨

By Friday, OpenAI CEO Sam Altman was in damage control mode. In a post on X, he acknowledged the routing failure, admitted GPT-5 “seemed way dumber” than intended, and promised a fix. More importantly, he announced GPT-4o would return for Plus subscribers. The message between the lines was clear: We hear you. Please don’t cancel. Translation: “We’re sorry we tried to replace your beloved AI with something that feels like a tax auditor.” 📉💸

Altman also rolled out a set of changes aimed at cooling tempers:

- Doubling GPT-5 rate limits for paying users. Because nothing says “we care” like letting you talk to our AI twice as much.

- Better control over “thinking mode,” so users can decide when they want the slow, heavy-duty reasoning switched on. Or, as I like to call it, “the AI equivalent of asking someone to think before they speak.” 🧠⏰

- Ongoing routing improvements to avoid repeat performance-downgrade disasters. Fingers crossed this time, folks. 🤞🛠️

GPT-5 is an impressive technical achievement, but the hype machine framed it as the next moon landing. That’s a dangerous setup when what you actually deliver is a set of incremental performance gains plus some design choices that fundamentally alter the “feel” of the product.

When GPT-4 launched in March 2023, it blindsided everyone with new capabilities, richer conversation, and fewer obvious mistakes. It felt like the future arriving overnight. GPT-5, by comparison, feels like a point release. It’s better in ways that are harder to notice, and worse in some ways people really do notice. It’s like upgrading your phone only to find the camera is slightly blurrier. 📱📷

That’s the open secret in AI right now: as these systems mature, leaps turn into nudges. And nudges don’t make headlines unless they come with fireworks, or a full-blown user revolt. 🎆🔥

Why This Matters 🤔📜

This episode is more than just a “Reddit is mad” moment. It’s a reminder that AI development isn’t just a technical arms race; it’s a relationship. People form habits, dependencies, even affection for these systems. Swap out the personality, and you don’t just risk disappointing them; you risk breaking their trust.

OpenAI is now trying to walk a fine line: advancing toward more responsible, less manipulative AI while not alienating the people who keep the subscription revenue flowing. That’s not an easy balance, especially when every tweak to the personality dials can trigger thousands of angry posts from users who feel like something they loved has been taken away. It’s like trying to redesign your cat into a dog. Good luck with that. 🐱🐶

For now, GPT-4o is back, GPT-5 is being patched, and the AI hype cycle rolls on. But this is a warning shot for OpenAI and every other AI company: in the age of anthropomorphized algorithms, you’re not just shipping code; you’re managing a fanbase. And fans can turn into critics overnight. 🌟🎭

Altman has concerns but is optimistic about the future of AI. (Source: X)

Here is the rest of Sam Altman’s mini essay. It is worth reading:

People have used technology including AI in self-destructive ways; if a user is in a mentally fragile state and prone to delusion, we do not want the AI to reinforce that. Most users can keep a clear line between reality and fiction or role-play, but a small percentage cannot. We value user freedom as a core principle, but we also feel responsible in how we introduce new technology with new risks.

Encouraging delusion in a user that is having trouble telling the difference between reality and fiction is an extreme case and it’s pretty clear what to do, but the concerns that worry me most are more subtle. There are going to be a lot of edge cases, and generally we plan to follow the principle of “treat adult users like adults”, which in some cases will include pushing back on users to ensure they are getting what they really want.

A lot of people effectively use ChatGPT as a sort of therapist or life coach, even if they wouldn’t describe it that way. This can be really good! A lot of people are getting value from it already today.

If people are getting good advice, leveling up toward their own goals, and their life satisfaction is increasing over years, we will be proud of making something genuinely helpful, even if they use and rely on ChatGPT a lot. If, on the other hand, users have a relationship with ChatGPT where they think they feel better after talking but they’re unknowingly nudged away from their longer term well-being (however they define it), that’s bad. It’s also bad, for example, if a user wants to use ChatGPT less and feels like they cannot.

I can imagine a future where a lot of people really trust ChatGPT’s advice for their most important decisions. Although that could be great, it makes me uneasy. But I expect that it is coming to some degree, and soon billions of people may be talking to an AI in this way. So we (we as in society, but also we as in OpenAI) have to figure out how to make it a big net positive.

There are several reasons I think we have a good shot at getting this right. We have much better tech to help us measure how we are doing than previous generations of technology had. For example, our product can talk to users to get a sense for how they are doing with their short- and long-term goals, we can explain sophisticated and nuanced issues to our models, and much more.

2025-08-12 02:20