120b language model.

An open-source model like this one is usually run locally with tools such as Ollama or LM Studio. Because DuckDuckGo hosts it, though, anyone can use it privately and at no cost.

Why does this matter? To run gpt-oss:120b, with its 120 billion parameters, properly in Ollama, you would need more VRAM than even two RTX 5090 graphics cards combined can provide.

At 65GB, the model is especially tough to run well on typical consumer hardware unless you have a powerhouse graphics card or a high-end PC with AMD Strix Halo’s unified memory.
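To put rough numbers on that, here is a minimal back-of-the-envelope sketch. It assumes the 65GB figure quoted above and 32GB of VRAM per RTX 5090, and it only counts the model weights; real-world usage also needs room for context and runtime overhead, so treat the result as a lower bound. The function name `vram_shortfall_gb` is made up for this illustration.

```python
# Rough check: do the model weights fit in the combined VRAM of two RTX 5090s?
# Assumptions: 65GB model size (from the article), 32GB VRAM per card.
# Context/KV-cache overhead is ignored, so the real gap is larger.

MODEL_SIZE_GB = 65
RTX_5090_VRAM_GB = 32
NUM_CARDS = 2


def vram_shortfall_gb(model_gb, vram_per_card_gb, cards):
    """Return how many GB short the combined VRAM is (0 if the weights fit)."""
    total_vram_gb = vram_per_card_gb * cards
    return max(0, model_gb - total_vram_gb)


shortfall = vram_shortfall_gb(MODEL_SIZE_GB, RTX_5090_VRAM_GB, NUM_CARDS)
print(f"Combined VRAM: {RTX_5090_VRAM_GB * NUM_CARDS}GB, shortfall: {shortfall}GB")
```

Even before any overhead, the weights alone come out slightly larger than what the two cards offer together, which is why a hosted option is appealing.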

Duck.ai offers this model free of charge, but it runs on DuckDuckGo’s servers rather than on your own computer. Since DuckDuckGo is a company known for its dedication to privacy, you can expect it to be fairly trustworthy as online services of this kind go.

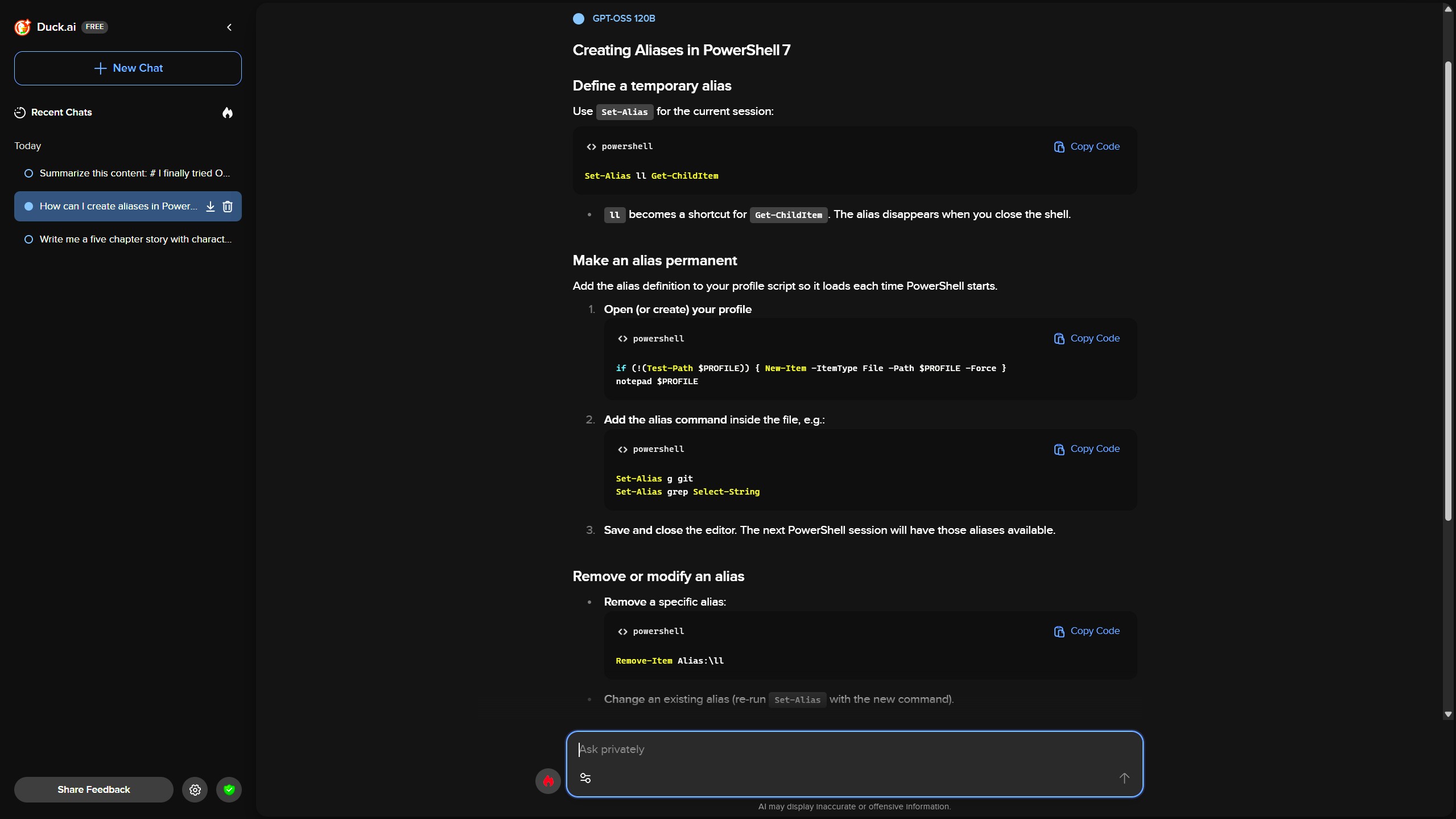

DuckDuckGo states clearly that all conversations are anonymized, and no registration is required. Unlike many other free services, you don’t need to create an account or provide an email address; just open the website and start asking questions.

How does it perform? It’s incredibly fast. Keep in mind, though, that it runs on enormous server hardware elsewhere, not in your home. Duck.ai doesn’t display tokens-per-second stats, but in the brief time I’ve experimented with it, its response speed has felt comparable to that of the 20b model on my RTX 5090. There is one aspect I’m still undecided about, though.

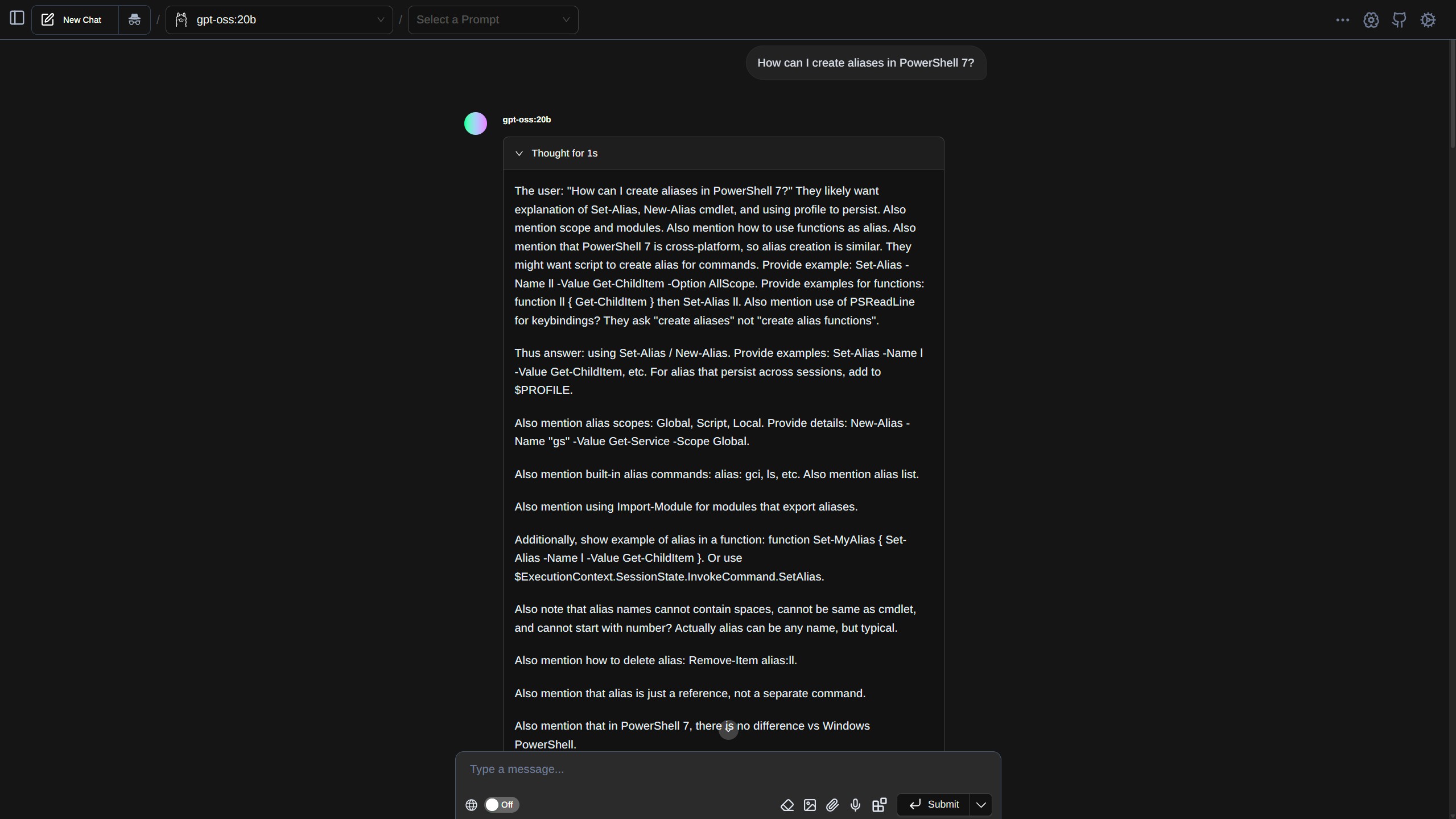

Within Duck.ai, gpt-oss:120b doesn’t show its thought process; it simply spits out the answer. Over time, I’ve grown to value being able to see that reasoning, as it deepens my understanding of how the model works.

Watching how the model arrives at its output often sheds light on where errors might have crept in. I think understanding how these models work is important, and it can be genuinely useful. It would be great if Duck.ai gave users a setting to show or hide this information.

The model doesn’t support uploading your own files, such as documents or code samples. Some of the alternatives offer image uploads, but none, as far as I can see, accept files. This might be due to privacy concerns, but it does restrict the ways you can use the tool.

It’s generally really good, though, and since it’s built inside a web app that feels a lot like ChatGPT or Google Gemini, it’s welcoming and easy to use. It saves your recent chats in the sidebar, and the settings on hand to tweak how you want your responses are pretty thorough. These all apply, of course, to any of the models you use on Duck.ai, not just gpt-oss:120b.

I think I may have stumbled upon something special for my AI toolkit in gpt-oss:120b. Before I can say definitively that it’s a gem, though, I need to spend some quality time with it. For now, I’m just thrilled that I can tinker with this powerful model without needing a massive GPU farm or an NVIDIA Blackwell Pro!

2025-09-11 13:10