Author: Denis Avetisyan

Advancements in first-principles nuclear theory are refining calculations of beta decay, opening new avenues for searching for physics beyond our current understanding of the universe.

This review details recent progress in ab initio methods for calculating nuclear structure corrections to beta decay observables, enhancing sensitivity to potential new interactions.

Precision tests of the Standard Model increasingly rely on sensitive measurements of nuclear beta decay, yet accurate interpretation is hampered by theoretical uncertainties in calculating radiative and higher-order corrections. This review, ‘The Role of Ab Initio Beta-Decay Calculations in Light Nuclei for Probes of Physics Beyond the Standard Model’, details recent advances in systematically improvable ab initio nuclear theory, demonstrating unprecedented precision in calculating these corrections for light nuclei. These improved calculations enhance the sensitivity of beta decay experiments to potential new physics beyond the Standard Model, particularly in searches for exotic currents and precise determinations of electroweak parameters. How can these methods be extended to heavier nuclei and more complex decay modes to further constrain models of fundamental symmetries and interactions?

The Razor’s Edge of Precision: Testing the Standard Model

The Standard Model of particle physics, while remarkably successful, remains a theory demanding rigorous scrutiny. High-precision measurements of fundamental parameters, such as those extracted from the study of Beta Decay, serve as essential tests of its internal consistency and predictive power. Beta decay, a radioactive process involving the emission of electrons or positrons, allows physicists to precisely determine parameters like the decay constant and the energy spectrum of emitted particles. These measurements aren’t simply about confirming known values; they represent a search for subtle deviations from theoretical predictions. Any statistically significant discrepancy, however small, could indicate the presence of new particles or interactions not currently accounted for within the Standard Model, opening a window onto the realm of physics beyond its established boundaries. The pursuit of ever-greater precision in these fundamental measurements, therefore, represents a cornerstone of modern particle physics research, pushing the limits of our understanding and potentially revealing the first cracks in this seemingly impenetrable framework.

The Standard Model of particle physics, while remarkably successful, isn’t considered the final word on reality. Scientists actively search for deviations between experimental observations and the Model’s predictions, as these discrepancies offer tantalizing glimpses beyond current understanding. These “new physics” signals aren’t necessarily dramatic; they can manifest as subtle differences in particle properties or interactions. High-precision experiments are designed to meticulously measure these parameters, seeking out any breakdown in the Standard Model’s predictions. Such findings wouldn’t invalidate the existing framework entirely, but instead suggest the presence of additional particles, forces, or dimensions – components of a more complete theory awaiting discovery. Essentially, the Standard Model defines the boundaries of known physics, and any confirmed deviation represents a crucial step towards unraveling the mysteries that lie beyond.

The quest to understand the fundamental building blocks of matter relies heavily on the precise measurement of parameters within the Standard Model, and the Cabibbo-Kobayashi-Maskawa (CKM) matrix plays a pivotal role. Determining the V_{ud} element of this matrix with high accuracy is not merely an exercise in refinement; it’s a crucial test of the Standard Model’s internal consistency. This is because the CKM matrix must be unitary: along its first row, |V_{ud}|^2 + |V_{us}|^2 + |V_{ub}|^2 must equal one. Any deviation from unitarity, signaled by precise measurements of V_{ud} and the other matrix elements, would strongly suggest the existence of physics beyond the Standard Model, potentially revealing new particles or interactions currently unknown to science. These unitarity tests represent a powerful, indirect method for probing the limits of our current understanding and searching for the cracks that may lead to revolutionary discoveries.
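The first-row unitarity test described above comes down to a small piece of arithmetic. The sketch below is a minimal illustration, assuming uncorrelated uncertainties and simple linear error propagation. The function name and the numerical inputs are placeholders chosen for illustration and are not values taken from the review.

```python
import math


def first_row_unitarity(v_ud, v_us, v_ub, s_ud=0.0, s_us=0.0, s_ub=0.0):
    """Return (delta, sigma) for delta = |V_ud|^2 + |V_us|^2 + |V_ub|^2 - 1.

    sigma is the propagated one-sigma uncertainty on delta, assuming the
    three inputs are uncorrelated (an assumption made for this sketch).
    """
    delta = v_ud**2 + v_us**2 + v_ub**2 - 1.0
    # d(V^2) = 2 * V * dV for each element, added in quadrature
    sigma = math.sqrt(
        (2.0 * v_ud * s_ud) ** 2
        + (2.0 * v_us * s_us) ** 2
        + (2.0 * v_ub * s_ub) ** 2
    )
    return delta, sigma


if __name__ == "__main__":
    # Placeholder magnitudes and uncertainties, for illustration only
    delta, sigma = first_row_unitarity(0.9737, 0.2243, 0.0037,
                                       0.0003, 0.0008, 0.0002)
    print(f"unitarity deficit = {delta:.5f} +/- {sigma:.5f}")
    print(f"significance      = {abs(delta) / sigma:.1f} sigma")
```

Because V_{ud} is by far the largest element in the row, its uncertainty is weighted most heavily in the sum, which is why the theoretical corrections feeding into V_{ud} matter so much.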

The Nuclear Complexity: A Necessary Hurdle

Accurate determination of beta decay rates relies fundamentally on the precise calculation of Nuclear Matrix Elements (NMEs). These elements represent the quantum mechanical transition probabilities between the initial and final nuclear states during beta decay. Specifically, NMEs quantify the overlap between the initial and final wavefunctions, considering the interactions responsible for the decay process. The beta decay rate is directly proportional to the square of the NME and the phase space integral, making the accurate calculation of the NME a critical component of any beta decay analysis. The complexity arises from the many-body nature of the nucleus, requiring sophisticated theoretical approaches to model these transitions effectively and account for the contributions of all nucleons and their interactions.
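For reference, the relation between the measured decay rate and the nuclear matrix elements is commonly written in terms of the ft value (the phase space integral f times the partial half-life t). The form below is the standard textbook expression for allowed decays, together with the corrected quantity used for superallowed 0^+ \to 0^+ transitions. The notation (M_F, M_{GT}, \delta_R', \delta_{NS}, \delta_C, \Delta_R^V) is the conventional one and is an assumption here, since the article itself does not define these symbols.

```latex
% Allowed beta decay: Fermi (M_F) and Gamow-Teller (M_GT) matrix elements
ft = \frac{K}{G_V^2\,|M_F|^2 + G_A^2\,|M_{GT}|^2},
\qquad
\frac{K}{(\hbar c)^6} = \frac{2\pi^3 \hbar \ln 2}{(m_e c^2)^5}

% Superallowed 0^+ -> 0^+ decays between T = 1 states (|M_F|^2 = 2),
% with G_V = G_F V_{ud}:
\mathcal{F}t \equiv ft\,(1 + \delta_R')\,(1 + \delta_{NS} - \delta_C)
  = \frac{K}{2\,G_F^2\,|V_{ud}|^2\,(1 + \Delta_R^V)}
```

Since |V_{ud}|^2 is inversely proportional to \mathcal{F}t, a fractional uncertainty on the nuclear-structure-dependent corrections carries over to V_{ud} at half that size.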

Theoretical uncertainties in Nuclear Matrix Elements originate from the complex many-body problem inherent in describing atomic nuclei. Nuclei consist of nucleons – protons and neutrons – interacting via the strong nuclear force. Accurately calculating the collective behavior of these nucleons requires accounting for correlations arising from these interactions, which are not simply additive. These many-body effects include contributions from two-nucleon, three-nucleon, and higher-order forces, alongside the complex interplay between individual nucleon motion and overall nuclear structure. Consequently, approximations are necessary in modeling these interactions and many-body effects, introducing theoretical uncertainties into the calculated matrix elements and, ultimately, into predicted Beta decay rates.

Ab initio calculations in nuclear physics represent a computational approach to solving the many-body Schrödinger equation for atomic nuclei, starting solely from the interactions between nucleons (protons and neutrons), with no phenomenological parameters tuned to the nucleus being studied. These methods, which include techniques like Coupled Cluster, No-Core Shell Model, and Self-Consistent Green’s Function, aim to predict nuclear properties directly from the bare nucleon-nucleon and three-nucleon forces. The computational demands of these calculations scale rapidly with the number of nucleons, limiting their applicability to lighter nuclei; however, ongoing developments in algorithms and computational hardware are extending the reach of ab initio methods to heavier systems, providing crucial tests of nuclear theory and input for astrophysical calculations.

First Principles: Constructing Reality from the Bottom Up

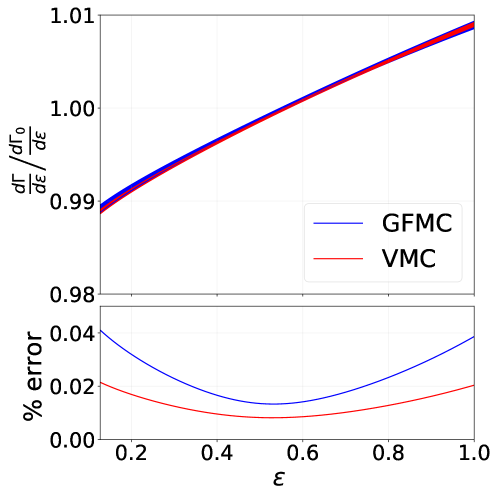

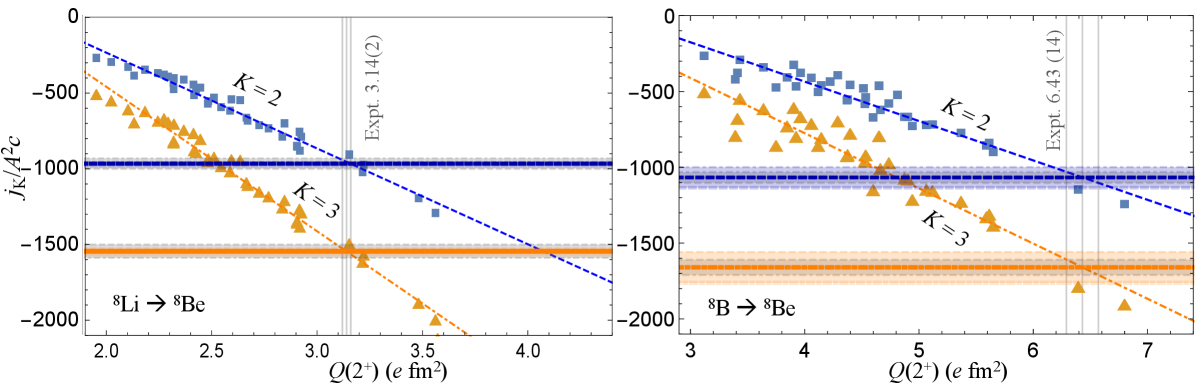

The No-Core Shell Model (NCSM) and Quantum Monte Carlo (QMC) represent two leading ab initio approaches to solving the many-body problem in nuclear physics. NCSM utilizes a systematically convergent expansion of the nuclear wave function in a finite-dimensional harmonic oscillator basis, allowing for calculations of bound and unbound states, but is computationally limited by the exponential growth of the basis dimension with nucleon number. QMC, conversely, employs stochastic methods, specifically the Green Function Monte Carlo (GFMC) and Diffusion Monte Carlo (DMC) algorithms, to directly evaluate many-body wave functions and energies. While QMC avoids the explicit construction of a basis, it faces challenges related to the fixed-node approximation and computational scaling with system size. Both NCSM and QMC require significant computational resources, but offer complementary strengths in addressing the complexities of nuclear structure and reactions.
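To get a feel for why the basis dimension becomes the bottleneck, the sketch below counts harmonic oscillator single-particle states and the number of ways to distribute protons and neutrons among them. This is only a crude upper bound under simplifying assumptions: a real NCSM calculation truncates on the total number of excitation quanta and fixes quantities such as parity and angular momentum projection, which this toy count ignores. The function names and the six-nucleon example are hypothetical.

```python
from math import comb


def ho_single_particle_states(n_shell_max):
    """Number of single-particle states in oscillator shells 0..n_shell_max.

    Shell N holds (N + 1)(N + 2) / 2 spatial orbitals, doubled for spin.
    """
    return sum((n + 1) * (n + 2) for n in range(n_shell_max + 1))


def slater_determinant_bound(protons, neutrons, n_shell_max):
    """Upper bound on the many-body basis size: choose occupied states
    independently for protons and neutrons (no symmetry restrictions)."""
    states = ho_single_particle_states(n_shell_max)
    return comb(states, protons) * comb(states, neutrons)


if __name__ == "__main__":
    # Hypothetical example: three protons and three neutrons
    for n_shell_max in range(1, 7):
        states = ho_single_particle_states(n_shell_max)
        bound = slater_determinant_bound(3, 3, n_shell_max)
        print(f"shells 0..{n_shell_max}: {states:4d} states, "
              f"up to {bound:.3e} determinants")
```

Even for six nucleons the count climbs by orders of magnitude with each added shell, and adding nucleons at fixed shell cutoff makes the binomial factors larger still.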

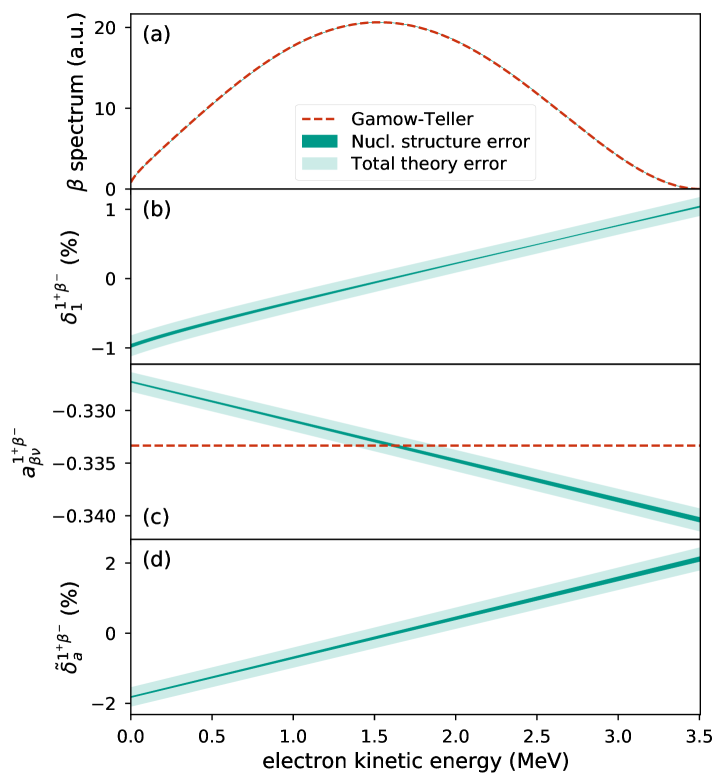

Achieving accurate results with ab initio nuclear many-body methods necessitates computationally intensive calculations that go beyond the initial many-body solution. Specifically, radiative corrections account for the effects of emitted and absorbed photons on nuclear processes, including beta decay, and are essential for matching theoretical predictions with experimental measurements. Similarly, recoil-order corrections address the kinetic energy of the recoiling daughter nucleus after particle emission, which can significantly influence decay rates and spectra. These corrections, often calculated perturbatively, demand substantial computational resources and careful consideration of higher-order terms to minimize systematic uncertainties and maintain the precision required for modern nuclear physics calculations.

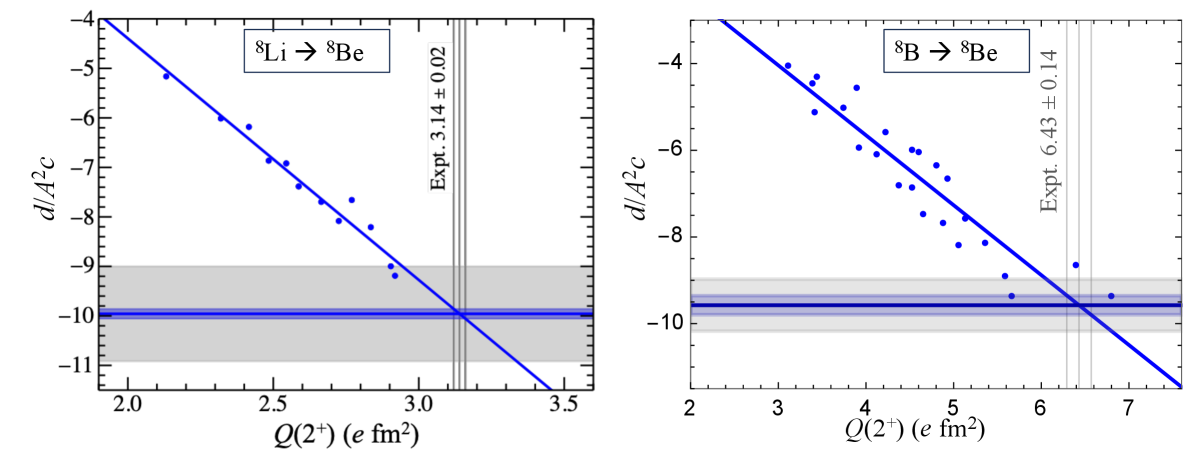

Recent progress in ab initio nuclear many-body theories has significantly reduced theoretical uncertainties in beta decay calculations. Specifically, uncertainties have been lowered to the order of 10^{-4}, a substantial improvement enabling more precise tests of the Standard Model. This reduction is achieved through advancements in methods like the No-Core Shell Model and Quantum Monte Carlo, coupled with accurate accounting for effects such as radiative and recoil-order corrections. The resulting precision allows for stringent validation of the Standard Model’s predictions regarding weak interactions and the determination of fundamental parameters with increased accuracy, approaching the sub-per-mil level for beta decay corrections.

Recent progress in ab initio nuclear theory has facilitated beta decay corrections with precision at the sub-per-mil level, representing a fractional uncertainty of less than 10^{-3}. This level of accuracy is achieved through rigorous calculations incorporating many-body effects and careful treatment of nuclear structure. These precise corrections are crucial for accurately predicting beta decay rates and spectra, which are then used in tests of the Standard Model and searches for physics beyond it. The ability to reliably calculate these corrections minimizes systematic uncertainties in experimental determinations of fundamental parameters and provides stringent constraints on theoretical models.

Pushing the Boundaries: The Search Beyond Established Laws

The cornerstone of the Standard Model’s description of quark mixing is the Cabibbo-Kobayashi-Maskawa (CKM) matrix, and its unitarity – the principle that the probabilities of all possible quark transitions must sum to one – provides a powerful consistency check. Precise measurements of the matrix element V_{ud}, which governs the probability of a down quark transforming into an up quark, are crucial for rigorously testing this unitarity. Any deviation from unitarity would signal the presence of physics beyond the Standard Model (BSM physics), potentially revealing new particles or interactions. Current efforts to refine V_{ud} through precise beta decay measurements are therefore not merely exercises in Standard Model validation; they represent a sensitive search for the subtle fingerprints of new physics, probing energy scales exceeding 10 TeV and offering a unique window into the fundamental structure of the universe.

The pursuit of increasingly precise determinations of the weak interaction parameter V_{ud} is inextricably linked to the refinement of theoretical calculations surrounding beta decay. Subtle discrepancies between experimental results and Standard Model predictions are often masked by lingering uncertainties in these calculations – ambiguities arising from complex nuclear effects and approximations. Recent advancements in quantum chromodynamics and nuclear theory are actively diminishing these theoretical limitations, allowing for a more sensitive search for physics beyond the Standard Model. By meticulously reducing these uncertainties, scientists enhance the ability to detect minuscule deviations indicative of new particles or interactions, potentially unveiling subtle effects at energy scales previously inaccessible and bolstering the power of unitarity tests as a probe for new phenomena.

The relentless pursuit of precision in measuring fundamental parameters, coupled with increasingly sophisticated theoretical calculations, has opened a new frontier in the search for physics beyond the Standard Model. Recent advancements in beta decay studies, for instance, aren’t simply refining existing knowledge; they are extending the reach of experimental inquiry to unprecedented energy scales. Currently, these high-precision measurements, bolstered by theoretical progress, enable scientists to probe potential new physics phenomena at energies up to 10 TeV – a regime previously inaccessible through direct experimentation. This capability allows for the stringent testing of the Standard Model and offers the potential to indirectly detect the presence of undiscovered particles or interactions that might manifest as subtle deviations from predicted behavior, effectively pushing the boundaries of known reality.
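The link between sub-per-mil precision and a reach of order 10 TeV can be motivated with a back-of-the-envelope effective-field-theory estimate. The sketch below assumes that a new interaction at scale Λ shifts low-energy observables by a fractional amount ε ≈ c (v/Λ)^2, where v ≈ 246 GeV is the electroweak scale and c is a dimensionless coupling taken to be of order one. This scaling is a common rule of thumb and an assumption here, not a formula quoted from the review, and the function name is hypothetical.

```python
import math

# Approximate electroweak scale (Higgs vacuum expectation value) in TeV
ELECTROWEAK_SCALE_TEV = 0.246


def bsm_scale_tev(epsilon, coupling=1.0):
    """Estimate the new-physics scale probed by a fractional precision epsilon.

    Inverts epsilon ~ coupling * (v / Lambda)^2, so
    Lambda ~ v * sqrt(coupling / epsilon). Order-of-magnitude only.
    """
    if epsilon <= 0 or coupling <= 0:
        raise ValueError("epsilon and coupling must be positive")
    return ELECTROWEAK_SCALE_TEV * math.sqrt(coupling / epsilon)


if __name__ == "__main__":
    for eps in (1e-2, 1e-3, 5e-4, 1e-4):
        print(f"precision {eps:.0e} -> scale ~ {bsm_scale_tev(eps):.1f} TeV")
```

Under these assumptions, improving the precision by a factor of ten extends the reach in Λ by only about a factor of three, which is one reason each incremental reduction in theoretical uncertainty is hard-won.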

The relentless pursuit of precision in subatomic measurements, coupled with increasingly sophisticated theoretical frameworks, is now enabling scientists to scrutinize the fundamental symmetries governing the universe with unprecedented detail. These advancements aren’t merely about confirming existing models; they are actively pushing the boundaries of known physics, offering a pathway to resolve long-standing mysteries about the nature of reality. By meticulously examining particle interactions, researchers are seeking to identify subtle deviations from established predictions – anomalies that could hint at the existence of previously unknown forces or particles. This rigorous testing extends beyond the Standard Model, potentially revealing symmetries that are broken or hidden, and ultimately providing a more complete and nuanced understanding of the universe’s building blocks and their interactions, thus reshaping our perception of fundamental laws.

The pursuit of increasingly precise calculations in nuclear physics, as detailed in this review of ab initio methods, mirrors a fundamental need for rigorous self-critique. The article highlights the importance of recoil-order corrections and nuclear structure effects in beta decay – refinements essential for extracting meaningful signals beyond the Standard Model. This resonates with Nietzsche’s assertion that “There are no facts, only interpretations.” Each calculation, each correction applied, is an interpretation of the underlying reality, and its validity rests not on initial plausibility, but on repeated attempts to disprove it. The value lies not in achieving absolute certainty, but in continually refining the model through persistent, critical examination, ensuring the results are robust against alternative explanations and systematic errors. If it can’t be replicated, it didn’t happen.

What’s Next?

The pursuit of increasingly precise ab initio calculations in light nuclei isn’t about achieving a final answer; it’s about meticulously mapping the territory of error. Each refinement of electroweak interaction models, each inclusion of higher-order corrections, doesn’t necessarily bring truth closer, but it defines the boundaries of what remains unexplained. The current advancements detailed in this review suggest a pathway towards extracting meaningful signals of physics beyond the Standard Model from beta decay – but only if one acknowledges the inherent limitations of the theoretical framework itself.

Future progress will likely hinge not on brute-force computational power, but on innovative approaches to systematically address the remaining uncertainties. Nuclear structure corrections, even when seemingly minor, can mask or mimic new physics. Therefore, a critical focus must be placed on developing robust methods to quantify and control these effects, perhaps through direct comparison with experimental data from diverse decay channels. An error isn’t a failure, it’s a message – one that directs further investigation.

Ultimately, the field’s trajectory depends on a willingness to abandon cherished assumptions when confronted with contradictory evidence. The sensitivity gains promised by precise calculations are only valuable if researchers remain vigilant against self-deception. The real test isn’t whether the models can predict, but whether they can be demonstrably disproven.

Original article: https://arxiv.org/pdf/2602.00341.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-03 12:12