Author: Denis Avetisyan

Recent experiments are challenging our understanding of how beauty and charm quarks decay, revealing intriguing discrepancies that could point to new physics.

This review details recent progress in heavy flavor physics, focusing on anomalies in lepton universality and the tension between inclusive and exclusive determinations of key CKM matrix elements.

Persistent discrepancies between Standard Model predictions and experimental observations in heavy flavor decays motivate a thorough re-evaluation of our understanding of fundamental interactions. This review, ‘Recent progress in decays of $b$ and $c$ hadrons’, summarizes recent theoretical advancements in the study of b and c hadron decays, focusing on anomalies in b \to s and b \to c transitions, as well as tensions in the determination of key parameters like V_{ub} and V_{cb}. These developments, spurred by measurements from experiments like LHCb and Belle II, highlight potential signs of physics beyond the Standard Model. Will future high-precision measurements and refined theoretical calculations resolve these intriguing puzzles and illuminate the path towards a more complete understanding of flavor physics?

The Subtle Language of Decay: Hints of New Physics

B meson decays, meticulously scrutinized by experiments like LHCb, show deviations from Standard Model predictions in several channels. Repeated, high-precision measurements reveal a persistent mismatch between observed decay rates and theoretical calculations, which makes a purely statistical explanation less comfortable, although it cannot yet be excluded. One interpretation is that previously unknown particles or interactions influence these decays. The discrepancies are not limited to a single decay pathway but appear across multiple channels, which strengthens the case for new physics. No single anomaly reaches the ‘5-sigma’ threshold for discovery, but the accumulated evidence is prompting physicists to re-evaluate long-held assumptions and to explore extensions of the Standard Model that could account for these deviations.

These deviations are collectively known as the ‘B anomalies’. They are not isolated incidents: discrepancies appear across multiple decay pathways, indicating a systematic challenge to established physics. The observed deviations are not large enough to constitute definitive proof of new physics, but their persistence and variety compel physicists to revisit fundamental assumptions about how particles interact and transform. This calls for a thorough re-evaluation of the Standard Model’s framework for ‘flavor physics’, the study of how the different types (flavors) of quarks and leptons transform into one another, and fuels intense investigation into extensions that could account for these results. Candidate explanations include new particles mediating these decays or new forces acting on quarks and leptons.

Investigations into the fundamental forces governing particle decay are increasingly focused on lepton universality tests, which examine whether the charged leptons (electrons, muons, and taus) couple identically to the electroweak force, apart from effects of their different masses. Leptons do not feel the strong force, so any difference between lepton species in otherwise identical decays is a clean probe of new interactions. Recent measurements of R_K, a ratio of decay rates involving muons and electrons, deviate from predictions based on the Standard Model of particle physics with a significance between 2.1 and 2.5 sigma. While not proof of new physics, this discrepancy leaves room for undiscovered particles or interactions influencing these decays, prompting rigorous theoretical and experimental efforts to confirm or refute the anomalies.
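For reference, the ratio R_K mentioned above is conventionally defined as follows. This is the standard textbook definition rather than a formula quoted from the review, and the dilepton invariant-mass-squared range q^2 over which the branching fractions are integrated varies between analyses.

```latex
R_K = \frac{\mathcal{B}(B^+ \to K^+ \mu^+ \mu^-)}{\mathcal{B}(B^+ \to K^+ e^+ e^-)}
```

If lepton universality holds, R_K is expected to be very close to 1, because the muon and electron masses are both small compared with the B meson mass.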

The Experimental Lens: Precision Decay Measurements

The LHCb and Belle II experiments are specifically designed for high-luminosity data collection focused on B meson decays. LHCb, located at the Large Hadron Collider, leverages proton-proton collisions to generate a large sample of B mesons, while Belle II, at the SuperKEKB collider, utilizes electron-positron annihilation. Both experiments employ sophisticated tracking systems, calorimeters, and particle identification detectors to reconstruct decay vertices and identify decay products with high efficiency. This allows for the collection of statistically significant datasets essential for precision measurements of decay branching ratios, lifetimes, and kinematic properties of B mesons, ultimately probing for deviations from Standard Model predictions.

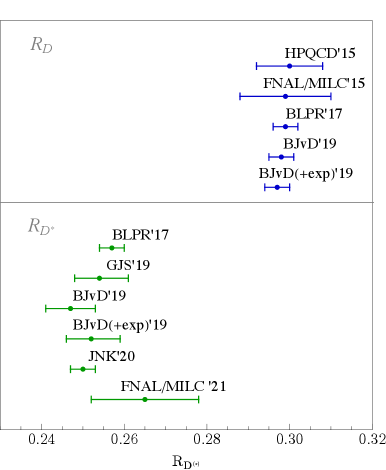

The R_{D^{*}} ratio, defined as the branching fraction of B meson decays into a D^{*} meson, a tau lepton, and a neutrino divided by the branching fraction of the corresponding decays into a D^{*} meson, a light lepton (electron or muon), and a neutrino, is a key observable in searches for physics beyond the Standard Model. Discrepancies between Standard Model predictions and experimental measurements of R_{D^{*}} could indicate contributions from new particles or interactions. Current combined measurements from experiments such as LHCb and Belle II show a deviation from the Standard Model prediction at a significance level of 3.3σ, prompting continued investigation and higher-precision measurements to confirm or refute this potential new physics signal.
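To make the notion of an "N sigma" deviation concrete, here is a minimal Python sketch of a one-dimensional pull. It assumes independent Gaussian uncertainties on the measurement and the prediction. The function name `pull` and all numbers are hypothetical and chosen only to illustrate the arithmetic. Combined significances such as the one quoted above typically also account for correlations between observables, which this sketch ignores.

```python
import math


def pull(measured, sigma_measured, predicted, sigma_predicted=0.0):
    """Deviation between a measurement and a prediction, in units of the
    combined standard deviation (independent Gaussian errors assumed)."""
    combined = math.hypot(sigma_measured, sigma_predicted)
    if combined == 0.0:
        raise ValueError("at least one uncertainty must be non-zero")
    return (measured - predicted) / combined


# Hypothetical inputs, not experimental values.
example = pull(measured=0.30, sigma_measured=0.03,
               predicted=0.25, sigma_predicted=0.04)
print(f"pull = {example:.2f} sigma")
```

Adding the two uncertainties in quadrature is the simplest choice. A real analysis would use the full covariance matrix of the inputs.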

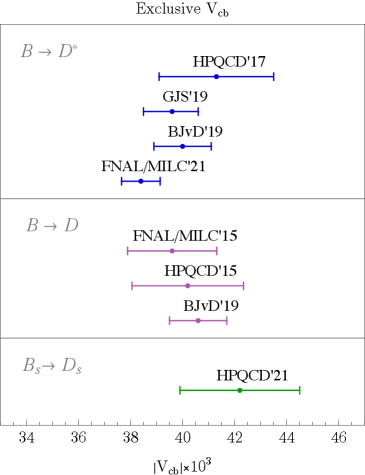

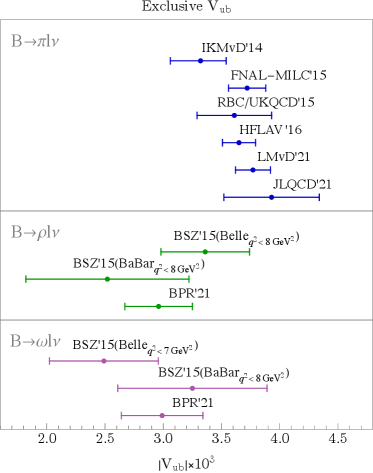

Current and planned upgrades to the LHCb and Belle II experiments are projected to significantly improve the precision of several key Standard Model parameters. Specifically, measurements of the Cabibbo-Kobayashi-Maskawa (CKM) matrix elements V_{ub} and V_{cb} are anticipated to achieve sub-percent level precision with the collection of upcoming data. Furthermore, measurements of R_K, a quantity sensitive to lepton universality, are projected to reach sub-percent precision with the accumulation of 300 fb^{-1} of integrated luminosity at the LHCb experiment. These improved measurements will provide stringent tests of the Standard Model and offer increased sensitivity to potential new physics contributions.
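A rough feel for why more integrated luminosity helps can be had from the usual statistical scaling, in which a purely statistical uncertainty shrinks as one over the square root of the dataset size. The Python sketch below assumes exactly that scaling and nothing else. The function name and the example inputs are hypothetical, and real projections also include systematic uncertainties that do not scale this way.

```python
import math


def projected_stat_uncertainty(current_uncertainty, current_lumi, target_lumi):
    """Scale a purely statistical uncertainty as 1/sqrt(integrated luminosity).

    Luminosities must share the same units (for example fb^-1).
    """
    if current_lumi <= 0 or target_lumi <= 0:
        raise ValueError("luminosities must be positive")
    return current_uncertainty * math.sqrt(current_lumi / target_lumi)


# Hypothetical example: a 4% statistical uncertainty at 9 fb^-1,
# projected to 300 fb^-1.
projected = projected_stat_uncertainty(0.04, current_lumi=9.0, target_lumi=300.0)
print(f"projected relative uncertainty: {projected:.4f}")
```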

The Theoretical Framework: Calculating Decay Rates

Form factors are essential quantities in calculating hadronic decay rates because they parameterize the non-perturbative strong interaction dynamics that govern the transition between the initial and final hadronic states. Hadronic decays, involving particles composed of quarks and gluons, cannot be calculated solely from perturbative Quantum Chromodynamics (QCD) due to the confinement of quarks. Form factors, denoted symbolically as f(q^2), effectively model the probability amplitude for the strong interaction process occurring within the hadron. These factors depend on the momentum transfer q^2 and encapsulate the complex internal structure of the hadrons involved, including their size, shape, and quark distribution. Determining form factors requires either phenomenological models fitted to experimental data or non-perturbative calculations, such as Lattice QCD, to provide reliable predictions for decay rates.
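One widely used way to parameterize the q^2 dependence of a form factor is a power series in a conformal variable z, often called the z-expansion. The Python sketch below shows a simplified version of that idea. It omits the pole and outer-function prefactors used in full analyses. The function names, the expansion coefficients, and the threshold value are illustrative assumptions, not values taken from the review.

```python
import math


def z_variable(q2, t_plus, t0=0.0):
    """Map q^2 to the conformal variable z.

    t_plus is the pair-production threshold (m_parent + m_daughter)^2 and
    t0 is a free reference point at which z vanishes.
    """
    if q2 >= t_plus or t0 >= t_plus:
        raise ValueError("q2 and t0 must lie below the threshold t_plus")
    a = math.sqrt(t_plus - q2)
    b = math.sqrt(t_plus - t0)
    return (a - b) / (a + b)


def form_factor(q2, coeffs, t_plus, t0=0.0):
    """Truncated series f(q^2) = sum_k a_k * z^k (prefactors omitted)."""
    z = z_variable(q2, t_plus, t0)
    return sum(a_k * z**k for k, a_k in enumerate(coeffs))


# Approximate masses in GeV, used only to set an illustrative threshold.
m_parent, m_daughter = 5.28, 2.01
t_plus = (m_parent + m_daughter) ** 2
coeffs = [0.7, -1.0]  # hypothetical expansion coefficients

for q2 in (0.0, 5.0, 10.0):
    print(q2, form_factor(q2, coeffs, t_plus))
```

Because |z| stays small over the physical q^2 range, a few coefficients are usually enough, which is why lattice results and experimental data are often both fitted in this form.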

Lattice Quantum Chromodynamics (LQCD) calculations represent a non-perturbative approach to determining form factors, which are essential parameters in the analysis of hadronic decays. These calculations discretize spacetime into a four-dimensional lattice, allowing for the numerical solution of the strong interaction equations. By simulating the interactions of quarks and gluons, LQCD directly computes the matrix elements defining these form factors without relying on phenomenological models. The process involves significant computational resources and requires careful control of systematic uncertainties arising from lattice spacing, finite volume effects, and the approximation of quark masses. Results from LQCD calculations provide a crucial theoretical baseline for comparison with experimental measurements, enabling precise determination of parameters such as the CKM matrix elements and searches for physics beyond the Standard Model.
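The control of lattice-spacing effects mentioned above is typically done by computing the same quantity at several lattice spacings a and extrapolating to a = 0. The Python sketch below assumes the leading discretization error is proportional to a^2, which depends on the lattice action used, and fits a straight line in a^2 by ordinary least squares. The function name and the synthetic data are hypothetical, and a real analysis would weight points by their uncertainties.

```python
def continuum_extrapolate(lattice_spacings, values):
    """Unweighted least-squares fit of values = f0 + c * a**2.

    Returns (f0, c), where f0 is the continuum-limit estimate at a = 0.
    """
    x = [a * a for a in lattice_spacings]
    n = len(x)
    if n < 2 or n != len(values):
        raise ValueError("need at least two points of matching length")
    mean_x = sum(x) / n
    mean_y = sum(values) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0.0:
        raise ValueError("lattice spacings must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, values))
    c = sxy / sxx
    f0 = mean_y - c * mean_x
    return f0, c


# Synthetic data generated from f0 = 0.9, c = -2.0 (not lattice results).
spacings = [0.12, 0.09, 0.06]  # in fm
data = [0.9 - 2.0 * a * a for a in spacings]
f0, c = continuum_extrapolate(spacings, data)
print(f"continuum estimate f0 = {f0:.4f}, slope c = {c:.4f}")
```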

Heavy Quark Effective Theory (HQET) corrections are systematically applied to Lattice QCD (LQCD) calculations of form factors to enhance predictive power. These corrections account for the finite mass of the heavy quark (typically a bottom or charm quark), which is treated as infinite at leading order in the HQET expansion. By including terms proportional to 1/m_Q (where m_Q represents the heavy quark mass), HQET significantly reduces the computational cost associated with simulating heavy quarks on the lattice while maintaining theoretical accuracy. Specifically, HQET modifies the operator basis used in the calculation, leading to a more well-behaved discretization and reduced continuum extrapolation errors. The inclusion of higher-order corrections, such as 1/m_Q^2 terms, further improves the precision of theoretical predictions for hadronic decay rates.

Beyond the Standard Model: Implications and the Road Ahead

The observed rates of B \to K^{*} \ell \ell transitions and of B_s meson decays deviate from predictions made by the Standard Model of particle physics. Although none of these results is individually conclusive, together they form a growing body of experimental evidence hinting at the involvement of previously unknown particles or forces. Specifically, the measured branching fractions and angular distributions in B \to K^{*} \ell \ell do not align with the Standard Model’s calculated values, while the B_s \to \mu \mu decay rate is lower than expected. Taken together, these results suggest that the Standard Model is incomplete and that new physics (perhaps involving additional dimensions, new particles mediating interactions, or modifications to known particle properties) may be required to explain these observations, prompting intensive research into extensions of the current theoretical framework.

The observed discrepancies in B meson decays are not merely dismissed as statistical fluctuations; instead, physicists employ a powerful tool known as Wilson coefficients to systematically explore potential new physics. These coefficients effectively serve as handles, allowing theorists to parameterize deviations from Standard Model predictions without committing to a specific new physics model upfront. By precisely measuring these coefficients – which quantify the strength of various interactions – researchers can constrain the parameter space of numerous beyond-the-Standard-Model scenarios, including those involving leptoquarks, heavy bosons, or extra dimensions. This approach enables a model-independent analysis, where experimental results directly inform the viability of a broad range of theoretical frameworks, ultimately guiding the search for the fundamental laws governing particle behavior and potentially revealing a more complete picture of the universe.
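In equations, Wilson coefficients appear as the prefactors of operators in a low-energy effective Hamiltonian. For b \to s \ell \ell transitions, one common convention is shown below. Normalizations and operator bases differ between papers, so this is an illustrative form rather than the one used in the review.

```latex
\mathcal{H}_{\text{eff}} = -\frac{4 G_F}{\sqrt{2}}\, V_{tb} V_{ts}^{*}\, \frac{\alpha_{\text{em}}}{4\pi} \sum_i C_i(\mu)\, \mathcal{O}_i(\mu) + \text{h.c.}

\mathcal{O}_9 = (\bar{s} \gamma_\mu P_L b)(\bar{\ell} \gamma^\mu \ell), \qquad
\mathcal{O}_{10} = (\bar{s} \gamma_\mu P_L b)(\bar{\ell} \gamma^\mu \gamma_5 \ell)

C_i = C_i^{\text{SM}} + C_i^{\text{NP}}
```

Here G_F is the Fermi constant, V_{tb} and V_{ts} are CKM matrix elements, and \mu is the renormalization scale. A fit to data that prefers a non-zero C_i^{\text{NP}} would signal a deviation from the Standard Model without specifying which new particle is responsible.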

The future of particle physics hinges on a concerted effort to refine both experimental precision and theoretical understanding within the realm of flavor physics. Current discrepancies demand investigations that push the boundaries of measurement accuracy, requiring upgrades to existing experiments and the development of new facilities capable of scrutinizing particle decays with unprecedented detail. Simultaneously, advanced theoretical calculations, incorporating techniques beyond standard perturbative approaches, are essential to reduce uncertainties in predictions and accurately interpret experimental results. This synergistic approach, combining data from ongoing experiments like LHCb and Belle II with sophisticated theoretical modeling, promises to either solidify the Standard Model or, more excitingly, reveal the signatures of new particles and interactions, potentially revolutionizing humanity’s understanding of the fundamental constituents of the universe and the forces that govern them.

The pursuit of precision in heavy flavor physics, as detailed in this review, reveals a landscape where even subtle discrepancies demand rigorous examination. The observed anomalies in lepton universality tests and the tensions between inclusive and exclusive determinations of parameters like V_{cb} and V_{ub} highlight the inherent fragility of seemingly established models. This echoes Blaise Pascal’s sentiment: “The eloquence of youth is that it knows nothing.” In this context, the ‘eloquence’ represents the confidence in current theoretical frameworks, and the ‘knowing nothing’ signifies the gaps in understanding exposed by experimental results. A simple, elegant theoretical structure, much like a well-designed system, must account for all observations; when it fails to do so, a more fundamental reassessment is required. The continued work at facilities like LHCb and Belle II is essential to resolve these tensions and refine the underlying theory.

The Road Ahead

The persistent tensions between inclusive and exclusive determinations of parameters like V_{cb} and V_{ub} suggest the current theoretical infrastructure requires more than superficial patching. One does not simply replace a cracked paving stone without considering the load-bearing capacity of the entire street. The field now demands a holistic reassessment of the underlying assumptions and methodologies employed in both approaches. The apparent anomalies in lepton universality, while intriguing, serve as insistent reminders that established frameworks may not fully encompass the observed reality.

Future progress hinges not solely on accumulating more data – though the continued operation of LHCb and the burgeoning capabilities of Belle II are undeniably crucial – but on refining the theoretical models. The challenge lies in building a cohesive structure, one where predictions from different theoretical avenues converge with greater consistency. This necessitates a deeper understanding of hadronic form factors, non-perturbative QCD effects, and the potential influence of new physics beyond the Standard Model.

Ultimately, the evolution of heavy flavor physics will resemble the gradual remodeling of a city, not a sudden demolition and rebuild. The existing foundations, while imperfect, provide a valuable framework for future development. The task now is to reinforce, adapt, and expand upon that framework, ensuring that the resulting structure is both robust and elegantly designed to accommodate the complexities of the subatomic world.

Original article: https://arxiv.org/pdf/2602.11997.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-13 17:59