Author: Denis Avetisyan

New research suggests a 17 MeV boson could bridge the gap between dark matter and the Standard Model, offering a potential explanation for observed anomalies.

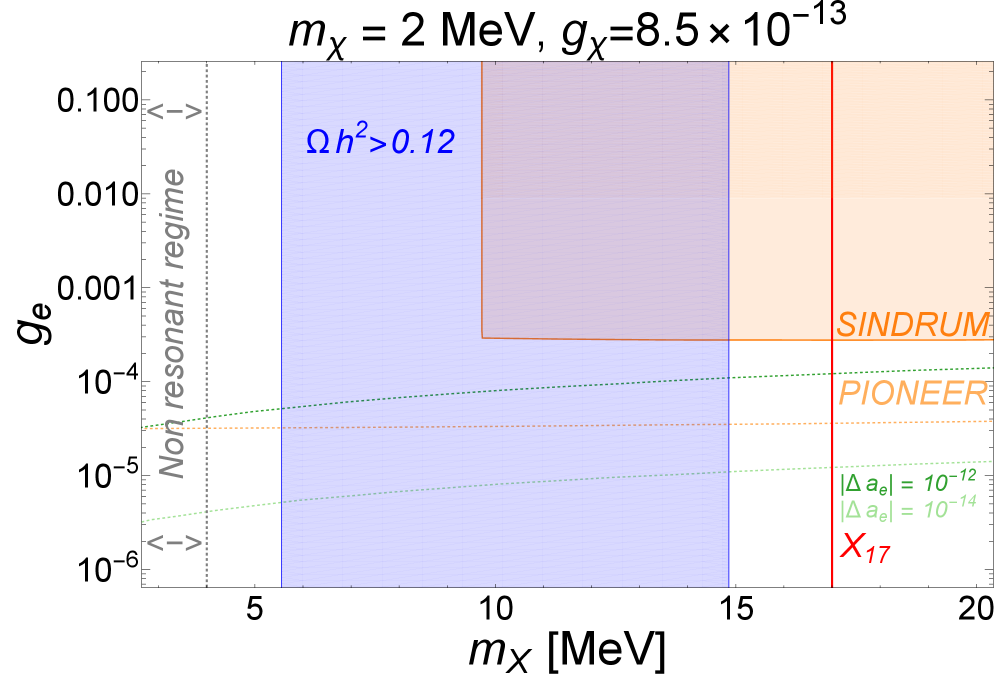

This study explores a thermal freeze-in model where a light vector boson mediates interactions between dark matter and electrons, consistent with relic abundance and indirect detection constraints.

The persistent mystery of dark matter remains a fundamental challenge to the Standard Model of particle physics, prompting exploration of novel interaction pathways. This research, presented in ‘Light dark sector via thermal decays of Dark Matter: the case of a 17 MeV particle coupled to electrons’, investigates a model wherein a 17 MeV boson mediates interactions between dark matter and Standard Model particles, potentially explaining recent anomalies observed by the ATOMKI and PADME collaborations. We demonstrate the existence of viable parameter space consistent with observed relic abundance and astrophysical constraints, offering a compelling connection between these anomalies and the dark matter puzzle. Could this light mediator unlock a pathway toward definitively identifying the nature and origin of dark matter and its interaction with the visible universe?

The Universe’s Hidden Architecture: A Dark Matter Prologue

The universe, as currently understood, presents a profound discrepancy: visible matter – everything that emits or interacts with light – accounts for only a small fraction of its total mass. Compelling astronomical observations, ranging from galactic rotation curves to the cosmic microwave background and gravitational lensing, consistently indicate the presence of a substantial amount of unseen matter, dubbed “dark matter”. This elusive substance doesn’t interact with light in any appreciable way, rendering it invisible to telescopes, yet its gravitational effects are undeniably present. Estimates suggest that dark matter constitutes approximately 85% of the universe’s total matter content, dwarfing the amount of ordinary, baryonic matter. The existence of dark matter isn’t merely a theoretical construct; it’s a necessary component to explain the structure and evolution of galaxies and the large-scale cosmic web, presenting one of the most significant challenges to modern physics.

Despite decades of research, attempts to reconcile dark matter with extensions of the Standard Model – such as supersymmetry or extra dimensions – have proven largely unsuccessful. These frameworks often predict dark matter candidates that either interact too strongly with ordinary matter, already ruled out by experimental searches, or exhibit interaction strengths that are simply insufficient to explain observed galactic structures. This persistent challenge has driven theoretical physicists to explore radically new interaction frameworks, moving beyond conventional particle physics paradigms. These investigations encompass scenarios involving interactions mediated by “dark photons,” hidden sector particles, or even entirely novel forces that couple weakly, if at all, to the known universe, offering a pathway towards a more complete understanding of this elusive substance and its role in the cosmos.

The persistent mystery of dark matter necessitates a departure from the well-established Standard Model of particle physics. Current theoretical frameworks, while remarkably successful in describing known particles and forces, fail to account for the observed gravitational effects attributed to this invisible substance. Consequently, physicists are actively investigating extensions to the Standard Model, proposing a range of new particles – such as Weakly Interacting Massive Particles (WIMPs), axions, and sterile neutrinos – and innovative interaction mechanisms. These proposed particles differ from those within the Standard Model, potentially interacting through forces beyond electromagnetism, the strong force, and the weak force. Exploring these uncharted territories of particle physics is crucial to unraveling the true nature of dark matter and achieving a complete understanding of the universe’s composition.

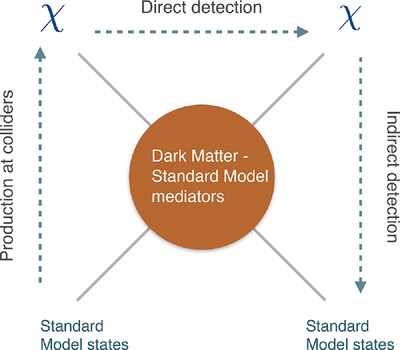

Mapping the Shadows: An Effective Field Theory Framework

An Effective Field Theory (EFT) provides a systematic approach to describing interactions between dark matter and Standard Model particles by organizing all possible interactions based on their dimensionality and relevance to the energy scale under consideration. This methodology avoids the need for a complete, high-energy theory and instead focuses on the low-energy degrees of freedom, treating higher-energy effects as corrections. The EFT is constructed by identifying the relevant fields – dark matter candidates and Standard Model particles – and writing down all possible terms in the Lagrangian consistent with the underlying symmetries, such as gauge invariance. Each term includes a corresponding Wilson coefficient, representing the strength of that specific interaction, and these coefficients are treated as free parameters to be constrained by experimental data. By systematically including terms of increasing dimensionality, the EFT provides a controlled expansion, allowing for increasingly accurate predictions while minimizing the impact of unknown high-energy physics.

The Lagrangian density is the central mathematical object in constructing a theoretical description of particle interactions within this framework. It is a function of the particle fields and their derivatives, designed to be invariant under local gauge transformations – meaning the physics remains unchanged when the fields undergo a specific type of transformation dependent on spacetime coordinates. This invariance dictates the form of interactions and necessitates the inclusion of gauge bosons as mediator particles. \mathcal{L} contains all possible terms consistent with the assumed symmetries, with each term representing a distinct interaction process and associated coupling constant. By systematically constructing and analyzing the Lagrangian, we can predict and interpret the outcomes of particle interactions, providing a complete and consistent theoretical model.

The Effective Field Theory approach allows for interactions between dark matter and Standard Model particles to be mediated by a third particle, effectively acting as a force carrier. This is achieved through derivative couplings within the Lagrangian density, where the mediator (potentially exemplified by the X17 boson with a mass of approximately 17 MeV) appears as an exchanged particle in Feynman diagrams representing scattering events. The inclusion of such a mediator simplifies calculations by providing a specific interaction channel and allows for the systematic study of dark matter interactions with visible sector particles, even when the fundamental theory governing these interactions is unknown. The mass and coupling strength of this mediator particle are key parameters in determining the interaction cross-section and, consequently, the detectability of dark matter.
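As a rough illustration of what such a mediator looks like on paper, the block below sketches a generic Lagrangian for a massive vector boson X coupled to electrons and to a dark fermion. It is a textbook-style template, not the operator basis of the paper: the symbols g_e, g_\chi and m_X are illustrative, \chi is assumed to be a Dirac fermion, and simple vector couplings are assumed in place of the derivative or pseudo-Yukawa couplings mentioned in the text.

```latex
% Schematic only: assumes a Dirac fermion \chi and pure vector couplings.
% g_e, g_\chi and m_X are illustrative symbols, not values from the paper.
\mathcal{L} \supset
  -\frac{1}{4} X_{\mu\nu} X^{\mu\nu}
  + \frac{1}{2} m_X^2 \, X_\mu X^\mu
  + g_e \, \bar{e} \gamma^\mu e \, X_\mu
  + g_\chi \, \bar{\chi} \gamma^\mu \chi \, X_\mu ,
\qquad m_X \approx 17~\text{MeV}
```

The first two terms give the mediator its kinetic energy and mass; the last two are the interaction vertices that appear at each end of the exchanged X line in a Feynman diagram.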

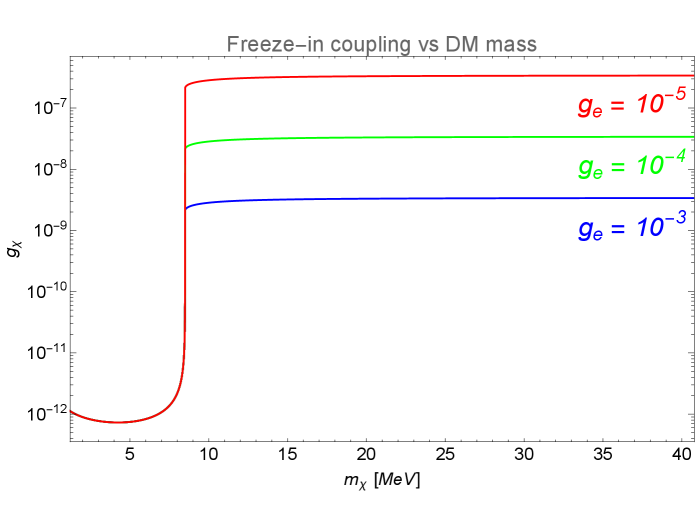

The strength of interactions between dark matter and Standard Model particles is quantified by the \text{DarkSectorCoupling} parameter within the Lagrangian density. Our analysis constrains the viable parameter space for this coupling, specifically when considering a mediator particle with a mass of 17 MeV. This parameter space is determined through calculations involving scattering cross-sections and decay rates, ensuring consistency with existing experimental bounds from direct detection experiments and collider searches. The established viable range for \text{DarkSectorCoupling} is dependent on the specific dark matter particle mass and interaction type, but our results indicate that detectable signals are possible within a defined set of parameter values for a 17 MeV mediator.

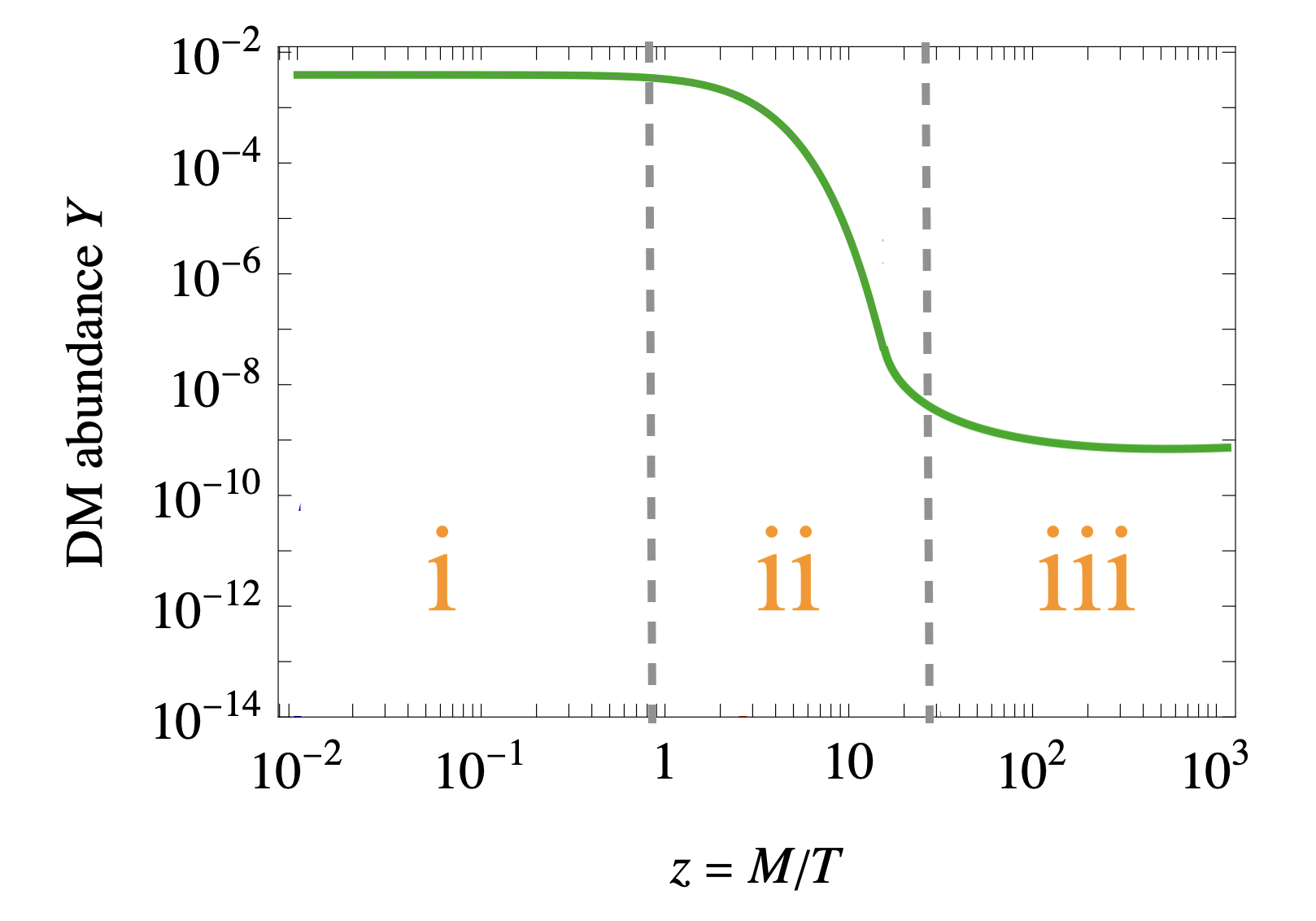

The Genesis of Shadows: A Freeze-In Narrative

The Freeze-In mechanism describes dark matter (DM) production via the gradual conversion of particles from a thermal bath. Unlike scenarios assuming thermal equilibrium, Freeze-In postulates an initially negligible DM abundance that grows through interactions with Standard Model particles. These interactions are mathematically defined using the Lagrangian density, which specifies the interaction rates and cross-sections governing DM production and annihilation. The rate of DM production is determined by these interactions, while the ‘freeze-in’ condition arises when the expansion rate of the universe becomes comparable to the interaction rate, effectively halting further DM production. This non-equilibrium process necessitates the use of kinetic equations, such as the Boltzmann equation, to accurately calculate the resulting DM relic abundance.
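In equation form, the freeze-in picture can be sketched as follows. This is the generic textbook form of the Boltzmann equation for a species that starts with negligible abundance, not the specific equation solved in the paper: the collision term \mathcal{C}(T), the reference mass m, and the assumption of a constant number of relativistic degrees of freedom are simplifications introduced here.

```latex
% Generic freeze-in Boltzmann equation (inverse processes neglected
% because n_\chi stays far below its equilibrium value).
\frac{dn_\chi}{dt} + 3 H n_\chi = \mathcal{C}(T)

% In terms of the yield Y = n_\chi / s and x = m / T, assuming constant g_*:
\frac{dY}{dx} = \frac{\mathcal{C}(T)}{s \, H \, x},
\qquad Y(x \to 0) \simeq 0
```

Because the right-hand side contains only production, Y grows monotonically and levels off once the source term becomes negligible compared with the dilution from expansion.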

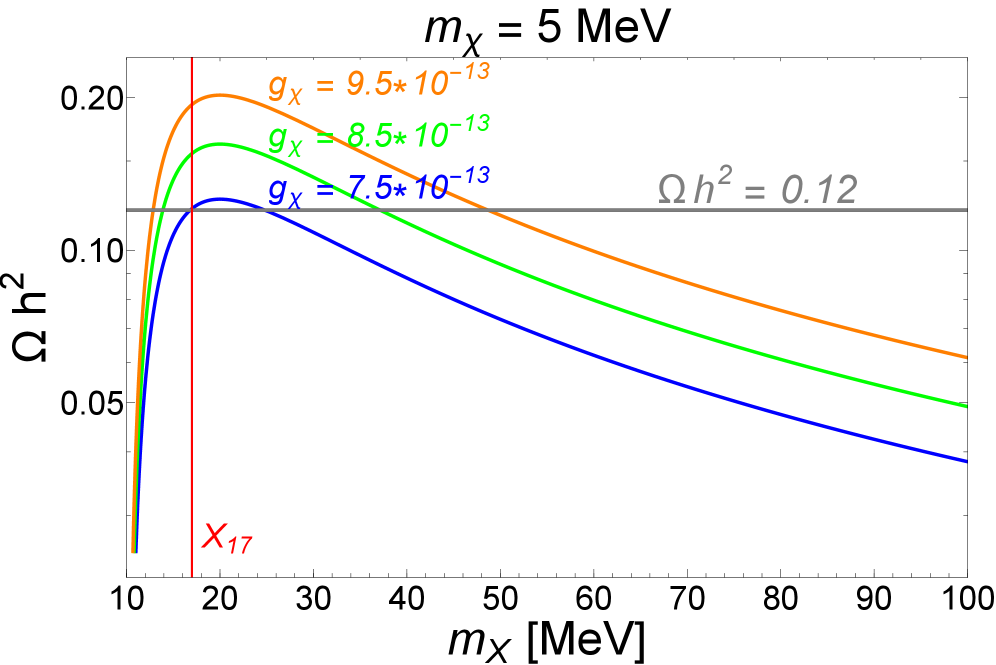

The relic abundance of dark matter produced via the Freeze-In mechanism is calculated using the Boltzmann Equation, a kinetic equation describing the time evolution of particle densities. This equation accounts for both the expansion of the universe and the interactions between dark matter candidates and Standard Model particles. Specifically, the equation tracks the growth of the dark matter number density from a negligible initial value; the dark matter never reaches thermal equilibrium with the bath, and its abundance ‘freezes in’ once production becomes inefficient. The solution to the Boltzmann Equation yields an expression for the present-day relic abundance, \Omega_{\chi} h^2 , which can be directly compared with observational constraints from the Planck satellite and other cosmological probes. This allows for the determination of the parameter space – specifically interaction rates and particle masses – consistent with the observed dark matter density.
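To give a feel for the numbers, here is a minimal Python sketch of the standard closed-form estimate for freeze-in through the decay of a bath particle, obtained by integrating the Boltzmann equation with Maxwell-Boltzmann statistics and constant g_*. It is not the calculation performed in the paper: the function names, the default g_* = 10.75, and the example masses are all assumptions chosen for illustration.

```python
import math

# Physical constants (natural units, GeV); values are standard reference numbers.
M_PLANCK_GEV = 1.22e19        # non-reduced Planck mass
S0_PER_CM3 = 2891.2           # present-day entropy density in cm^-3
RHO_CRIT_OVER_H2 = 1.0537e-5  # critical density divided by h^2, in GeV cm^-3


def freeze_in_yield(gamma_gev, m_parent_gev, g_parent=1.0,
                    g_star_s=10.75, g_star_rho=10.75):
    """Asymptotic yield Y = n/s from decays of a bath particle (hypothetical parent).

    Assumes Maxwell-Boltzmann statistics, constant g_* (illustrative default),
    and a negligible initial dark matter abundance.
    """
    prefactor = 135.0 / (8.0 * math.pi ** 3 * 1.66)
    return (prefactor * g_parent * M_PLANCK_GEV * gamma_gev
            / (g_star_s * math.sqrt(g_star_rho) * m_parent_gev ** 2))


def relic_abundance(yield_today, m_dm_gev):
    """Convert a yield into Omega h^2 for a dark matter mass given in GeV."""
    return m_dm_gev * yield_today * S0_PER_CM3 / RHO_CRIT_OVER_H2


def width_for_target(omega_h2_target, m_parent_gev, m_dm_gev):
    """Partial width (GeV) that reproduces a target Omega h^2.

    Uses the fact that the freeze-in abundance is linear in the width.
    """
    omega_per_unit_width = relic_abundance(
        freeze_in_yield(1.0, m_parent_gev), m_dm_gev)
    return omega_h2_target / omega_per_unit_width


if __name__ == "__main__":
    # Hypothetical example masses, not taken from the paper.
    gamma = width_for_target(0.12, m_parent_gev=0.05, m_dm_gev=0.01)
    print(f"Width needed for Omega h^2 = 0.12: {gamma:.3e} GeV")
```

The linear dependence on the decay width is the hallmark of freeze-in: doubling the coupling-squared doubles the abundance, which is the opposite of the freeze-out behaviour.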

The Freeze-In mechanism provides a unified framework for calculating the relic abundance of diverse dark matter candidates. Specifically, both Majorana fermions and right-handed neutrinos can be consistently modeled by defining their interactions via a pseudo-Yukawa coupling g_ν within the Lagrangian density. This approach allows for the calculation of the thermally averaged cross-section \langle \sigma v \rangle relevant to the Freeze-In process, irrespective of the specific dark matter particle’s spin or self-conjugate nature. The Boltzmann equation is then solved using this cross-section to determine the resulting dark matter relic abundance, demonstrating the broad applicability of the framework beyond a single candidate.

Calculations based on the Freeze-In mechanism demonstrate that the predicted dark matter relic abundance aligns with current observational data. This consistency is achieved with a pseudo-Yukawa coupling, denoted as g_ν, constrained to be less than 10-20 MeV. This upper limit on g_ν is critical for ensuring the stability of the dark matter candidate, preventing rapid decay and maintaining the observed abundance throughout cosmic history. Deviations from this coupling strength would result in either an overabundance or a complete lack of dark matter, contradicting cosmological measurements.

Echoes in the Void: Prospects for Indirect Detection

The search for dark matter extends beyond direct and collider experiments to encompass indirect detection methods, which seek to identify the subtle fingerprints of dark matter interactions within the cosmos. This approach hinges on the premise that dark matter particles may occasionally annihilate or decay, producing detectable standard model particles like photons, positrons, and neutrinos. These secondary products then propagate through space, potentially appearing as excesses above the expected astrophysical background. By meticulously examining gamma-ray, cosmic-ray, and neutrino fluxes from regions with high dark matter concentrations – such as the galactic center, dwarf galaxies, and galaxy clusters – scientists hope to uncover evidence of these annihilation or decay events, offering a complementary route to understanding the nature and properties of this elusive substance. The strength of any observable signal is intimately linked to the dark matter’s abundance and interaction rates, making indirect detection a powerful tool for probing the particle physics of the dark universe.
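The link between particle properties and the observable signal can be made concrete with the standard expressions for the differential photon flux from annihilating or decaying dark matter. These are generic formulas, not results from the paper; the annihilation expression assumes self-conjugate dark matter, and the spectrum dN_\gamma/dE, the density profile \rho_\chi, and the decay width \Gamma_\chi are placeholders that depend on the model and target.

```latex
% Annihilation (self-conjugate dark matter assumed):
\frac{d\Phi_\gamma}{dE} =
  \frac{\langle \sigma v \rangle}{8 \pi m_\chi^2} \,
  \frac{dN_\gamma}{dE}
  \int_{\Delta\Omega} d\Omega \int_{\text{l.o.s.}} \rho_\chi^2 \, d\ell

% Decay:
\frac{d\Phi_\gamma}{dE} =
  \frac{\Gamma_\chi}{4 \pi m_\chi} \,
  \frac{dN_\gamma}{dE}
  \int_{\Delta\Omega} d\Omega \int_{\text{l.o.s.}} \rho_\chi \, d\ell
```

The annihilation signal scales with the square of the density and so favours the densest regions, while the decay signal scales linearly with density.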

X-ray observations represent a crucial avenue in the search for dark matter, predicated on the possibility that these elusive particles interact and annihilate, or decay, producing detectable photons. These interactions, theorized to occur within regions of high dark matter density – such as the galactic center or dwarf galaxies – would manifest as an excess of X-rays beyond what is attributable to conventional astrophysical sources. Telescopes like XMM-Newton and Chandra are therefore employed to meticulously scan these regions, seeking subtle spectral signatures or spatial distributions indicative of dark matter annihilation. The intensity of the observed X-ray signal is directly linked to the annihilation rate, which itself is dependent on the dark matter particle’s properties and abundance; thus, precise X-ray measurements provide constraints on the theoretical parameter space governing dark matter interactions and offer a powerful probe into its fundamental nature.

The anticipated quantity of dark matter present in the universe, known as its relic abundance, fundamentally dictates the intensity of signals expected from indirect detection methods. In the freeze-in scenario considered here, dark matter particles are produced gradually from the thermal bath after the Big Bang, establishing a present-day density determined by their interaction strength. A higher relic abundance implies a greater number of dark matter particles available to annihilate or decay, consequently boosting the flux of detectable byproducts like gamma rays, cosmic rays, or neutrinos. The dependence on coupling differs between production mechanisms: in conventional freeze-out, a weaker interaction strength results in a larger relic abundance but a diminished annihilation rate per particle, whereas in freeze-in a weaker coupling yields a smaller abundance, creating a complex relationship between particle properties and observable signals. Therefore, precise calculations of the relic abundance, informed by cosmological observations and theoretical models, are crucial for interpreting indirect detection data and effectively narrowing the search for the nature of dark matter.

Recent analysis of data gathered by the XMM-Newton observatory places a stringent upper limit on the dark matter annihilation cross-section, finding it to be less than 10^{-26} \text{ cm}^3/\text{s}. This constraint arises from the search for excess photons (a potential signature of dark matter particles colliding and self-annihilating) in regions where dark matter is expected to be concentrated. While non-detection doesn’t rule out dark matter, it significantly narrows the range of possible properties for weakly interacting massive particles (WIMPs). The findings define a viable parameter space for dark matter models, aligning with current observational data and providing crucial guidance for future searches that aim to directly or indirectly detect these elusive particles.

The pursuit of a dark sector, as detailed in this research concerning a 17 MeV particle, mirrors a fundamental truth about complex systems. A model positing interaction between dark matter and the Standard Model, constrained by relic abundance and astrophysical observations, isn’t a finalized structure but a nascent ecosystem. As Mary Wollstonecraft observed, “It is time to revive the drooping spirits of humanity,” and this work attempts just that – a revival of understanding regarding the universe’s hidden components. The parameter space identified isn’t a solution, but rather a fertile ground where further refinement – and inevitable ‘failures’ revealing new insights – will occur. A system that never breaks is, after all, demonstrably dead, offering no space for growth or adaptation.

The Inevitable Complications

This exercise, linking a 17 MeV mediator to the dark matter relic density, feels less like a solution and more like a carefully constructed boundary condition. The parameter space, however constrained, merely shifts the locus of inevitable failure. Future iterations will undoubtedly uncover additional, previously unconsidered couplings: a new source of tension between theoretical elegance and the universe’s insistent messiness. Each deployment of a model like this is a small apocalypse for the assumptions it rests upon.

The reliance on effective field theory, while pragmatic, carries its own quiet prophecy. The ‘true’ underlying theory, if it exists at all, will almost certainly invalidate some aspect of the chosen operator basis. The search for indirect detection signals, predicated on specific decay pathways, feels particularly fragile. Any positive detection will be met with immediate attempts to map it onto this framework, a backwards fitting of data to a model already straining at its seams.

One anticipates a proliferation of increasingly complex models, each attempting to absorb the latest observational constraints. Documentation, of course, will lag far behind; no one writes prophecies after they come true. The real progress, if there is any, will likely come from abandoning the search for the dark matter particle and embracing the possibility of a dark sector as inherently multi-component and intrinsically unknowable in its entirety.

Original article: https://arxiv.org/pdf/2602.17620.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 21:44