Author: Denis Avetisyan

Researchers have developed a flexible Python framework to streamline complex optical experiments on quantum materials, boosting reproducibility and data acquisition speed.

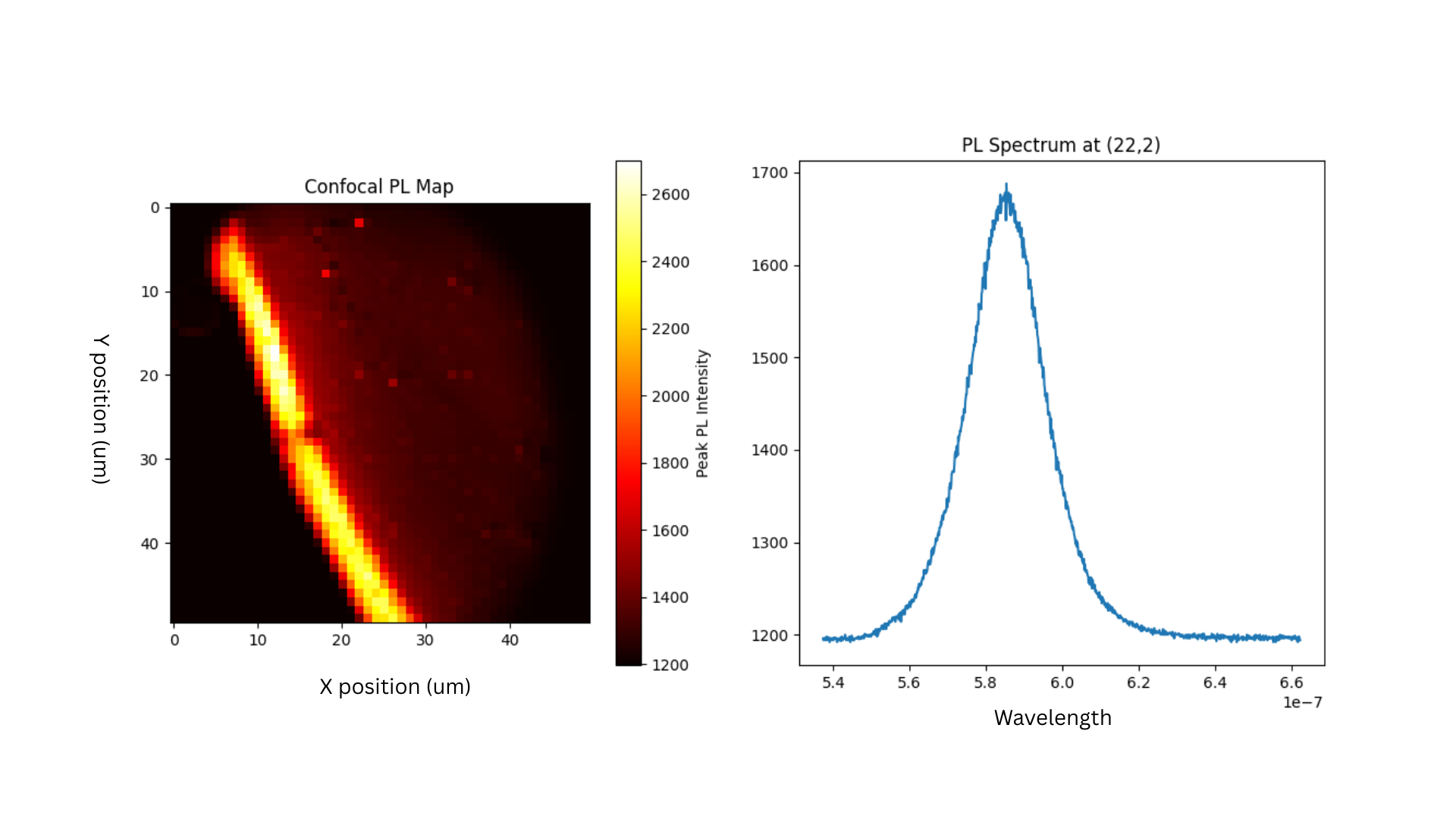

PhoQuPy enables automated photoluminescence mapping, single-photon detection, and other techniques crucial for characterizing quantum materials and devices.

Reproducible and efficient characterization remains a significant challenge in the rapidly evolving field of quantum materials research. To address this, we present ‘PhoQuPy: A Python framework for Automation of Quantum Optics experiments’, a modular software package designed to automate complex optical workflows. This framework streamlines experiments, including photoluminescence mapping, stitched imaging, and single-photon correlation measurements, enabling high-throughput data acquisition and improved experimental consistency. By integrating hardware control with automated analysis routines, can PhoQuPy accelerate the discovery and benchmarking of novel quantum emitters and facilitate more robust materials characterization?

Unveiling Quantum Behavior: The Need for Automated Material Characterization

The investigation of quantum materials fundamentally relies on detailed optical characterization, yet this process has historically been hampered by its time-consuming and largely manual nature. Determining properties like reflectivity, transmittance, and absorption – crucial indicators of a material’s quantum behavior – often demands painstaking alignment of optical components, meticulous data collection over extended periods, and significant operator expertise. This reliance on manual techniques not only restricts the speed at which new materials can be screened but also introduces potential for human error and inconsistencies between experiments. Consequently, the advancement of quantum material discovery is frequently bottlenecked by the limitations inherent in traditional optical measurement workflows, highlighting the need for innovative solutions to accelerate and refine this essential research process.

The painstaking process of characterizing quantum materials often relies on manual data acquisition, a methodology susceptible to inconsistencies stemming from human error and subjective interpretation. Each measurement, dependent on individual technique, introduces subtle variations that can obscure genuine material properties and hinder comparative analysis. More critically, this manual approach severely limits the throughput of material discovery; the time required to meticulously collect and analyze data for even a single sample dramatically slows the pace of innovation. Consequently, the identification and optimization of novel quantum materials, essential for advancements in fields like superconductivity and quantum computing, is bottlenecked not by theoretical understanding, but by the practical limitations of experimental data gathering.

The advancement of quantum material discovery is fundamentally bottlenecked by the laborious and time-consuming nature of materials characterization; an automated framework addresses this critical need by enabling high-throughput experimentation and data acquisition. Such a system doesn’t merely speed up the process, but drastically improves data quality through minimized human error and enhanced consistency. By automating precise optical measurements – and integrating them with data analysis pipelines – researchers can systematically explore vast material spaces, identify promising candidates with greater efficiency, and ultimately accelerate the development of novel quantum technologies. This transition towards automation facilitates not only the sheer volume of research but also ensures reproducibility, a cornerstone of scientific validity, by standardizing procedures and reducing subjective interpretations of experimental results.

Constructing the Analytical Engine: Core System Components

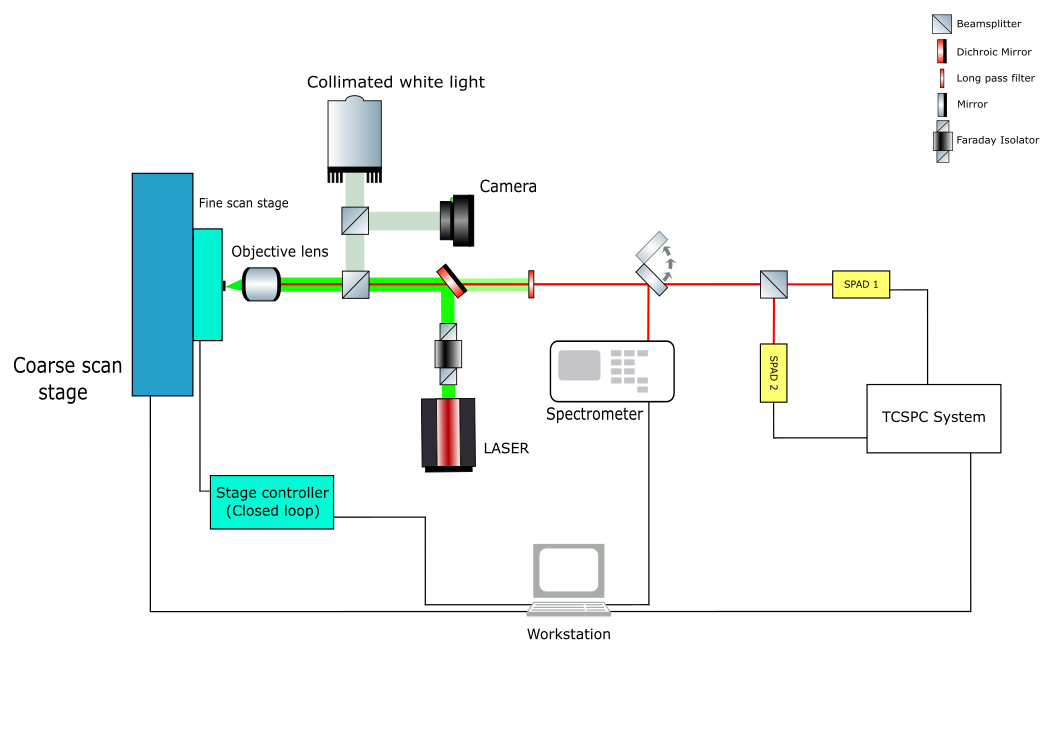

The system’s control is managed by a Python framework implemented to address the complexity of coordinating heterogeneous optical equipment. This framework provides a unified interface for controlling and synchronizing components such as mechanical stages, spectrometers, cameras, and single-photon detectors. It abstracts low-level communication protocols – including serial, USB, and network interfaces – to enable programmatic control of hardware functions like positioning, triggering, and data acquisition. The framework’s modular design facilitates integration of new hardware and allows for customized sequencing of operations, ensuring precise timing and coordination between devices for complex experimental protocols.
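A unified interface of this kind can be sketched as a small abstract base class that every device driver implements. This is a minimal illustration under stated assumptions, not PhoQuPy's actual class hierarchy: the names `Instrument`, `SimulatedStage`, `SimulatedSpectrometer`, and `acquire_at` are hypothetical, and the devices are simulated so the example runs without hardware.

```python
from abc import ABC, abstractmethod


class Instrument(ABC):
    """Hypothetical common interface; real drivers would wrap serial/USB/network I/O."""

    @abstractmethod
    def connect(self) -> None:
        ...

    @abstractmethod
    def close(self) -> None:
        ...

    # Context-manager support guarantees the device is released on errors.
    def __enter__(self):
        self.connect()
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()
        return False


class SimulatedStage(Instrument):
    def __init__(self):
        self.connected = False
        self.position = (0.0, 0.0)

    def connect(self):
        self.connected = True

    def close(self):
        self.connected = False

    def move_to(self, x, y):
        if not self.connected:
            raise RuntimeError("stage is not connected")
        self.position = (float(x), float(y))


class SimulatedSpectrometer(Instrument):
    def __init__(self):
        self.connected = False

    def connect(self):
        self.connected = True

    def close(self):
        self.connected = False

    def acquire(self, n_pixels=4):
        if not self.connected:
            raise RuntimeError("spectrometer is not connected")
        return [0.0] * n_pixels  # placeholder spectrum


def acquire_at(stage, spectrometer, points):
    """Move to each point in turn and record one spectrum there."""
    results = []
    for x, y in points:
        stage.move_to(x, y)
        results.append((stage.position, spectrometer.acquire()))
    return results
```

Because the sequencing code only depends on the shared interface, swapping a simulated device for a real driver would not require changes to `acquire_at`.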

Precise sample positioning and scanning are achieved through the integration of two distinct mechanical stage systems: the NanoMax Stage and the Zaber Stage. The NanoMax Stage provides high-resolution movement for detailed sample manipulation, while the Zaber Stage offers a larger range of motion suitable for broad area scanning. Both stages are computer-controlled, enabling automated movement profiles and synchronization with other optical components. Stage control software allows for the definition of scan patterns, velocity control, and precise positioning accuracy, crucial for repeatable experiments and data acquisition across defined sample areas. These stages support various sample holders and are compatible with the optical pathways of the system’s spectrometers and detectors.
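A scan pattern of the kind described above can be computed as a list of coordinates before any hardware moves. The sketch below generates a serpentine (boustrophedon) raster, which reverses direction on alternate rows to avoid long fly-back moves. The function name and parameters are illustrative assumptions and do not correspond to any stage vendor's API.

```python
def serpentine_grid(x0, y0, step, nx, ny):
    """Return (x, y) scan points for an nx-by-ny grid, alternating row direction."""
    points = []
    for row in range(ny):
        # Even rows run left to right, odd rows right to left.
        cols = range(nx) if row % 2 == 0 else range(nx - 1, -1, -1)
        for col in cols:
            points.append((x0 + col * step, y0 + row * step))
    return points


if __name__ == "__main__":
    for point in serpentine_grid(0, 0, 1, 3, 2):
        print(point)
```

The resulting list can be handed to whatever routine moves the stage and triggers acquisition at each point.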

Optical signal acquisition is performed using a combination of spectrometers, cameras, and single-photon detectors. The Andor Kymera 328i spectrometer provides spectral analysis of emitted light. Complementing this, the Newton iDUS and Princeton Teledyne ProEM HS-512 cameras capture two-dimensional spatial data of optical signals. For measurements requiring extremely low light levels, Excelitas SPCM-AQRH single-photon detectors are integrated, enabling the detection of individual photons and facilitating sensitive quantitative analysis where traditional cameras lack sufficient sensitivity.
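Single-photon correlation measurements are commonly built from the arrival times recorded on two such detectors: the delays between detections on one channel and the other are histogrammed, and a dip at zero delay indicates a single emitter. The sketch below shows that histogramming step only, assuming sorted timestamp lists in integer time units. The function name and signature are hypothetical and are not taken from PhoQuPy.

```python
from bisect import bisect_left, bisect_right


def coincidence_histogram(t_a, t_b, bin_width, max_delay):
    """Histogram delays (t_b - t_a) that fall in [-max_delay, +max_delay).

    t_a and t_b must be sorted lists of arrival times in the same units.
    """
    n_bins = int(round(2 * max_delay / bin_width))
    counts = [0] * n_bins
    for ta in t_a:
        # Only look at channel-B events close enough to matter.
        lo = bisect_left(t_b, ta - max_delay)
        hi = bisect_right(t_b, ta + max_delay)
        for tb in t_b[lo:hi]:
            idx = int((tb - ta + max_delay) // bin_width)
            if 0 <= idx < n_bins:
                counts[idx] += 1
    return counts
```

Normalising these counts to the uncorrelated level at long delays would give an estimate of the second-order correlation function; that step is omitted here.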

Galvanometer mirrors, or galvo mirrors, facilitate rapid and precise beam steering through the deflection of a mirrored surface controlled by electromagnets. This technology enables significantly faster scanning speeds compared to traditional mechanical beam steering methods, directly impacting data acquisition rates. The system utilizes these mirrors to direct the optical beam across the sample surface, allowing for data collection at kilohertz frequencies or higher. This is crucial for applications requiring high temporal resolution, such as time-resolved spectroscopy and high-speed imaging, where capturing dynamic processes necessitates rapid beam positioning and data capture.

![A galvo scanning setup, as detailed in reference [4], utilizes moving mirrors to direct a beam across a sample.](https://arxiv.org/html/2602.04505v1/Fig7.png)

Addressing Measurement Artifacts: Precision and Data Integrity

Piezo-stage hysteresis describes the lag between applied voltage and actual physical displacement in piezoelectric positioning systems. This phenomenon introduces non-linearity and positional error during scanning procedures, as the stage’s response to a command depends on its prior movement history. To mitigate these errors, careful calibration routines are essential, typically involving characterizing the hysteresis curve and implementing correction algorithms within the control software. These algorithms often employ feedforward compensation, pre-calculating the required voltage adjustments based on the stage’s current position and desired trajectory, or feedback loops utilizing high-resolution encoders to measure actual position and correct for deviations. Precise control strategies are vital for maintaining accuracy and repeatability in experiments reliant on precise stage positioning.
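The feedback approach can be illustrated with a toy model. In the sketch below, the simulated stage under-travels by a direction-dependent factor, which is a crude stand-in for real piezo hysteresis, and a loop repeatedly reads the encoder and commands the remaining error. The class, gains, and tolerance are assumptions chosen for illustration; they do not describe the NanoMax stage or PhoQuPy's correction routine.

```python
class ToyPiezoStage:
    """Toy stage whose actual travel is a fraction of the commanded step."""

    def __init__(self, gain_forward=0.9, gain_backward=0.8):
        self.position = 0.0
        self.gain_forward = gain_forward
        self.gain_backward = gain_backward

    def step(self, delta):
        # Direction-dependent response mimics history-dependent positioning error.
        gain = self.gain_forward if delta >= 0 else self.gain_backward
        self.position += gain * delta

    def read_encoder(self):
        return self.position


def move_closed_loop(stage, target, tolerance=0.005, max_iterations=50):
    """Iterate until the encoder reading is within tolerance of the target.

    Returns the number of corrective steps that were issued.
    """
    for iteration in range(max_iterations):
        error = target - stage.read_encoder()
        if abs(error) <= tolerance:
            return iteration
        stage.step(error)
    raise RuntimeError("stage did not converge within max_iterations")
```

A single open-loop command to this toy stage lands 10% short of the target, whereas the closed loop shrinks the error by the same factor on every iteration until it falls below the tolerance.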

Cosmic rays, high-energy particles originating from outside the Earth’s atmosphere, interact with the charge-coupled device (CCD) sensors used in spectral imaging, creating spurious signals that appear as bright spots or lines in the recorded spectra. These artifacts can obscure or mimic actual spectral features, compromising data accuracy. The Double-Acquisition Method addresses this issue by acquiring two separate images of the same sample in quick succession. Cosmic ray events are unlikely to occur at the exact same pixel location in both exposures; therefore, by comparing the two images and identifying pixels that differ significantly, cosmic ray artifacts can be effectively flagged and removed, resulting in cleaner and more reliable spectral data.
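The comparison step can be sketched as follows for two spectra stored as plain lists. Pixels whose values differ by more than a threshold are treated as cosmic-ray hits and replaced by the lower of the two readings; all other pixels are averaged. The threshold value and the choice to keep the minimum are assumptions made for this illustration, not details confirmed by the source.

```python
def remove_cosmic_rays(frame_a, frame_b, threshold):
    """Combine two exposures of the same spectrum, rejecting cosmic-ray spikes.

    Returns (cleaned_spectrum, indices_of_flagged_pixels).
    """
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same length")
    cleaned = []
    flagged = []
    for i, (a, b) in enumerate(zip(frame_a, frame_b)):
        if abs(a - b) > threshold:
            # A spike only adds counts, so the smaller value is the clean one.
            cleaned.append(min(a, b))
            flagged.append(i)
        else:
            cleaned.append((a + b) / 2)
    return cleaned, flagged
```

In practice the threshold would be tied to the expected shot and read noise, so that genuine fluctuations between exposures are not flagged.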

Stitched imaging extends the observable area beyond the limitations of the detector’s field of view by assembling multiple, overlapping images into a single, high-resolution map. The system implements this through the MIST (Microscopy Image Stitching Tool) algorithm, which registers neighbouring tiles against one another and blends them to minimize seam lines. This process allows for the creation of large-area maps suitable for characterizing samples exceeding the physical boundaries of individual detector exposures, effectively expanding the system’s imaging capacity.
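The registration idea can be shown with two horizontally adjacent tiles. The sketch below searches exhaustively for the overlap width at which the right edge of one tile best matches the left edge of the next, then joins the tiles. It is a simplified stand-in: production tools generally rely on faster Fourier-based registration and handle offsets in both axes, and the function names here are hypothetical.

```python
def best_overlap(left, right, min_overlap=1):
    """Return the overlap width (in columns) with the lowest mean squared difference."""
    width_left = len(left[0])
    max_overlap = min(width_left, len(right[0]))
    best_width, best_score = None, float("inf")
    for overlap in range(min_overlap, max_overlap + 1):
        score = 0.0
        for row_l, row_r in zip(left, right):
            for k in range(overlap):
                diff = row_l[width_left - overlap + k] - row_r[k]
                score += diff * diff
        score /= overlap * len(left)  # normalise so wide overlaps are not penalised
        if score < best_score:
            best_width, best_score = overlap, score
    return best_width


def stitch_rows(left, right, overlap):
    """Join two tiles, dropping the duplicated columns from the right tile."""
    return [row_l + row_r[overlap:] for row_l, row_r in zip(left, right)]


if __name__ == "__main__":
    scene = [[0, 1, 4, 9, 16, 25, 36, 49],
             [5, 3, 8, 1, 9, 2, 7, 4]]
    left = [row[:5] for row in scene]
    right = [row[3:] for row in scene]
    overlap = best_overlap(left, right)
    print(overlap, stitch_rows(left, right, overlap) == scene)
```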

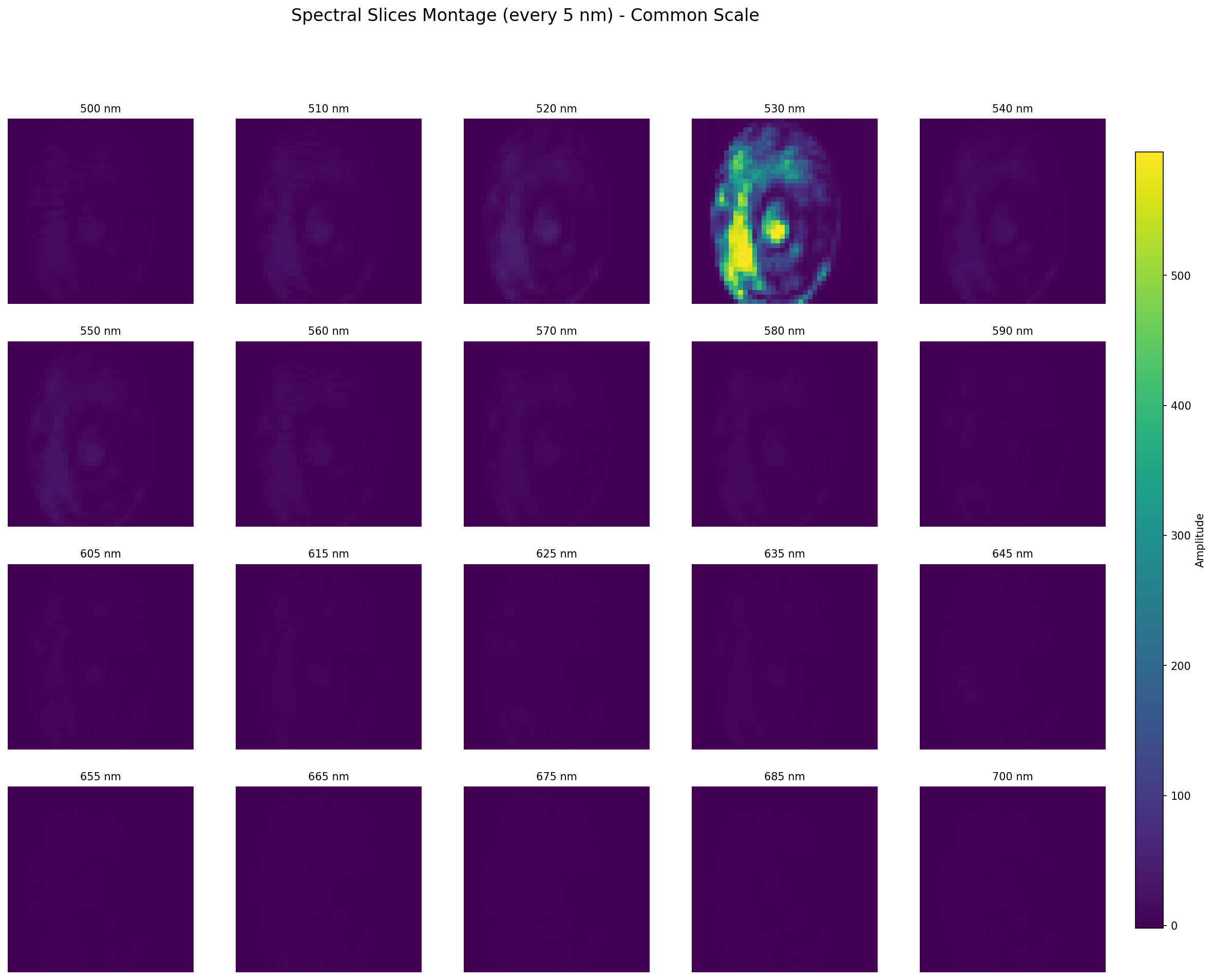

Hyperspectral imaging, facilitated by the Nireos Gemini Interferometer, acquires detailed spectral data for material characterization by capturing images across numerous contiguous wavelengths. This technique allows for the identification of materials based on their unique spectral signatures. To optimize computational efficiency during testing and data processing, a Discrete Fourier Transform (DFT) grid size of 40×40 is employed. This reduced grid size balances data resolution with processing speed, enabling faster analysis of the acquired hyperspectral data without significant loss of critical information.
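In interferometer-based hyperspectral imaging, each pixel records an interferogram as the optical delay is scanned, and the spectrum at that pixel is recovered by a Fourier transform along the delay axis. The sketch below applies a direct discrete Fourier transform to a single pixel's interferogram. It assumes uniformly spaced delay steps and omits apodisation, phase correction, and wavelength calibration; the function name is illustrative.

```python
import cmath
import math


def interferogram_to_spectrum(samples):
    """Return DFT magnitudes (bins 0..N/2) of a mean-subtracted interferogram."""
    n = len(samples)
    mean = sum(samples) / n
    centred = [s - mean for s in samples]  # remove the constant background
    magnitudes = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(centred))
        magnitudes.append(abs(acc))
    return magnitudes


if __name__ == "__main__":
    # Synthetic interferogram: one spectral line gives one cosine in delay.
    signal = [1 + math.cos(2 * math.pi * 5 * i / 64) for i in range(64)]
    spectrum = interferogram_to_spectrum(signal)
    print(spectrum.index(max(spectrum)))
```

Repeating this for every pixel yields the full spectral cube, which is why the number of pixels processed has a direct effect on analysis time.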

Expanding the Analytical Horizon: Material Exploration and Discovery

This novel framework facilitates a comprehensive investigation of a broad spectrum of quantum materials, extending beyond traditional silicon-based systems. Researchers can now systematically explore the unique optical properties of materials like hexagonal boron nitride (hBN), silicon nitride (SiN), tungsten diselenide (WSe2), and even colloidal quantum dots – nanocrystals exhibiting quantum mechanical behavior. By providing a unified platform for analysis, the system allows for direct comparison of these diverse materials, potentially revealing previously obscured relationships between composition, structure, and performance, and ultimately accelerating the discovery of new quantum technologies.

The advent of automated data acquisition and analysis represents a substantial leap forward in the field of quantum materials discovery. Traditional materials research often relies on manual data collection and processing, a process that is both time-consuming and prone to human error. This new framework bypasses these limitations by employing robotic control and sophisticated algorithms to rapidly gather and interpret experimental results. Consequently, researchers can now explore a vastly larger parameter space and assess numerous materials with unprecedented speed, effectively compressing years of work into months. This accelerated throughput not only facilitates the identification of promising new materials but also allows for more detailed characterization and optimization of existing ones, ultimately paving the way for breakthroughs in diverse technological applications.

A cornerstone of reliable materials research lies in the ability to meticulously regulate experimental conditions, and this framework delivers precisely that. By maintaining tight control over parameters such as temperature, excitation power, and measurement timing, the system ensures that observed phenomena are not simply artifacts of inconsistent setup. This level of control isn’t merely about avoiding errors; it fundamentally enables quantitative comparisons between diverse materials – hBN, SiN, WSe2, and colloidal quantum dots, for example – allowing researchers to discern intrinsic differences in their properties. The resulting data is thus highly reproducible, forming a solid foundation for theoretical modeling and ultimately accelerating the discovery of materials with tailored characteristics. This rigorous approach transforms materials analysis from a descriptive exercise into a predictive science.

The system’s sensitivity is demonstrated by its ability to resolve photon lifetimes reliably even at a relatively low signal-to-noise ratio: bi-exponential lifetime curves were fitted with roughly one detected photon for every 20 excitation pulses. This sensitivity stems from optimized data acquisition and analysis protocols, allowing for accurate characterization of quantum materials with minimal light output. The ability to extract meaningful data from such faint signals is crucial for studying emerging materials where luminescence may be intrinsically weak, or where sample volumes are limited, and it helps accelerate discovery in areas like nanoscale optoelectronics and quantum information science.
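A bi-exponential decay has the form $I(t) = a_1 e^{-t/\tau_1} + a_2 e^{-t/\tau_2}$. One simple way to fit it, sketched below, is to try candidate lifetime pairs from a grid and solve for the two amplitudes by linear least squares at each pair. This is an illustrative approach under stated assumptions, not the fitting routine used in the source: it ignores Poisson weighting, background counts, and the instrument response, and the function name and grid are hypothetical.

```python
import math


def fit_biexponential(times, counts, tau_grid):
    """Grid-search two lifetimes; solve amplitudes by linear least squares.

    Returns (residual, a1, tau1, a2, tau2) with tau1 < tau2.
    tau_grid must be sorted in increasing order.
    """
    best = None
    for i, tau1 in enumerate(tau_grid):
        for tau2 in tau_grid[i + 1:]:
            e1 = [math.exp(-t / tau1) for t in times]
            e2 = [math.exp(-t / tau2) for t in times]
            # Normal equations for the 2x2 linear amplitude problem.
            s11 = sum(u * u for u in e1)
            s22 = sum(v * v for v in e2)
            s12 = sum(u * v for u, v in zip(e1, e2))
            b1 = sum(u * y for u, y in zip(e1, counts))
            b2 = sum(v * y for v, y in zip(e2, counts))
            det = s11 * s22 - s12 * s12
            if abs(det) < 1e-12:
                continue  # basis functions are effectively identical
            a1 = (b1 * s22 - b2 * s12) / det
            a2 = (b2 * s11 - b1 * s12) / det
            residual = sum((y - a1 * u - a2 * v) ** 2
                           for y, u, v in zip(counts, e1, e2))
            if best is None or residual < best[0]:
                best = (residual, a1, tau1, a2, tau2)
    return best
```

With real photon-counting data, a continuous optimiser with appropriate noise weighting would normally refine the grid result.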

The development of PhoQuPy exemplifies a systematic approach to understanding complex quantum systems. This framework isn’t merely about automating tasks; it’s about establishing a rigorous, repeatable methodology for exploring photoluminescence and correlation phenomena. As Max Planck stated, “An appeal to the authority of science is not an argument, but an expression of opinion.” This sentiment underscores the need for frameworks like PhoQuPy, which move beyond subjective observation and toward data-driven analysis. By meticulously controlling experimental parameters and automating data acquisition, researchers can build a more objective understanding of quantum material properties, verifying hypotheses through repeatable experiments and solidifying the foundation of scientific knowledge.

Future Horizons

The automation presented by PhoQuPy, while a step towards standardized data acquisition in quantum materials research, merely addresses the surface of a deeper challenge. Much like early microscopes revealed a world previously unseen but offered limited quantitative rigor, this framework highlights the limitations of relying on bespoke experimental setups. The true bottleneck isn’t necessarily the speed of data collection, but the propagation of tacit knowledge (the ‘feel’ for optimal parameters, the subtle corrections made during observation) that is often lost when transitioning from skilled experimenter to automated system. Future iterations must incorporate active learning algorithms, allowing the framework to iteratively refine its protocols, effectively ‘teaching itself’ to perform experiments with increasing proficiency.

One anticipates a convergence with techniques borrowed from biological imaging. Just as super-resolution microscopy overcomes the diffraction limit, future quantum optics automation could integrate computational methods to enhance signal extraction from noisy detectors. The current emphasis on photoluminescence mapping resembles a ‘static’ image of the quantum system. However, true understanding requires capturing ‘movies’ – time-resolved measurements that reveal the dynamics of quantum states. This necessitates synchronization with more complex cryogenic control systems and potentially, feedback loops driven by real-time data analysis.

Ultimately, the long-term viability of such frameworks hinges on fostering a community-driven ecosystem. Like the open-source nature of Python itself, a shared repository of experimental protocols, analysis tools, and data standards will be crucial. This collaborative approach will allow researchers to move beyond isolated observations and towards a more holistic, predictive understanding of quantum materials – a process resembling the construction of a complex, self-correcting model of the universe.

Original article: https://arxiv.org/pdf/2602.04505.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 19:41