Fragile Vacuum: Quantizing Fields in Anti-de Sitter Space

A new analysis explores the quantum stability of empty space within the unique geometry of Anti-de Sitter spacetime.

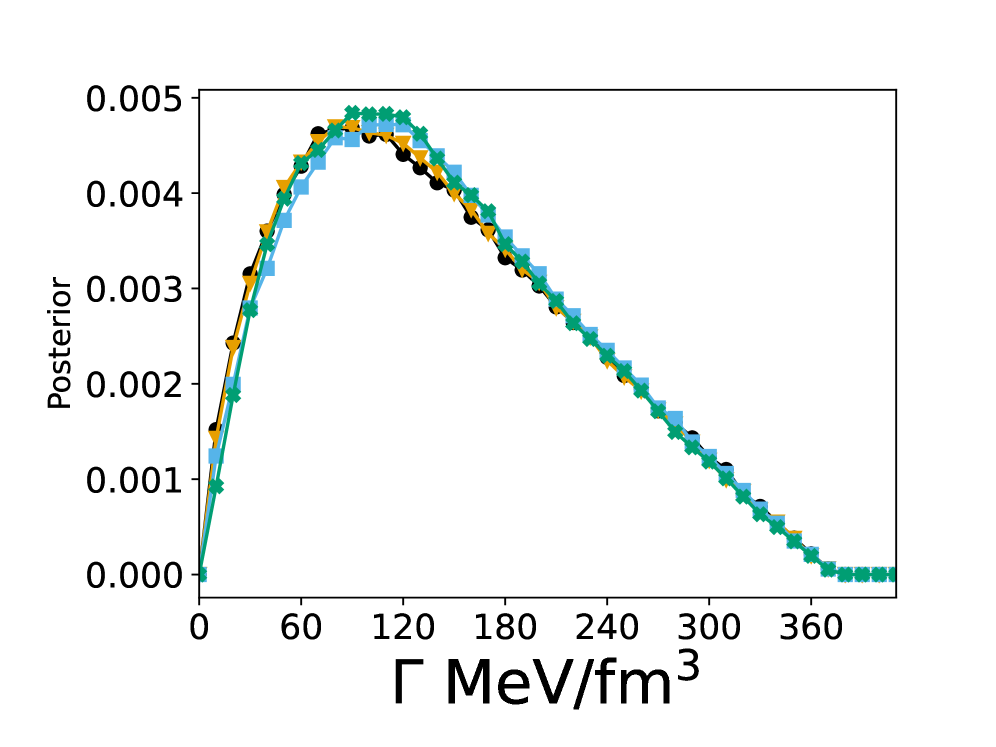

New research extends theoretical models of extremely dense matter at high temperatures to examine how many-body forces and hyperons affect its behavior, with implications for our understanding of neutron star structure.

![Analysis of quasiparticle tunneling during layer sliding shows that the intervalley-filtered real-space modulations [latex]\rho^{\text{filtered}}_{l^{\prime}\sigma^{\prime},l\sigma}(\omega,\mathbf{r})[/latex], evaluated at [latex]\omega=0.2~\mathrm{eV}[/latex] above half filling with [latex]m=0.05~\mathrm{eV}[/latex], exhibit charge variations, quantified by wavefront differentials, that characterize the stacking configuration and the impurity-channel dynamics.](https://arxiv.org/html/2602.06397v1/x2.png)

New research reveals that the emergence of wavefront dislocations in bilayer honeycomb lattices is governed by the material’s intrinsic pseudospin texture, challenging previous assumptions about topological charge.

![For the one-dimensional transverse-field Ising model at [latex] J=g=1 [/latex] and [latex] \beta=1 [/latex] with [latex] L=512 [/latex], the locality-dependent von Neumann entropy densities [latex] S_{\mathrm{vN}}[\rho_{L|\ell}^{\mathrm{tot}}]/\ell^{d} [/latex] and [latex] S_{\mathrm{vN}}[\rho_{L|\ell}^{\bm{r}=\bm{0}}]/\ell^{d} [/latex] converge toward the exact thermodynamic entropy density in the thermodynamic limit. The results are obtained from [latex] 10^5 [/latex] Monte Carlo samples, and the inset shows the standard deviation at [latex] \ell=8 [/latex]. Imaginary-time evolution uses a second-order Trotter decomposition with step size [latex] \delta\beta=0.01 [/latex] and truncation threshold [latex] 10^{-14} [/latex].](https://arxiv.org/html/2602.06657v1/x2.png)

A new theoretical framework rigorously establishes the second law of thermodynamics within closed quantum systems, addressing a long-standing challenge in statistical mechanics.
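The second-order Trotter step mentioned in the Ising-model caption can be illustrated at toy scale. The sketch below is a minimal NumPy example built on our own assumptions: the Hamiltonian convention [latex]H=-J\sum_i Z_iZ_{i+1}-g\sum_i X_i[/latex], open boundaries, a 6-site chain and dense matrices. It builds [latex]e^{-\beta H}[/latex] from repeated symmetric (second-order) Trotter steps with [latex]\delta\beta=0.01[/latex] and compares the resulting entropy density with exact diagonalization. It is not the study's method. It omits the truncation threshold, the Monte Carlo sampling and the subsystem entropies, and all helper names are hypothetical.

```python
import numpy as np

def tfim_parts(L, J=1.0, g=1.0):
    """Split H = -J sum_i Z_i Z_{i+1} - g sum_i X_i (open chain, an assumption)
    into the diagonal ZZ energies and the dense transverse-field matrix."""
    dim = 2 ** L
    bits = (np.arange(dim)[:, None] >> np.arange(L)) & 1
    z = 1 - 2 * bits                                  # Z eigenvalues +1/-1, shape (dim, L)
    e_zz = -J * np.sum(z[:, :-1] * z[:, 1:], axis=1)  # diagonal of H_ZZ
    x = np.array([[0.0, 1.0], [1.0, 0.0]])
    h_x = np.zeros((dim, dim))
    for i in range(L):
        op = np.array([[1.0]])
        for j in range(L):
            op = np.kron(op, x if j == i else np.eye(2))
        h_x -= g * op
    return e_zz, h_x

def trotter_step(L, e_zz, g, dtau):
    """Second-order step exp(-dtau/2 H_ZZ) exp(-dtau H_X) exp(-dtau/2 H_ZZ)."""
    c, s = np.cosh(g * dtau), np.sinh(g * dtau)
    site = np.array([[c, s], [s, c]])   # exp(+dtau*g*X) on a single site
    field = np.array([[1.0]])
    for _ in range(L):
        field = np.kron(field, site)    # exp(-dtau H_X) factorizes over sites
    half = np.exp(-0.5 * dtau * e_zz)   # exp(-dtau/2 H_ZZ) is diagonal
    return half[:, None] * field * half[None, :]

def entropy(p):
    """Shannon/von Neumann entropy in nats of a probability vector."""
    p = p[p > 1e-300]
    return float(-np.sum(p * np.log(p)))

def thermal_entropy_density(L=6, J=1.0, g=1.0, beta=1.0, dtau=0.01):
    e_zz, h_x = tfim_parts(L, J, g)
    steps = int(round(beta / dtau))
    rho = np.linalg.matrix_power(trotter_step(L, e_zz, g, dtau), steps)
    rho /= np.trace(rho)                # normalized Trotterized e^{-beta H}
    s_trotter = entropy(np.linalg.eigvalsh(rho)) / L
    # Exact reference from full diagonalization (feasible only for small L)
    w = np.linalg.eigvalsh(np.diag(e_zz) + h_x)
    boltz = np.exp(-beta * (w - w.min()))
    s_exact = entropy(boltz / boltz.sum()) / L
    return s_trotter, s_exact

s_trotter, s_exact = thermal_entropy_density()
print(s_trotter, s_exact)
```

The symmetric splitting is used because its error per unit imaginary time scales as [latex]\delta\beta^2[/latex]. This is the property that makes a step of 0.01 a reasonable choice.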

Relativistic heavy-ion collisions generate extremely strong magnetic fields that can significantly alter the behavior of the quark-gluon plasma formed in these collisions.

![The analysis shows that for distinguishable particles initially separated by a wall, there are [latex]2N\ln 2[/latex] nats of information about which side each particle occupies. This information is lost when the wall is removed and appears instead as a mixing entropy of [latex]2kN\ln 2[/latex]. For indistinguishable particles, or particles that were already freely intermixed, there is no such information difference, reflecting a fundamental equivalence in the observer's knowledge of particle positions across these scenarios.](https://arxiv.org/html/2602.06505v1/figure_1.png)

New research demonstrates that the long-standing Gibbs paradox isn’t a flaw in classical statistical mechanics, but rather stems from how we understand entropy and information itself.
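The numbers in the Gibbs-paradox caption follow from counting one left/right bit per particle. The following is a minimal Python sketch, assuming N particles on each side of the wall (2N in total) and identifying thermodynamic entropy with Boltzmann's constant times information measured in nats. The function names are hypothetical. The zero returned for indistinguishable particles simply encodes the caption's claim rather than deriving it.

```python
import math

# Exact SI values of the Boltzmann and Avogadro constants
K_B = 1.380649e-23      # J/K
N_A = 6.02214076e23     # 1/mol

def which_side_information_nats(n_per_side, distinguishable=True):
    """Information (in nats) about which side of the wall each particle is on.

    Assumes two compartments of n_per_side particles each. Every distinguishable
    particle carries one binary left/right label, i.e. ln 2 nats. For
    indistinguishable particles there is no label to lose, so return zero.
    """
    if not distinguishable:
        return 0.0
    return 2 * n_per_side * math.log(2)

def mixing_entropy(n_per_side, distinguishable=True, k=K_B):
    """Mixing entropy in J/K: Boltzmann's constant times the lost information in nats."""
    return k * which_side_information_nats(n_per_side, distinguishable)

# One mole per side gives 2 R ln 2, about 11.53 J/K
print(mixing_entropy(N_A))
# The same information in bits: 2N bits for N particles per side
print(which_side_information_nats(10) / math.log(2))
```

Measuring information in nats keeps the conversion to entropy a single multiplication by k. Dividing by ln 2 instead gives the more familiar count of 2N bits.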

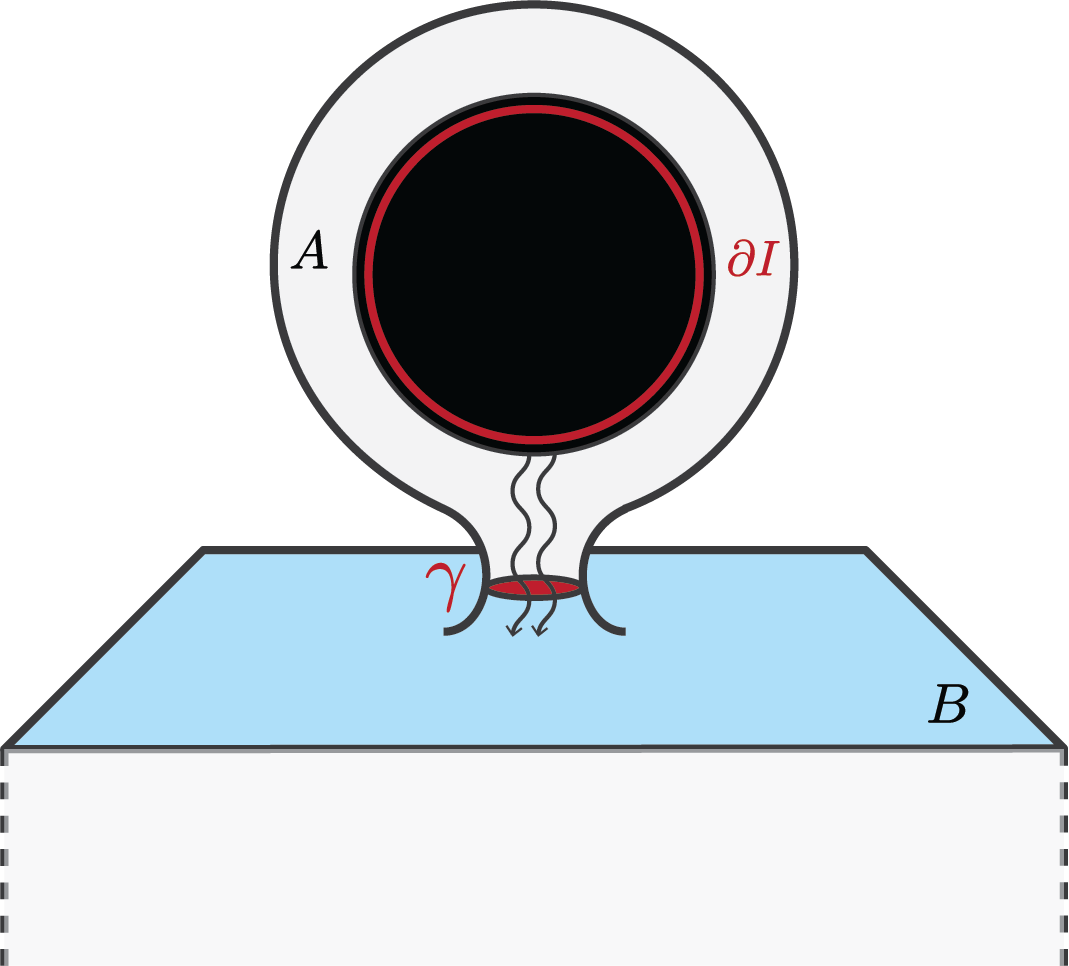

A new analysis suggests the black hole information paradox may be resolved by a complete understanding of holographic principles, eliminating the need for proposed solutions like ‘islands’ or modified Page curves.

![Dark matter axion detection strategies differ by interaction type and experimental parameters, and each method favors its own mass range. Astronomical observations bound the range of interest, with an upper limit of approximately 1 meV and a lower limit of [latex]10^{-22}\,\rm{eV}[/latex] that defines the fuzzy dark matter frontier. The axion mass in turn sets the coherence time, and how that time compares with the experiment duration shapes the tuning strategy.](https://arxiv.org/html/2602.06726v1/Figures/YG_cheat_sheat.png)

A new review details the diverse approaches scientists are taking to find axions, which are leading candidates for dark matter.

New research leverages Bayesian statistics to refine our understanding of the ultra-dense matter residing within neutron stars, revealing the limits of current observational constraints.

![The differential decay rate of [latex]B \to K_{0}^{*}(1430) \ell^{+} \ell^{-} [/latex] is analyzed within both the Standard Model and a scalar leptoquark (LQ) scenario. For the [latex] \tau^{+} \tau^{-} [/latex] channel, the accessible momentum transfer squared is bounded from below by the tau mass ([latex] q^{2} \geq 4m_{\tau}^{2} [/latex]), so the decay spectrum cannot be observed in the low-[latex] q^{2} [/latex] region.](https://arxiv.org/html/2602.06892v1/x3.png)

A new analysis of the B meson's decay into a K₀*(1430) and a lepton pair offers a sensitive probe for deviations from the Standard Model, including possible leptoquark contributions.
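The tau-mass limit in the B-decay caption comes from kinematics. The dilepton invariant mass cannot be smaller than twice the lepton mass, and it cannot exceed the B minus K₀*(1430) mass difference. The following is a minimal Python sketch using approximate masses. These values are our assumption, and the analysis may use different inputs. The K₀*(1430) is a broad resonance, and its width is ignored here. The sketch shows that the τ⁺τ⁻ window spans only roughly 12.6 to 14.9 GeV², while the muon channel opens just above zero.

```python
# Approximate masses in GeV; these values are assumptions for illustration.
M_B0 = 5.27966
M_K0STAR_1430 = 1.425      # broad resonance: width ignored in this sketch
M_MU = 0.1056584
M_TAU = 1.77686

def q2_range(m_lepton, m_parent=M_B0, m_daughter=M_K0STAR_1430):
    """Kinematic q^2 window (GeV^2) for P -> D l+ l-: from (2 m_l)^2 to (m_P - m_D)^2."""
    q2_min = (2.0 * m_lepton) ** 2
    q2_max = (m_parent - m_daughter) ** 2
    return q2_min, q2_max

for name, m in [("mu", M_MU), ("tau", M_TAU)]:
    lo, hi = q2_range(m)
    print(f"{name}: {lo:.3f} <= q^2 <= {hi:.3f} GeV^2 (width {hi - lo:.3f})")
```

Because the τ⁺τ⁻ window spans only about 2 GeV², any leptoquark effect in this channel has to show up at high q².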