Fleeting Order: How Heat Reveals Hidden Symmetry Breaking

![The study of a QCD-like gauge-fermion theory, with three colors and three chiral flavors, reveals how a chiral order parameter [latex]\sigma_0(r)[/latex] evolves with spatial separation and temperature: from a symmetric phase at high temperatures, through a precondensation regime in which the condensate exists only over finite length scales, to a broken phase characterized by a macroscopic condensate at large distances. The domain size ξ delineates the extent of precondensation, and a UV length scale [latex]r_{UV}[/latex] marks its effective vanishing point.](https://arxiv.org/html/2602.11265v1/x1.png)

New research explores how thermal fluctuations drive the formation of short-range condensates in complex quantum systems.

New research connects the subtle world of quantum entanglement to the geometry of spacetime, revealing a deeper link between gravity and quantum information.

Researchers have successfully created and studied molecular rings assembled from triangulene units, opening new avenues for exploring the behavior of quantum spins.

Researchers have developed a mathematical approach to defining where quantum events occur, bridging the gap between quantum mechanics and the principles of relativity.

![The study delineates the range of validity of critical function-space estimates, specifically for [latex]L^{q}(\mathbb{R}^{n})[/latex] potentials, Strichartz pairs [latex](r,p)[/latex], and the Stein-Tomas extension [latex]L^{2}(\mathbb{S}^{n-1})\to L^{p}(\mathbb{R}^{n})[/latex]. It shows that the Kenig-Ruiz-Sogge estimate holds for [latex]p\in[q_{2},\infty)[/latex] in two dimensions and for [latex]p\in[q_{n},p_{n}][/latex] in higher dimensions, clarifying how these bounds depend on dimension.](https://arxiv.org/html/2602.12122v1/x3.png)

New research demonstrates a powerful method for uniquely determining a quantum potential by analyzing how a system evolves between initial and final states.

![The study demonstrates that changes in the Kondo lattice temperature [latex]T_{K}[/latex] induced by applied strain directly modulate the electrical resistance, producing a sign change in its temperature dependence and a positive elastoresistance under a magnetic field, and thereby revealing the interplay between material properties and externally applied forces.](https://arxiv.org/html/2602.12141v1/Fig1.jpg)

New research demonstrates a powerful connection between mechanical strain and the emergence of magnetic entropy in a heavy-fermion material, offering insights into its quantum critical behavior.
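
The quantity behind "elastoresistance" is the fractional resistance change per unit strain, ΔR/R = m ε, measured sweep by sweep at fixed temperature and field. A minimal sketch of extracting m from strain sweeps follows, assuming linear response at small strain and tensile strain counted as positive (conventions vary between groups). The function name and the sweep values are hypothetical illustrations, not the paper's analysis pipeline.

```python
import numpy as np


def elastoresistance(strain, resistance):
    """Fit R(eps) = R0 * (1 + m * eps) by least squares and return (m, R0).

    strain:     1-D array of dimensionless strain values (tensile > 0 is assumed).
    resistance: shape (n_strain,) for one sweep, or (n_temps, n_strain)
                with one strain sweep per row.
    """
    strain = np.asarray(strain, dtype=float)
    resistance = np.asarray(resistance, dtype=float)
    # np.polyfit fits every column of a 2-D y independently, so the strain
    # axis has to be axis 0: transpose (n_temps, n_strain) -> (n_strain, n_temps).
    slope, r0 = np.polyfit(strain, resistance.T, 1)
    # The slope of R vs eps is R0 * m, so divide by the fitted intercept.
    return slope / r0, r0


if __name__ == "__main__":
    # Hypothetical sweeps at three temperatures (illustrative numbers only).
    eps = np.linspace(-2e-4, 2e-4, 9)
    m_true = np.array([-30.0, 5.0, 40.0])
    r0_true = np.array([1.5, 1.2, 1.0])
    R = r0_true[:, None] * (1.0 + m_true[:, None] * eps[None, :])
    m, r0 = elastoresistance(eps, R)
    print("m per temperature:", m)
    print("R0 per temperature:", r0)
```

Repeating the fit at each temperature and field gives m(T, B), the form in which a strain-driven change of the resistance would typically be reported.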

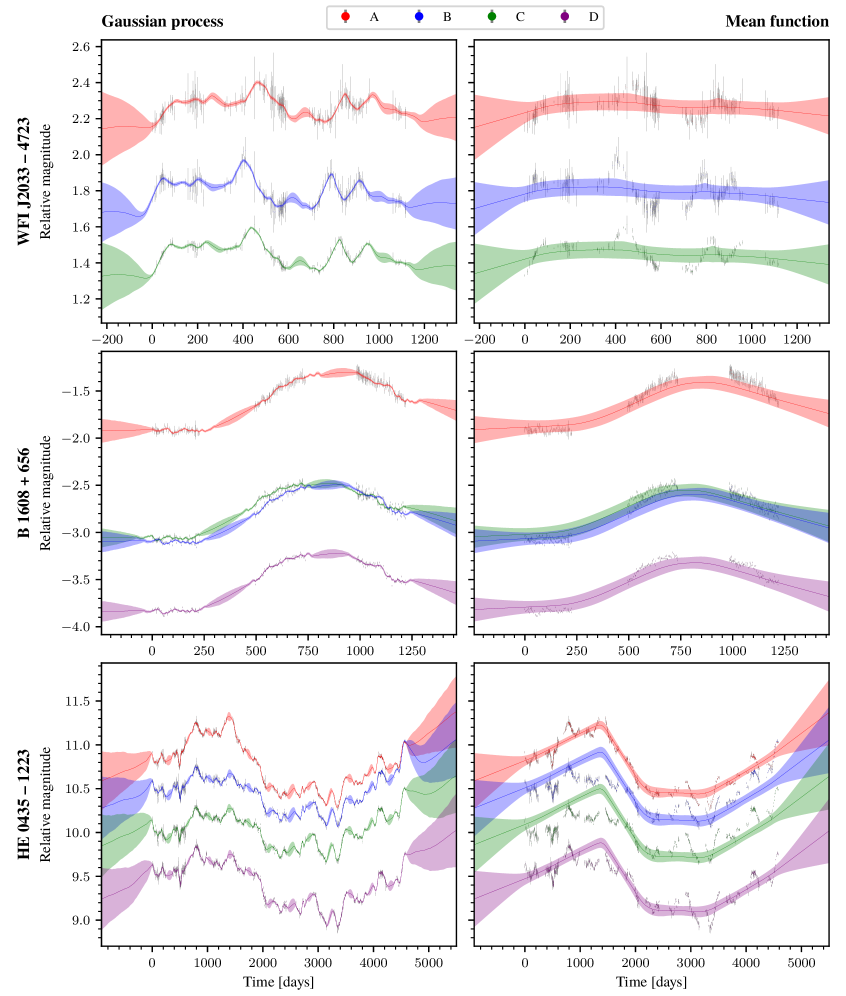

A new Bayesian framework leveraging Gaussian processes is helping astronomers dissect the fluctuating light from quasars to refine our understanding of the universe’s expansion.
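
The teaser does not specify the model, so the sketch below assumes the damped-random-walk (exponential) covariance k(t, t') = σ² exp(−|t − t'|/τ), a common choice for quasar variability. It shows the two ingredients of a Gaussian-process treatment of an irregularly sampled light curve: the marginal likelihood used to infer (σ, τ), and the posterior mean and uncertainty between observations. The paper's kernel, priors, and cosmological inference may differ; all function names and the synthetic data are hypothetical, and centering on the sample mean is a simplification.

```python
import numpy as np


def drw_kernel(t1, t2, sigma, tau):
    """Damped-random-walk covariance sigma^2 * exp(-|t1 - t2| / tau)."""
    dt = np.abs(t1[:, None] - t2[None, :])
    return sigma**2 * np.exp(-dt / tau)


def _factorize(t_obs, y_obs, y_err, sigma, tau):
    """Cholesky factor L of K + diag(err^2) and alpha = (K + diag(err^2))^-1 (y - mean)."""
    K = drw_kernel(t_obs, t_obs, sigma, tau) + np.diag(y_err**2)
    L = np.linalg.cholesky(K)
    resid = y_obs - y_obs.mean()  # simplification: fixed mean, not a fitted parameter
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, resid))
    return L, resid, alpha


def log_marginal_likelihood(t_obs, y_obs, y_err, sigma, tau):
    """Gaussian log-likelihood of the centred light curve under the DRW prior."""
    L, resid, alpha = _factorize(t_obs, y_obs, y_err, sigma, tau)
    return (-0.5 * resid @ alpha
            - np.sum(np.log(np.diag(L)))        # = -0.5 * log det(K + noise)
            - 0.5 * resid.size * np.log(2.0 * np.pi))


def gp_predict(t_obs, y_obs, y_err, t_new, sigma, tau):
    """Posterior mean and standard deviation of the light curve at times t_new."""
    L, _, alpha = _factorize(t_obs, y_obs, y_err, sigma, tau)
    K_s = drw_kernel(t_obs, t_new, sigma, tau)
    mu = y_obs.mean() + K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = sigma**2 - np.sum(v**2, axis=0)  # prior variance k(t, t) = sigma^2
    return mu, np.sqrt(np.maximum(var, 0.0))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical irregularly sampled light curve (days, magnitudes).
    t = np.sort(rng.uniform(0.0, 1000.0, 120))
    err = np.full_like(t, 0.02)
    cov = drw_kernel(t, t, 0.2, 100.0) + np.diag(err**2)
    y = 19.0 + np.linalg.cholesky(cov) @ rng.standard_normal(t.size)

    # Crude maximum-likelihood grid search over tau with sigma held fixed.
    taus = np.logspace(0.5, 3.5, 40)
    logl = [log_marginal_likelihood(t, y, err, 0.2, tau) for tau in taus]
    best_tau = taus[int(np.argmax(logl))]
    mu, sd = gp_predict(t, y, err, np.linspace(0.0, 1000.0, 5), 0.2, best_tau)
    print(f"best tau ~ {best_tau:.1f} days")
    print(np.round(mu, 3), np.round(sd, 3))
```

A full Bayesian analysis would sample (σ, τ) and any mean or time-delay parameters with priors, for example via MCMC, rather than maximizing on a grid; the likelihood function is the piece such a sampler would call.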

![The scaling dimension [latex]\Delta_{\phi}[/latex] is calculated across different [latex]\mathrm{Sp}(10)[/latex] and [latex]\mathrm{Sp}(4)[/latex] models using conformal two-point correlators [latex]\mathscr{C}_T[/latex], revealing its dependence on both [latex]\gamma_{12}[/latex] and [latex]R[/latex]; it consistently approaches the expected value [latex]\Delta_J = 2[/latex] and agrees with the critical point previously identified through crossing symmetry.](https://arxiv.org/html/2602.11255v1/x8.png)

Researchers are using innovative simulations on a ‘fuzzy sphere’ to explore the behavior of quantum systems at the brink of phase transitions.

![The Sondheimer frequency exhibits a clear dependence on doping concentration, varying predictably from the overdoped to the underdoped regime under a perpendicular magnetic field; the relationship is governed by parameters including a perpendicular transfer integral of [latex]3 \times 10^{-3}\,\text{eV}[/latex], a zero phase shift [latex]\eta = 0[/latex], and a layer thickness ratio [latex]d/a = 40[/latex].](https://arxiv.org/html/2602.11252v1/x6.png)

New research demonstrates that subtle changes in electrical resistance under a magnetic field can be used to chart the complex electronic structure of high-temperature superconductors.
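
As background, the standard semiclassical picture of Sondheimer oscillations fits in a few lines. This is the textbook argument, not necessarily the formulation used in the paper. For a field B perpendicular to a film of thickness d, carriers spiral along B, and the resistance oscillates each time the transit time across the film equals an integer number n of cyclotron periods. Here A(k_z) is the Fermi-surface cross-section perpendicular to B, T_c the cyclotron period, and the barred v_z the orbit-averaged velocity along B.

```latex
\begin{aligned}
T_c &= \frac{\hbar^{2}}{eB}\,\frac{\partial A}{\partial E},
\qquad
\bar v_z = -\frac{1}{\hbar}\,\frac{\partial A/\partial k_z}{\partial A/\partial E},\\
n &= \frac{d}{|\bar v_z|\,T_c} = \frac{eBd}{\hbar\,\lvert\partial A/\partial k_z\rvert}
\quad\Longrightarrow\quad
\Delta B = \frac{\hbar}{e\,d}\left|\frac{\partial A}{\partial k_z}\right|_{E=E_F}.
\end{aligned}
```

Because the oscillations are periodic in B rather than in 1/B, they probe ∂A/∂k_z, that is, how the Fermi surface disperses along the field, rather than the area A itself. In the usual treatment the signal is dominated by the k_z at which ∂A/∂k_z is extremal. In a layered metal this dispersion is set by interlayer hopping, which is presumably why a perpendicular transfer integral appears among the parameters in the figure above.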

New research reveals that the fundamental nature of spacetime in loop quantum gravity allows for both fermionic and bosonic behavior in the excitations of the gravitational field.