Author: Denis Avetisyan

New research proposes a way to distinguish between quantum and classical origins of primordial fluctuations by searching for violations of specific inequality constraints in cosmological observations.

This paper presents a falsifiable framework for testing quantum effects in the early universe by leveraging symmetry-protected sectors and analyzing constraints on primordial fluctuations.

While inflationary cosmology successfully explains primordial fluctuations, definitively establishing their quantum origin has remained a challenge due to the ability of classical stochastic models to mimic observed statistics. The paper ‘Was the Early Universe Quantum? Falsifying Classical Stochastic Inflation’ presents a novel falsification-based approach, defining precise classical hypotheses and deriving inequality constraints that must be satisfied if these fluctuations are not quantum in nature. By focusing on symmetry-protected sectors to preserve coherence, the authors demonstrate how violations of these constraints can be probed using realistic cosmological observations, such as large-scale structure and 21 cm surveys. Could this work provide a quantitative pathway to finally distinguish between quantum and classical descriptions of the very early universe?

The Echo of Creation: Quantum Seeds in the Cosmic Dawn

The vast cosmic web of galaxies and voids observed today wasn’t simply ‘built’ from pre-existing conditions; instead, it arose from incredibly subtle disturbances at the very dawn of time. In the standard inflationary picture, these disturbances were quantum fluctuations, the irreducible jitter that Heisenberg’s uncertainty principle imposes on any quantum field, magnified to cosmic scales during the universe’s earliest moments. Though minuscule initially, these seeds, representing slight density variations, became the gravitational attractors around which matter coalesced over billions of years: regions with even slightly higher density pulled in more matter, eventually forming galaxies and the large-scale structures that define the universe. Evidence for these primordial fluctuations lies in the cosmic microwave background, the afterglow of the Big Bang, which records them as tiny temperature variations. It is a snapshot of the universe when it was only about 380,000 years old and the seeds of all structure were still nascent.

The inflationary framework proposes that a fraction of a second after the Big Bang, the universe underwent an extraordinarily rapid expansion, increasing in size by a factor of at least 10^{26}. This expansion was not simply a continuation of the initial Big Bang event. It was a distinct phase of accelerated expansion driven by a hypothetical scalar field, often termed the ‘inflaton’. The inflaton’s nearly constant energy density acted as a source of repulsive gravity, overcoming ordinary gravitational attraction and driving the universe outward at an accelerating rate. When inflation ended, the inflaton decayed and released its energy, reheating the universe and setting up the conditions for the formation of matter and, eventually, the large-scale structures observed today. Crucially, quantum fluctuations within the inflaton field were stretched to cosmic scales during inflation, providing the initial ‘seeds’ for all subsequent structure formation and imprinting the earliest moments of the universe onto the cosmos we observe billions of years later.
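Expansion factors of this size are usually quoted in e-folds, N = \ln(a_{end}/a_{start}), where a is the cosmic scale factor. The short Python sketch below performs only that standard conversion and is not taken from the paper. The helper names are illustrative.

```python
import math


def efolds_from_expansion_factor(factor: float) -> float:
    """Number of e-folds N such that a_end / a_start = e**N."""
    return math.log(factor)


def expansion_factor_from_efolds(n_efolds: float) -> float:
    """Inverse relation: a_end / a_start = exp(N)."""
    return math.exp(n_efolds)


if __name__ == "__main__":
    n = efolds_from_expansion_factor(1e26)
    print(f"a factor of 10^26 is about {n:.1f} e-folds")
    log10_factor = math.log10(expansion_factor_from_efolds(60))
    print(f"60 e-folds is a factor of about 10^{log10_factor:.1f}")
```

A factor of 10^{26} therefore corresponds to roughly 60 e-folds. The same unit appears whenever the article discusses how long coherence must last during inflation.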

Determining whether the primordial fluctuations responsible for the universe’s structure arose from fundamentally quantum origins or a classical process represents a significant challenge in modern cosmology. If truly quantum, these fluctuations would be governed by the inherent uncertainty of the quantum realm, leaving a distinct statistical signature imprinted on the cosmic microwave background and the distribution of galaxies. Conversely, a classical origin suggests these fluctuations were pre-existing disturbances amplified by the inflationary epoch, potentially altering predictions for gravitational waves and non-Gaussianity. Distinguishing between these scenarios requires increasingly precise cosmological observations and theoretical modeling. The answer bears on how the initial conditions of the ΛCDM model are understood, and it may hint at the underlying connection between quantum mechanics and gravity, a bridge that remains elusive despite decades of research.

Classical Alternatives: A Universe Not Necessarily Born of Quantum Foam

Observations of the cosmic microwave background indicate a nearly scale-invariant scalar power spectrum, traditionally explained through inflationary models rooted in quantum field theory. However, classical stochastic models offer an alternative explanation by positing that primordial fluctuations originated from classical random fields rather than quantum fluctuations. These models demonstrate the capability to reproduce the observed power spectrum – characterized by a spectral index n_s \approx 0.96 and an amplitude A_s \approx 2 \times 10^{-9} – without invoking quantum principles. This suggests that the observed features of the early universe may not necessarily require a fundamentally quantum origin, opening avenues for investigation into purely classical cosmological scenarios.
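The spectral index and amplitude quoted above are conventionally defined through a power-law form, \Delta^2(k) = A_s (k/k_*)^{n_s - 1}. The minimal Python sketch below assumes that standard parametrization and a pivot scale k_* = 0.05 Mpc⁻¹. That pivot is the convention Planck uses; the paper may use a different one. The sketch shows how gently the spectrum tilts. With n_s = 1 it would be exactly scale-invariant.

```python
import numpy as np

A_S = 2e-9        # scalar amplitude quoted in the text
N_S = 0.96        # scalar spectral index quoted in the text
K_PIVOT = 0.05    # pivot scale in Mpc^-1 (assumed: Planck's convention)


def dimensionless_power(k, a_s=A_S, n_s=N_S, k_pivot=K_PIVOT):
    """Power-law primordial spectrum Delta^2(k) = A_s * (k / k_pivot)**(n_s - 1)."""
    k = np.asarray(k, dtype=float)
    return a_s * (k / k_pivot) ** (n_s - 1.0)


if __name__ == "__main__":
    ks = np.array([0.005, 0.05, 0.5])  # Mpc^-1, spanning two decades
    for k, p in zip(ks, dimensionless_power(ks)):
        print(f"k = {k:5.3f} Mpc^-1  Delta^2 = {p:.3e}")
```

Across two decades in k, the amplitude stays within ten percent of its pivot value. That is what "nearly scale-invariant" means in practice.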

Classical models proposing alternatives to quantum field theory for primordial fluctuations utilize stochastic classical fields to generate the observed scalar power spectrum. These approaches circumvent the need for a quantum framework by directly postulating a random distribution of initial conditions or fluctuations in a classical field. However, the validity of these models hinges on several critical assumptions regarding the statistical properties of the random fields, including their correlation functions and the enforcement of a positive definite probability distribution. Specifically, these models must demonstrate that the assumed statistical properties are consistent with observational constraints and do not introduce unphysical effects, such as acausality or instabilities, while also addressing the question of initial conditions for the random field itself.
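The minimal Python sketch below shows how a purely classical model can match the two-point statistics. It draws ordinary (classical) Gaussian random amplitudes for a few Fourier modes, with variance set by the power law quoted earlier, and then recovers that spectrum from many realizations. The function name is hypothetical, and each mode is treated as independent: the reality condition relating modes k and −k is ignored. Nothing here addresses locality, causality, or where the random field’s initial conditions come from. Those are exactly the assumptions discussed above.

```python
import numpy as np


def classical_mode_amplitudes(power, n_realizations, rng):
    """Draw classical complex Gaussian amplitudes with <|delta_k|^2> = power.

    Real and imaginary parts are independent with variance power / 2 each,
    so |delta_k|^2 has mean `power`. Modes are treated as independent
    (the reality condition delta_{-k} = conj(delta_k) is ignored).
    """
    power = np.asarray(power, dtype=float)
    scale = np.sqrt(power / 2.0)
    shape = (n_realizations, power.size)
    real_part = rng.normal(size=shape) * scale
    imag_part = rng.normal(size=shape) * scale
    return real_part + 1j * imag_part


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    k = np.array([0.005, 0.05, 0.5])             # Mpc^-1, illustrative modes
    target = 2e-9 * (k / 0.05) ** (0.96 - 1.0)   # power law with A_s, n_s from the text
    deltas = classical_mode_amplitudes(target, 100_000, rng)
    estimated = np.mean(np.abs(deltas) ** 2, axis=0)
    print(estimated / target)  # ratios close to 1: the spectrum is reproduced
```

Matching the power spectrum this way is easy. The paper’s point is that the harder constraints lie elsewhere.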

The Classical Hypothesis posits that a consistent classical description of primordial fluctuations is possible, provided it strictly obeys three fundamental principles: locality, causality, and a positive probability distribution. Locality requires that influences propagate no faster than the speed of light, ruling out instantaneous action at a distance. Causality requires that effects follow their causes in time, eliminating temporal paradoxes. Crucially, the probability distribution governing the random fluctuations must be non-negative everywhere, that is, a genuine probability distribution; negative ‘probabilities’ have no classical interpretation. Satisfying these three conditions is not merely a matter of mathematical consistency. It is a prerequisite for any physically viable classical alternative to quantum explanations of the observed scalar power spectrum.
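Part of the positivity requirement can be checked directly. Any collection of classical random variables, whatever their distribution, has a covariance matrix that is symmetric and positive semidefinite. A proposed set of correlations that fails this test therefore cannot come from any classical probability distribution. The Python sketch below uses a hypothetical helper name and checks only this necessary condition on second moments. It is not the paper’s full test of the Classical Hypothesis.

```python
import numpy as np


def is_valid_classical_covariance(cov, tol=1e-12):
    """Return True if `cov` is symmetric positive semidefinite.

    Every set of classical random variables has a covariance matrix with
    this property, so failing the check rules out all classical joint
    distributions with these second moments (necessary, not sufficient).
    """
    cov = np.asarray(cov, dtype=float)
    if not np.allclose(cov, cov.T):
        return False
    eigenvalues = np.linalg.eigvalsh(cov)
    return bool(eigenvalues.min() >= -tol)


if __name__ == "__main__":
    valid = [[1.0, 0.9], [0.9, 1.0]]    # correlation 0.9: allowed
    invalid = [[1.0, 1.2], [1.2, 1.0]]  # correlation 1.2: impossible classically
    print(is_valid_classical_covariance(valid))    # True
    print(is_valid_classical_covariance(invalid))  # False
```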

The Illusion of Classicality: Decoherence and the Quantum Noise Floor

The inherent limitations of any measurement process establish a ‘classical noise floor’ for primordial fluctuations. This floor isn’t a quantum effect, but rather a consequence of finite observational resolution; any attempt to define the amplitude of fluctuations requires discerning them against a background limited by the smallest measurable scale. Specifically, the uncertainty in determining the fluctuation amplitude is bounded from below, preventing infinitely precise knowledge of the primordial state. This implies a minimum level of uncertainty that exists even in a fully classical description, independent of quantum mechanical principles, and sets a fundamental limit on the precision with which these fluctuations can be characterized.

The classical noise floor in primordial fluctuations is directly related to the conditional variance of observable quantities. The precision with which a fluctuation’s value can be determined is limited by the spread of possible outcomes given a particular measurement. This relationship sets a lower bound on the amplitude of these fluctuations, because a zero amplitude would require determining the fluctuation’s value with infinite precision, which no finite measurement can achieve. Mathematically, the limit is expressed through the conditional variance \sigma^2, the variance of one observable once the value of another is fixed. It shows that the fluctuations cannot be arbitrarily small without exceeding the bounds imposed by the measurement’s inherent uncertainty.
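For jointly Gaussian variables, the conditional variance has a closed form, \sigma^2_{X|Y} = \mathrm{Var}(X) - \mathrm{Cov}(X,Y)^2/\mathrm{Var}(Y), and it does not depend on the observed value of Y. The Python sketch below computes this standard textbook identity and checks it against a Monte Carlo estimate built from the part of X that cannot be predicted from Y. It is not the paper’s specific bound, and the names and numbers are illustrative.

```python
import numpy as np


def gaussian_conditional_variance(var_x, var_y, cov_xy):
    """Var(X | Y) for jointly Gaussian X, Y (independent of the value of Y)."""
    return var_x - cov_xy**2 / var_y


if __name__ == "__main__":
    var_x, var_y, cov_xy = 1.0, 2.0, 1.2
    analytic = gaussian_conditional_variance(var_x, var_y, cov_xy)

    rng = np.random.default_rng(seed=0)
    cov = np.array([[var_x, cov_xy], [cov_xy, var_y]])
    x, y = rng.multivariate_normal([0.0, 0.0], cov, size=200_000).T
    # E[X | Y] = (cov_xy / var_y) * Y, so the residual is what Y cannot predict.
    residual = x - (cov_xy / var_y) * y
    print(analytic, residual.var())  # the two numbers should agree closely
```

The stronger the correlation between X and Y, the smaller the conditional variance. It reaches zero only when the two are perfectly correlated.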

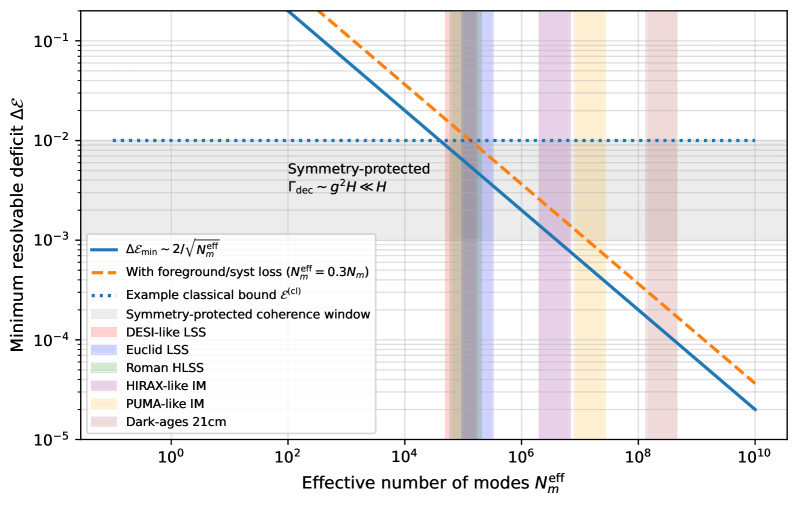

Decoherence, induced by environmental interactions, diminishes quantum coherence within the primordial state, potentially driving the system towards a classical description. However, this effect is not uniform across all sectors. Symmetry-protected sectors exhibit a suppressed decoherence rate Γ_{dec} = g^2 H, where g is the coupling strength and H the Hubble parameter. For weak coupling (g ≪ 1), the rate per Hubble time, Γ_{dec}/H = g^2, is therefore small. This suppression preserves quantum coherence within these sectors, potentially leaving observable signatures in cosmological data. It offers a pathway to probe quantum effects in the early universe despite the overall tendency towards classicality.
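Taking the quoted rate at face value gives a quick estimate of how long coherence survives. This is an illustration, not the paper’s calculation. It assumes a constant Hubble rate and simple exponential decay of coherence, \exp(-\Gamma_{dec} t). With N = H t e-folds, the exponent becomes g^2 N. The Python sketch below uses hypothetical helper names. It computes the remaining coherence and the largest coupling that keeps coherence above 1/e over a window of 1 to 10 e-folds.

```python
import math


def remaining_coherence(g, n_efolds):
    """Coherence fraction after n_efolds, assuming exp(-Gamma_dec * t) decay
    with Gamma_dec = g**2 * H and constant H, so Gamma_dec * t = g**2 * N."""
    return math.exp(-g**2 * n_efolds)


def max_coupling(n_efolds, min_coherence=math.exp(-1)):
    """Largest g that keeps at least `min_coherence` after n_efolds."""
    return math.sqrt(-math.log(min_coherence) / n_efolds)


if __name__ == "__main__":
    for n in (1, 10):
        print(f"N = {n:2d} e-folds: g <= {max_coupling(n):.2f}")
    print(f"g = 0.1 over 10 e-folds keeps {remaining_coherence(0.1, 10):.3f}")
```

Under these assumptions, sustaining coherence for about 10 e-folds requires g of roughly 0.3 or smaller.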

Testing the Quantum Fabric: Bell Inequalities and the Search for Non-Classicality

Bell inequalities, derived from the assumptions of local realism, provide a quantifiable method for distinguishing between quantum mechanical predictions and those of classical physics. These inequalities bound the correlations that can exist between measurements on separated systems, assuming that outcomes are fixed by local hidden variables and that no influence travels faster than light. Violations of Bell inequalities, experimentally confirmed in numerous quantum systems, demonstrate the non-classical nature of these correlations. Applying this framework to cosmology means assessing whether primordial fluctuations, the seeds of large-scale structure, exhibit correlations that violate such inequalities. A violation would point to a quantum origin for these fluctuations and distinguish them from classical, stochastic processes. The strength of this test depends on identifying appropriate observables and accounting for observational limitations inherent in measurements of the early universe, such as cosmic variance.
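The CHSH form of a Bell inequality makes the gap concrete. Any local hidden-variable model is a mixture of local deterministic strategies, each assigning fixed outcomes ±1. Enumerating those strategies therefore gives the classical bound |S| ≤ 2. The quantum correlation for a spin singlet, E(a, b) = −cos(a − b), reaches 2√2 at suitable measurement angles. This Python sketch is the textbook laboratory example, not the cosmological inequalities derived in the paper.

```python
import itertools
import math


def quantum_singlet_correlation(a, b):
    """E(a, b) = -cos(a - b) for spin measurements on a singlet at angles a, b."""
    return -math.cos(a - b)


def chsh(e, a0, a1, b0, b1):
    """CHSH combination S = E(a0,b0) - E(a0,b1) + E(a1,b0) + E(a1,b1)."""
    return e(a0, b0) - e(a0, b1) + e(a1, b0) + e(a1, b1)


def max_local_deterministic_chsh():
    """Max |S| over all local deterministic strategies (outcomes fixed at +/-1)."""
    best = 0
    for a0, a1, b0, b1 in itertools.product((-1, 1), repeat=4):
        s = a0 * b0 - a0 * b1 + a1 * b0 + a1 * b1
        best = max(best, abs(s))
    return best


if __name__ == "__main__":
    s_quantum = chsh(quantum_singlet_correlation,
                     0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
    print(max_local_deterministic_chsh())  # 2: the classical (local) bound
    print(abs(s_quantum))                  # 2*sqrt(2), about 2.828
```

Because mixtures cannot exceed their best component, no local classical model beats 2, while the quantum prediction does.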

Testing quantum mechanics with primordial fluctuations presents specific difficulties arising from the nature of cosmological observables. Laboratory Bell tests rely on the experimenter’s freedom to choose between measurements of non-commuting observables, quantities whose measurement order affects the outcome. Cosmological observations offer little such control, which complicates the formulation of Bell-type inequalities. Furthermore, cosmic variance, the inherent limit on the precision of cosmological measurements that comes from observing only a single realization of the universe, introduces statistical uncertainty that can obscure violations of these inequalities. Even if quantum correlations are present, the signal may be indistinguishable from statistical fluctuations. Establishing quantum behavior in the early universe therefore requires exceptionally precise measurements and innovative strategies.
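Cosmic variance has a simple quantitative form for the angular power spectrum C_\ell. Each multipole \ell has only 2\ell + 1 independent modes on the sky, so even a perfect full-sky measurement of a Gaussian field carries a fractional uncertainty of \sqrt{2/(2\ell+1)}. The Python sketch below assumes full sky coverage and no instrumental noise; real surveys do worse.

```python
import math


def cosmic_variance_fraction(ell):
    """Fractional 1-sigma cosmic variance on C_ell from one full sky:
    Delta C_ell / C_ell = sqrt(2 / (2 * ell + 1))."""
    return math.sqrt(2.0 / (2 * ell + 1))


if __name__ == "__main__":
    for ell in (2, 10, 100, 1000):
        print(ell, round(cosmic_variance_fraction(ell), 3))
```

The uncertainty is largest at low \ell, the largest angular scales, and no improvement in instruments can reduce it.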

The authors develop a strategy for falsifying classical explanations of primordial fluctuations based on inequality constraints applied to observable correlations. The approach targets a Conditional Variance Deficit, denoted ΔƐ, with projected sensitivity in the range of 10⁻² to 10⁻³. Violations of these inequalities could be probed with upcoming large-scale structure surveys and 21 cm intensity mapping experiments. Symmetry-protected sectors strengthen the strategy, and it critically requires quantum coherence to survive for O(1-10) e-folds during the early universe.
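The Python sketch below gives a rough sense of why surveys with very many independent modes are needed. It makes an illustrative assumption: a deficit of size ε is read as a fractional shortfall in a variance. The paper’s ΔƐ may be defined differently. Under that reading, resolving the deficit requires the sample variance itself to be known to precision ε. For N independent Gaussian modes, the fractional error of the sample variance is \sqrt{2/(N-1)}, and the hypothetical helper below simply inverts that relation.

```python
import math


def modes_needed(fractional_precision, n_sigma=1.0):
    """Independent Gaussian modes needed to pin down a variance to the given
    fractional precision at n_sigma, using std(s^2)/sigma^2 = sqrt(2/(N-1))."""
    return 1.0 + 2.0 * (n_sigma / fractional_precision) ** 2


if __name__ == "__main__":
    for eps in (1e-2, 1e-3):
        print(f"eps = {eps:g}: ~{modes_needed(eps):.1e} modes at 1 sigma, "
              f"~{modes_needed(eps, n_sigma=5):.1e} at 5 sigma")
```

This back-of-the-envelope count gives about 2 × 10^4 modes for ε = 10⁻² and 2 × 10^6 for ε = 10⁻³ at one sigma. It ignores noise, masks and non-Gaussianity, but it illustrates why large-volume large-scale structure and 21 cm surveys are the natural targets.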

A Symphony of Quantum and Classical: The Future of Primordial Cosmology

The earliest moments of the universe present a unique challenge to physicists. They demand a reconciliation of quantum mechanics, the realm of uncertainty and superposition, with general relativity, which governs gravity and the large-scale structure of spacetime. Current cosmological models often treat the primordial universe as either purely quantum or effectively classical, but a comprehensive picture likely requires a synergistic approach. This means acknowledging that quantum fluctuations, inherent to the very fabric of spacetime, were not merely initial conditions for a subsequent classical expansion. They actively participated in the transition from a quantum to a classical universe. Understanding how quantum coherence gave way to the classical stochasticity observed in the cosmic microwave background, the afterglow of the Big Bang, requires new theoretical frameworks. Such frameworks must account for the decoherence mechanisms that effectively ‘measured’ quantum states by suppressing interference between alternative outcomes. They must also explain how those states seeded the large-scale structures we observe today. The pursuit of this synergy promises a more complete and accurate description of the universe’s birth and evolution, bridging the gap between the quantum and classical worlds.

The earliest moments of the universe were likely governed by quantum fluctuations: ephemeral, probabilistic variations in the fabric of spacetime. However, as the universe expanded, these quantum effects didn’t simply persist. They underwent decoherence, a process in which interactions with other degrees of freedom make quantum superpositions practically indistinguishable from classical mixtures of definite states. Understanding this transition is crucial, as it bridges the gap between the quantum realm and the classical universe we observe today. Researchers theorize that these decohered fluctuations seeded the large-scale structure of the cosmos through classical stochastic processes: random, yet statistically predictable, fluctuations that amplified initial density variations. Cosmologists hope to unlock a more complete picture of the universe’s infancy and the origin of its intricate patterns by meticulously modeling this interplay. Quantum uncertainty gives rise to classical randomness, and that randomness ultimately gives rise to galaxies and galaxy clusters.

The quest to understand the universe’s earliest moments and the subsequent formation of cosmic structures demands continuous advancement in both theoretical frameworks and observational technologies. Current models, while successful in many respects, reach their limits under the extreme conditions of the primordial universe, and new approaches to modeling quantum gravity and inflationary dynamics are needed. Simultaneously, next-generation telescopes and cosmic microwave background experiments are being developed to probe the universe with unprecedented precision, seeking subtle signatures of primordial fluctuations and topological defects. It is through this synergistic push, refining theoretical predictions while bolstering observational power, that a more complete picture of cosmic origins may emerge. Such a picture would reveal not only the mechanisms behind large-scale structure formation, but also humanity’s place within the grand cosmic tapestry.

The pursuit of understanding the very early universe, as detailed in this work concerning primordial fluctuations, resembles building castles of thought destined for potential dissolution. This research, attempting to discern quantum origins through falsifiable predictions and inequality constraints, operates under an inherent fragility. As Stephen Hawking once observed, “Intellectuals begin to believe their own projections.” The elegance of symmetry protection offers a temporary shield, because it preserves the coherence on which any observable signal depends. Yet any model, no matter how carefully constructed, remains vulnerable to the relentless scrutiny of cosmological observations. It is a humbling reminder that even the most brilliant theories exist only until they collide with data, vanishing beyond the event horizon of empirical evidence.

Beyond the Horizon

The pursuit of quantum signatures in the primordial fluctuations feels, at times, like constructing increasingly elaborate pocket black holes – simplified models meant to contain the infinite complexity of the early universe. This work, by focusing on inequality constraints and symmetry-protected sectors, attempts to move beyond merely describing what might have happened, towards formulating predictions that could, in principle, be falsified. It is a necessary, if humbling, exercise. Sometimes matter behaves as if laughing at established laws, and the universe rarely cooperates with neat theoretical frameworks.

The true limitations, however, likely lie not in the mathematics, but in the interpretation. Decoherence remains a formidable obstacle; discerning a genuine quantum origin from stochastic classical noise requires increasingly precise cosmological observations – a pursuit bordering on the asymptotic. Each refinement of the cosmic microwave background map brings clarity, but also reveals the depth of the abyss into which further investigation must descend.

Future research will inevitably involve diving deeper into the computational complexity of simulating the early universe. But perhaps a more profound challenge is recognizing when a model has reached its limit – when the elegant equations cease to reflect reality, and become merely pleasing illusions. The universe does not owe humanity an explanation, and the most honest scientific endeavor may be acknowledging the boundaries of understanding.

Original article: https://arxiv.org/pdf/2601.13053.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-22 00:32