Author: Denis Avetisyan

A new study explores the potential of future muon-proton colliders, enhanced by advanced machine learning, to discover vector-like quarks and probe physics beyond the Standard Model.

Researchers demonstrate the feasibility of identifying vector-like singlet top quarks up to 5 TeV at a future muon-proton collider operating at various energy levels using multivariate analysis techniques.

The Standard Model of particle physics, while remarkably successful, leaves room for extended frameworks and new particles. This study, ‘Search for Vector-Like Singlet Top ($T$) Quark in a Future Muon-Proton ($μp$) Collider at $\sqrt{s} = 5.29, 6.48,$ and $9.16$ TeV using Advanced Machine Learning Architectures’, investigates whether vector-like singlet top quarks, hypothetical particles beyond the Standard Model, could be detected at a future muon-proton collider using machine learning techniques. The results indicate that a 9.16 TeV collider, combined with algorithms such as Boosted Decision Trees and Multi-Layer Perceptrons, could reach 5σ discovery significance for these quarks up to a mass of 4 TeV and retain sensitivity up to 5 TeV. Could this collider configuration, and these analysis methods, unlock further insights into the fundamental building blocks of the universe and the nature of physics beyond our current understanding?

The Emerging Landscape Beyond the Standard Model

Despite its extraordinary predictive power and consistent validation through decades of experimentation, the Standard Model of particle physics remains incomplete. It successfully describes the electromagnetic, weak, and strong forces and classifies all known elementary particles, yet it does not incorporate gravity or account for phenomena such as dark matter and dark energy. Moreover, keeping the Higgs boson mass at its observed value appears to require a delicate cancellation (fine-tuning) between its bare value and very large quantum corrections, a puzzle suggesting the existence of undiscovered particles or forces. This incompleteness fuels an ongoing quest for "new physics": theoretical frameworks and experimental searches designed to extend and refine the Standard Model.

The gauge hierarchy problem arises from the vast gap between the electroweak scale (around 100 GeV) and the Planck scale (around 10^19 GeV). Quantum corrections to the Higgs boson mass grow with the highest energy scale in the theory, so keeping the Higgs mass near its measured 125 GeV seems to require an extraordinarily precise cancellation. Theoretical physicists posit that extending the Standard Model with new particles could offer a solution, and vector-like quarks are a compelling possibility. Unlike the chiral quarks of the Standard Model, whose left- and right-handed components carry different gauge charges, vector-like quarks have left- and right-handed components that transform identically, so they can acquire mass without relying on the Higgs mechanism. In frameworks such as composite-Higgs and little-Higgs models they appear as partners of the top quark, and their loop contributions cancel the dominant top-quark correction to the Higgs mass, providing a natural stabilization mechanism. The search for these particles at high-energy colliders is therefore not merely a hunt for new constituents of matter, but a test of whether nature has built in its own protection of the Higgs mass against large quantum corrections.
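To make the size of the problem concrete, the sketch below evaluates the standard one-loop estimate of the top-quark correction to the Higgs mass squared, δm_H² ≈ −(3y_t²/(8π²))Λ², where Λ is a hard momentum cutoff and the factor 3 counts quark colours. The function names, the choice y_t ≈ 1, and the sample cutoffs are illustrative assumptions, not values taken from the study.

```python
import math


def top_loop_correction(cutoff_gev: float, y_top: float = 1.0) -> float:
    """One-loop top-quark correction to m_H^2 in GeV^2 (hard-cutoff estimate).

    delta m_H^2 ~ -(3 * y_t^2 / (8 * pi^2)) * Lambda^2, with 3 quark colours.
    y_top ~ 1 is an approximate value used for illustration.
    """
    return -3.0 * y_top**2 / (8.0 * math.pi**2) * cutoff_gev**2


def tuning_ratio(cutoff_gev: float, m_higgs_gev: float = 125.0, y_top: float = 1.0) -> float:
    """How many times larger |delta m_H^2| is than the physical m_H^2."""
    return abs(top_loop_correction(cutoff_gev, y_top)) / m_higgs_gev**2


# The required cancellation grows quadratically with the cutoff scale.
for cutoff in (1e3, 1e4, 1e19):
    print(f"Lambda = {cutoff:.0e} GeV -> |delta m_H^2| / m_H^2 = {tuning_ratio(cutoff):.3g}")
```

Already at a 10 TeV cutoff the correction is a few hundred times m_H², which is the sense in which new particles near the TeV scale, such as top partners, are motivated.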

The validation of theoretical extensions to the Standard Model hinges on the ability of high-energy colliders to perform extraordinarily precise measurements. These facilities, such as the Large Hadron Collider, don’t simply discover new particles; they meticulously analyze the debris of particle collisions, searching for subtle deviations from the predictions of established physics. This demands not only high collision energies to produce potentially massive new particles, but also detectors capable of resolving incredibly small differences in particle properties – mass, charge, spin, and decay modes. Scientists are developing innovative techniques to improve detector resolution and increase data collection rates, aiming to tease out the faint signals of new physics hidden within the overwhelming background of known interactions. Ultimately, the success of this endeavor will determine whether these theoretical models accurately reflect the fundamental laws governing the universe, or require further refinement.

Decoding Vector-Like Quarks: Signatures of the Unexpected

Singlet top quarks, a type of vector-like quark not predicted by the Standard Model, can be identified through decay channels distinguished by their final-state particles. A singlet T quark is expected to decay to bW, tZ, or tH. In hadronic channels the final state consists of jets, collimated sprays of hadrons resulting from the fragmentation of quarks and gluons; in the T → bW mode, for example, these come from the b quark and, when the W boson decays hadronically, from the two quarks it produces. Leptonic channels instead produce a charged lepton (an electron or muon) together with missing transverse energy carried away by an undetected neutrino. Because the neutrino escapes, the decay cannot be fully reconstructed: its transverse momentum can be inferred from momentum balance in the plane perpendicular to the beam, but its longitudinal momentum has to be constrained separately. These differing signatures, jets on the one hand and a lepton plus missing energy on the other, are crucial for separating singlet top quark signals from Standard Model background processes.
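One common way to perform this inference, sketched here as an assumption rather than the study's documented procedure, is to take the missing transverse momentum as minus the vector sum of all visible transverse momenta, then fix the neutrino's longitudinal momentum by requiring the lepton-neutrino system to have the W boson mass. That constraint gives a quadratic equation with two roots. The helper names below are hypothetical.

```python
import math

W_MASS_GEV = 80.4  # approximate W boson mass


def missing_pt(visible_pt):
    """Missing transverse momentum (mex, mey): minus the vector sum of visible (px, py)."""
    mex = -sum(px for px, _ in visible_pt)
    mey = -sum(py for _, py in visible_pt)
    return mex, mey


def neutrino_pz(lepton, met, m_w=W_MASS_GEV):
    """Neutrino longitudinal momentum from the constraint m(lepton + neutrino) = m_W.

    lepton: (E, px, py, pz) of the charged lepton, treated as massless.
    met: (mex, mey), taken as the neutrino's transverse momentum.
    Returns the two solutions (smaller first). If the discriminant is negative
    (e.g. from mismeasured MET), both entries are the real part, one simple convention.
    """
    e_l, px_l, py_l, pz_l = lepton
    px_n, py_n = met
    pt_l2 = px_l**2 + py_l**2
    pt_n2 = px_n**2 + py_n**2
    mu = 0.5 * m_w**2 + px_l * px_n + py_l * py_n
    centre = mu * pz_l / pt_l2
    disc = centre**2 - (e_l**2 * pt_n2 - mu**2) / pt_l2
    if disc < 0:
        return centre, centre
    root = math.sqrt(disc)
    return centre - root, centre + root
```

An analysis still has to choose between the two roots (for instance, the one with the smaller |p_z|); that choice is a convention, and the article does not say which one the study uses.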

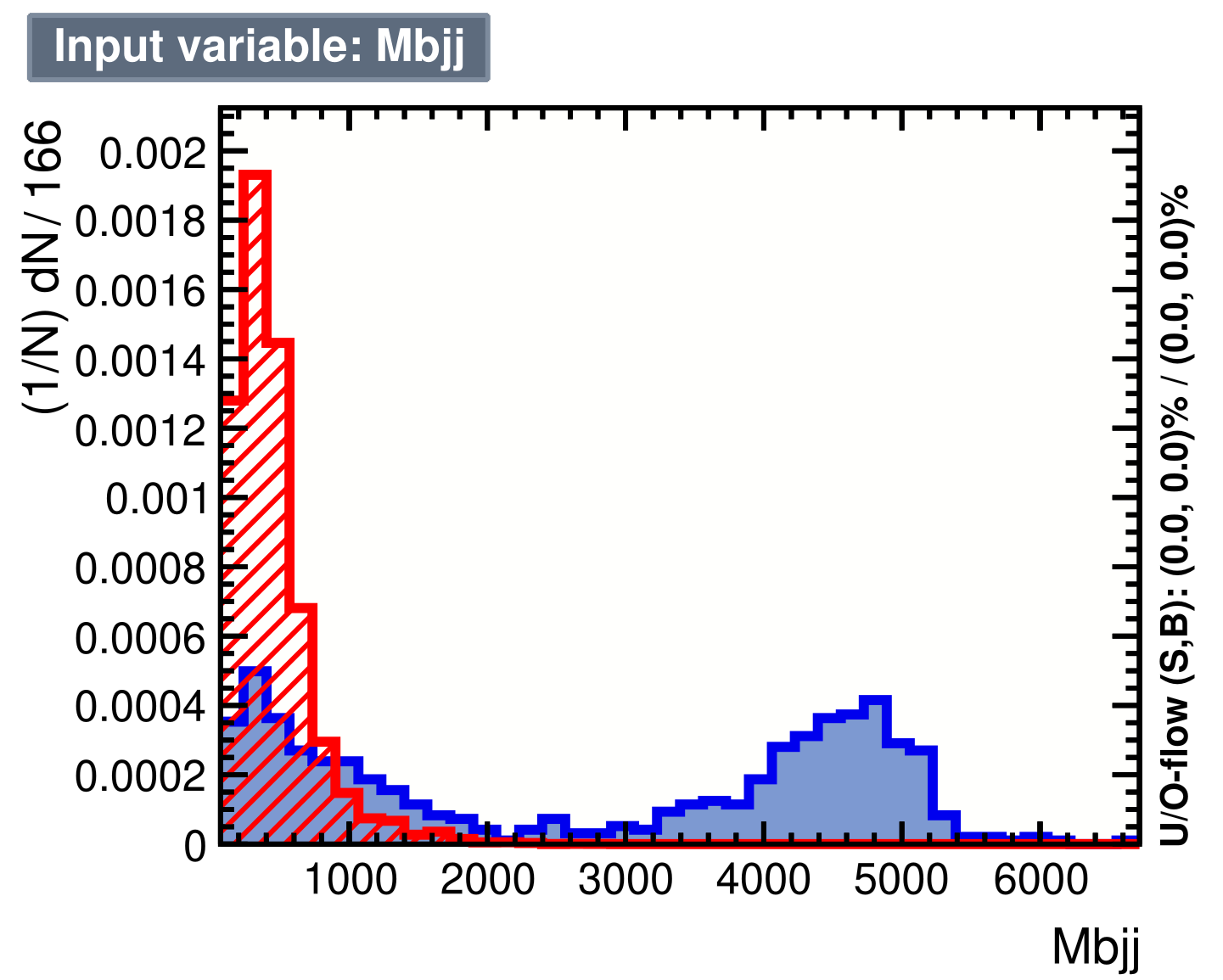

Reconstruction of vector-like quark decays relies heavily on identifying hadronic jets and accurately quantifying missing transverse energy. Jets originating from b quarks are particularly important; they are identified with b-tagging techniques, which exploit the relatively long lifetime of b hadrons and the resulting displaced secondary vertices. In leptonic decays the charged lepton is detected, but the accompanying neutrino is not: it shows up only as missing transverse energy, the momentum imbalance in the plane transverse to the beam. Precise measurement of both the jet characteristics and the missing transverse energy is crucial for correctly inferring the properties of the parent vector-like quark and for distinguishing signal events from background processes with similar signatures.
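Inferring the parent quark's mass typically means summing the four-momenta of its reconstructed decay products and computing the invariant mass, m² = E² − |p|². As a hypothetical illustration, for a T → bW candidate with W → ℓν one would sum the b-jet, lepton, and reconstructed-neutrino four-vectors. The function below is a minimal sketch, not code from the study.

```python
import math


def invariant_mass(*four_vectors):
    """Invariant mass sqrt(E^2 - |p|^2) of the summed (E, px, py, pz) four-vectors, in GeV."""
    e = sum(v[0] for v in four_vectors)
    px = sum(v[1] for v in four_vectors)
    py = sum(v[2] for v in four_vectors)
    pz = sum(v[3] for v in four_vectors)
    m2 = e**2 - px**2 - py**2 - pz**2
    return math.sqrt(max(m2, 0.0))  # clamp tiny negative values caused by rounding


# Hypothetical candidate: b jet + lepton + neutrino (GeV).
b_jet = (300.0, 120.0, -50.0, 260.0)
lepton = (50.0, 40.0, 0.0, 30.0)
neutrino = (40.0, -40.0, 0.0, 0.0)
print(f"T candidate mass: {invariant_mass(b_jet, lepton, neutrino):.1f} GeV")
```

Signal events would cluster near the T mass in this distribution, while backgrounds are spread out, which is why jet energy and missing-energy resolution matter so much.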

Accurate characterization of both the hadronic and leptonic singlet top quark decay channels is critical for separating signal from Standard Model background processes. Background estimation relies on precise modeling of the kinematic distributions and event topologies that mimic the signal, so a detailed understanding of the signal's expected characteristics in each channel is essential. Maximizing detection sensitivity requires optimizing event selection criteria based on these features, including jet properties, b-tagging information, lepton kinematics, and missing transverse energy.
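The simplest version of this optimization scans a threshold on one variable and keeps the value that maximizes an approximate significance such as s/√b. The sketch below assumes per-event values of a single discriminating variable and hypothetical weights that convert raw simulated counts into expected yields; the toy numbers are invented for illustration.

```python
import math


def best_lower_cut(signal_values, background_values, candidate_cuts,
                   signal_weight=1.0, background_weight=1.0):
    """Scan lower thresholds on one variable and return (cut, s/sqrt(b)) at the maximum.

    An event passes if its value is strictly above the cut. The weights turn raw
    counts into expected yields (a hypothetical normalization).
    """
    best_cut, best_z = None, 0.0
    for cut in candidate_cuts:
        s = signal_weight * sum(1 for v in signal_values if v > cut)
        b = background_weight * sum(1 for v in background_values if v > cut)
        if b <= 0:
            continue  # s/sqrt(b) is undefined once no background survives
        z = s / math.sqrt(b)
        if z > best_z:
            best_cut, best_z = cut, z
    return best_cut, best_z


# Toy values for illustration only.
signal = [5.0, 6.0, 7.0, 8.0]
background = [1.0, 2.0, 3.0, 4.0, 6.0]
print(best_lower_cut(signal, background, [0.0, 2.0, 4.0, 5.0]))
```

s/√b is only a rough guide when few background events survive; multivariate methods generalize this idea to many variables at once.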

Extracting Hidden Signals: The Power of Multivariate Analysis

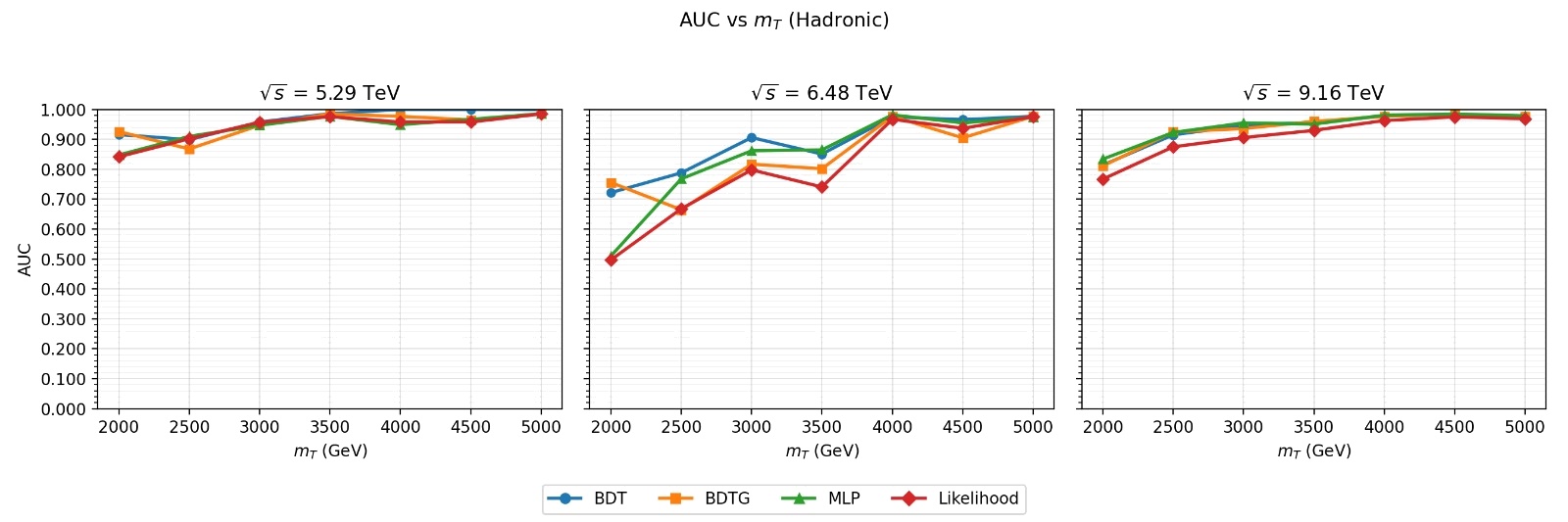

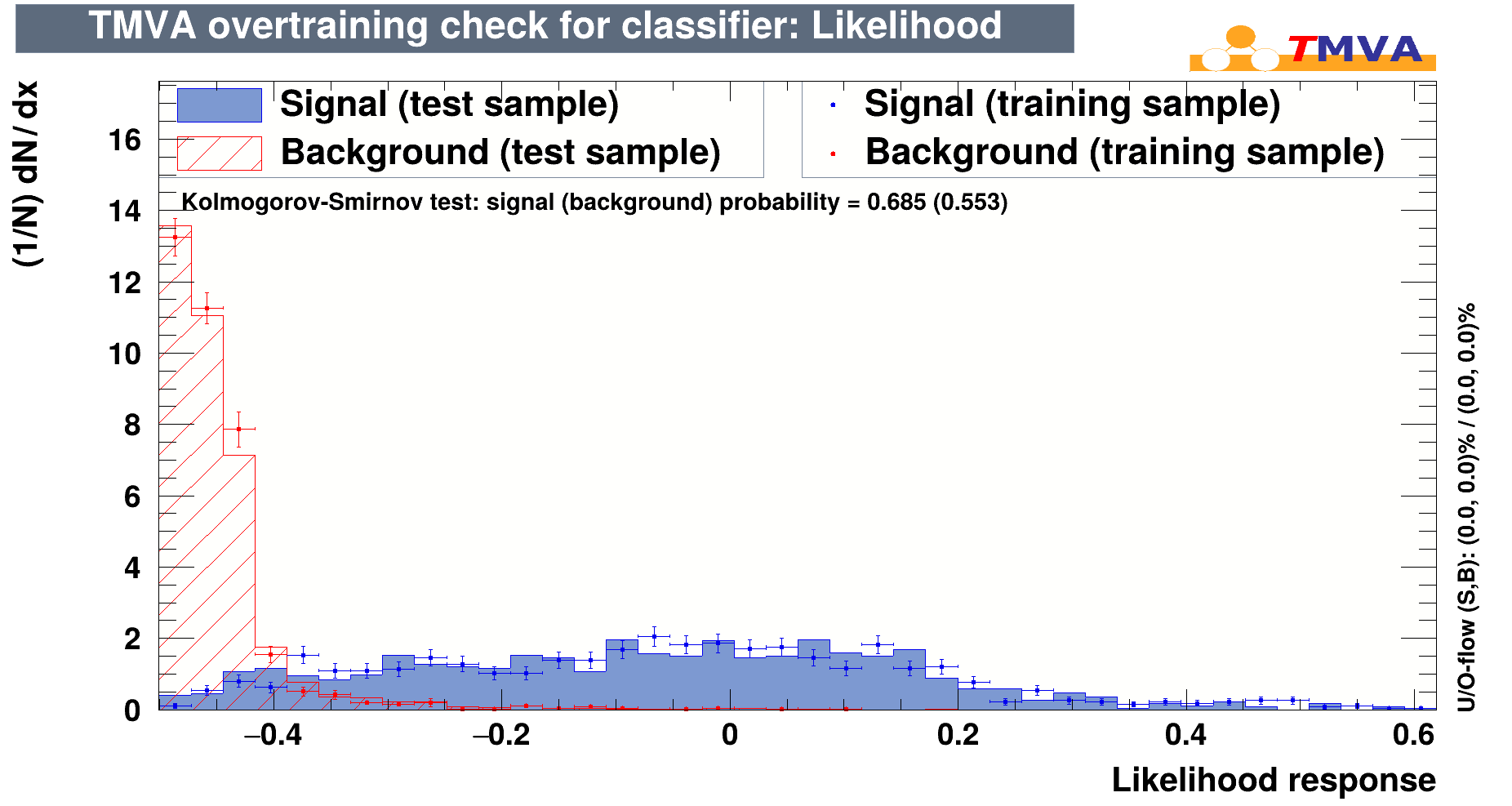

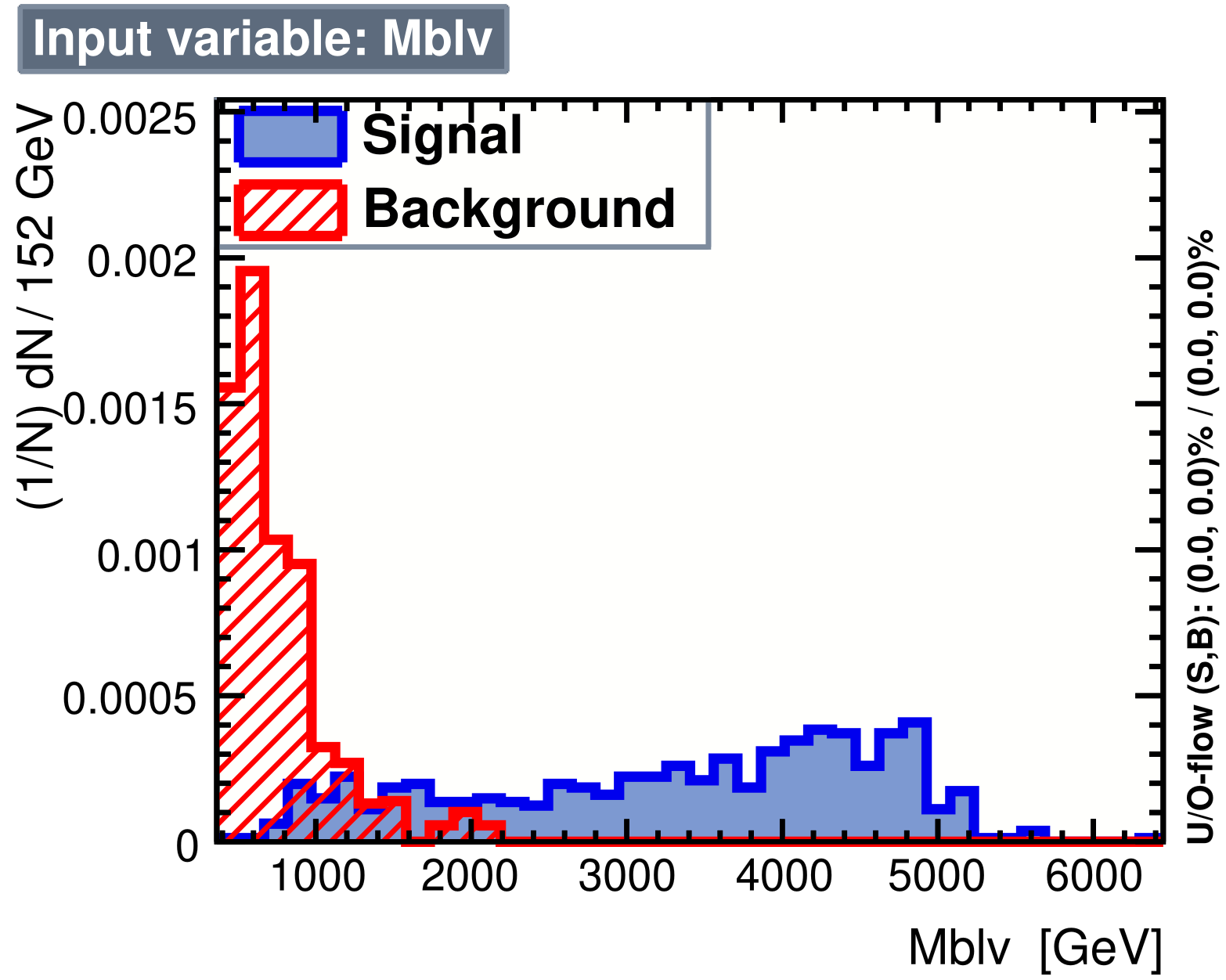

Multivariate analysis techniques, specifically Boosted Decision Trees (BDTs) and Multilayer Perceptrons (MLPs), are essential for signal extraction in high-energy physics because they can model complex, non-linear relationships within datasets. Traditional cut-based analyses, which apply a sequence of one-variable thresholds, often struggle when signal and background distributions overlap, which reduces sensitivity and lets more background events through. These machine learning algorithms instead consider many input variables simultaneously and learn a decision boundary that separates signal from background more effectively. A BDT combines many shallow decision trees, each trained to correct the mistakes of the ones before it, while an MLP passes the inputs through layers of weighted sums and non-linear activation functions. Both are trained iteratively on labeled events (typically from simulation), learning how much weight to give each input variable and which correlations between variables distinguish signal from background. The improved separation increases statistical power, enabling the detection of subtle signals that would otherwise be buried in the overwhelming background.
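The boosting idea can be sketched from scratch with discrete AdaBoost over decision stumps (single-variable cuts), one standard boosting scheme. This is a minimal, hypothetical illustration of the technique, not the configuration used in the study: labels are +1 for signal and −1 for background, and events that the current ensemble misclassifies are up-weighted so the next stump focuses on them.

```python
import math


def train_stump(xs, ys, weights):
    """Find the single-variable cut with the lowest weighted error.

    Returns (feature index, threshold, polarity, weighted error).
    """
    best = None
    for f in range(len(xs[0])):
        for thr in sorted({x[f] for x in xs}):
            for polarity in (1, -1):
                err = sum(w for x, y, w in zip(xs, ys, weights)
                          if (polarity if x[f] >= thr else -polarity) != y)
                if best is None or err < best[3]:
                    best = (f, thr, polarity, err)
    return best


def stump_predict(stump, x):
    f, thr, polarity, _ = stump
    return polarity if x[f] >= thr else -polarity


def adaboost(xs, ys, n_rounds=10):
    """Discrete AdaBoost: returns a list of (alpha, stump) pairs."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        stump = train_stump(xs, ys, weights)
        err = min(max(stump[3], 1e-10), 1.0 - 1e-10)  # keep the logarithm finite
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, stump))
        # Up-weight misclassified events, down-weight correct ones, then renormalize.
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def score(ensemble, x):
    """Weighted vote of all stumps; positive values are signal-like."""
    return sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)


# Toy two-variable events: +1 = signal, -1 = background.
xs = [[0.1, 0.2], [0.2, 0.1], [0.8, 0.9], [0.9, 0.7]]
ys = [-1, -1, 1, 1]
model = adaboost(xs, ys, n_rounds=3)
print([round(score(model, x), 2) for x in xs])
```

Real BDTs use deeper trees, many more variables, and far larger training samples, but the reweighting loop is the core of the method.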

The Toolkit for Multivariate Data Analysis (TMVA), distributed with CERN's ROOT framework, is a software package designed to facilitate the implementation and optimization of multivariate algorithms, including Boosted Decision Trees and Multilayer Perceptrons. It includes pre-built implementations of these algorithms, along with tools for feature selection, hyperparameter tuning, and performance evaluation. The toolkit supports data input from various formats and provides interfaces for integrating with existing analysis pipelines. It also offers model validation functionality, including cross-validation and bootstrapping, to help ensure robust and reliable results when analyzing high-dimensional datasets such as those encountered in particle physics research.
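Cross-validation guards against overtraining by making sure every event is used for evaluation exactly once and never by a model trained on it. The sketch below shows the k-fold splitting logic in plain Python; it is a hypothetical helper illustrating the idea, not TMVA's own interface.

```python
import random


def k_fold_indices(n_events, k=5, seed=0):
    """Yield (train_indices, test_indices) for k-fold cross-validation.

    Events are shuffled once with a fixed seed, then dealt round-robin into k folds;
    each fold serves as the test set exactly once.
    """
    if not 2 <= k <= n_events:
        raise ValueError("need 2 <= k <= n_events")
    order = list(range(n_events))
    random.Random(seed).shuffle(order)
    folds = [order[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield sorted(train), sorted(test)


for train, test in k_fold_indices(10, k=5):
    print(len(train), "training events, test events:", test)
```

A classifier would be trained k times, once per split, and the spread of its test-set performance indicates how stable the result is.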

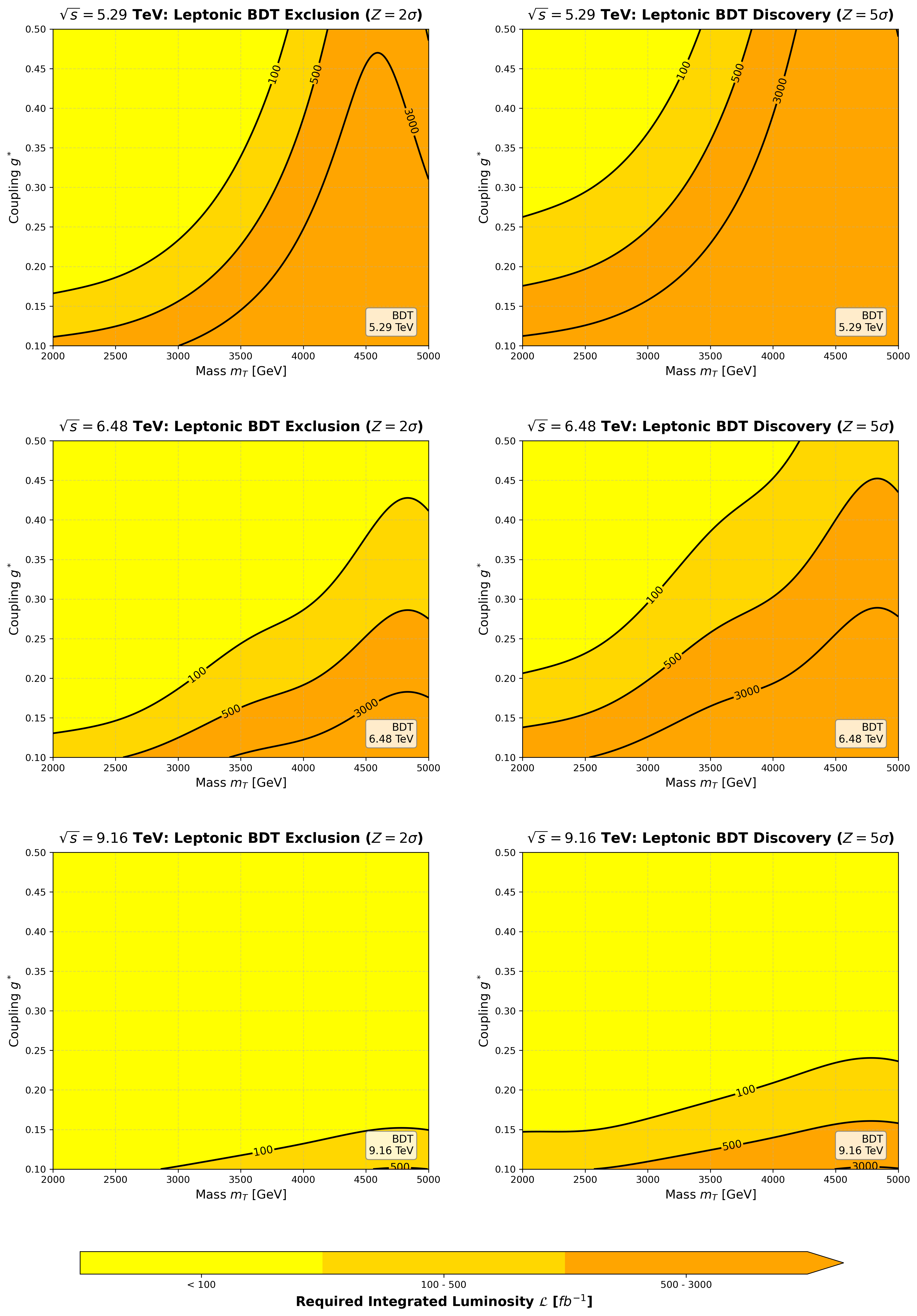

Analysis of simulated collision data with multivariate techniques shows that vector-like quark signatures can be identified with high statistical significance. At an integrated luminosity of 3000 fb⁻¹, a significance of 42.23σ was achieved in the hadronic channel for a 2.5 TeV T quark, and 30.76σ in the leptonic channel for a 4 TeV T quark. These results indicate a substantial improvement in the ability to detect these signatures compared with previous methods, enabling more precise measurements and searches for physics beyond the Standard Model.
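Significances like these come from expected signal and background yields, N = σ × L × ε (cross section, integrated luminosity, selection efficiency). The article does not state which significance formula the study used; the sketch below assumes the widely used Asimov median discovery significance, Z = √(2[(s+b)ln(1+s/b) − s]), and all inputs are hypothetical.

```python
import math


def expected_events(cross_section_fb, luminosity_fb_inv, efficiency=1.0):
    """Expected event count N = sigma [fb] x L [fb^-1] x selection efficiency."""
    return cross_section_fb * luminosity_fb_inv * efficiency


def asimov_significance(s, b):
    """Median discovery significance Z = sqrt(2[(s + b) ln(1 + s/b) - s]) for b > 0."""
    if s < 0 or b <= 0:
        raise ValueError("need s >= 0 and b > 0")
    return math.sqrt(2.0 * ((s + b) * math.log1p(s / b) - s))


# Hypothetical cross sections and efficiencies, evaluated at two luminosities.
for lumi in (1000.0, 3000.0):
    s = expected_events(0.05, lumi, efficiency=0.2)
    b = expected_events(2.0, lumi, efficiency=0.01)
    print(f"L = {lumi:.0f} fb^-1: s = {s:.1f}, b = {b:.1f}, Z = {asimov_significance(s, b):.2f}")
```

Because s and b both scale linearly with luminosity, Z grows as √L at fixed efficiencies, which is why multi-ab⁻¹ datasets push sensitivity to higher masses.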

A New Era of Colliders: Probing the Universe’s Deepest Secrets

A muon-proton collider offers a compelling way to investigate physics beyond the Standard Model, particularly through its sensitivity to vector-like quarks, hypothetical particles absent from the current model but predicted by various extensions. Compared with proton-proton collisions, using muons as one of the colliding beams gives cleaner events: the muon is an elementary, point-like particle, so one side of every collision is a single well-defined lepton rather than a composite proton, which reduces the hadronic activity and background accompanying the interaction of interest and makes rare processes easier to measure. Consequently, a muon-proton collider significantly boosts the potential for discovering these heavier quarks and precisely determining their properties, such as mass and decay modes, potentially unveiling new fundamental interactions and resolving long-standing mysteries in particle physics. The facility's design promises an unprecedented ability to probe the high-energy frontier, offering a unique complement to existing and planned experiments.
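For head-on collisions of beams with energies E_μ and E_p, neglecting particle masses, the centre-of-mass energy is √s ≈ 2√(E_μE_p). The beam energies below are our assumption, since the article does not state them: a 7 TeV proton beam paired with 1, 1.5, and 3 TeV muon beams reproduces the quoted 5.29, 6.48, and 9.16 TeV to within rounding.

```python
import math


def sqrt_s(e_muon_tev, e_proton_tev):
    """Centre-of-mass energy (TeV) for head-on beams, masses neglected: s = 4 E_mu E_p."""
    return 2.0 * math.sqrt(e_muon_tev * e_proton_tev)


# Assumed beam configurations (not given in the article).
for e_mu in (1.0, 1.5, 3.0):
    print(f"E_mu = {e_mu} TeV, E_p = 7 TeV -> sqrt(s) = {sqrt_s(e_mu, 7.0):.3f} TeV")
```

The asymmetric beams also mean the collision system is boosted along the proton direction, which affects where decay products land in the detector.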

Establishing new physics beyond the Standard Model requires extraordinarily high statistical certainty, and future collider experiments are designed to meet this challenge. This study indicates that a next-generation facility accumulating an integrated luminosity of 3000 fb⁻¹ could identify new particles with a statistical significance exceeding 5σ, the conventional discovery threshold in particle physics.
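The 5σ threshold corresponds to a one-sided Gaussian tail probability, that is, the chance that background alone fluctuates at least that far. A short sketch using only the Python standard library converts a significance Z into this p-value, p = ½ erfc(Z/√2).

```python
import math


def p_value(z):
    """One-sided Gaussian tail probability for a significance of z sigma."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


for z in (3.0, 5.0):
    print(f"{z:.0f} sigma -> p = {p_value(z):.3g}")
```

A 5σ result thus corresponds to roughly a 3-in-10-million chance of a background fluctuation, compared with about 1 in 740 for the 3σ "evidence" level.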

A future, next-generation collider represents more than just an incremental improvement in technology; it promises a transformative leap in humanity’s ability to probe the very fabric of existence. By reaching energy regimes previously inaccessible, this facility aims to unveil phenomena beyond the Standard Model of particle physics – potentially revealing the nature of dark matter, explaining the matter-antimatter asymmetry of the universe, and uncovering extra dimensions. Such discoveries wouldn’t merely add to existing knowledge, but could fundamentally reshape established theories, demanding a revision of our understanding of space, time, and the fundamental forces governing reality. The collider’s potential extends beyond confirming or refuting current hypotheses; it offers a chance to witness entirely new particles and interactions, opening a new window onto the universe’s deepest secrets and propelling physics into uncharted territory.

The pursuit of vector-like quarks at a muon-proton collider, as detailed in this study, exemplifies a system where emergent properties arise from localized interactions. The application of machine learning isn’t about imposing control, but rather about discerning patterns within complex data streams – allowing the signal of these quarks to emerge from the noise. As Bertrand Russell observed, “The good life is one inspired by love and guided by knowledge.” Here, ‘knowledge’ isn’t a pre-defined outcome, but a capacity to detect subtle signatures, to influence the interpretation of results, and ultimately, to understand the underlying physics without dictating it. The collider, the algorithms, and the analysis form a living system where local connections – the individual decay events and machine learning parameters – collectively determine the potential for discovery.

Beyond the Search

The pursuit of vector-like quarks, as demonstrated by this exploration of a muon-proton collider, isn't about finding new fundamental particles so much as revealing the limitations of current frameworks. Order manifests through interaction, not control; the Standard Model, while remarkably successful, increasingly strains against the phenomena it cannot explain. This study suggests a pathway, a technological lever, but the true discoveries likely reside in the unexpected anomalies, the deviations from predicted behavior. The sensitivity projected here, reaching up to 5 TeV, isn't a destination, but a higher-resolution lens.

Future iterations will inevitably focus on refining the machine learning architectures, squeezing ever more signal from background. Yet, the crucial challenge remains disentangling genuine new physics from sophisticated statistical flukes. The elegance of a clean signal is often illusory. A more fruitful direction may lie in broadening the search parameters, exploring decay channels not presently prioritized, or considering more complex models beyond simple singlet extensions.

Sometimes inaction is the best tool. The sheer cost of these ventures demands careful consideration. Perhaps the most profound insight will come not from what is found, but from understanding when to cease the search, accepting the inherent incompleteness of any model, and embracing the emergent complexity of the universe. The pursuit continues, not because a solution is guaranteed, but because the questions themselves are fundamental.

Original article: https://arxiv.org/pdf/2602.01010.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-03 13:50