On June 4th, followers of the Houston Museum of Natural Science (HMNS) witnessed an event that challenged conventional beliefs about social media safety. The museum's authentic Instagram account, complete with its verification badge and institutional reputation, was hijacked and used to promote a fake Bitcoin giveaway featuring deepfake videos of Elon Musk promising $25,000 in BTC.

Within a few hours, the museum regained control and removed the posts. But the incident exposed an unsettling truth: the standard advice for avoiding cryptocurrency scams is rapidly becoming obsolete.

The Uncomfortable Truth About “Best Practices”

For years, security professionals have repeated the same principles: "Stick to verified profiles," "Trust recognized institutions," and "Be wary of get-rich-quick offers." These tips help against well-known schemes such as cloud-mining and online dating scams. The HMNS incident, however, shows that these rules are not merely inadequate; they can create a false sense of security that scammers exploit with surgical precision.

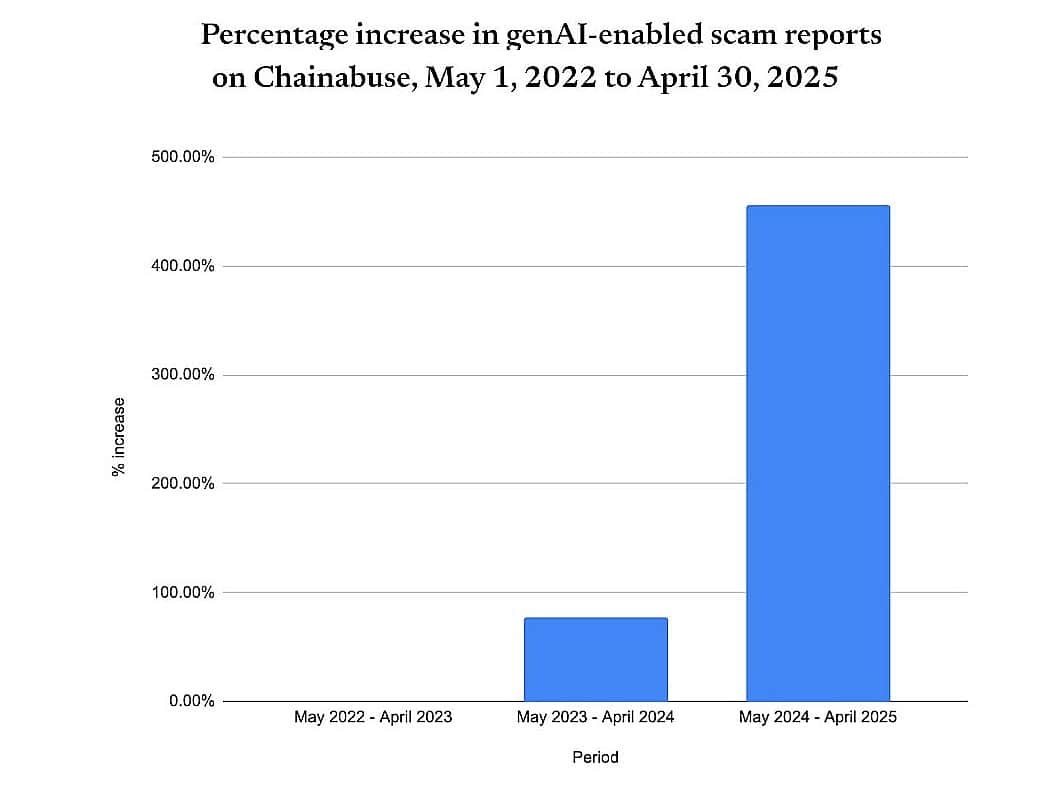

As an analyst, I've observed a staggering increase in scams that leverage generative AI. Between May 2024 and April 2025, this type of fraud rose by 456% over the previous twelve months, and that previous year had already seen a 78% increase of its own. This isn't steady growth; it's an accelerating rate of growth that traditional security advice never anticipated.
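Compounding the two reported increases shows why this counts as acceleration rather than ordinary growth. This is a worked sketch under two assumptions not stated in the original: that "456%" means a rise of 456% (not a rise to 456% of the prior level), and that V_0 is a hypothetical baseline for fraud volume in the twelve months before the 78% surge, since no absolute figures are given.

$$V_1 = V_0\,(1 + 0.78) = 1.78\,V_0$$
$$V_2 = V_1\,(1 + 4.56) = 5.56 \times 1.78\,V_0 \approx 9.90\,V_0$$

Under these assumptions, volume grew roughly tenfold over two years, while the year-over-year growth rate itself jumped from 78% to 456%.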

The problem isn't that people ignore security guidance. It's that the guidance was written for a threat landscape that has since changed fundamentally.

Why This Problem Is Worse Than Social Platforms Admit

Social media companies have an incentive to downplay AI-driven fraud, because acknowledging it undermines user trust, and user trust is exactly what advertisers pay for. The statistics, however, tell a different story from the one platform PR teams would prefer you to believe.

Recent attacks show a level of sophistication and reach that these platforms refuse to acknowledge. In January 2025, the NBA and NASCAR accounts were hacked at the same time and used to push fake "NBA token" and "NASCAR token" promotions. This was no coincidence; it was a coordinated operation. That same month, the UFC's official Instagram account was hijacked for a fraudulent cryptocurrency campaign branded "#UnleashTheFight."

What these platforms don't disclose is that these attacks succeeded not in spite of their security measures, but because those measures are fundamentally mismatched to AI-driven attacks. Conventional account protection was designed to stop human hackers using traditional tactics. AI-enabled attacks, by contrast, operate at machine speed and precision, finding weaknesses faster than human-designed systems can respond. Meta, X, and other platforms choose to remain reactive rather than proactive, because a proactive approach would require admitting the scale of the problem and the substantial infrastructure investment needed to address it.

Security experts rarely say it out loud, but social media platforms have financial incentives that can conflict with stopping scams. Their engagement-maximizing algorithms amplify provocative posts, including fraudulent content that draws comments, reposts, and reactions. The same systems that generate revenue also make these platforms easy to manipulate. A genuine crackdown on scams might mean trading some engagement for security, a compromise shareholders won't accept without regulatory intervention.

The Real Reason Museums and Sports Teams Are Prime Targets (It’s Not What You Think)

It's commonly believed that hackers target high-follower accounts for their reach and credibility. That explanation is too simple. The real appeal is that these accounts represent the pinnacle of human curation: they are where institutional trust is at its highest.

When a cryptocurrency promotion appears on a museum's social media account, followers read it as more than an endorsement; they read it as institutional approval. Museums, sports teams, and cultural institutions occupy a special place in the public mind, and people assume that whatever they publish has been vetted by trustworthy curators.

This isn't primarily about reaching a vast audience. It's about exploiting robust systems of trust built up over decades. A crypto scam on an ordinary influencer's account is often met with immediate skepticism; the same scam on the Vancouver Canucks' account (hijacked on May 5th) or on NASCAR's largely bypasses it. The choice of targets suggests a degree of psychological profiling that conventional security systems never accounted for. Scammers aren't merely stealing accounts; they're seizing decades of institutionally earned trust.

Why Current Solutions Won’t Work

The standard response to AI-driven fraud in the crypto sector follows a familiar script: more user education, better verification, and stronger passwords. This approach misreads the core of the problem.

Current approaches tend to blame security failures on users who need more education or on detection systems that need improvement. Yet recent breaches at Coinbase, BitoPro, and Cetus followed a recurring pattern: a reactive response promising "enhanced" security, widely seen as too little, too late. Meanwhile, the criminal toolkit has grown considerably. AI is now used to run sophisticated scams that exploit not just individual users but the mental shortcuts that make digital communication workable in the first place.

When you see content from a trusted, verified account, your brain engages in what psychologists call "cognitive offloading": it hands the work of verification to the platform and the institution. This lets us handle the enormous number of digital interactions we encounter daily without exhausting our mental resources. It isn't a flaw in human cognition; it's an adaptive mechanism that makes efficient information processing possible.

Traditional security guidance effectively asks people to switch off this mechanism and treat every interaction with extreme suspicion. That is unrealistic, and no one can sustain it consistently at scale. Yet, for now, it remains our primary line of defense.

The Authentication Theater Problem

Some experts describe two-factor authentication, account verification, and platform badges as "security theater." These visible features reassure users, but they offer limited protection against sophisticated attacks.

The HMNS incident exposed an unexpected risk: verification badges can increase vulnerability rather than reduce it. Far from preventing the attack, the badge amplified the fraudulent content by lending it an institutional seal of approval. This is a paradox conventional security guidance doesn't address: the tools meant to build trust can be turned into instruments for manipulating it.

What Traditional Advice Gets Wrong About AI

Traditional cybersecurity guidance tends to treat AI as an upgraded version of existing threats. That framing underestimates how profoundly AI changes the picture.

Crypto scams used to depend on human ingenuity, one-on-one manipulation, and labor-intensive content production. AI-driven fraud, by contrast, runs around the clock, tests many variations at once, learns from each interaction, and adapts in real time. Conventional wisdom warns against deals that seem too good to be true, but it assumes scam tactics stay fixed. AI-powered scams tailor their offers, timing, and presentation to each target's behavior, turning the "too good to be true" threshold into a moving target that humans struggle to keep up with.

The Deepfake Dilemma

Deepfake technology has advanced to the point where the usual warning signs are no longer reliable. The fraudulent Elon Musk deepfakes, for instance, aren't merely convincing; to many viewers they look like genuine footage. The crypto industry's approach of asking users to act as amateur investigators is both unfair and ineffective, because it places the burden on individuals instead of addressing the root problem.

Conclusion

The shortcomings of conventional cryptocurrency security guidance aren't accidental; they're inherent. This advice was devised when crypto fraud was primarily a human problem that called for human-scale solutions. AI-driven scams mark a shift in scale that demands entirely new strategies.

Building real defenses against AI-driven cryptocurrency fraud means accepting some hard truths: individual caution alone is insufficient, existing platform safeguards have serious weaknesses, and the problem requires comprehensive structural change rather than a narrow focus on individual behavior.

The Houston Museum of Natural Science incident wasn't just another entry in the growing list of crypto scams. It was a warning of a future in which conventional security measures not only become ineffective but actively create new vulnerabilities. Until we acknowledge these truths, we will keep fighting tomorrow's threats with yesterday's solutions.

2025-06-10 06:52