The director of Man vs. Baby, David Kerr, has confirmed suspicions about how the baby in the Netflix comedy series was filmed, and explained why computer-generated imagery (CGI) was necessary for certain scenes. Man vs. Baby is a sequel to the 2022 series Man vs. Bee, which also used CGI. That is a common practice when working with animals, and viewers generally accept it. However, some fans spotted what appeared to be artificial intelligence (AI) in Man vs. Baby, particularly in scenes featuring the baby, and questioned its use. Kerr has now addressed these concerns and explained how the baby was brought to the screen.

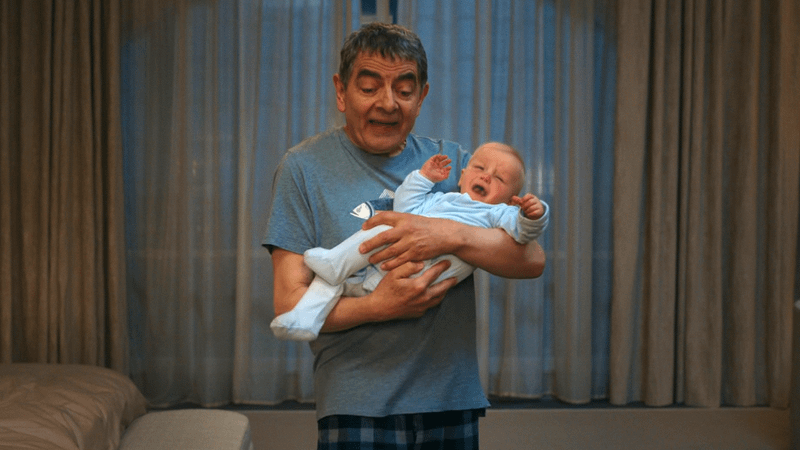

The four-part Christmas special, Man vs. Baby, follows Trevor Bingley, who takes a well-paying house-sitting job for the holidays. Things get complicated when he unexpectedly finds himself caring for a lost baby. The series stars Rowan Atkinson (known for his role in the 2023 film Wonka), along with Robert Bathurst, Nina Sosanya, and Susanna Fielding. All four episodes became available on Netflix on December 11, 2025.

Is the Baby in ‘Man vs. Baby’ AI?

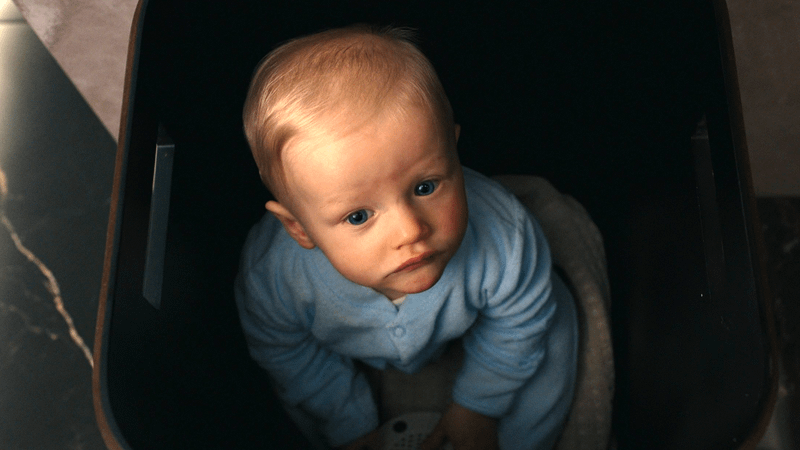

The Netflix Christmas special, Man vs. Baby, featured a six-month-old baby as a central character throughout its four episodes. Because the show used a real infant for many scenes, viewers are wondering how the production team was able to film everything so smoothly.

To mark the Netflix release of Man vs. Baby, director David Kerr revealed the methods the production used to feature the baby seamlessly in the series. The team cast identical twins, used “machine learning” to create realistic baby expressions, and added CGI. Because machine learning is a branch of artificial intelligence (AI), this effectively confirms that AI helped bring the baby to life, even though the role was still played by real infants.

Kerr explained the team’s approach, pointing out that babies so young (around six months old) can’t follow instructions. On top of that, the production had only about two hours a day to film with the babies, which limited what it could do.

As W.C. Fields famously said, ‘never work with children or animals.’ With Man vs. Bee and now Man vs. Baby, I’ve ended up working with both! In Man vs. Bee the co-star was a CG bee, and in Man vs. Baby it’s a six-month-old baby. Babies, of course, don’t take direction – they do whatever they want! That was a real challenge, because babies can only film for a maximum of two hours a day, and this little one was in nearly every scene.

Kerr explained that the production first cast a pair of identical twins to play the baby, and then brought in a second pair as backups. This allowed the team to film with both sets of twins and digitally swap faces in editing. They also made a realistic, poseable latex model of the baby.

For the baby actors, we used two sets of identical twins. The first pair played the main roles, while the second, slightly older pair served as backups, as they were more mobile. Because the backup babies were a similar size, we could film with them and digitally swap their faces with the main babies in editing. We also created a realistic, poseable latex model of the babies – a ‘jelly baby’ – which was incredibly useful for planning and rehearsing the scenes before filming with the real infants.

Despite looking remarkably real, the jelly baby puppet didn’t quite hold up when viewed closely on screen, which is why you won’t see it in any shots from the show. I really wanted to capture as much of the baby’s performance as possible live, during filming. However, I knew I needed a backup plan.

Kerr went on to describe how they worked with the visual effects team, using a special recording session to capture the babies’ facial expressions in detail.

I worked closely with our VFX supervisor, Rob Duncan from Framestore, because AI technology is rapidly evolving and we’re pushing its boundaries. We aimed to create a completely realistic, computer-generated baby that could perform any action or display any expression needed for the film. To achieve this, we began with a performance capture session, filming our young actors with five cameras for a couple of hours to record a wide range of natural expressions – from waking up to falling asleep.

The director acknowledged that the process took time, explaining that the team used machine learning to build a valuable library of expressions and actions. That library was then drawn on throughout filming and editing.

After filming, Framestore used machine learning to analyze the footage and create a collection of expressions and movements. This allowed them to generate additional options for expressions and actions, even ones the babies hadn’t naturally made. During post-production, they used this library to refine the babies’ performances. While Framestore’s visual effects team and animators were incredibly skilled, it wasn’t a quick process. It took time and careful attention to detail, as even small things like a mouth shape or blink needed to look realistic. Through close collaboration and creative problem-solving, we were able to achieve a fantastic final result.

In the end, the approach paid off. Kerr explained that the most convincing computer-generated (CG) shots were those where the team had real footage of the facial expression or movement they wanted the CG baby to make, which made the results more believable.

I discovered that the most convincing computer-generated (CG) shots of the baby were those where we had a real-life reference for the expression or movement we wanted to create. It didn’t even matter if the reference footage was filmed in a different location or with different lighting. Because people are so good at noticing small details in facial expressions, making the CG as realistic as possible is crucial.

David Kerr detailed the reasons behind the production’s unique methods, highlighting how challenging it was to work with a baby as a central character. Despite potential criticism, these techniques were sensible given the unpredictable nature of working with a young child.

Rowan Atkinson recently told the Manchester Evening News that they used twin babies during filming. He explained this was a smart choice because if one baby became fussy or upset, they could simply switch to the other one.

It’s important to remember these were twin babies, which is helpful because you can switch them out if one gets fussy. Filming with babies is limited to 45 minutes at a time, with a maximum of two hours total per day. Since a shoot day is eight to ten hours long, there’s a lot of time when you can’t film the planned scene and need to switch to something else – maybe a different angle or a completely different shot.

Atkinson acknowledged that the rules limiting how long babies and young children can work are complicated, which is one reason the show so often turned to computer-generated imagery.

It’s challenging to coordinate scenes with babies, especially twins. We had one set of twins who were the main babies, but they couldn’t crawl. So, we used a second set of twins for crawling shots. To make it look like the same babies, we used computer-generated imagery to digitally replace the face of the crawling baby with the face of the main baby.

Man vs. Baby is one of Netflix’s biggest TV shows releasing in December 2025.

Why Machine Learning in ‘Man vs. Baby’ Is a Double-Edged Sword

Many people are wary of artificial intelligence, particularly in filmmaking. However, some believe machine learning was crucial to the success of Man vs. Baby, as it’s impossible to predict what a six-month-old baby will do on set, and strict child labor laws add to the challenge.

I was really impressed with how much effort went into making this production work! They used some clever techniques alongside the AI, like having identical twins and even backup babies on set, just to make sure everything flowed seamlessly from scene to scene. Honestly, I think it’s great that director David Kerr was open about all the tools and methods they used – that level of honesty is a real win in my book.

Since Man vs. Baby has a clear resolution (Trevor successfully returns the baby to the Schwarzenbochs), it’s unlikely this story will continue. Without a baby at the center of any follow-up, there would probably be less need for AI tools like machine learning going forward.


2025-12-12 04:37