Varonis Threat Labs, a data security research company, recently discovered a way attackers could quietly steal personal information through Microsoft Copilot. They’ve named this security flaw “Reprompt” and detailed it in a new report.

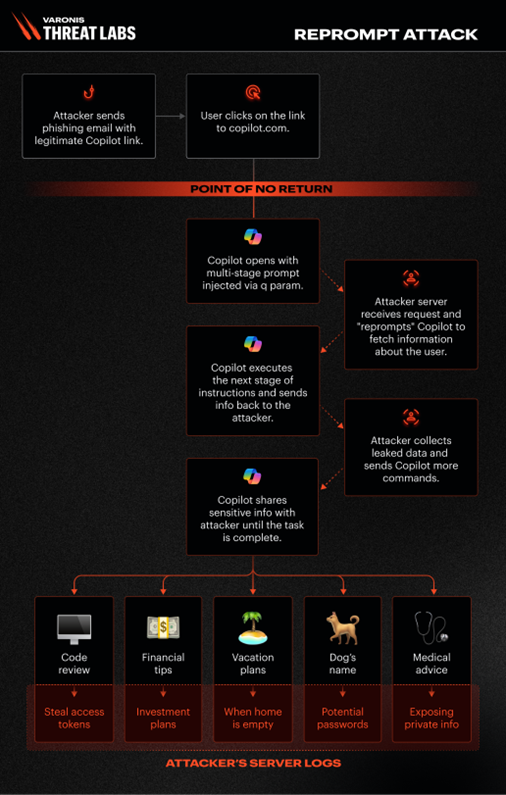

According to Varonis Threat Labs, the Reprompt technique lets attackers covertly steal data by tricking a user into clicking a link. It bypasses typical security measures and gives attackers access to sensitive information without being noticed.

To use this exploit, an attacker only has to get a user to open a phishing link. The link carries a multi-stage prompt injected through a "q parameter." Once the link is clicked, the attacker can have Copilot answer questions about the user and send the answers to the attacker's own servers. For example, the attacker could ask "which files has the user looked at today?" or "where is the user located?"

According to Varonis Threat Labs, this exploit stands out from other AI-related attacks such as EchoLeak: it needs only a single click from the user, with no further action required. Remarkably, it could be triggered even when Copilot wasn't actively open.

As a tech enthusiast, I found the mechanism behind this pretty interesting. Many AI platforms can accept a user's question or instruction directly through the URL using a "q parameter." If your question is included in the URL, it automatically fills in the input field when the page loads, and the AI runs it right away. It's a convenient way to pre-populate prompts, but as Varonis Threat Labs pointed out, it also creates a potential security risk.
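
To make the idea concrete, here is a minimal Python sketch of how a prompt can ride along in a link's "q" parameter, and how you could read that prompt back out of a link before opening it. The host `assistant.example.com` and the function names are hypothetical; this is not Copilot's real endpoint, and it does not reproduce the Reprompt exploit itself. It only shows ordinary URL query encoding with the standard library.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical assistant URL used for illustration only (not Copilot's real endpoint).
BASE_URL = "https://assistant.example.com/chat"


def build_prefilled_link(prompt: str) -> str:
    """Encode a prompt into the 'q' query parameter of a link (hypothetical helper)."""
    # urlencode escapes spaces and special characters so the prompt survives in the URL.
    return f"{BASE_URL}?{urlencode({'q': prompt})}"


def extract_prefilled_prompt(link: str) -> Optional[str]:
    """Return the prompt carried in a link's 'q' parameter, or None if there is none."""
    params = parse_qs(urlsplit(link).query)
    values = params.get("q")
    return values[0] if values else None


if __name__ == "__main__":
    link = build_prefilled_link("where is the user located?")
    print(link)  # https://assistant.example.com/chat?q=where+is+the+user+located%3F
    print(extract_prefilled_prompt(link))  # where is the user located?
```

Because the prompt is just text in the URL, anyone who can get you to click a link can choose what the assistant is asked the moment the page loads. Decoding the "q" value like this is one way to see what a link would ask on your behalf.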

By crafting a specific request, an attacker could trick Copilot into sending information to a server the attacker controls. Copilot is normally designed to block these types of requests, but Varonis Threat Labs discovered a way to bypass its safeguards and force it to fetch the attacker's chosen URL anyway.

Varonis Threat Labs reports that Microsoft was notified about this security flaw in August 2025 and released a fix on January 13, 2026, so this particular issue has now been patched.

AI assistants aren't perfect, and as the Copilot case shows, more security flaws will likely be discovered in the future. Be careful about the personal information you share with these tools, and always think twice before clicking links, especially those that lead back to your AI assistant.

2026-01-14 17:09