Over the past few months, I’ve been experimenting with Ollama, a tool that makes it easy for people like me to run large language models (LLMs) directly on our own PCs, and it works well.

Setting up and using Ollama was never especially difficult, but it did mean living in a terminal window. That’s no longer the case.

Ollama now has an official graphical user interface (GUI) app for Windows. It installs alongside the command-line interface (CLI) components during setup, so you largely no longer need a terminal to talk to your LLMs.

If you prefer, you can still download a CLI-only version of Ollama from its GitHub repository.

Setting up both is straightforward: download Ollama from its website and run the installer. By default it runs in the background, where you can reach it through the system tray or the command line, and you can launch the app from the system tray icon or the Start Menu.
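Because Ollama sits in the background, it can be handy to confirm that it is actually up before pointing anything at it. Here is a minimal sketch, assuming the local server is on its default port, 11434; the function name is my own and not part of Ollama.

```python
import urllib.error
import urllib.request

# Ollama's local server listens on port 11434 by default.
DEFAULT_URL = "http://localhost:11434"


def ollama_is_running(base_url: str = DEFAULT_URL, timeout: float = 2.0) -> bool:
    """Return True if something answers HTTP 200 at base_url, else False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or HTTP error: treat as "not running".
        return False
```

Calling `ollama_is_running()` with no arguments checks the default address. Note that it only proves that something answered on that port, not that it is Ollama.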

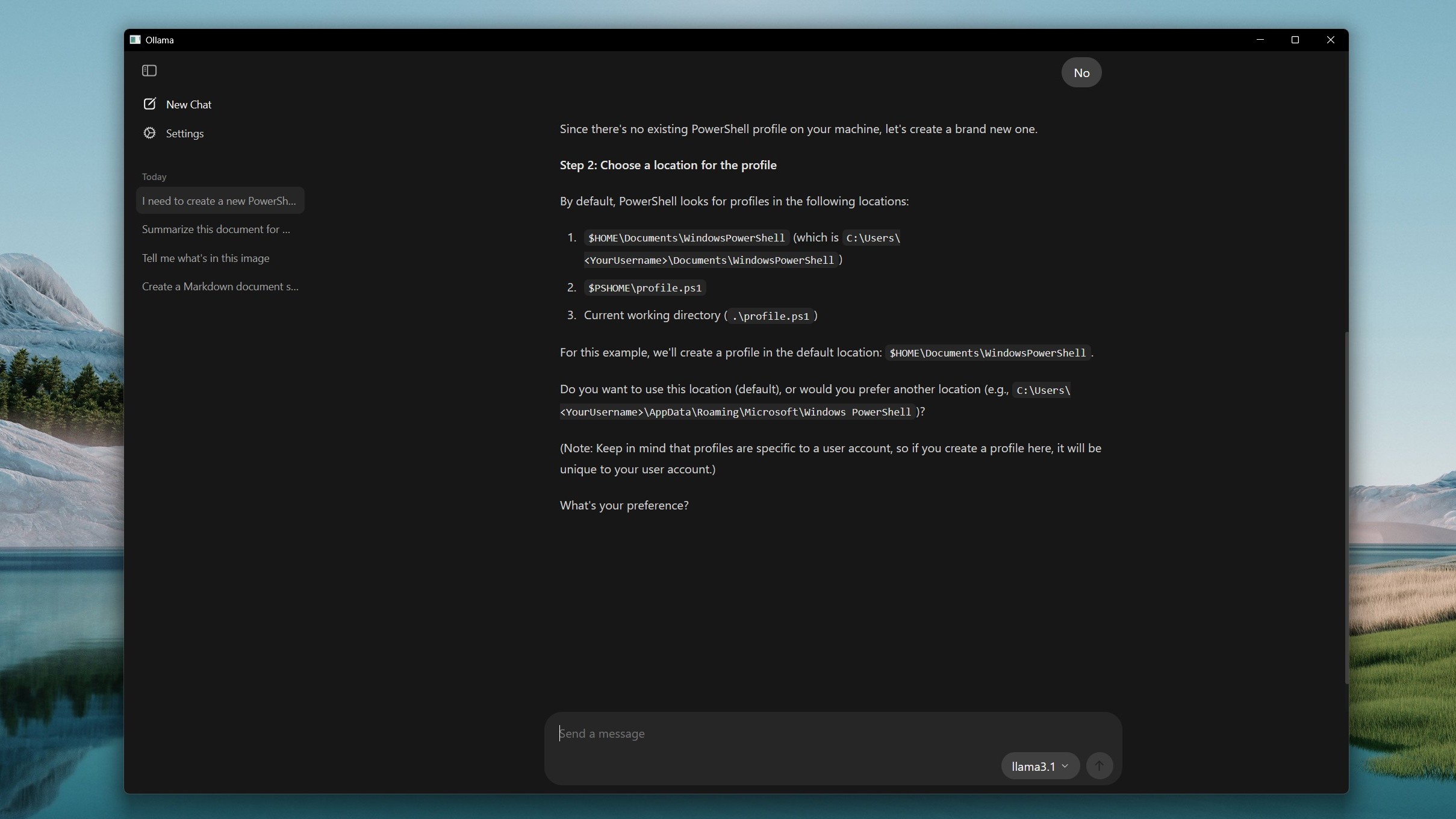

If you’ve used an AI chatbot before, the Ollama app will feel familiar. Type your message into the box, and Ollama responds using whichever model you’ve selected.

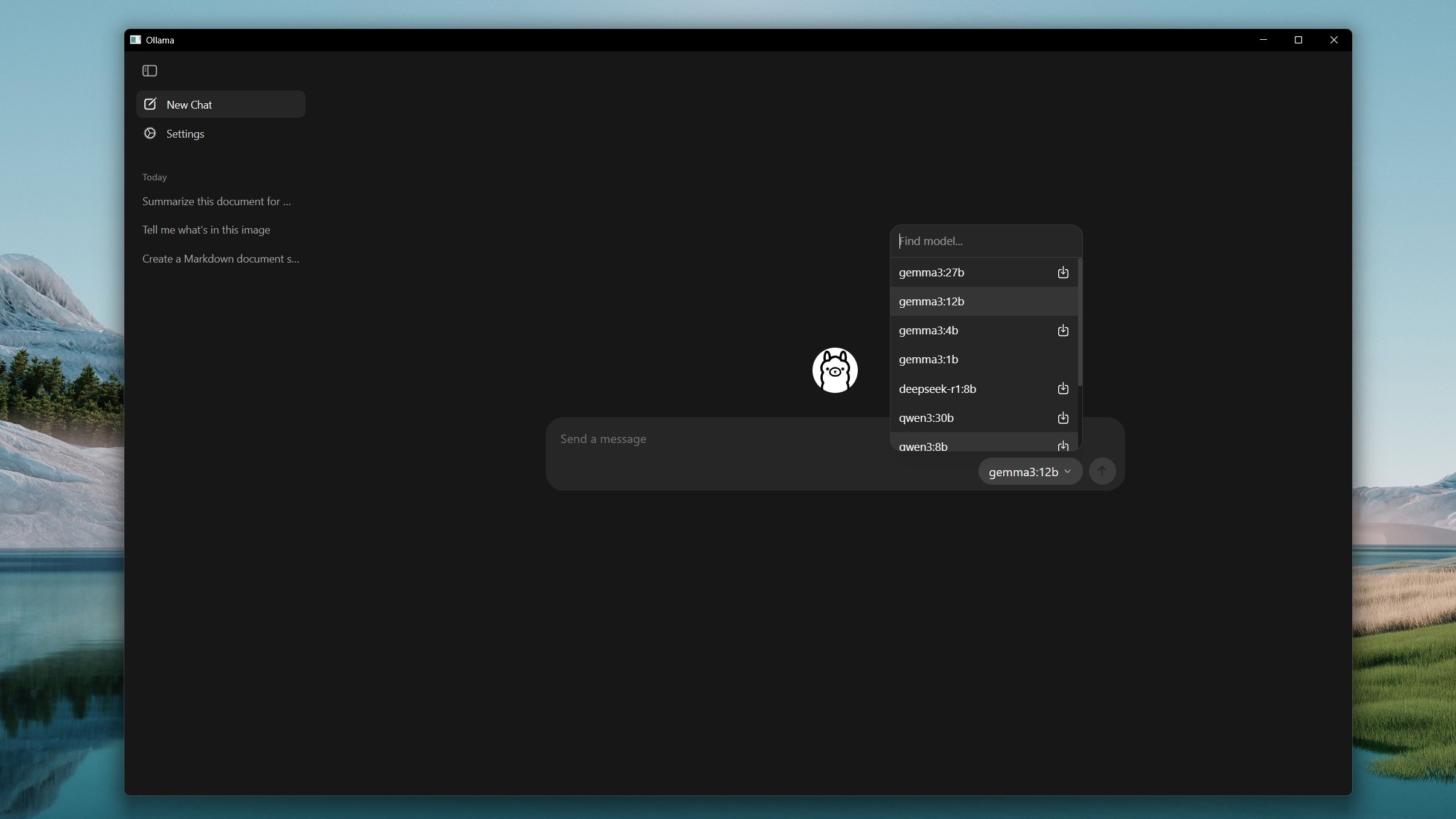

Clicking the icon beside a model you haven’t installed looks as though it should download it, but at present it doesn’t seem to do anything. You can still find models by searching in the drop-down menu.

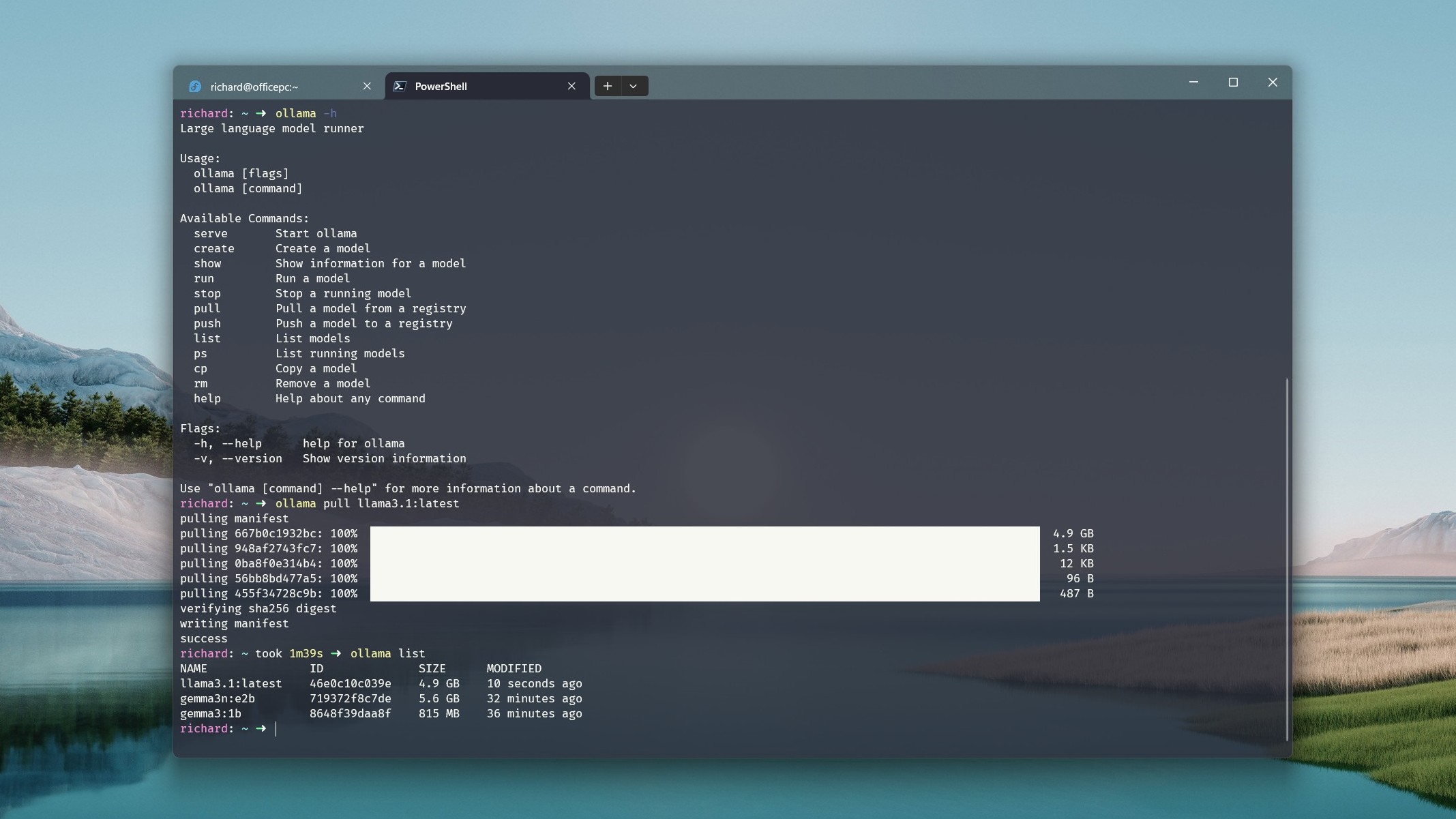

Rather than pulling models from the terminal, I found a workaround: select the model, then try to send it a message. The app then downloads the model first. You can of course still pull models through the terminal, and they’ll show up in the app.
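For anyone who would rather script the terminal route, here is a small sketch that wraps `ollama pull`. The helper names are hypothetical, and `gemma3` in the usage note is only an example tag.

```python
import shutil
import subprocess


def pull_command(model: str) -> list[str]:
    """Build the argument list for `ollama pull <model>`."""
    return ["ollama", "pull", model]


def pull_model(model: str) -> bool:
    """Run `ollama pull`; return False if the CLI is missing or the pull fails."""
    if shutil.which("ollama") is None:
        return False  # the ollama CLI is not on PATH
    result = subprocess.run(pull_command(model), check=False)
    return result.returncode == 0
```

Something like `pull_model("gemma3")` would then fetch the model, after which it should appear in the app’s drop-down like any other installed model.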

The settings are basic, but there’s an option to adjust the size of the context window. For large documents, you can increase the context length with the slider, though understandably this uses more system memory.
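Outside the app, Ollama’s local HTTP API accepts a context-length option called `num_ctx`. The sketch below builds such a request; whether the app’s slider maps onto exactly this option is my assumption, and the function names are illustrative.

```python
import json
import urllib.request

GENERATE_URL = "http://localhost:11434/api/generate"  # default local endpoint


def build_generate_payload(model: str, prompt: str, num_ctx: int) -> dict:
    """Build a non-streaming /api/generate request with an explicit context length."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"num_ctx": num_ctx},  # context window size in tokens
    }


def generate(payload: dict, url: str = GENERATE_URL) -> str:
    """POST the payload to a running Ollama server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, `generate(build_generate_payload("gemma3", "Summarize this document: ...", 8192))` would request an 8,192-token window. As with the slider, a larger value costs more memory.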

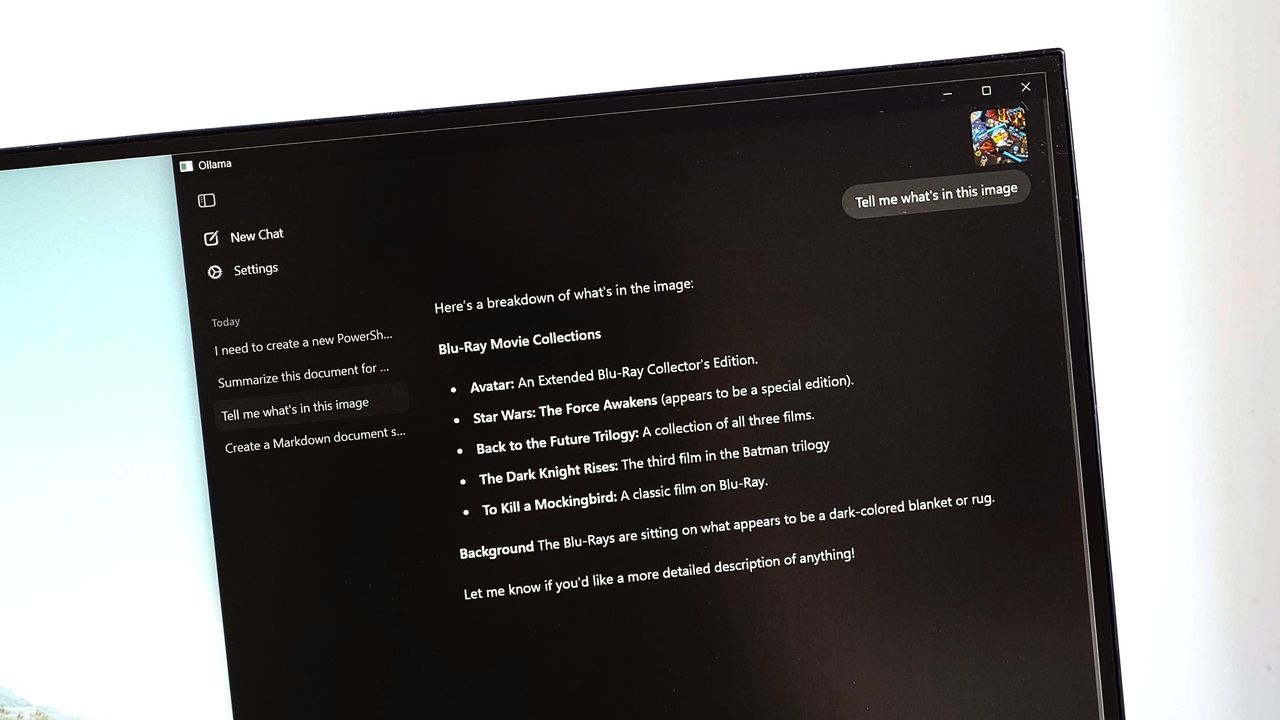

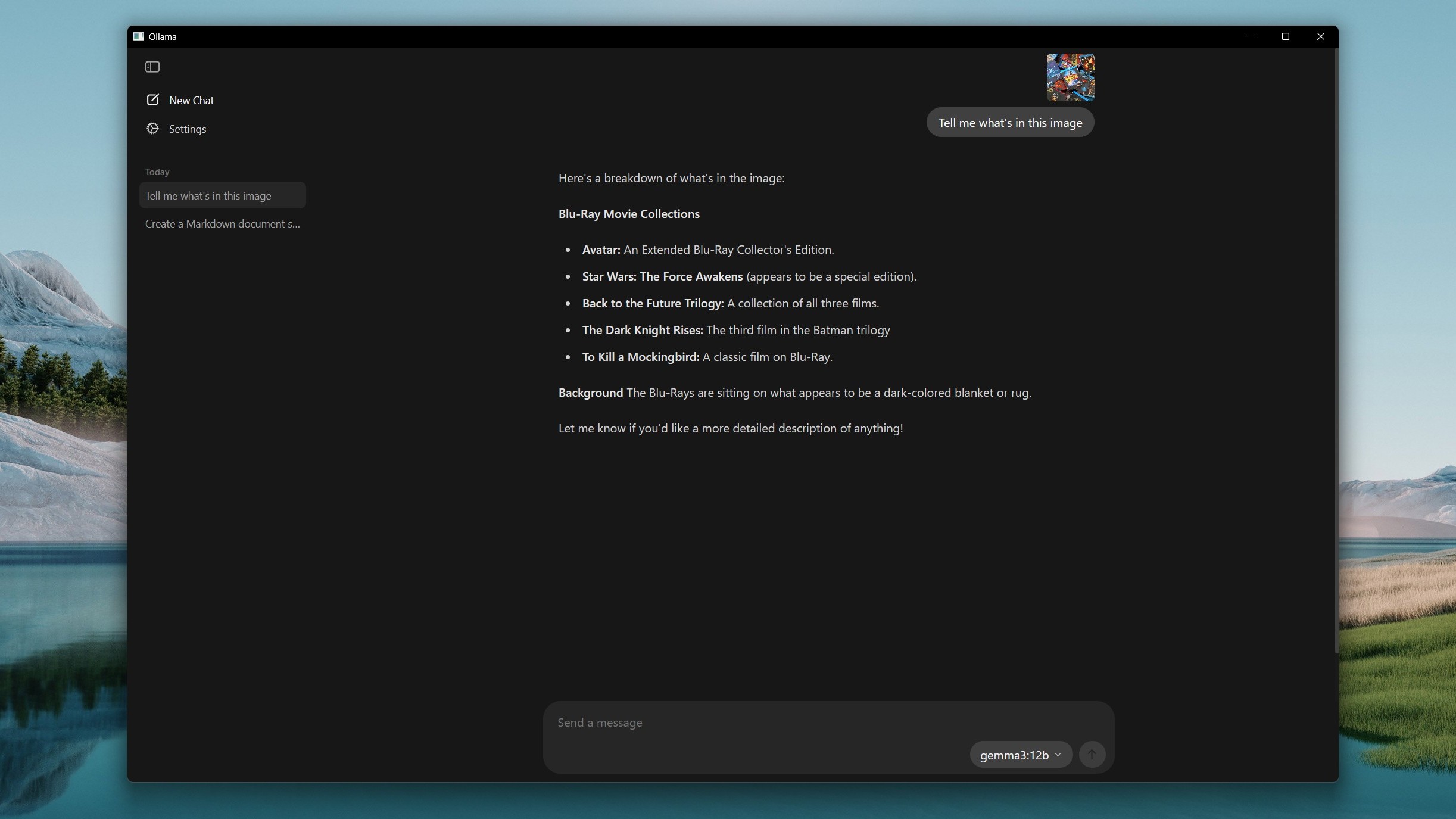

The new Ollama app has some handy features, and the accompanying blog post includes a couple of videos showing them in action. With multimodal support, you can drop images into the app and send them to models that can interpret them, such as Gemma 3.
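The same idea works without the app: the local API’s `/api/generate` endpoint takes base64-encoded images in an `images` list. A minimal sketch, assuming a vision-capable model is installed; the function name is hypothetical.

```python
import base64


def build_image_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a non-streaming /api/generate request that attaches one image."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        # The API expects each image as a base64-encoded string.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
    }
```

You would read the picture with something like `open("photo.png", "rb").read()` and POST the resulting dictionary as JSON to `http://localhost:11434/api/generate`.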


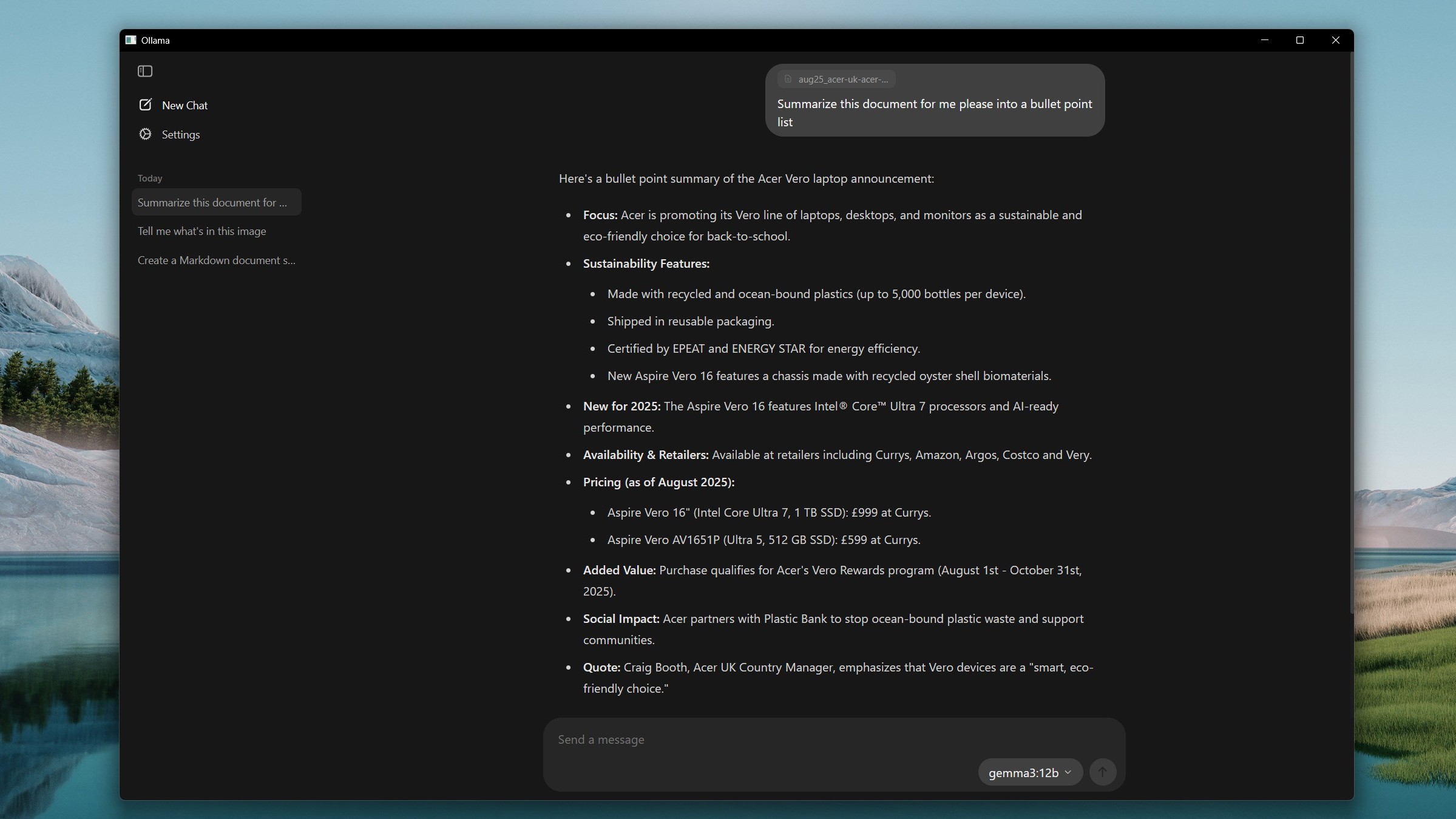

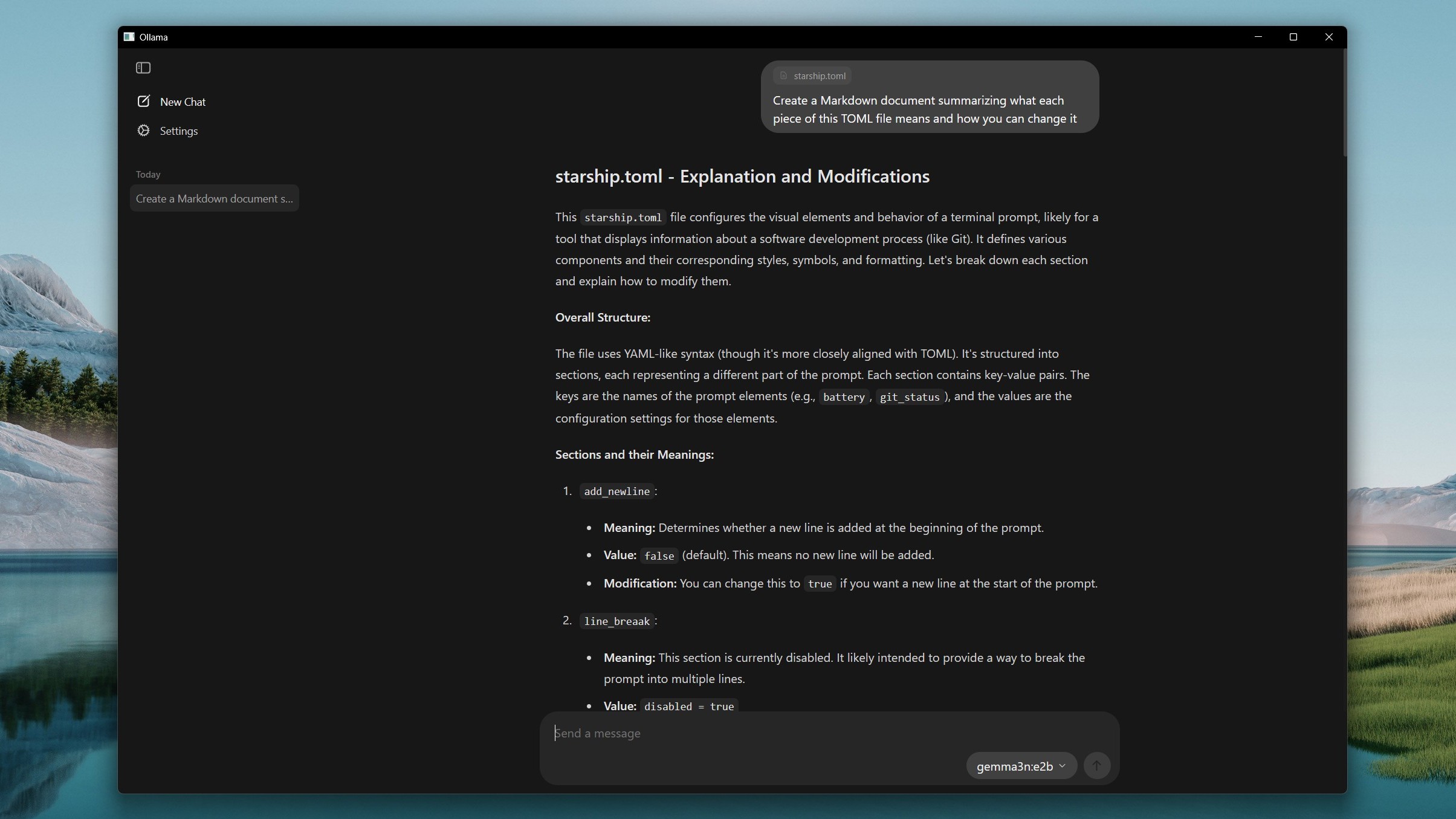

The app can also interpret code files if you drop them into it. For instance, you can ask it to create a Markdown document that explains the code and how to use it.

Dragging and dropping files into the app is far more user-friendly than handing them over through the CLI. You don’t have to find or remember file locations.
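To see what the drag-and-drop is saving you, here is roughly what the manual route looks like: read the file yourself and wrap it in a request for the local `/api/chat` endpoint. The prompt wording and function names are my own illustration, not what the app sends.

```python
from pathlib import Path


def build_doc_request(model: str, filename: str, source: str) -> dict:
    """Build a non-streaming /api/chat request asking for Markdown docs of a file."""
    prompt = (
        "Write a Markdown document that explains the following code "
        f"and how to use it.\n\nFile: {filename}\n\n{source}"
    )
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def build_doc_request_from_path(model: str, path: str) -> dict:
    """Same as build_doc_request, but reads the source from disk first."""
    file_path = Path(path)
    source = file_path.read_text(encoding="utf-8")
    return build_doc_request(model, file_path.name, source)
```

The second helper is where the file path comes back in, which is exactly the step the app lets you skip.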

For now, you’ll still want the CLI for some things: pushing a model, creating models, listing running models, copying, and deleting can only be done through the CLI, not the app.
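As a quick reference, those five tasks correspond to the `push`, `create`, `ps`, `cp`, and `rm` subcommands of the `ollama` CLI. The sketch below maps friendly names to them; the action names and the helper are hypothetical.

```python
def cli_command(action: str, *args: str) -> list[str]:
    """Build the argument list for one of the CLI-only tasks."""
    subcommands = {
        "push": "push",          # upload a model to a registry
        "create": "create",      # build a model from a Modelfile
        "list_running": "ps",    # show models currently loaded
        "copy": "cp",            # duplicate a model under a new name
        "delete": "rm",          # remove a model from disk
    }
    return ["ollama", subcommands[action], *args]
```

For example, `cli_command("copy", "gemma3", "my-gemma")` yields the arguments for `ollama cp gemma3 my-gemma`, which you could hand to `subprocess.run`.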

In my opinion, this is a great step forward for everyday use. Granted, people who have been running local LLMs until now are probably already used to working in a terminal window, and being comfortable in a terminal is a skill I’d always advocate for.

But a built-in, user-friendly GUI makes the tool approachable to a wider range of users. Tools like Open WebUI have done this before, but having the GUI in the core package makes it simpler.

That said, you still need a sufficiently powerful PC to run these models. Ollama does not currently support neural processing units (NPUs), so the work falls to the rest of your system.

For good performance, look for at least an 11th Gen Intel CPU or an AMD CPU based on Zen 4 with AVX-512 support. Aim for 16GB of RAM if you can; Ollama recommends a minimum of 8GB for 7B models. There are also smaller models with lower resource requirements.
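To get a feel for why 8GB is a floor for 7B models, a back-of-the-envelope estimate helps: the weights alone take roughly the parameter count times the bits per weight, divided by eight. The 4-bit figure in the usage note is my assumption about a typical quantized download, not something Ollama’s guidance states, and the estimate ignores the context window and the operating system’s own needs.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (10^9 bytes).

    Ignores the context cache, runtime overhead, and everything else
    competing for memory, so treat the result as a lower bound.
    """
    return params_billions * bits_per_weight / 8
```

By this rough measure, `estimate_weights_gb(7, 4)` comes to 3.5GB for a 4-bit 7B model, while the same model at 16 bits per weight would need about 14GB, which is why quantized models are the practical choice on an ordinary PC.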

A GPU isn’t strictly essential, but having one improves performance, particularly with larger models. The more VRAM your GPU has, the more smoothly those larger models will run.

Local LLMs are well worth a try if your computer can handle them. Because they don’t need an internet connection, you can use them offline, and you aren’t relying on a giant AI server farm in the cloud.

The Ollama app doesn’t have web search built in. If you really need it there are workarounds, but they involve something other than the Ollama app. Still, I recommend downloading it and trying it out; it could become your preferred AI chatbot.


2025-08-05 15:42