Author: Denis Avetisyan

A new quantum-inspired algorithm is showing promise in the complex task of estimating key parameters that define the evolution and composition of the universe.

This paper details the development and application of an Amplitude-Encoded Quantum Genetic Algorithm for precise cosmological parameter estimation using Supernovae, Baryon Acoustic Oscillations, and Cosmic Microwave Background data.

Estimating cosmological parameters with precision remains a significant challenge given the complexity of modern astronomical datasets. This is addressed in ‘A Quantum Genetic Algorithm with application to Cosmological Parameters Estimation’, which introduces an Amplitude-Encoded Quantum Genetic Algorithm (AEQGA) designed to optimize the inference of parameters like the Hubble Constant and matter density from observations of Supernovae Type Ia, Baryon Acoustic Oscillations, and the Cosmic Microwave Background. The study demonstrates that AEQGA achieves consistent results with classical algorithms while offering a potentially advantageous approach to optimization within cosmological analysis. Could quantum-inspired optimization techniques unlock new insights into the fundamental properties of our universe and accelerate cosmological discovery?

The Universe’s Whispers: Precision in a Noisy Cosmos

The universe’s past, present, and future are inextricably linked to a precise understanding of its fundamental parameters. Quantities like the Hubble Constant (H_0), which describes the current rate of cosmic expansion, and the matter density (\Omega_M), representing the universe’s total material content, act as crucial cornerstones in cosmological models. Accurately determining these values isn’t merely an exercise in precision; it directly informs our understanding of the universe’s age, its ultimate fate (continued expansion, eventual collapse, or a balanced equilibrium), and the processes that shaped the large-scale structures observed today, such as galaxies and galaxy clusters. Without refined estimates of these parameters, cosmological simulations and theoretical predictions would lack the necessary grounding in observational reality, hindering the ability to test and refine models of the cosmos.

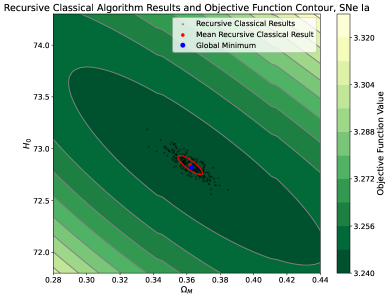

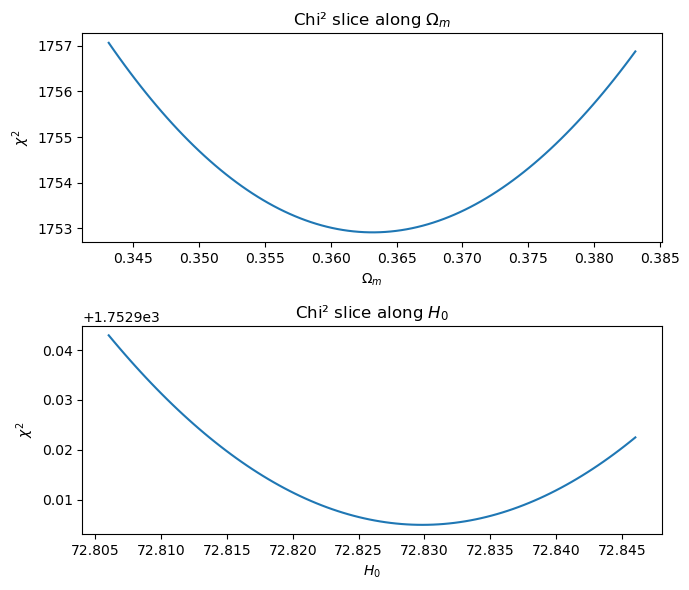

Cosmological research fundamentally depends on comparing theoretical models of the universe to observational data, a process achieved through the optimization of an objective function. This function mathematically quantifies the discrepancy between predictions and observations from sources like Type Ia Supernovae (SNeIa), which serve as ‘standard candles’ for measuring cosmic distances; Baryon Acoustic Oscillations (BAO), representing characteristic patterns in the distribution of matter; and the Cosmic Microwave Background (CMB), the afterglow of the Big Bang. By minimizing this objective function, researchers seek the set of cosmological parameters, such as the Hubble Constant and matter density, that best fit the available evidence, effectively sculpting a coherent picture of the universe’s history and composition. The precision with which these parameters are determined directly impacts the accuracy of cosmological models and the understanding of dark energy, dark matter, and the universe’s ultimate fate.
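As a rough illustration of what such an objective function can look like, the sketch below computes a chi-square for supernova distance moduli in a flat ΛCDM model. It is not the paper's likelihood: the function names, the trapezoidal integration, and the five mock data points (generated from assumed values H_0 = 70 km/s/Mpc and \Omega_M = 0.3, with an assumed error of 0.1 mag) are all illustrative choices.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s


def luminosity_distance(z, h0, omega_m, steps=200):
    """Luminosity distance in Mpc for flat LambdaCDM (trapezoidal rule, z > 0)."""
    def inv_e(zp):
        # 1 / E(z), with dark energy density fixed to 1 - omega_m (flatness).
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + (1.0 - omega_m))

    dz = z / steps
    integral = 0.5 * (inv_e(0.0) + inv_e(z))
    for k in range(1, steps):
        integral += inv_e(k * dz)
    integral *= dz
    return (1.0 + z) * (C_KM_S / h0) * integral


def distance_modulus(z, h0, omega_m):
    """Distance modulus mu = 5 log10(d_L / Mpc) + 25."""
    return 5.0 * math.log10(luminosity_distance(z, h0, omega_m)) + 25.0


def chi_square(params, data):
    """Sum of squared, error-weighted residuals between data and model."""
    h0, omega_m = params
    return sum(
        ((mu_obs - distance_modulus(z, h0, omega_m)) / sigma) ** 2
        for z, mu_obs, sigma in data
    )


# Hypothetical noise-free mock data: (redshift, observed mu, error on mu).
TRUE_PARAMS = (70.0, 0.3)
mock_data = [
    (z, distance_modulus(z, *TRUE_PARAMS), 0.1)
    for z in (0.1, 0.3, 0.5, 0.8, 1.0)
]

chi2_at_truth = chi_square(TRUE_PARAMS, mock_data)
chi2_elsewhere = chi_square((65.0, 0.4), mock_data)
```

Any optimizer, classical or quantum-inspired, would then be asked to find the `params` that minimize `chi_square`. A real analysis would add BAO and CMB terms and use the full covariance of the data rather than independent errors.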

The pursuit of ever-more-precise cosmological parameters is running into a significant wall: computational cost. As models attempt to incorporate a greater degree of physical realism, including factors like evolving dark energy, neutrino masses, and complex interactions within the early universe, the number of variables to be estimated increases dramatically. This leads to an exponential growth in the computational resources required to explore the parameter space and accurately determine the best-fit values. Traditional methods, relying on iterative optimization of an objective function, become increasingly prohibitive, demanding vast supercomputing time and energy. Consequently, advancements in statistical techniques, such as Approximate Bayesian Computation and novel sampling algorithms, are essential to overcome these limitations and continue refining H_0 and \Omega_M with the precision demanded by modern cosmology.

Beyond Classical Limits: Quantum Steps in Cosmic Analysis

Classical cosmological data analysis, involving tasks such as parameter estimation from cosmic microwave background data or large-scale structure surveys, is often constrained by computational complexity. These analyses frequently require exploring vast parameter spaces and evaluating computationally expensive likelihood functions, leading to substantial processing times and potential limitations in accurately modeling complex cosmological scenarios. Quantum computing offers a potential solution by exploiting quantum mechanical phenomena like superposition and entanglement to perform certain calculations exponentially faster than their classical counterparts. Specifically, quantum algorithms could accelerate the optimization of the likelihood function, enabling more efficient exploration of parameter spaces and ultimately improving the precision and speed of cosmological parameter estimation. This advantage is particularly relevant for analyses involving a large number of parameters or complex models where classical methods become intractable.

The Quantum Genetic Algorithm (QGA) is a computational method that combines elements of classical Genetic Algorithms with principles of quantum computing. Specifically, QGA utilizes quantum phenomena – such as superposition and entanglement – to represent and manipulate potential solutions within a population. This differs from traditional Genetic Algorithms which rely on classical bits to encode parameters; QGA instead employs quantum bits, or qubits. The algorithm maintains a population of candidate solutions encoded as quantum states, evolving them through quantum operators analogous to selection, crossover, and mutation found in classical Genetic Algorithms. This hybrid approach aims to accelerate the optimization process and potentially explore a larger solution space compared to purely classical methods, by exploiting the inherent parallelism offered by quantum mechanics.
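To make the idea of evolving a population of quantum-like states more concrete, here is a minimal, purely classical simulation of a quantum-inspired genetic algorithm. It is a toy built on stated assumptions, not the AEQGA described in the paper: each gene is a single angle whose squared sine gives the probability of reading a 1, individuals are "measured" to obtain classical bit strings, and every angle is then rotated toward the best string seen so far. Crossover and mutation are omitted for brevity, and the function names, population size, rotation step, and test cost function are all hypothetical.

```python
import math
import random


def decode(bits, lo, hi):
    """Map a list of 0/1 bits to a real value in [lo, hi]."""
    value = int("".join(str(b) for b in bits), 2)
    return lo + (hi - lo) * value / (2 ** len(bits) - 1)


def qiga_minimize(cost, lo, hi, n_bits=12, pop_size=20, generations=80,
                  step=0.05 * math.pi, seed=0):
    """Toy quantum-inspired search for the minimum of a 1-D cost function."""
    rng = random.Random(seed)
    # Keep angles away from 0 and pi/2 so no bit ever becomes fully certain.
    theta_min, theta_max = 0.05, math.pi / 2 - 0.05
    # theta = pi/4 gives P(bit = 1) = sin(theta)^2 = 0.5: an equal superposition.
    population = [[math.pi / 4] * n_bits for _ in range(pop_size)]
    best_bits, best_cost = None, float("inf")

    for _ in range(generations):
        for individual in population:
            # "Measure" the individual: collapse each gene to a classical bit.
            bits = [1 if rng.random() < math.sin(t) ** 2 else 0
                    for t in individual]
            c = cost(decode(bits, lo, hi))
            if c < best_cost:
                best_bits, best_cost = bits, c
        for individual in population:
            for j, best_bit in enumerate(best_bits):
                # Rotation step: nudge each angle toward the best bit so far.
                shift = step if best_bit == 1 else -step
                individual[j] = min(theta_max,
                                    max(theta_min, individual[j] + shift))

    return decode(best_bits, lo, hi), best_cost


# Hypothetical test problem: a parabola with its minimum at x = 0.3.
best_x, best_cost = qiga_minimize(lambda x: (x - 0.3) ** 2, 0.0, 1.0)
```

Replacing the parabola with a chi-square over cosmological parameters would require one bit string per parameter; the measure-then-rotate loop itself would stay the same.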

Amplitude encoding is a quantum data encoding technique in which classical data is embedded into the probability amplitudes of a quantum state. Specifically, a classical vector x = (x_1, x_2, ..., x_N) of N real numbers is mapped to a quantum state |ψ_x⟩ = Σ_{i=1}^N α_i |i⟩, where each amplitude α_i = x_i / ‖x‖ is the corresponding element x_i divided by the Euclidean norm of x. This division enforces the normalization condition Σ_{i=1}^N |α_i|^2 = 1, so the squared amplitudes form a valid probability distribution. Because n qubits span 2^n basis states, N values can be represented using only ⌈log_2(N)⌉ qubits (padding with zeros when N is not a power of two), offering a potential exponential reduction in the number of qubits required compared to representing the data directly in computational basis states. This compact encoding is important for quantum algorithms applied to optimization problems, as it enables efficient data storage and manipulation within the quantum processor.
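The mapping can be checked on a small classical example. The sketch below only computes the amplitudes a state-preparation routine would have to load; it does not touch any quantum hardware or library, and the function name `amplitude_encode` and the sample vector are hypothetical.

```python
import math


def amplitude_encode(x):
    """Return (amplitudes, n_qubits) for a real vector x.

    The vector is zero-padded to the next power of two and divided by its
    Euclidean norm, so the squared amplitudes sum to 1.
    """
    # Smallest n with 2**n >= len(x); at least one qubit.
    n_qubits = max(1, (len(x) - 1).bit_length())
    dim = 2 ** n_qubits
    padded = list(x) + [0.0] * (dim - len(x))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0.0:
        raise ValueError("cannot encode the zero vector")
    return [v / norm for v in padded], n_qubits


# Three values need two qubits (four basis states, one of them unused).
amplitudes, n_qubits = amplitude_encode([3.0, 0.0, 4.0])
probabilities = [a * a for a in amplitudes]
```

Note that the normalization discards the overall scale of x, so the norm has to be stored separately if the original values are to be recovered.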

The application of the Quantum Genetic Algorithm (QGA) to the optimization of the objective function is presented as improving the speed and accuracy of parameter estimation. Results demonstrate that the parameter values obtained via QGA are consistent, within one standard deviation (1\sigma), with the known true values. This is achieved by leveraging quantum phenomena to drive the genetic algorithm’s search process, which is intended to enable more efficient exploration of the parameter space and faster convergence to optimal solutions. Agreement within 1\sigma means the estimates are statistically compatible with the true values, which supports the use of QGA for cosmological data analysis.

The Rigor of Measurement: Discerning Signal from the Noise

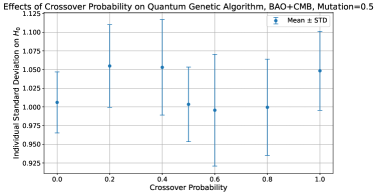

A comprehensive error analysis is a fundamental component of validating the results generated by the Quantum Genetic Algorithm. This process involves systematically identifying and quantifying potential sources of error, including algorithmic approximations, computational limitations, and data uncertainties. Rigorous error analysis establishes confidence intervals for the estimated parameters, allowing for a statistically sound assessment of the algorithm’s performance and the reliability of its outputs. Without a thorough evaluation of these error sources, the interpretation of results and the validity of any conclusions drawn from the Quantum Genetic Algorithm are compromised.

The Fisher matrix is a fundamental tool in statistical inference used to characterize the curvature of the likelihood function around its maximum. This curvature directly relates to the precision with which parameters can be estimated; a larger curvature indicates greater precision. Specifically, the inverse of the Fisher matrix approximates the covariance matrix of the estimated parameters (by the Cramér-Rao bound it is a lower limit on that covariance, and it is exact for a Gaussian likelihood), quantifying the uncertainty associated with each parameter and the correlations between them. By calculating the Fisher matrix, researchers can forecast confidence intervals for parameter estimates and check whether the uncertainties recovered by an analysis are plausible. In the context of the Quantum Genetic Algorithm, the Fisher matrix provides an independent reference against which the recovered parameters and their uncertainties can be validated.
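The relationship between curvature and uncertainty can be sketched numerically. Assuming a Gaussian likelihood, so that the Fisher matrix is half the Hessian of \chi^2 at the best fit, the snippet below estimates it with central finite differences and inverts it to obtain a covariance matrix. The toy \chi^2, its best-fit point, and the widths 1.5 and 0.02 are made-up numbers for illustration, not results from the paper.

```python
import math


def fisher_matrix(chi2, best_fit, h=1e-3):
    """F_ij = 0.5 * d^2 chi2 / (d theta_i d theta_j), by central differences."""
    n = len(best_fit)

    def shifted(i, di, j, dj):
        # Evaluate chi2 with parameter i moved by di and parameter j by dj.
        p = list(best_fit)
        p[i] += di
        p[j] += dj
        return chi2(p)

    fisher = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            second = (shifted(i, h, j, h) - shifted(i, h, j, -h)
                      - shifted(i, -h, j, h) + shifted(i, -h, j, -h))
            fisher[i][j] = 0.5 * second / (4.0 * h * h)
    return fisher


def invert_2x2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def toy_chi2(params):
    """Hypothetical chi-square: uncorrelated Gaussian in (H_0, Omega_M)."""
    h0, omega_m = params
    return ((h0 - 70.0) / 1.5) ** 2 + ((omega_m - 0.3) / 0.02) ** 2


fisher = fisher_matrix(toy_chi2, [70.0, 0.3])
covariance = invert_2x2(fisher)
sigma_h0 = math.sqrt(covariance[0][0])       # 1-sigma forecast for H_0
sigma_omega_m = math.sqrt(covariance[1][1])  # 1-sigma forecast for Omega_M
```

For this toy case the recovered widths should match the 1.5 and 0.02 built into `toy_chi2`. The off-diagonal entries of `covariance` carry the parameter correlations, which set the tilt of the confidence ellipses.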

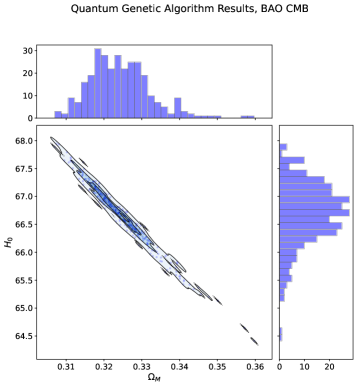

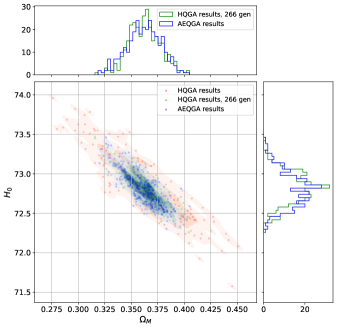

Parameter estimation precision was evaluated by comparing the analytical contours recovered with the Amplitude-Encoded Quantum Genetic Algorithm (AEQGA) against those predicted by the Fisher matrix approximation. The two sets of contours closely align, indicating that the AEQGA recovers both the parameter values and the size of their uncertainties reliably. This consistency validates the algorithm’s performance in scenarios requiring accurate parameter inference and uncertainty quantification.

Analysis of the AEQGA results also demonstrates the capacity to accurately determine parameter covariance: the agreement between the recovered contours and the Fisher matrix approximation extends to the correlations between parameters. Quantitatively, the AEQGA-derived parameter estimates fall within the 1σ confidence interval. This level of agreement supports the statistical rigor of the AEQGA methodology and its reliability in inferring parameter relationships.

A Universe Refined: Quantum Horizons in Cosmological Discovery

HQGA, or Hybrid Quantum-Gravitational Algorithm, represents a tangible step towards harnessing quantum computation for cosmological challenges. This innovative framework blends classical optimization techniques with the power of quantum annealing, specifically designed to tackle the complex parameter estimation inherent in modern cosmology. Unlike purely classical methods, HQGA leverages quantum fluctuations to efficiently navigate high-dimensional parameter spaces, searching for the optimal fit between theoretical models and observational data – such as those derived from the cosmic microwave background and large-scale structure. By encoding cosmological parameters into a quantum Hamiltonian, the algorithm exploits quantum tunneling to escape local minima, potentially uncovering more accurate and robust estimations of fundamental cosmological quantities and offering a pathway to resolve ongoing debates surrounding the universe’s composition and evolution.

Current cosmological models, while remarkably successful, face persistent tensions in parameter estimation – discrepancies that suggest potential gaps in understanding the universe’s fundamental properties. Quantum-enhanced optimization, particularly through implementations like HQGA, offers a pathway to address this challenge by dramatically increasing computational efficiency. This advancement allows researchers to explore significantly more complex models, incorporating a greater number of variables and interactions than previously feasible. By efficiently navigating vast parameter spaces, HQGA facilitates more precise estimations of cosmological parameters, potentially resolving existing tensions and offering a more refined picture of the universe’s composition, expansion rate, and underlying physics. The ability to rigorously test and refine these models holds the key to unlocking a deeper understanding of dark energy, dark matter, and the universe’s ultimate fate.

Recent advancements in quantum-enhanced optimization, specifically through frameworks like HQGA, could change how cosmological investigations are carried out. These techniques aim to improve the precision with which fundamental cosmological parameters (those governing the universe’s expansion history, the nature of dark matter, and the properties of dark energy) can be determined. Studies indicate consistent parameter estimation within a 1σ margin of error from established values, in line with the results of classical algorithms. If that agreement is maintained as the methods scale, they could facilitate the exploration of increasingly complex cosmological models and offer the potential to resolve existing tensions in parameter estimation, ultimately leading to a more complete and nuanced understanding of the cosmos and its underlying constituents.

The pursuit of cosmological parameters, as detailed in this study, exemplifies the inherent limitations of any model attempting to encapsulate the universe. Each iteration of the Amplitude-Encoded Quantum Genetic Algorithm, while promising in its optimization of values like the Hubble Constant (H_0) and matter density (\Omega_M), remains a constructed approximation of reality. As Nikola Tesla observed, “The true mysteries of the universe are revealed to those who dare to question the established order.” This sentiment resonates with the core of the research; the algorithm isn’t discovering truth, but rather refining a representation, a mirror reflecting, and potentially distorting, the cosmos. The study implicitly acknowledges this fragility, suggesting that even advanced computational methods are susceptible to the ‘event horizon’ of incomplete information.

The Horizon Beckons

The application of quantum-enhanced genetic algorithms to cosmological parameter estimation, as demonstrated, represents a technical advance. Yet, it is crucial to acknowledge that improved precision in determining values for \Omega_M and H_0 does not necessarily bring the universe into sharper focus. The cosmos generously shows its secrets to those willing to accept that not everything is explainable. Each refinement of these parameters simply clarifies the boundaries of what remains unknown, revealing ever more subtle layers of complexity and, perhaps, the inherent limitations of the methodologies employed.

Future work will undoubtedly focus on scaling these algorithms to tackle even more intricate cosmological models, incorporating data from gravitational waves and large-scale structure surveys. However, the true challenge lies not merely in computational power, but in confronting the possibility that the universe’s fundamental nature may be inherently resistant to complete description. The pursuit of ever-greater accuracy risks mistaking the map for the territory.

Black holes are nature’s commentary on our hubris. This research, while offering a powerful tool for exploration, should serve as a reminder that the most profound discoveries often come not from solving the puzzles, but from recognizing the elegance of their insolubility. The horizon of knowledge, much like that of a black hole, is a boundary that perpetually recedes with each step forward.

Original article: https://arxiv.org/pdf/2602.15459.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 10:43