Author: Denis Avetisyan

A new framework harnesses the power of flow-based generative models and reward-guided learning to efficiently discover optimal solutions for complex geometric problems.

FlowBoost leverages closed-loop optimization and conditional flow matching to directly optimize geometric structures based on defined reward signals.

Discovering extremal configurations in mathematics is often hampered by intractable, nonconvex search spaces where traditional analytical methods fail. This limitation motivates ‘Flow-based Extremal Mathematical Structure Discovery’, which introduces FlowBoost, a closed-loop generative framework that efficiently navigates these landscapes using flow-based models and reward-guided learning. FlowBoost directly optimizes the generation process while enforcing geometric feasibility, achieving substantial improvements in both solution quality and computational efficiency compared to existing approaches. Could this paradigm shift unlock previously inaccessible mathematical structures and accelerate discovery across diverse optimization problems?

The Algorithmic Bottleneck: Beyond Brute Force Simulation

Numerous challenges in fields like engineering, drug discovery, and robotics necessitate detailed simulations to assess the viability of different solutions. These simulations, however, often involve intricate models and numerous variables, quickly escalating computational demands. Consider designing a novel aircraft wing – evaluating its performance requires simulating airflow, stress, and material fatigue under various conditions. Or, in pharmaceutical research, predicting how a drug will interact with the human body demands simulating complex biological processes. This reliance on computationally expensive simulations creates a significant bottleneck, limiting the number of designs or scenarios that can be tested and slowing down the pace of innovation. The core issue isn’t simply a lack of computing power, but the inherent complexity of accurately modeling real-world phenomena, demanding substantial resources even for a single evaluation.

Conventional optimization techniques often falter on such simulations because of high-dimensional search spaces and noisy evaluations. As the number of variables grows, the search space expands exponentially. Its landscape becomes a vast, rugged terrain where local optima frequently mislead algorithms. Many simulations are also stochastic rather than deterministic. This produces a ‘noisy’ signal that obscures the true gradient and makes it hard to tell genuinely promising solutions from random fluctuations. Together, dimensionality and noise undermine gradient descent and make exhaustive search infeasible. New approaches are therefore needed that can explore these environments robustly and find optimal or near-optimal solutions in reasonable time.

Modern challenges increasingly rely on computationally intensive simulations, from designing novel materials and optimizing logistical networks to developing robust artificial intelligence. The ability to learn efficiently within simulated environments is therefore critical rather than merely desirable. Methods that adapt quickly and discover effective solutions by interacting with a simulated world could accelerate progress in drug discovery, robotics, and climate modeling. They would do so by sidestepping the limits of real-world experimentation and reducing the need for exhaustive, computationally expensive searches.

FlowBoost: A Generative Framework for Efficient Exploration

FlowBoost addresses the inefficiency of traditional simulation-based optimization with a closed-loop generative framework driven by reward signals. Conventional methods often require many full evaluations of candidate solutions, which creates a computational bottleneck. FlowBoost instead learns a generative model that proposes candidate solutions directly, guided by a reward function that quantifies solution quality. Because the model concentrates its samples where the reward is high, it needs fewer costly evaluations to reach high-quality solutions. ‘Closed-loop’ refers to the continuous feedback between the generative model and the evaluator: each round of scored samples is used to refine the model, which then proposes the next round.

FlowBoost builds on conditional flow matching, a generative modeling technique. It learns a time-dependent velocity field that transports a simple prior distribution, such as a standard Gaussian, to the distribution of high-reward solutions. A neural network is trained to predict the velocity that moves a sample toward its target at intermediate points along a path between a prior sample and a target solution. The loss is the squared error between this prediction and the path's known target velocity. This regression never has to simulate the flow during training, so it is cheap to optimize. New designs are then generated by integrating the learned velocity field starting from prior samples. This is a short numerical ODE solve rather than an iterative solve of governing equations. Unlike traditional optimizers that refine one candidate at a time, the approach learns a direct mapping from noise to solutions, which lowers computational cost and enables rapid exploration of the design space.
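The training objective and the sampling step described above can be sketched in a few lines. This sketch assumes the straight-line probability path x_t = (1 - t) x0 + t x1, whose target velocity is x1 - x0. That path is a common choice in conditional flow matching, but the summary does not confirm it is FlowBoost's path. The names `cfm_loss`, `sample_batch`, and `generate` are hypothetical. `velocity_fn` stands in for the neural network and can be any callable.

```python
import random

def interpolate(x0, x1, t):
    """Point on the straight-line path x_t = (1 - t) * x0 + t * x1 (assumed path)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]

def cfm_loss(velocity_fn, x0s, x1s, ts):
    """Mean squared error between predicted and target conditional velocities.

    For the straight-line path, the target velocity at x_t is x1 - x0.
    """
    total = 0.0
    for x0, x1, t in zip(x0s, x1s, ts):
        xt = interpolate(x0, x1, t)
        target = [b - a for a, b in zip(x0, x1)]
        pred = velocity_fn(xt, t)
        total += sum((p - u) ** 2 for p, u in zip(pred, target))
    return total / len(ts)

def sample_batch(x1s, rng):
    """Pair each high-reward sample x1 with Gaussian noise x0 and a uniform time t."""
    x0s = [[rng.gauss(0.0, 1.0) for _ in x1] for x1 in x1s]
    ts = [rng.random() for _ in x1s]
    return x0s, ts

def generate(velocity_fn, x0, steps=100):
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with forward Euler steps."""
    x, dt = list(x0), 1.0 / steps
    for k in range(steps):
        v = velocity_fn(x, k * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

# Usage: evaluate the loss of a trivial (zero) velocity field on two target samples.
rng = random.Random(0)
x1s = [[1.0, 2.0], [0.5, -1.0]]
x0s, ts = sample_batch(x1s, rng)
print(cfm_loss(lambda x, t: [0.0 for _ in x], x0s, x1s, ts))
```

In a real system `velocity_fn` would be a network whose parameters are updated by gradient descent on `cfm_loss`. Only `generate` involves stepping through time, and it runs at sampling time, not during training.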

Geometry-aware sampling biases FlowBoost's generative process toward feasible regions of the solution space. This reduces the number of invalid samples that violate the problem's constraints, such as overlapping circles in a packing. It works by building geometric information directly into the sampling distribution, which raises the probability of producing valid configurations. Proximal relaxation complements this by recasting hard constraints as soft penalties in the reward function. The model can then explore near-feasible solutions instead of being penalized outright for every minor violation. Together, the two mechanisms focus the search on a constrained yet explorable region of the solution space, which speeds convergence and lowers computational cost.
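To make the soft-penalty idea concrete, here is a minimal sketch of a penalized reward for circle packing. It assumes circles placed in the unit square with the objective of maximizing the sum of radii. The container, the penalty form, and the weight `lam` are illustrative assumptions; the summary does not give FlowBoost's actual reward. The function names `packing_violation` and `penalized_reward` are hypothetical.

```python
import math
from itertools import combinations

def packing_violation(centers, radii):
    """Total amount by which circles leave the unit square or overlap (0.0 if feasible)."""
    v = 0.0
    for (x, y), r in zip(centers, radii):
        # Containment in [0, 1]^2: each wall contributes its penetration depth.
        v += max(0.0, r - x) + max(0.0, x + r - 1.0)
        v += max(0.0, r - y) + max(0.0, y + r - 1.0)
    for i, j in combinations(range(len(radii)), 2):
        # Non-overlap: centers must be at least r_i + r_j apart.
        gap = math.dist(centers[i], centers[j]) - (radii[i] + radii[j])
        v += max(0.0, -gap)
    return v

def penalized_reward(centers, radii, lam=10.0):
    """Sum of radii minus a soft penalty (hypothetical weight lam) for violations."""
    return sum(radii) - lam * packing_violation(centers, radii)
```

A small `lam` lets the model move through slightly infeasible configurations. A larger `lam` pushes it back toward feasibility. Whatever schedule is used, a reported packing should still pass `packing_violation(...) == 0.0` before its sum of radii is compared against known results.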

Refining the Generative Process: A Cycle of Learning

FlowBoost uses reward-guided fine-tuning to shape the generative model's output distribution directly during training. A reward is computed for each generated solution, and this signal is used to adjust the model's parameters by gradient descent. The reward favors solutions with desirable properties and steers the model toward high-reward regions of the solution space. This helps keep the model from converging to suboptimal or collapsed states in which diversity is lost. The result is the discovery of improved configurations and a steady gain in the quality of the generated outputs.
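One common way to turn rewards into a training signal is reward-weighted regression. Each sample's loss, for example its flow-matching error, is weighted by a softmax of its reward. The sketch below shows that weighting. It is a swapped-in, well-known technique, not necessarily FlowBoost's exact fine-tuning objective. The temperature `beta` and the function names are hypothetical.

```python
import math

def reward_weights(rewards, beta=1.0):
    """Normalized weights w_i proportional to exp(beta * r_i)."""
    m = max(rewards)  # subtract the max reward for numerical stability
    exps = [math.exp(beta * (r - m)) for r in rewards]
    z = sum(exps)
    return [e / z for e in exps]

def weighted_loss(per_sample_losses, rewards, beta=1.0):
    """Reward-weighted average of per-sample losses."""
    w = reward_weights(rewards, beta)
    return sum(wi * li for wi, li in zip(w, per_sample_losses))
```

The temperature controls the trade-off discussed above. A large `beta` concentrates almost all weight on the best few samples, which speeds improvement but risks collapse. A small `beta` keeps the weights close to uniform and preserves diversity.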

Teacher-student learning in FlowBoost works through iterative refinement. A periodically updated, high-performing model, the ‘teacher’, generates data used to train the next ‘student’ model. The student is initialized with the teacher's weights, so it starts from the strategies already learned and can absorb new ones quickly. After training, the student becomes the new teacher and the cycle repeats, continually improving the generative policy. Passing accumulated knowledge from one generation to the next is meant to accelerate learning, guard against the performance degradation common in long training runs, and keep policy optimization stable.
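The generate, score, refit, and promote cycle can be sketched end to end. To stay self-contained, this sketch replaces the flow model with a diagonal Gaussian, which turns the loop into the cross-entropy method. That substitution is ours, not the paper's. The student "starts from the teacher" through the smoothing factor `alpha`. `closed_loop`, `fit_gaussian`, `elite`, and `alpha` are hypothetical names.

```python
import random
import statistics

def fit_gaussian(samples):
    """Per-coordinate mean and standard deviation of a list of vectors."""
    cols = list(zip(*samples))
    mu = [statistics.fmean(c) for c in cols]
    sigma = [max(statistics.pstdev(c), 1e-3) for c in cols]  # floor keeps some exploration
    return mu, sigma

def closed_loop(reward_fn, dim, generations=30, batch=200, elite=20, alpha=0.7, seed=0):
    """Teacher generates, reward scores, student fits the elites and becomes the next teacher."""
    rng = random.Random(seed)
    mu, sigma = [0.0] * dim, [1.0] * dim  # first teacher: standard normal prior
    for _ in range(generations):
        # 1. The current teacher proposes a batch of candidates.
        cands = [[rng.gauss(m, s) for m, s in zip(mu, sigma)] for _ in range(batch)]
        # 2. Score every candidate and keep the highest-reward ones.
        cands.sort(key=reward_fn, reverse=True)
        elite_mu, elite_sigma = fit_gaussian(cands[:elite])
        # 3. The student starts from the teacher's parameters and moves toward the elites,
        #    then replaces the teacher for the next generation.
        mu = [alpha * e + (1 - alpha) * t for e, t in zip(elite_mu, mu)]
        sigma = [alpha * e + (1 - alpha) * t for e, t in zip(elite_sigma, sigma)]
    return mu, sigma

# Usage: the generator concentrates around the maximizer (1.0, -0.5) of a toy reward.
mu, sigma = closed_loop(lambda v: -((v[0] - 1.0) ** 2 + (v[1] + 0.5) ** 2), dim=2)
print(mu, sigma)
```

With a flow model, step 3 would instead fine-tune a copy of the teacher network on the elite samples. The control flow of the loop stays the same.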

Much of FlowBoost's efficiency comes from searching through the generative model rather than through repeated full evaluations. Sampling in the flow's latent (noise) space and transporting those samples along the learned velocity field is cheap. A broad range of candidates can therefore be proposed and screened before any expensive evaluation. Traditional reinforcement learning and generative pipelines often need many costly simulations to judge the candidates they produce. FlowBoost instead refines its policy at much lower computational cost, which makes its search faster and more scalable than methods that depend on direct interaction with the environment or on high-fidelity simulation of every candidate.

Validation Across Diverse Geometric Problems: Empirical Rigor

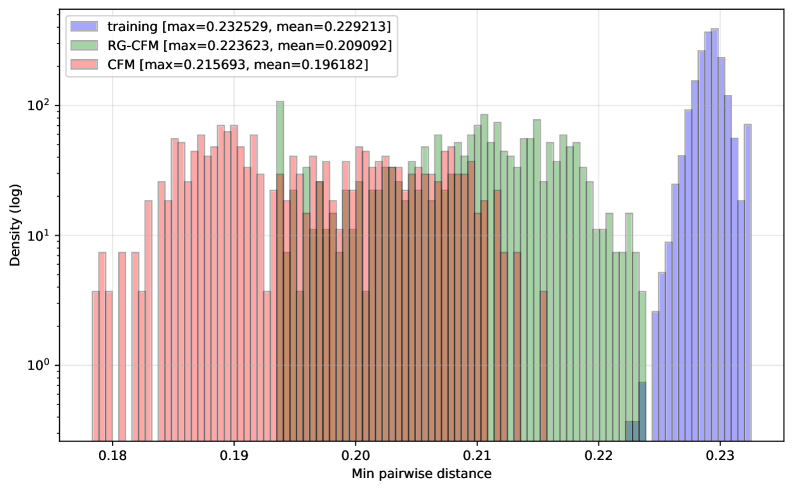

FlowBoost reports strong results on several established benchmark problems in geometric optimization:

- **Sphere packing (N=31):** competitive densities.
- **Circle packing (N=26):** a sum of radii of 2.635, on par with AlphaEvolve's reported result.
- **Star discrepancy minimization (N=60):** the best known value improved from 0.029515 to 0.029440.
- **Heilbronn problem (n=13):** a value of 0.0259285, which exceeds the best value seen during training and approaches the best known values.

Together, these results indicate that the framework is effective across diverse geometric problems that require high-dimensional optimization.

FlowBoost's results on sphere packing with N=31 spheres show that it remains viable on high-dimensional search problems. Every sphere contributes its own center coordinates, so the configuration space grows quickly with N. The reported densities are comparable to those of established sphere-packing algorithms. This suggests the framework keeps packings efficient as the number of spheres, and hence the number of variables, increases, and it supports its potential for related high-dimensional problems.

For circle packing with N=26 circles, FlowBoost attained a sum of radii of 2.635. This matches, at the stated precision, the best result previously reported by the AlphaEvolve algorithm on the same instance. Because the objective here is the total radius rather than density, the result shows that FlowBoost can generate highly efficient packings that compete with state-of-the-art methods.

FlowBoost improved the best known value for star discrepancy minimization with N=60 points, reducing the discrepancy from 0.029515 to 0.029440. This shows that the framework can generate highly uniform point sets, a key requirement for the low-discrepancy constructions used in numerical integration and quasi-Monte Carlo methods. The improvement is incremental, but in this challenging optimization landscape even small reductions in discrepancy count as significant advances.

FlowBoost achieved a value of 0.0259285 on the Heilbronn problem with n=13 points. The Heilbronn problem asks for a placement of n points that maximizes the area of the smallest triangle formed by any three of them, so higher values are better. The attained value exceeds the maximum present in the training data. This indicates that the framework can generate solutions better than the examples it learned from. The value also closely approaches the best known result for this instance.
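The Heilbronn objective for a candidate configuration is simple to evaluate, which is what makes it usable as a reward. The summary does not state the container region (for example, a unit square or a triangle). The sketch below is therefore region-agnostic and assumes the caller keeps the points inside the region. The function names are hypothetical.

```python
from itertools import combinations

def triangle_area(a, b, c):
    """Area of triangle abc from the 2-D cross product."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0

def heilbronn_value(points):
    """Smallest triangle area over all point triples: the quantity to maximize."""
    return min(triangle_area(a, b, c) for a, b, c in combinations(points, 3))
```

For n=13 there are only C(13, 3) = 286 triples, so one evaluation is cheap. The difficulty lies in the non-smooth, highly multimodal landscape created by the `min` over triples.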

Star discrepancy minimization is a class of optimization tasks that seek point sets distributed as uniformly as possible. The star discrepancy of a point set is the largest gap between two quantities, taken over all axis-parallel boxes anchored at the origin: the fraction of points inside the box and the box's volume. Lower values indicate a more uniform distribution. FlowBoost's N=60 result therefore corresponds to a 60-point set with lower star discrepancy than the previous best known set. Such sets matter in quasi-Monte Carlo integration and in the construction of low-discrepancy sequences. There, the Koksma-Hlawka inequality bounds the integration error by the star discrepancy times the variation of the integrand, so a more uniform point set yields tighter error bounds.
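The definition translates into a brute-force evaluator. The summary does not state the dimension of the N=60 benchmark, so this sketch assumes points in the unit square [0, 1]^2. It uses the standard observation that the supremum over anchored boxes is attained at corners whose coordinates come from the points themselves or from 1. Closed boxes are checked for excess points and open boxes for missing points. `star_discrepancy_2d` is a hypothetical name.

```python
from itertools import product

def star_discrepancy_2d(points):
    """Exact star discrepancy of a point set in [0, 1]^2 by exhaustive search (O(N^3))."""
    n = len(points)
    xs = sorted({p[0] for p in points} | {1.0})
    ys = sorted({p[1] for p in points} | {1.0})
    worst = 0.0
    for x, y in product(xs, ys):
        vol = x * y
        # Closed box [0, x] x [0, y]: too many points relative to its volume.
        closed = sum(1 for px, py in points if px <= x and py <= y)
        # Open box [0, x) x [0, y): too few points relative to its volume.
        opened = sum(1 for px, py in points if px < x and py < y)
        worst = max(worst, closed / n - vol, vol - opened / n)
    return worst
```

For N=60 this checks 61 x 61 candidate corners, which is fast enough to serve as a reward. Faster algorithms exist for larger N or higher dimensions.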

FlowBoost is designed to work alongside established optimization techniques that refine its generated solutions. The framework supports both local search and the limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS) method. L-BFGS is a quasi-Newton method that uses gradient information to fine-tune a candidate. Local search offers a gradient-free alternative that improves solution quality iteratively. This flexibility lets researchers combine FlowBoost with existing tools and tailor the refinement stage to each geometric problem, potentially yielding gains beyond the framework's initial output.
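The summary does not say how FlowBoost invokes L-BFGS. With SciPy available, `scipy.optimize.minimize(..., method="L-BFGS-B")` is the usual choice. To stay dependency-free, the sketch below implements the standard L-BFGS two-loop recursion. It uses a simple Armijo backtracking line search instead of the Wolfe line search found in production codes. The function name `lbfgs` and its parameters are hypothetical.

```python
import math

def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def lbfgs(f, grad, x0, m=5, max_iter=200, tol=1e-8):
    """Minimize f with L-BFGS (two-loop recursion) and Armijo backtracking."""
    x = list(x0)
    g = grad(x)
    s_hist, y_hist = [], []  # last m position and gradient differences
    for _ in range(max_iter):
        if math.sqrt(_dot(g, g)) < tol:
            break
        # Two-loop recursion: r approximates (inverse Hessian) @ g from stored pairs.
        q, alphas = list(g), []
        for s, y in zip(reversed(s_hist), reversed(y_hist)):
            a = _dot(s, q) / _dot(y, s)
            alphas.append(a)
            q = [qi - a * yi for qi, yi in zip(q, y)]
        gamma = _dot(s_hist[-1], y_hist[-1]) / _dot(y_hist[-1], y_hist[-1]) if s_hist else 1.0
        r = [gamma * qi for qi in q]
        for (s, y), a in zip(zip(s_hist, y_hist), reversed(alphas)):
            b = _dot(y, r) / _dot(y, s)
            r = [ri + (a - b) * si for ri, si in zip(r, s)]
        d = [-ri for ri in r]
        if _dot(g, d) >= 0:  # safeguard: fall back to steepest descent
            d = [-gi for gi in g]
        # Backtracking line search enforcing the Armijo sufficient-decrease condition.
        fx, gd, step = f(x), _dot(g, d), 1.0
        while f([xi + step * di for xi, di in zip(x, d)]) > fx + 1e-4 * step * gd and step > 1e-12:
            step *= 0.5
        x_new = [xi + step * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]
        y = [a - b for a, b in zip(g_new, g)]
        if _dot(y, s) > 1e-12:  # keep only pairs with positive curvature
            s_hist.append(s)
            y_hist.append(y)
            if len(s_hist) > m:
                s_hist.pop(0)
                y_hist.pop(0)
        x, g = x_new, g_new
    return x
```

To refine a generated configuration, one would minimize the negative of a smooth objective over its coordinates. Penalties built from `max(0, ...)` are not differentiable at the constraint boundary, so L-BFGS is usually applied to a smoothed objective, or local search is used instead.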

Future Directions: A Paradigm Shift in Optimization

FlowBoost's core strength is its capacity to explore and optimize efficiently within intricate search spaces, a capability that could benefit several scientific and engineering disciplines. In robotics, similar techniques might speed up the development of adaptable robots that learn complex maneuvers with less real-world training. In materials design, they could accelerate the discovery of materials with specific properties and reduce costly, time-consuming physical experimentation. The pharmaceutical industry could gain through in silico drug discovery, where such a framework might search vast chemical spaces for promising drug candidates with tailored efficacy and fewer side effects. If these extensions succeed, the ability to navigate complex simulations intelligently would relax limits long imposed by computational cost and complexity and accelerate innovation across diverse fields.

Natural next steps for FlowBoost are extending it to larger and more intricate problems and improving its ability to generalize, that is, to apply learned optimization strategies effectively to new, unseen scenarios and domains. Promising directions include transfer learning, meta-optimization algorithms, and robust representation learning, all aimed at minimizing problem-specific tuning and maximizing adaptability. Success here would yield a versatile optimization tool for a wide range of disciplines, including computational chemistry, advanced materials science, and autonomous systems design.

FlowBoost distinguishes itself by unifying the strengths of generative modeling and simulation-based optimization, a combination previously challenging to achieve. Traditional optimization methods often struggle with the vast search spaces inherent in complex systems, while generative models, though capable of creating diverse possibilities, lack the precision needed for targeted performance improvements. This framework effectively marries the exploratory power of generative models with the rigorous evaluation provided by simulations, allowing for the discovery of high-performing solutions that are both novel and demonstrably effective. Consequently, FlowBoost isn’t simply about finding a solution, but about learning a process for intelligently navigating complexity – a key characteristic of truly adaptive systems capable of responding to unforeseen challenges and evolving environments. This approach promises advancements beyond static optimization, paving the way for systems that can learn, refine, and generalize their capabilities across diverse applications.

FlowBoost's pursuit of extremal solutions echoes a fundamental principle of mathematical practice. Its iterative refinement through reward-guided learning and closed-loop optimization depends on a precise problem statement, something it shares with provable algorithms. As Albert Einstein once wrote, “The formulation of a problem is often more essential than its solution.” FlowBoost exemplifies this: by carefully defining each geometric optimization challenge, it can discover structures that match or improve on the best known configurations. The emphasis on geometric constraints and extremal problems underscores the importance of a well-defined problem space, a cornerstone of mathematical rigor.

What Lies Beyond?

The presented work demonstrates a capacity for navigating complex geometric landscapes, yet it only scratches the surface of what a truly provable optimization framework might entail. The reliance on reward signals, however cleverly engineered, introduces an element of empirical validation rather than mathematical certainty. One is left to ponder whether the ‘optimal’ solutions discovered are in fact globally extremal or simply local optima masquerading as such. Scalability also remains an open question. The cost of training and sampling the flow grows with problem dimensionality and may ultimately limit the framework's applicability to truly intractable problems.

A natural extension lies in the formalization of geometric constraints directly within the flow’s generative process. Instead of rewarding adherence to constraints, a more elegant solution would be to encode them as invariants within the flow itself, ensuring that all generated solutions are, by definition, valid. This demands a deeper engagement with differential geometry and topology, moving beyond heuristic reward design towards a fundamentally principled approach.

Ultimately, the pursuit of optimal solutions should not be viewed as an exercise in pattern recognition, but as a rigorous deduction. The true test of this, or any similar framework, will be its ability to not only find solutions, but to prove their optimality – a feat which demands a level of mathematical precision often absent in contemporary machine learning.

Original article: https://arxiv.org/pdf/2601.18005.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-01 04:33