Author: Denis Avetisyan

Future lepton colliders offer an unprecedented opportunity to explore the fundamental nature of quantum entanglement and nonlocality in high-energy particle collisions.

This review details how precision measurements of spin correlations in tau-lepton and weak boson pairs at future lepton colliders can surpass the sensitivity of hadron colliders for probing Bell nonlocality and testing the foundations of quantum mechanics.

The foundational principles of quantum mechanics, while rigorously tested in low-energy systems, remain comparatively unexplored in the high-energy regime of particle physics. This paper, ‘Quantum Entanglement and Bell Nonlocality at Future Lepton Collider’, presents recent investigations into the detection of quantum entanglement and Bell nonlocality in processes involving tau leptons and weak gauge boson pairs. The projections indicate that future lepton colliders would offer unprecedented sensitivity to quantum correlations, exceeding the capabilities of current hadron colliders. Could these studies at the energy frontier reveal subtle deviations from quantum predictions and provide insights into the fundamental nature of quantum interactions?

The Emergence of Interconnectedness

Quantum entanglement represents a profound departure from classical physics, fundamentally questioning how interconnectedness operates in the universe. In classical thought, two distant objects can be correlated only through a shared history or through a signal travelling between them no faster than light, a principle known as locality. Entanglement, however, links two or more particles so that their joint state cannot be written as a product of individual states, no matter how far apart they are. Measurement outcomes on entangled particles are correlated more strongly than any local hidden-variable model allows, which is exactly what Bell inequalities quantify. This “spooky action at a distance,” as Einstein famously termed it, does not allow faster-than-light communication, because each local outcome on its own remains random, but it does challenge local realism: the classical idea that objects carry definite properties independent of observation and are influenced only by their immediate surroundings. The implications of these nonlocal correlations are not merely theoretical; they form the basis for emerging quantum technologies and continue to reshape our understanding of the fundamental nature of reality.

The promise of quantum technologies, from secure communication and ultra-powerful computing to highly sensitive sensors, fundamentally relies on the ability to harness quantum entanglement. However, simply creating entangled particles is not enough: these delicate states are notoriously fragile and susceptible to environmental noise, which quickly degrades the correlations needed for practical applications. A central challenge is therefore to develop robust methods for characterizing entangled states precisely, determining their fidelity, their purity, and the degree to which they retain their quantum properties. Techniques such as quantum state tomography and entanglement witnesses are used to map out these states and quantify their usefulness, ensuring that the entanglement resource remains viable long enough to perform meaningful quantum operations. Without careful characterization, even well-prepared entangled states remain an unverified resource, hindering the development of practical quantum technologies.

Probing Correlations in Particle Collisions

Lepton colliders, such as the proposed electron-positron facilities, offer a distinct advantage for studying particle pair production and the resulting entanglement because their initial state is simple and well defined. Unlike hadron colliders, where the colliding partons carry unknown fractions of the proton momentum and strong interactions generate large backgrounds, lepton collisions produce particles primarily through the annihilation of the lepton and antilepton beams. This yields cleaner experimental signatures and smaller systematic uncertainties when analyzing pairs of Z bosons, W bosons, and especially tau leptons. Pair production such as $e^+e^- \to Z \to \tau^+\tau^-$ allows precise measurements of spin correlations, which are essential for testing entanglement and exploring the foundations of quantum mechanics. The precisely known collision energy and the high luminosity projected for future lepton colliders will substantially improve the precision and statistical power of these studies.

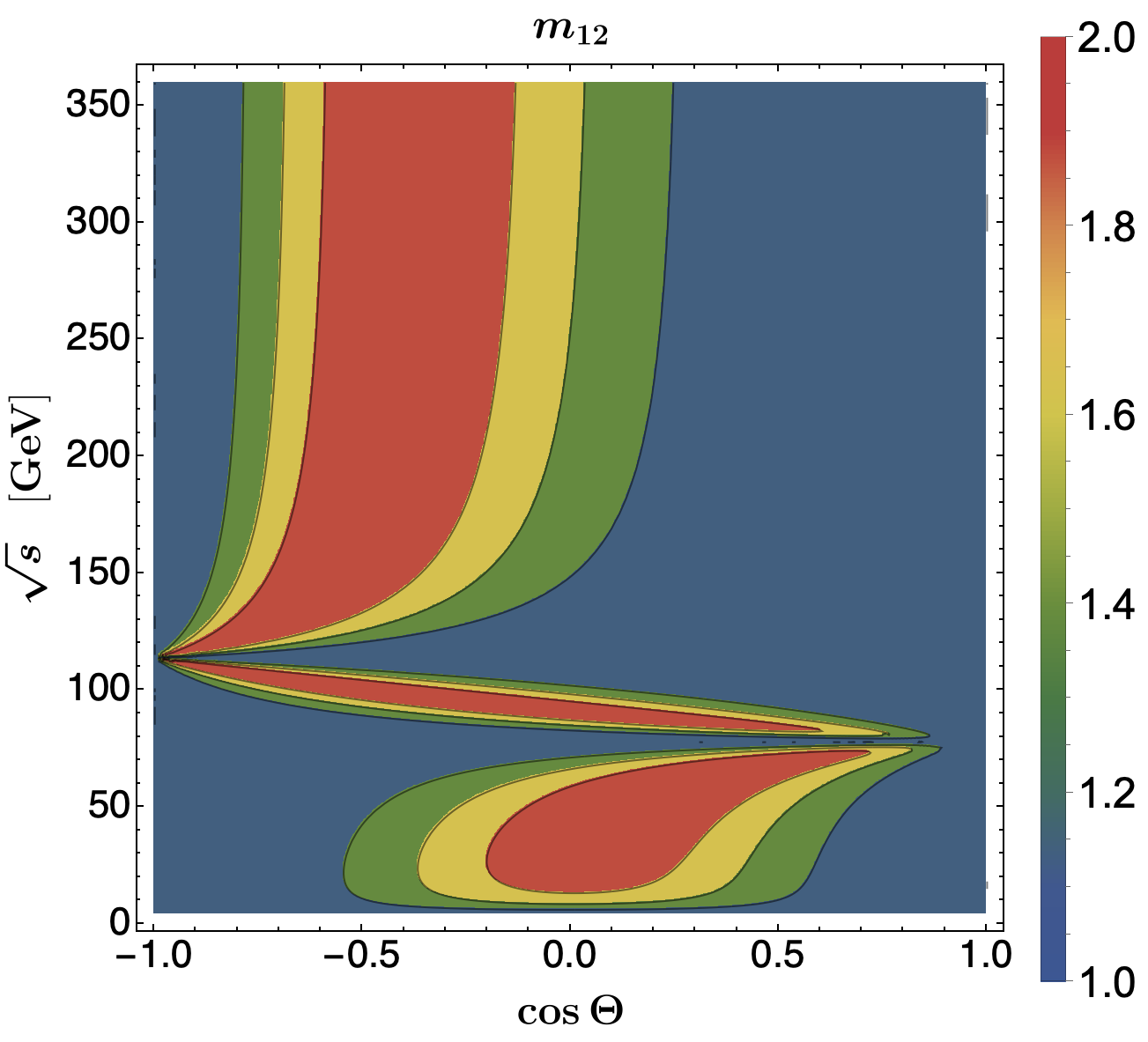

Analyzing correlated particle pairs, including top quarks, Z bosons, W bosons, and tau leptons, makes it possible to characterize the quantum state produced in high-energy collisions. Future lepton colliders are projected to significantly surpass the sensitivity of existing and planned hadron colliders for these measurements. With an integrated luminosity of 150 fb⁻¹, they are anticipated to reach a statistical significance of up to 30σ for Bell nonlocality in tau-pair production, enabling precise tests of quantum entanglement and potentially revealing deviations from Standard Model predictions.
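As a rough illustration of how such projections depend on luminosity, the sketch below assumes a purely statistics-limited measurement: the event count is N = σL, and the significance grows as √N, hence as √L. Systematic uncertainties, which are not quantified for this figure, would weaken this scaling. The function names are hypothetical; only the reference point of 30σ at 150 fb⁻¹ comes from the text.

```python
import math


def expected_events(cross_section_fb: float, lumi_inv_fb: float, efficiency: float = 1.0) -> float:
    """Expected event count N = sigma * L * efficiency (sigma in fb, L in fb^-1)."""
    return cross_section_fb * lumi_inv_fb * efficiency


def scale_significance(z_ref: float, lumi_ref: float, lumi_new: float) -> float:
    """Rescale a significance assuming it grows as sqrt(L) (statistics-limited only)."""
    return z_ref * math.sqrt(lumi_new / lumi_ref)


def lumi_for_significance(z_ref: float, lumi_ref: float, z_target: float) -> float:
    """Invert the sqrt(L) scaling: luminosity needed to reach z_target."""
    return lumi_ref * (z_target / z_ref) ** 2


if __name__ == "__main__":
    # A hypothetical 1 pb (= 1000 fb) cross section at 150 fb^-1.
    print(expected_events(1000.0, 150.0))
    # Reference point from the text: up to 30 sigma with 150 fb^-1.
    print(lumi_for_significance(30.0, 150.0, 5.0))
    print(scale_significance(30.0, 150.0, 600.0))
```

Under this assumption, a 5σ observation would need only about 4.2 fb⁻¹, and quadrupling the luminosity doubles the significance; in practice systematic uncertainties cap the gain long before that.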

Reconstructing Quantum States Through Computation

Monte Carlo simulations are a fundamental component of data analysis in high-energy physics, particularly for modeling particle collisions. Tools such as MadGraph5_aMC@NLO generate simulated collision events from perturbative calculations in quantum chromodynamics and electroweak theory. A critical ingredient of these simulations at lepton colliders is initial state radiation (ISR), in which the incoming electrons or positrons emit photons before the hard interaction. Accurately modeling ISR is crucial because it lowers the effective collision energy, shifts the energy and momentum distributions of the final-state particles, and degrades the kinematic reconstruction on which spin-correlation measurements depend. MadGraph5_aMC@NLO automates matrix-element calculations up to next-to-leading order (NLO) and can account for ISR through electron structure functions, enabling more realistic simulations and better signal-to-background discrimination. These simulations produce the large event samples needed for statistical analysis and for reconstructing the underlying physical processes.

Establishing entanglement with high statistical significance requires large event samples. Entangled spin states are not rare in these processes; rather, the spins are never observed directly and must be inferred from the angular distributions of the decay products, so each event contributes only a single noisy sample of the correlation. Samples of millions or even billions of events are therefore needed to build the histograms and statistical tests that separate the correlation signal from background and other sources of error. The required sample size depends on the expected signal strength and on the desired statistical confidence, typically quoted as a p-value or as a significance in standard deviations (σ). Large samples also make it possible to estimate the systematic uncertainties that could affect the observed entanglement signal.
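In particle physics, a p-value and a significance in σ are usually related through the one-sided tail of a standard normal distribution, with 3σ and 5σ as the conventional thresholds for "evidence" and "observation". The sketch below shows that conversion using only Python's standard library (`statistics.NormalDist`, Python 3.8+); the one-sided convention is an assumption, since the text does not specify which one the analyses use.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def z_to_p(z: float) -> float:
    """One-sided p-value for a significance of z standard deviations."""
    # cdf(-z) avoids the cancellation of 1 - cdf(z) in the far tail.
    return STANDARD_NORMAL.cdf(-z)


def p_to_z(p: float) -> float:
    """Significance in standard deviations for a one-sided p-value 0 < p < 1."""
    return -STANDARD_NORMAL.inv_cdf(p)


if __name__ == "__main__":
    # 3 sigma and 5 sigma are the usual "evidence" and "observation" thresholds.
    for z in (2.0, 3.0, 4.0, 5.0):
        print(f"{z:.0f} sigma  ->  one-sided p = {z_to_p(z):.3g}")
```

For example, 3σ corresponds to p ≈ 0.00135 and 5σ to p ≈ 2.9 × 10⁻⁷.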

Quantum state tomography characterizes a quantum state through a series of measurements. The state is described by a density matrix ρ, a positive semi-definite Hermitian operator with unit trace, which can represent mixed states as well as pure ones. Complete reconstruction requires measuring enough observables to determine every independent element of ρ. The measurements do not reveal the state directly; instead, ρ is estimated by statistical inference from the observed measurement frequencies. Because a d-dimensional density matrix has d² − 1 independent real parameters, the number of required measurements grows quadratically with the dimension of the Hilbert space: 15 parameters for two qubits such as a tau pair, and 80 for two qutrits such as a pair of spin-1 weak bosons.
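For a pair of spin-1/2 particles, collider studies commonly write the state as ρ = ¼(1⊗1 + B⁺·σ⊗1 + 1⊗B⁻·σ + Σᵢⱼ Cᵢⱼ σᵢ⊗σⱼ), with polarization vectors B± and a spin-correlation matrix C. If the joint distribution of the decay directions q± in the parent rest frames is taken to be proportional to 1 + κ₊κ₋ q⁺·C·q⁻ (polarizations set to zero here), then ⟨qᵢ⁺qⱼ⁻⟩ = κ₊κ₋Cᵢⱼ/9, so C can be estimated from simple moments. The toy below assumes unit analysing power (κ = 1, as for τ → πν in magnitude) and this sign convention, which varies between papers. It ignores detector effects and the missing neutrinos, and the chosen C is hypothetical; it illustrates the technique rather than the paper's analysis.

```python
import numpy as np


def random_unit_vectors(n: int, rng: np.random.Generator) -> np.ndarray:
    """Draw n directions uniformly distributed on the unit sphere."""
    v = rng.normal(size=(n, 3))
    return v / np.linalg.norm(v, axis=1, keepdims=True)


def sample_decay_directions(c_true: np.ndarray, n_events: int, rng: np.random.Generator):
    """Accept-reject sampling from p(q+, q-) proportional to 1 + q+ . C . q-.

    Assumes zero polarizations (B = 0) and unit analysing power. The weight stays
    in [0, 2] as long as the largest singular value of C is at most 1.
    """
    plus, minus, n_kept = [], [], 0
    while n_kept < n_events:
        qp = random_unit_vectors(n_events, rng)
        qm = random_unit_vectors(n_events, rng)
        weight = 1.0 + np.einsum("ni,ij,nj->n", qp, c_true, qm)
        keep = rng.uniform(0.0, 2.0, size=n_events) < weight  # accept with prob weight / 2
        plus.append(qp[keep])
        minus.append(qm[keep])
        n_kept += int(keep.sum())
    return np.concatenate(plus)[:n_events], np.concatenate(minus)[:n_events]


def estimate_spin_correlation(qp: np.ndarray, qm: np.ndarray) -> np.ndarray:
    """Moment estimator C_ij = 9 <q+_i q-_j>, valid for the distribution above."""
    return 9.0 * (qp.T @ qm) / len(qp)


if __name__ == "__main__":
    rng = np.random.default_rng(seed=7)
    c_true = -0.8 * np.eye(3)  # hypothetical Werner-like correlation matrix
    qp, qm = sample_decay_directions(c_true, 200_000, rng)
    print(np.round(estimate_spin_correlation(qp, qm), 3))
```

With 200,000 events each element of C is recovered to roughly ±0.007, which shows why the statistical precision of the tomography scales as 1/√N.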

The Expanding Horizon of Nonlocal Measurement

Concurrence quantifies entanglement in two-qubit systems: it ranges from 0 for separable states to 1 for maximally entangled states. A simulated analysis of tau-pair production at the proposed Future Circular Collider in its electron-positron mode (FCC-ee) projects a concurrence of 0.4805 ± 0.0063 (stat) ± 0.0012 (syst). Because this value lies far from zero relative to its uncertainties, such a measurement would establish the entanglement of the tau-pair spin state with high precision and provide a stringent test of theoretical predictions for quantum correlations at high energies.
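The concurrence of a two-qubit density matrix can be computed with Wootters' formula: C(ρ) = max(0, λ₁ − λ₂ − λ₃ − λ₄), where the λᵢ are the square roots of the eigenvalues of ρρ̃ in decreasing order and ρ̃ = (σ_y⊗σ_y)ρ*(σ_y⊗σ_y). The sketch below builds ρ from the standard two-qubit parameterization with polarization vectors B± and correlation matrix C, then evaluates the formula. The helper names and the Werner-state test inputs are illustrative assumptions, not values from the study.

```python
import numpy as np

# Pauli matrices sigma_x, sigma_y, sigma_z and the 2x2 identity.
PAULI = (
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
)
ID2 = np.eye(2, dtype=complex)


def density_matrix(b_plus, b_minus, c) -> np.ndarray:
    """Two-qubit state 1/4 [1x1 + B+.sigma x 1 + 1 x B-.sigma + C_ij sigma_i x sigma_j]."""
    rho = np.kron(ID2, ID2)
    for i in range(3):
        rho = rho + b_plus[i] * np.kron(PAULI[i], ID2) + b_minus[i] * np.kron(ID2, PAULI[i])
        for j in range(3):
            rho = rho + c[i][j] * np.kron(PAULI[i], PAULI[j])
    return rho / 4.0


def concurrence(rho: np.ndarray) -> float:
    """Wootters concurrence: max(0, l1 - l2 - l3 - l4) from the spectrum of rho @ rho_tilde."""
    sy_sy = np.kron(PAULI[1], PAULI[1])
    rho_tilde = sy_sy @ rho.conj() @ sy_sy  # spin-flipped state
    eigvals = np.linalg.eigvals(rho @ rho_tilde).real
    lam = np.sort(np.sqrt(np.clip(eigvals, 0.0, None)))[::-1]
    return float(max(0.0, lam[0] - lam[1] - lam[2] - lam[3]))


if __name__ == "__main__":
    zero = [0.0, 0.0, 0.0]
    for p in (1.0, 0.5, 0.2):
        # Werner state: singlet mixed with white noise, C = -p * identity.
        rho = density_matrix(zero, zero, -p * np.eye(3))
        print(p, round(concurrence(rho), 4))
```

For a Werner state the result should match the closed form max(0, (3p − 1)/2): 1 for the pure singlet, 0.25 at p = 0.5, and 0 below p = 1/3.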

Tau leptons are spin-1/2 particles, so a tau pair forms a two-qubit system, and Bell nonlocality can be tested with the Clauser-Horne-Shimony-Holt (CHSH) inequality. The Horodecki criterion recasts this test in terms of the spin-correlation matrix C: the CHSH inequality can be violated if and only if the sum of the two largest eigenvalues of CᵀC, the Horodecki parameter, exceeds 1. The same FCC-ee tau-pair study projects a Horodecki parameter of 1.239 ± 0.017 (stat) ± 0.008 (syst), corresponding to a reported significance above 13σ for Bell nonlocality, a regime that no local hidden-variable description can reach. Higher-dimensional systems, known as qudits, require other tools: for spin-1 weak boson pairs, which form two-qutrit systems, the Collins-Gisin-Linden-Massar-Popescu (CGLMP) inequality generalizes the CHSH test.
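The Horodecki criterion is straightforward to evaluate once C is known. The sketch below computes the Horodecki parameter m₁₂ (sum of the two largest eigenvalues of CᵀC) and the corresponding maximal CHSH value 2√m₁₂, to be compared with the local bound of 2. The function names and the Werner-like test matrices are illustrative assumptions; for such states m₁₂ = 2p², so the CHSH inequality is violated only for p > 1/√2 ≈ 0.707.

```python
import numpy as np


def horodecki_m12(c) -> float:
    """Sum of the two largest eigenvalues of C^T C for a 3x3 spin-correlation matrix C."""
    c = np.asarray(c, dtype=float)
    eig = np.linalg.eigvalsh(c.T @ c)  # ascending order; C^T C is symmetric
    return float(eig[-1] + eig[-2])


def max_chsh(c) -> float:
    """Largest CHSH expectation over measurement axes: 2 * sqrt(m12); local models give at most 2."""
    return 2.0 * float(np.sqrt(horodecki_m12(c)))


def violates_chsh(c) -> bool:
    """Horodecki criterion: the CHSH inequality can be violated iff m12 > 1."""
    return horodecki_m12(c) > 1.0


if __name__ == "__main__":
    for p in (1.0, 0.75, 0.7):
        c = -p * np.eye(3)  # Werner-like correlations, m12 = 2 p^2
        print(p, horodecki_m12(c), max_chsh(c), violates_chsh(c))
```

For the pure singlet this reproduces the Tsirelson bound 2√2 ≈ 2.83.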

Studies of weak boson pairs extend these tests to qutrits, combining entanglement measures with the CGLMP inequality. For W⁺W⁻ production at a potential 1 TeV muon collider, the projected significance for Bell nonlocality is only about 2σ, short of the 3σ conventionally required to claim evidence. Simulated ZZ production at a future electron-positron collider is more promising, reaching about 4σ, a strong indication that the correlations exceed the bound allowed by local hidden-variable models. Although these analyses are still preliminary, together they deepen our understanding of entanglement in the high-energy regime.

The study outlines how future lepton colliders can serve as exceptional tools for investigating quantum entanglement and nonlocality, beyond the reach of current hadron colliders. This approach echoes an idea often paraphrased from Aristotle's Metaphysics: “The whole is greater than the sum of its parts.” The spin correlations in tau-lepton and weak gauge boson pairs detailed in the paper show that the joint state of two particles carries information that cannot be assigned to either particle alone. The point is not simply to identify the individual components, but to recognize how, once entangled, they display collective properties with no counterpart in their separate descriptions.

Where Do We Go From Here?

The prospect of precisely mapping entanglement in the decays of tau leptons and weak bosons, as this work suggests, is not about proving quantum mechanics, which has passed every test so far. Rather, its value lies in testing that description in a new energy regime and refining the boundaries of our understanding. The Standard Model, despite its successes, remains a remarkably pragmatic construction. This research does not attempt to steer particle interactions, but to observe the order that emerges within them, and to ask whether the correlations revealed by Bell nonlocality show subtle deviations hinting at structures beyond the current description.

The limitations, of course, are not only technological but conceptual. The sheer volume of data anticipated from future lepton colliders will call for advanced quantum tomography techniques. Yet even a perfect reconstruction of quantum states will not settle every foundational question. A Bell violation already excludes local hidden-variable explanations, up to the loopholes inherent to collider measurements, where spins are inferred from decay kinematics rather than measured along freely chosen axes; what such correlations say about the nature of reality remains a matter of interpretation. The answer will not come from brute-force experimentation alone, but from increasingly sophisticated theoretical frameworks capable of interpreting the observed patterns.

The focus should shift toward exploring the interplay between entanglement, information theory, and the foundations of statistical mechanics. The illusion of control, of being able to dictate particle behavior, must give way to an appreciation for the subtle influence of initial conditions and the inherent unpredictability of complex systems. The goal is not to find the architect of quantum order, but to understand how it arises spontaneously from the interactions of its constituent parts.

Original article: https://arxiv.org/pdf/2602.03960.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 06:12