One thing I learned after becoming a parent is that time is precious, and a lot of mine goes to the online content I read every day for work. Whether it’s research, keeping up with news and reviews, or pulling out specific details, my job demands a significant amount of reading.

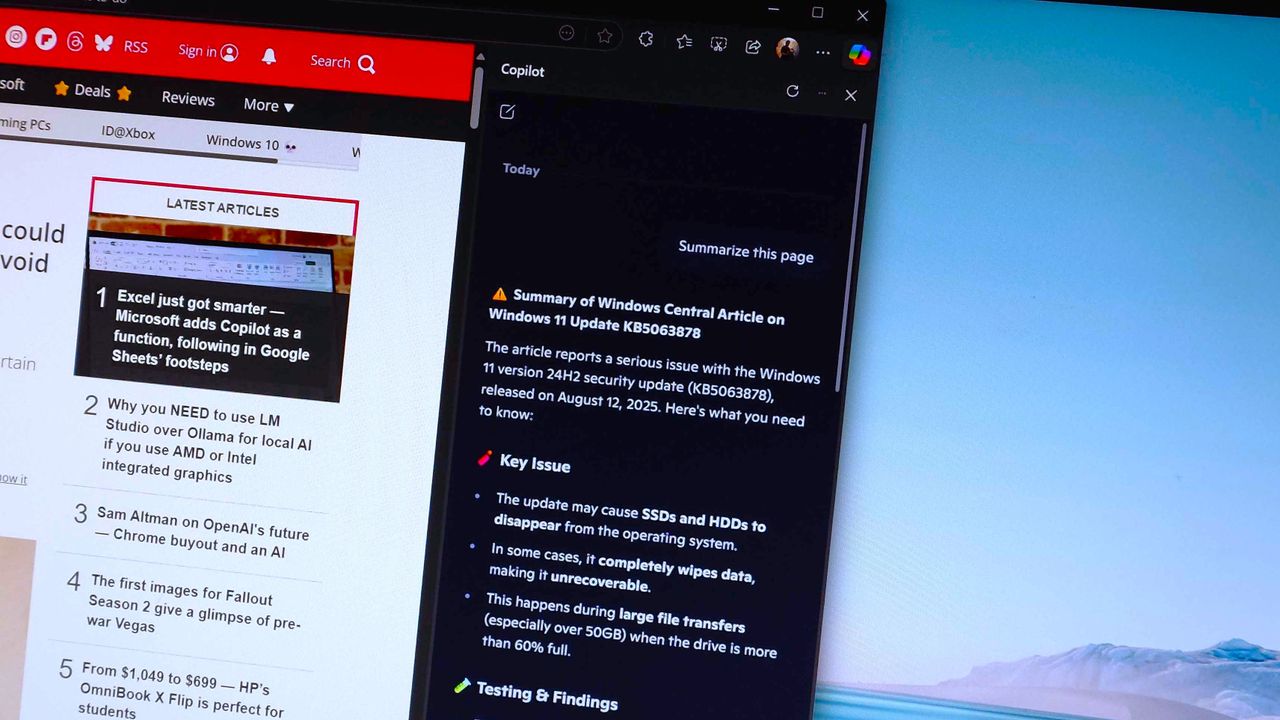

Copilot excels at writing concise summaries, and it keeps getting better. I used to have to spell out what I wanted and the output format I expected. Now it handles that without being told.

Lately, though, I’ve been diving deeper into local AI tools such as Ollama and LM Studio, rather than depending solely on online tools like Copilot, ChatGPT, and Google Gemini.

Most of the ways I found to recreate Copilot’s page summaries locally involve Python. I’m open to some experimentation, but I wondered whether a straightforward, browser-based solution could do what I need.
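For a sense of what the Python route involves, here is a minimal sketch rather than a tested recipe. It assumes Ollama is running at its default local address (`http://localhost:11434`) and that a model such as `gemma3:1b` has already been pulled. The helper names (`html_to_text`, `build_request`, `summarize_url`), the prompt wording, and the 8,000-character cutoff are illustrative assumptions, not anything the tools above prescribe.

```python
import json
import urllib.request
from html.parser import HTMLParser

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    """Reduce an HTML document to a single string of readable text."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def build_request(page_text, model="gemma3:1b", max_chars=8000):
    """Build the JSON body for a non-streaming /api/generate call."""
    prompt = (
        "Summarize the following article in five bullet points:\n\n"
        + page_text[:max_chars]  # crude truncation so small models are not overloaded
    )
    return {"model": model, "prompt": prompt, "stream": False}


def summarize_url(url, model="gemma3:1b"):
    """Fetch a page, strip the markup, and ask the local model for a summary."""
    with urllib.request.urlopen(url) as resp:
        html = resp.read().decode("utf-8", errors="replace")
    body = json.dumps(build_request(html_to_text(html), model)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

Calling `summarize_url("https://example.com/some-article")` would print nothing on its own; it returns the model’s summary as a string. Even this small script means handling fetching, cleanup, and prompting yourself, which is why a browser-based option is appealing.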

The good news is that I found one. The bad news is that it isn’t yet as good as Copilot at this particular task.

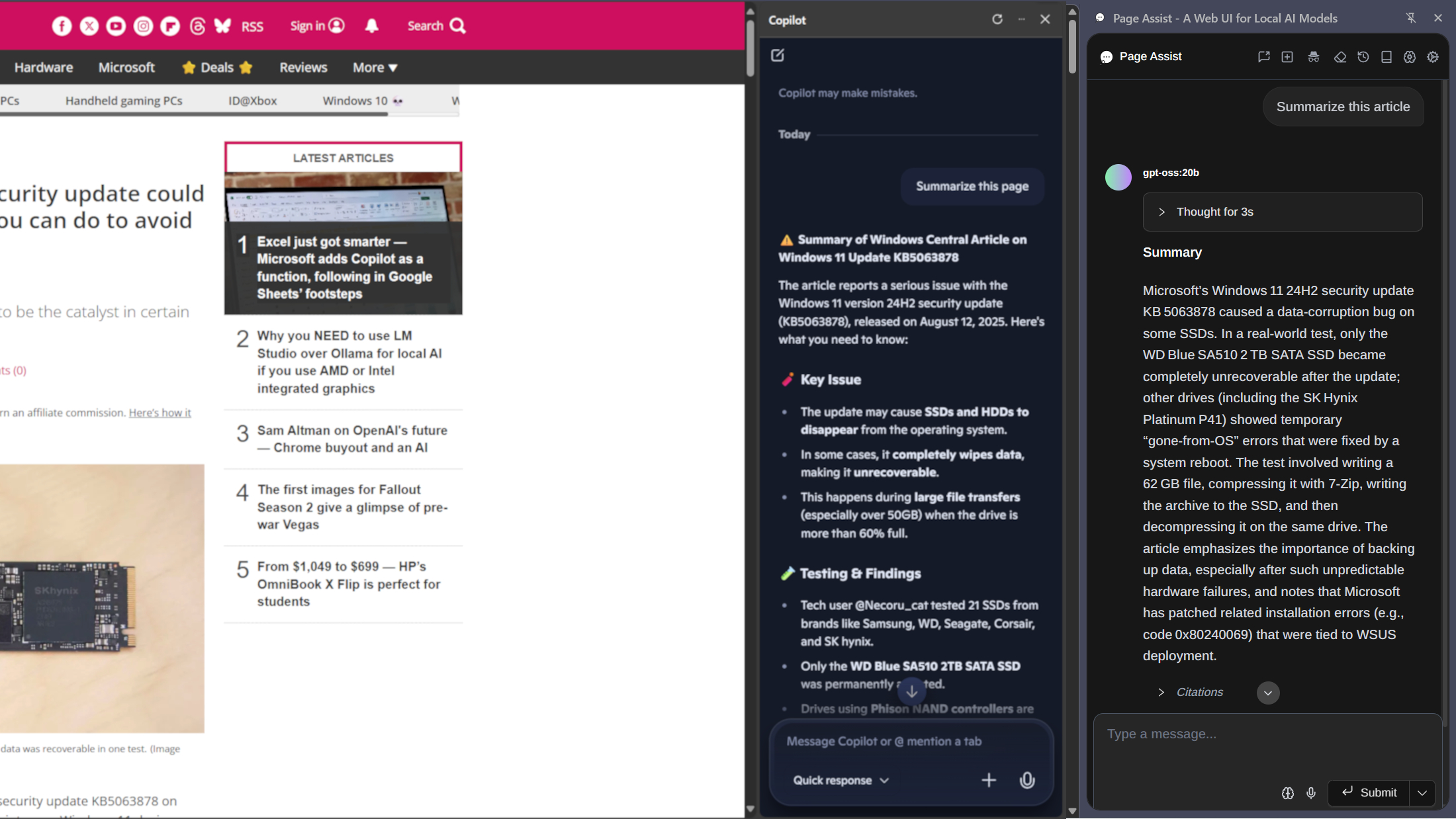

Ollama in the back and Page Assist in the front

While exploring potential solutions, I stumbled upon Page Assist, available in the Chrome Web Store (or Microsoft Edge Add-ons, if you prefer). It is more than a simple extension: it is a full web app that serves as a graphical front end for Ollama.

Page Assist is versatile and packed with features. So far, I can’t think of anything it lacks. It makes working with local large language models (LLMs) much easier, and it is more sophisticated than the Ollama app.

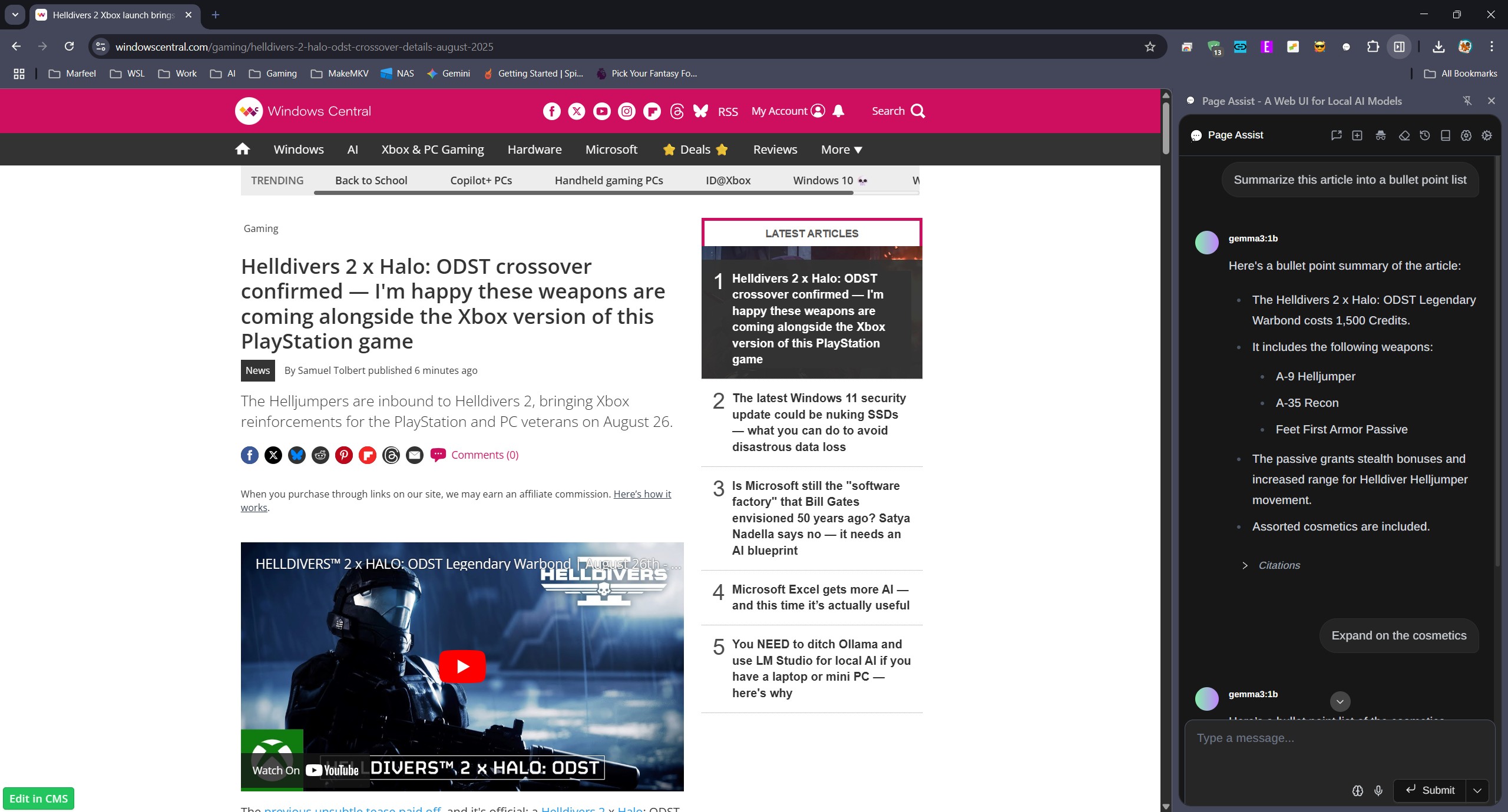

What I care about here isn’t the full app, though, but a sidebar that lets me chat with a web page I already have open. And Page Assist has one.

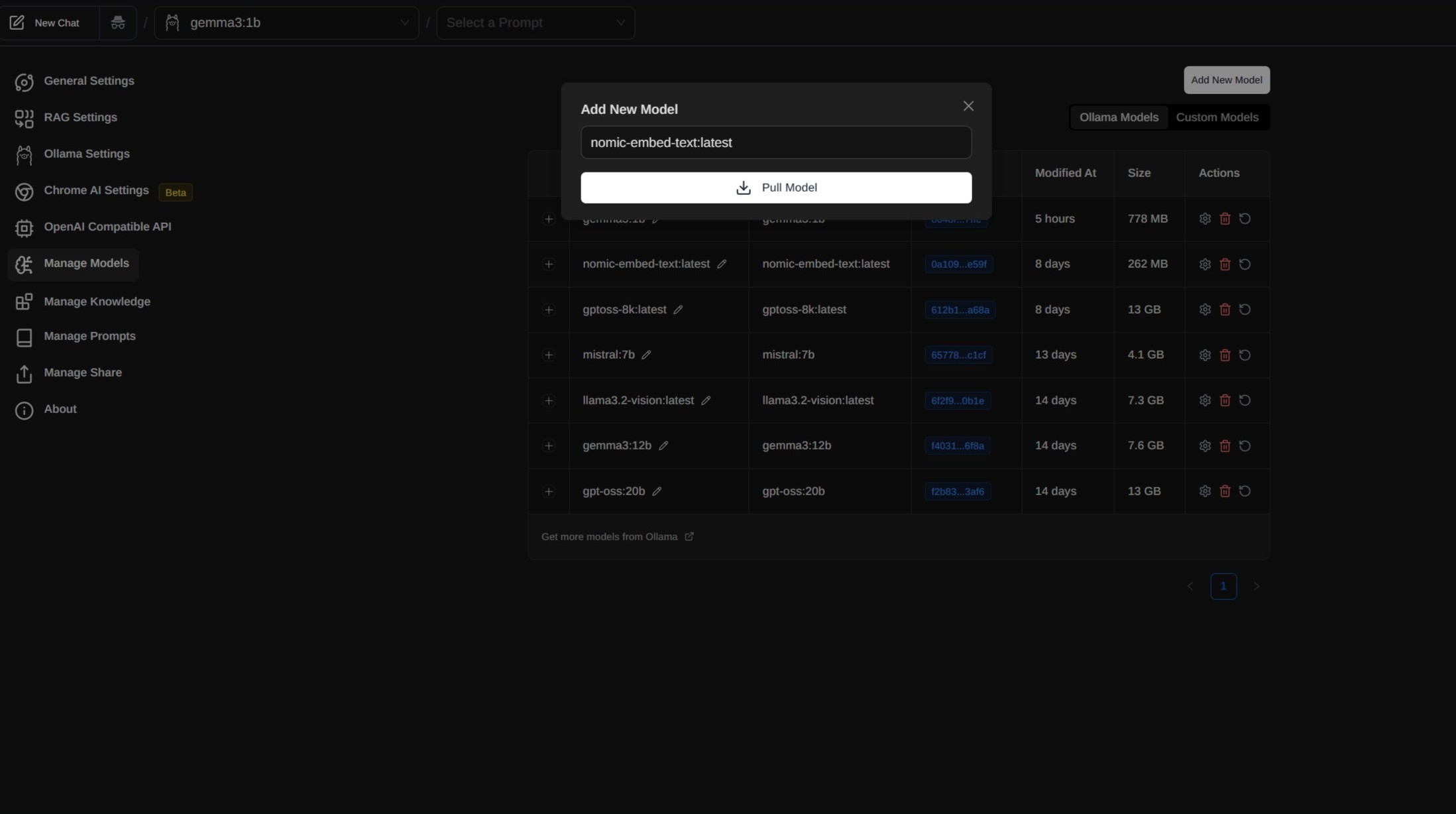

To chat with a web page using Page Assist, your Ollama setup needs one extra step: you need to enable RAG (Retrieval-Augmented Generation).

Retrieval-Augmented Generation is a technique in which an AI system retrieves relevant information from external sources, such as databases or documents, while it generates a response. Because retrieval is built into the generation step, the AI can give more accurate, informative, and contextually appropriate answers than a system that relies only on what it learned in training.

For Page Assist to do this, Ollama needs an embedding model installed. The app makes this easy by suggesting Nomic Embed. I followed its suggestion: in Page Assist, I opened Manage Models in Settings, added a new model, and typed “nomic-embed-text:latest” into the box.

After that, you just need to know how to open the side panel. Normally it’s in the right-click menu, so when you’re on a page you want to discuss, right-click and open the side panel to start a chat.
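To make the role of the embedding model more concrete, here is a rough sketch of the retrieval step in RAG. It is not how Page Assist is implemented internally; the function names, the 50-word chunk size, and the prompt wording are assumptions for illustration. The idea is to split the page into chunks, embed each chunk and the question, and keep only the chunks most similar to the question. The `ollama_embed` helper assumes a local Ollama server with `nomic-embed-text` installed.

```python
import json
import math
import urllib.request


def chunk_words(text, size=50):
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_chunks(question, chunks, embed, k=3):
    """Return the k chunks whose embeddings are closest to the question's."""
    q_vec = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q_vec, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question, chunks):
    """Combine the retrieved chunks and the question into one prompt."""
    context = "\n---\n".join(chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def ollama_embed(text, model="nomic-embed-text"):
    """Embed text with a local Ollama server (assumed at the default port)."""
    body = json.dumps({"model": model, "input": text}).encode("utf-8")
    req = urllib.request.Request(
        "http://localhost:11434/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["embeddings"][0]
```

With a server running, `build_prompt(q, top_chunks(q, chunk_words(page_text), ollama_embed))` would produce a prompt containing only the most relevant parts of the page. Passing the embedding function as a parameter keeps the ranking logic usable with any embedding source.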

It’s good, but not AS good as Copilot

Does it actually work? Yes, it does. But it doesn’t work as well as Copilot, sadly.

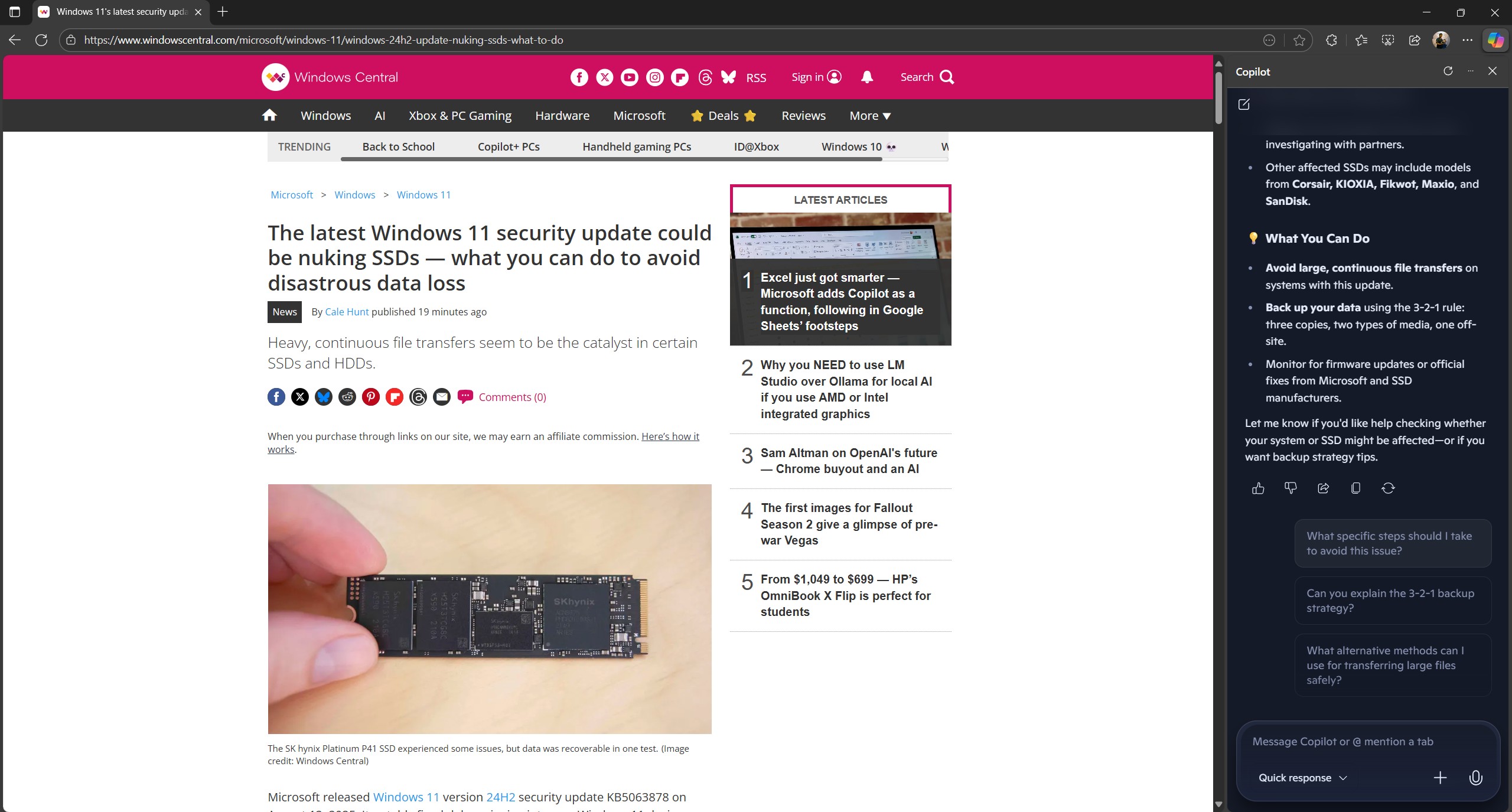

First, the positives. I can summarize articles with a range of models, and larger models tend to do better. It’s unreasonable to expect gemma3:1b to match gpt-oss:20b, but it still does the job of summarizing web content as directed.

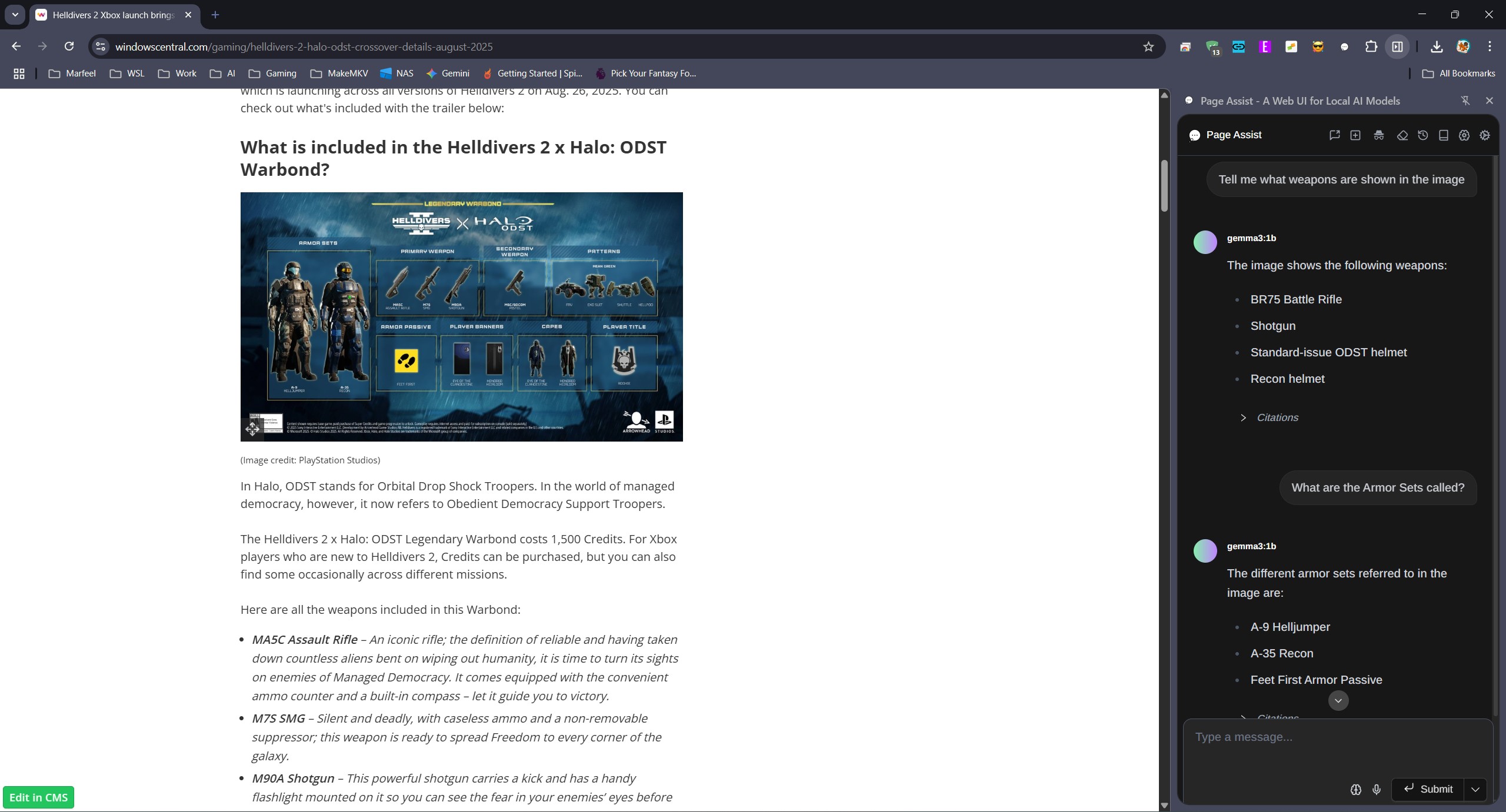

Page Assist can also use vision on images when paired with a suitable model. I probably chose too small a model for this, as you can see from the image above, but the tool can process and interpret images, perform Optical Character Recognition (OCR), and more.

So far, so good. The main problem is that it doesn’t notice when I move to a different web page. When I’m speed-reading, I keep the side panel open and reissue the same command as I move from page to page.

If I don’t initiate a fresh conversation or explicitly ask the model to disregard the previous article, it often rehashes information from the old article when summarizing the new one. This isn’t a major issue, but it can be somewhat frustrating.
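The manual workaround above amounts to resetting the conversation whenever the page changes. As a rough illustration only, and not a description of how Page Assist works or a feature it offers, the logic could look like the sketch below. The `PageChat` class and its methods are hypothetical names; the message dictionaries follow the role/content shape used by chat-style APIs such as Ollama’s `/api/chat`.

```python
class PageChat:
    """Keeps chat history for one page and discards it when the URL changes."""

    def __init__(self):
        self.url = None
        self.messages = []

    def ask(self, url, page_text, question):
        """Return the message list to send to the model for this question."""
        if url != self.url:
            # New page: drop the old article so it cannot leak into the summary.
            self.url = url
            self.messages = [
                {"role": "system", "content": "Use only this page:\n" + page_text}
            ]
        self.messages.append({"role": "user", "content": question})
        return list(self.messages)

    def record_answer(self, answer):
        """Store the model's reply so follow-up questions keep their context."""
        self.messages.append({"role": "assistant", "content": answer})
```

Follow-up questions on the same URL keep their history, while the first question on a new URL starts from a clean slate containing only the new page’s text.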

None of the models I’ve tested so far matches the quality of Copilot’s summaries. Copilot produces better summaries even without a detailed prompt.

With some time I could probably improve the local results, but that takes effort, and the whole point here is efficiency and ease of use. Copilot also feels more like a conversation, whereas these local models come across as plain and matter-of-fact.

As an analyst, I find Copilot particularly valuable for its ability to offer follow-up questions. While not always necessary, there are instances where these questions serve as a spark, presenting me with perspectives or angles I hadn’t previously considered.

A case where I think I might stick with online AI

In many scenarios, local AI has advantages over online tools, such as working offline. In this case, though, I need an internet connection anyway to reach the web pages I want summarized. Since the online AI works well for me here, there’s no compelling reason to switch.

I’d still encourage everyone to explore local AI. It’s a big focus for me right now, especially as a learning exercise, and every day teaches me something new.

And while working on this piece, I’ve found a new favorite way to use Ollama: Page Assist. I find it very impressive, and I may cover it in more depth once I’ve had a chance to explore it further.

But when I’m working, I think I’ll stick to the online tools for this task. At least for now.

2025-08-21 14:41