Katy Perry Reveals She Quit Nicotine 2 Months Ago

Katy Perry, known for her song “I Kissed a Girl,” announced on Instagram that she’s been nicotine-free for two months.

Players with Xbox Game Pass Ultimate or PC Game Pass can now play the new game Planet of Lana II: Children of the Leaf. It’s available on Xbox Series X, PC, and even Xbox One. That is welcome news for Xbox One Game Pass members, who have received few day-one releases this year. In fact, Planet of Lana II: Children of the Leaf is currently the highest-rated day-one game added to Xbox Game Pass in 2026.

Spider-Man has teamed up with many heroes, but some pairings just work better than others – whether they’re instant friends or have a playfully competitive relationship. We’re ranking seven of Spider-Man’s best partners, and it was a tough choice! Many other heroes could have made the list, but these seven have the strongest and most engaging dynamic with our friendly neighborhood Spider-Man. Let’s jump right in and see who made the cut!

The latest Scream movie delivers a fresh wave of terrifying killings, and it doesn’t shy away from graphic violence. Similar to Scream VI, the film features three Ghostface killers responsible for the deaths. One of them, Karl Allan Gibbs, is quickly taken out of the picture after being hit by a van. That leaves Marco Davis and Jessica Bowden – a neighbor of Sidney’s and an overly enthusiastic fan with a skewed interpretation of events – to carry out the rest of the attacks. With a total of seven deaths in Scream 7, who is responsible for each one?

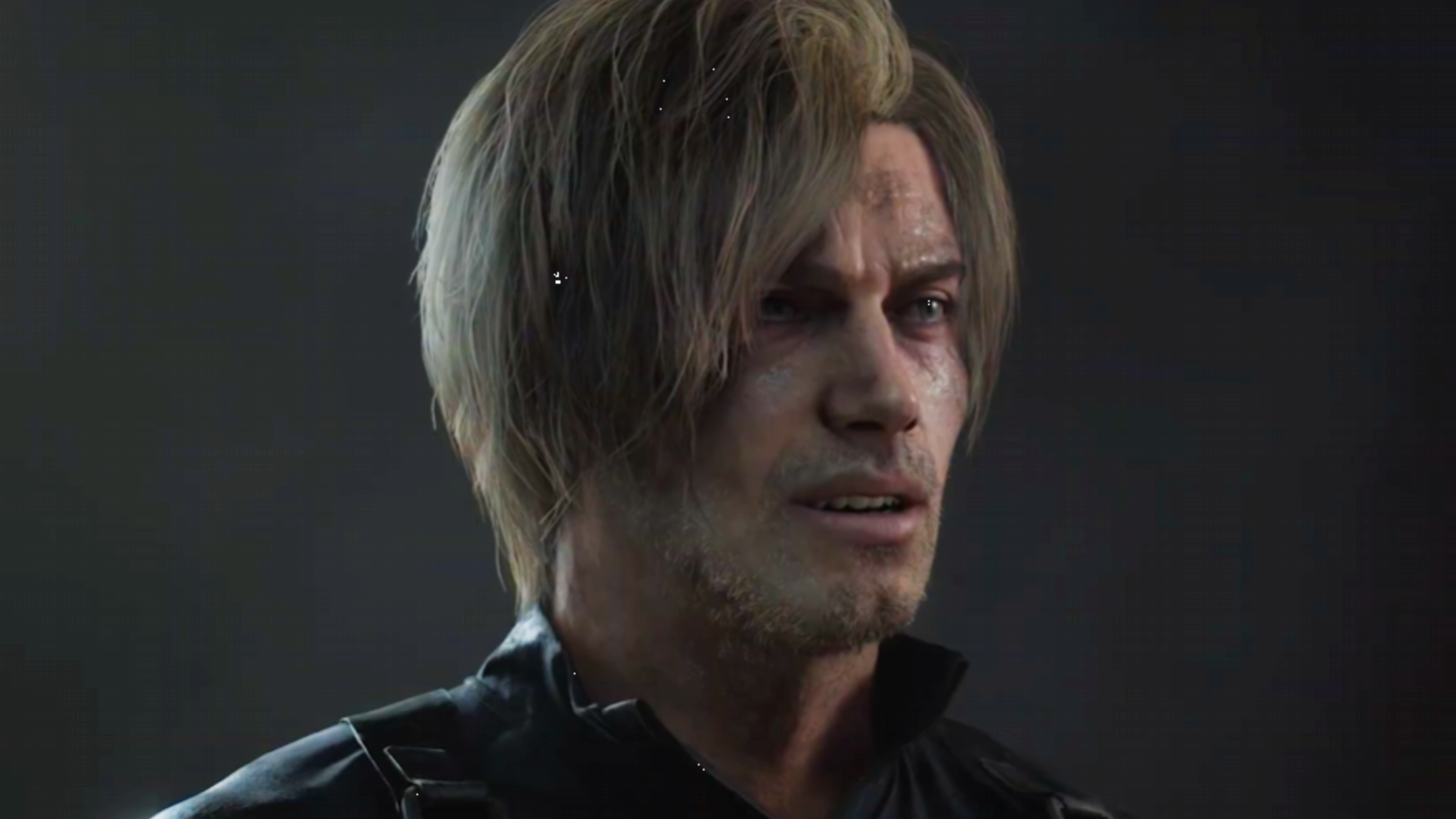

Two lesser-known characters make surprisingly fantastic appearances in Resident Evil Requiem, and both have become fan favorites over time. Their small roles fit neatly into the story, and players have been eagerly anticipating a showdown with them. Here’s a look at these two great character cameos and why they work so well.

In a recent episode of Family Guy, Peter Griffin is walking along a boardwalk while on the phone with Lois. Trying to dodge his responsibilities, he accidentally wanders right into Bob’s Burgers, where he learns about an “Emmy winner discount” that Homer Simpson is using. The gag is pointed because Family Guy has won Emmys before, but never the prestigious “Outstanding Comedy Series” award, a fact the characters seem to acknowledge. The crossover surprised many viewers, but it’s not the first time characters from Fox’s animated shows have appeared together.

Good news for Outlander fans! The final season has arrived, with new episodes now streaming on Starz. Many viewers have been waiting since the seventh season concluded in January 2025, and this isn’t a complete goodbye: more Outlander stories are planned beyond these episodes. It’s bittersweet to see this chapter close, but fans can take comfort in knowing the world of Outlander will continue.

According to Sean Phillip, her longtime friend and former assistant, Britney Spears texted him right after her DUI arrest in California to tell him what had happened.

Eva Mendes explained in 2020 that she intentionally keeps her family life private. Responding to a fan who asked why she doesn’t share photos of her daughters with Ryan Gosling, Esmeralda, 11, and Amada, 9, she said she was happy to talk about them within certain boundaries, but preferred not to post pictures of their everyday routines.

George Clooney is a big fan of Narcos, calling it his favorite show and even comparing it to the classic film The Godfather. The Netflix series stands out because it makes a serious topic engaging, thanks to its talented cast and the involvement of Steve Murphy and Javier Peña, the real DEA agents who pursued Pablo Escobar and are portrayed in the show.