The Space Race began in the mid-1900s; by the mid-2020s, we find ourselves squarely in the middle of an artificial intelligence (AI) race. No one is standing still, and players around the world are chasing the next breakthrough.

Chinese researchers have announced a new large language model called SpikingBrain1.0, which they say is up to 100 times faster than existing models such as those powering ChatGPT and Copilot. The announcement has generated considerable excitement within the scientific community.

The way the model works sets it apart from existing ones. It is being described as the first “brain-inspired” LLM. To see what that means, it helps to start with how conventional LLMs work.

Current large language models (LLMs) analyze an entire sentence at once. They look for patterns and relationships among the words, wherever each word sits in the sentence.

They do this with a method known as attention. Take a sentence such as this:

“The baseball player swung the bat and hit a home run.”

A human grasps the context of this sentence quickly, because the mind links words like “baseball” to the ones that follow without much thought. An LLM, on the other hand, cannot tell from the word “bat” alone whether it means a baseball bat or the flying mammal. Only by considering the rest of the sentence can it tell the two meanings apart.

So the LLM looks at the whole sentence and connects its components, picking up on key terms like “swung” and “baseball player.” From these it can settle on the right meaning, which leads to more accurate predictions.

It can do so because its training data includes many instances where “baseball” and “bat” appear together.

Processing entire sentences at once is resource-intensive, especially for longer inputs. Every word is compared to every other word, so the resources required grow quickly as the input grows. This is part of why most current large language models demand significant computing power.
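To make that cost concrete, here is a minimal sketch of all-pairs attention scoring in plain Python. The 2-D word vectors and the helper names `attention_weights` and `comparison_count` are made up for illustration and do not come from any real model. The point is only that the score table has one entry per pair of words, so doubling the input length quadruples the number of comparisons.

```python
import math

def attention_weights(vectors):
    """Return an n x n table of softmax-normalised dot-product scores."""
    dim = len(vectors[0])
    weights = []
    for query in vectors:
        # Compare this word against every word in the sentence (itself included).
        scores = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        peak = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

def comparison_count(n_tokens):
    """Number of word-to-word comparisons for an input of n_tokens words."""
    return n_tokens * n_tokens

tokens = ["baseball", "player", "swung", "bat"]
# Hypothetical toy embeddings, chosen by hand for this example only.
toy_vectors = [[1.0, 0.2], [0.8, 0.1], [0.6, 0.5], [0.9, 0.3]]

weights = attention_weights(toy_vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])

for n in (10, 100, 1000):
    print(n, "tokens ->", comparison_count(n), "pairwise comparisons")
```

Real models work with far larger vectors and many attention layers, but the quadratic growth in comparisons is the same.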

SpikingBrain1.0 is said to emulate the human brain’s approach by concentrating on the relevant words in a sentence, much as we do when we work out a sentence’s context. The brain does not run in its entirety all the time; it fires only the nerve cells it needs, when it needs them, and the model is designed to work the same way.
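As a rough illustration of the "fire only when needed" idea, the sketch below uses a toy leaky integrate-and-fire neuron, a textbook simplification of how spiking neurons are often modelled. The function name `lif_spikes`, the `threshold` and `leak` values, and the input stream are all assumptions made up for this example; this is not SpikingBrain1.0's actual mechanism.

```python
def lif_spikes(inputs, threshold=1.0, leak=0.5):
    """Toy leaky integrate-and-fire neuron.

    Returns a list with 1 at the steps where the neuron fires and 0 elsewhere.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Old charge decays (leaks) before the new input is added.
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes

# Hypothetical input stream: mostly weak signals with two strong ones.
inputs = [0.2, 1.0, 0.1, 0.0, 0.0, 1.5, 0.3, 0.0]
spikes = lif_spikes(inputs)
print(spikes)
print(f"{sum(spikes)} of {len(spikes)} steps produced a spike")
```

In an event-driven design, downstream computation would only happen at the steps that produce a spike, which is where the claimed savings would come from.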

It is hard not to be excited about a model that promises improvements on this scale. Its developers say it can be up to 100 times more efficient than existing large language models (LLMs). Unlike something like ChatGPT, it is designed to respond to inputs selectively, which reduces the resources it needs to run.

As written in the research paper:

Using this method, we can perform ongoing training with only a small fraction (less than 2%) of the data, and still achieve performance similar to well-known open-source models.

It is also notable that the model does not depend solely on NVIDIA GPUs for its computing power. It has been tested successfully on a homegrown chip from a Chinese company, MetaX.

There is plenty to consider here, but in theory SpikingBrain1.0 could mark a significant step forward in the evolution of LLMs. AI’s environmental impact is a major concern, given the immense energy that gigantic data centers consume and the equally massive demand for cooling them.

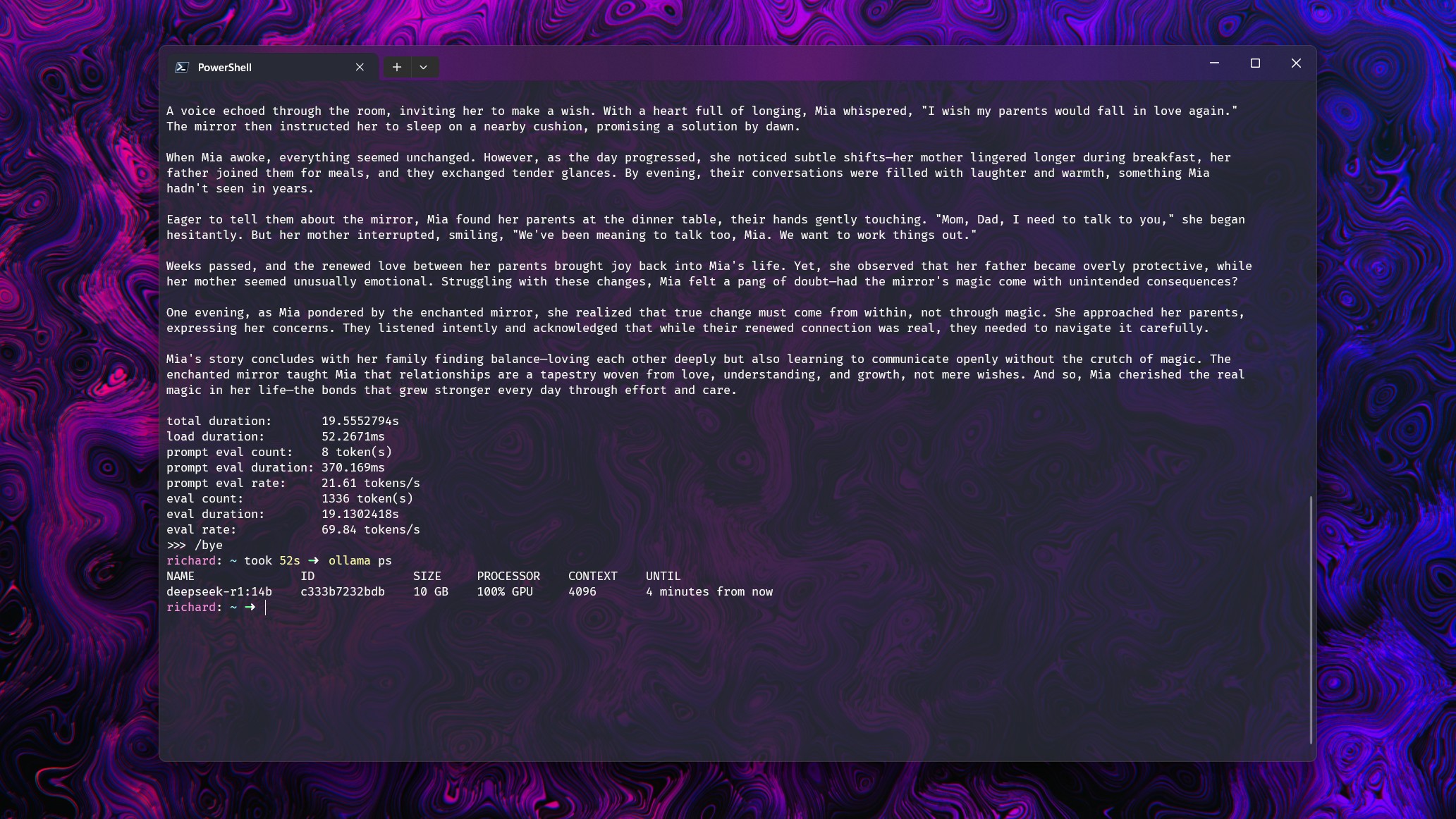

Running LLMs through Ollama at home on an RTX 5090 already heats up my home noticeably, since the card draws nearly 600W and isn’t very energy-efficient. Scale that up to a data center filled with similar GPUs, and the energy consumption and heat output become enormous.

This new development is promising, assuming the claims hold up. If the model delivers on both accuracy and efficiency, it could mark a significant advance. Exciting times are ahead!

2025-09-12 15:09