Over the past few days, I’ve been experimenting with OpenAI’s gpt-oss:20b. Being their inaugural open-source model, this is our initial opportunity to test it out directly, outside of using an API or tools such as ChatGPT or Copilot.

As a tech enthusiast, I’m excited about this model that leverages technology similar to GPT-4. It’s remarkable because it’s data cutoff is as recent as June 2024, which gives it an edge over many open-source models currently available. However, if you need more up-to-date information, it can also utilize web search to provide the most current details according to your request.

I unexpectedly thought of trying it against something practical that my son has been involved with. For those not from the UK, there’s a test known as the 11+. It serves as an admission requirement for students seeking entry into competitive schools in the UK.

While preparing for something similar, I was curious if model gpt-oss:20b might be able to take on a practice test, not just interpret it, but also solve the problems independently. I call this test the “10-year-old challenge” since it’s designed to assess whether the model performs as well or better than an average 10-year-old.

Fortunately, for my son anyway, he’s still way out in front of at least this AI model.

The test and the hardware

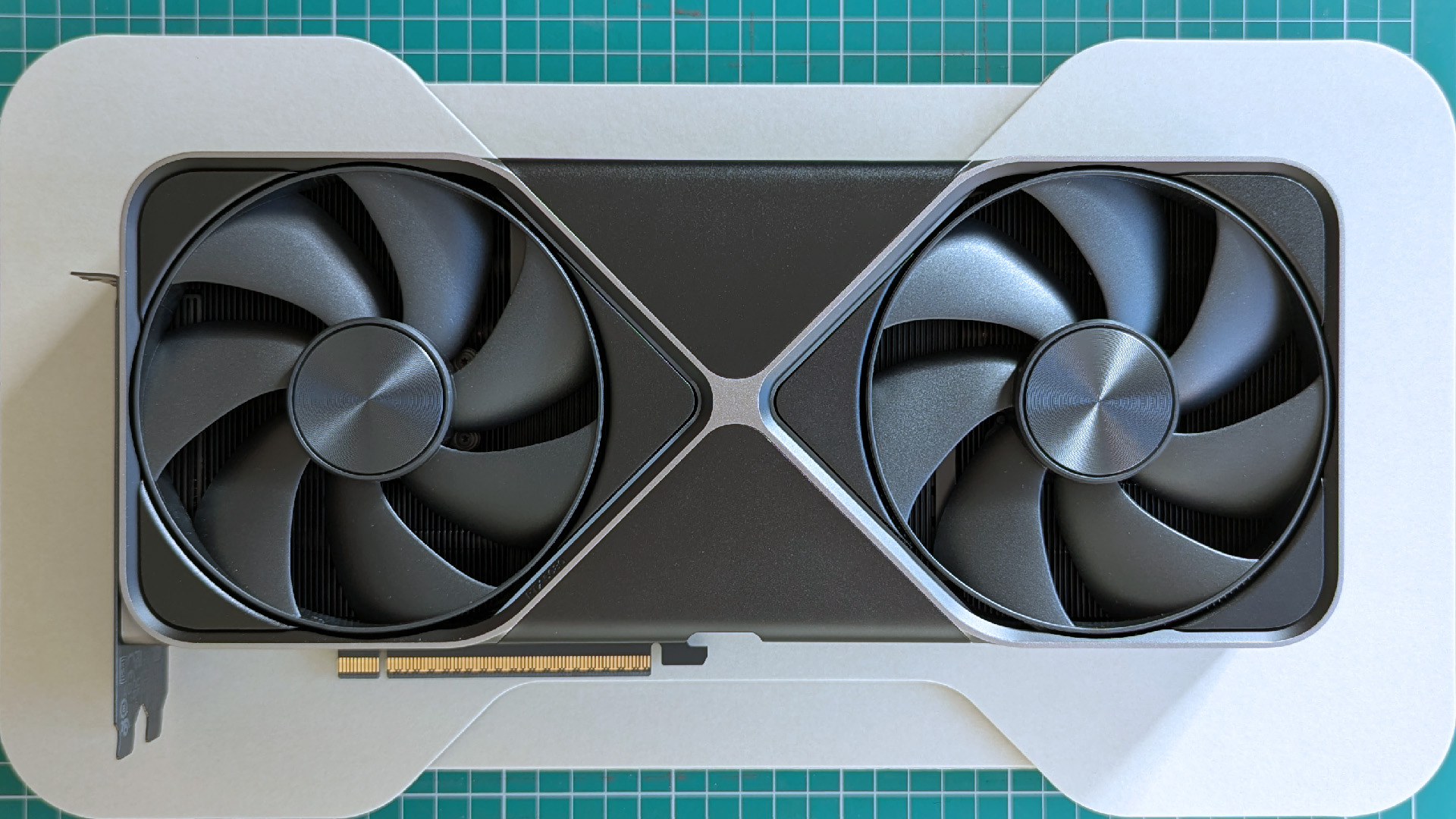

To begin with, let me clarify that while my PC is relatively powerful, it struggles to run gpt-oss:20b effectively. It appears my RTX 5080, with its 16GB of VRAM, doesn’t quite meet the requirements for this model. Consequently, both the CPU and system RAM have been working overtime.

Emphasizing that point is crucial since it can significantly reduce response times in a more advanced setup. Soon, I will acquire an RTX 5090 for testing, ideal for handling demanding AI tasks due to its ample 32GB VRAM.

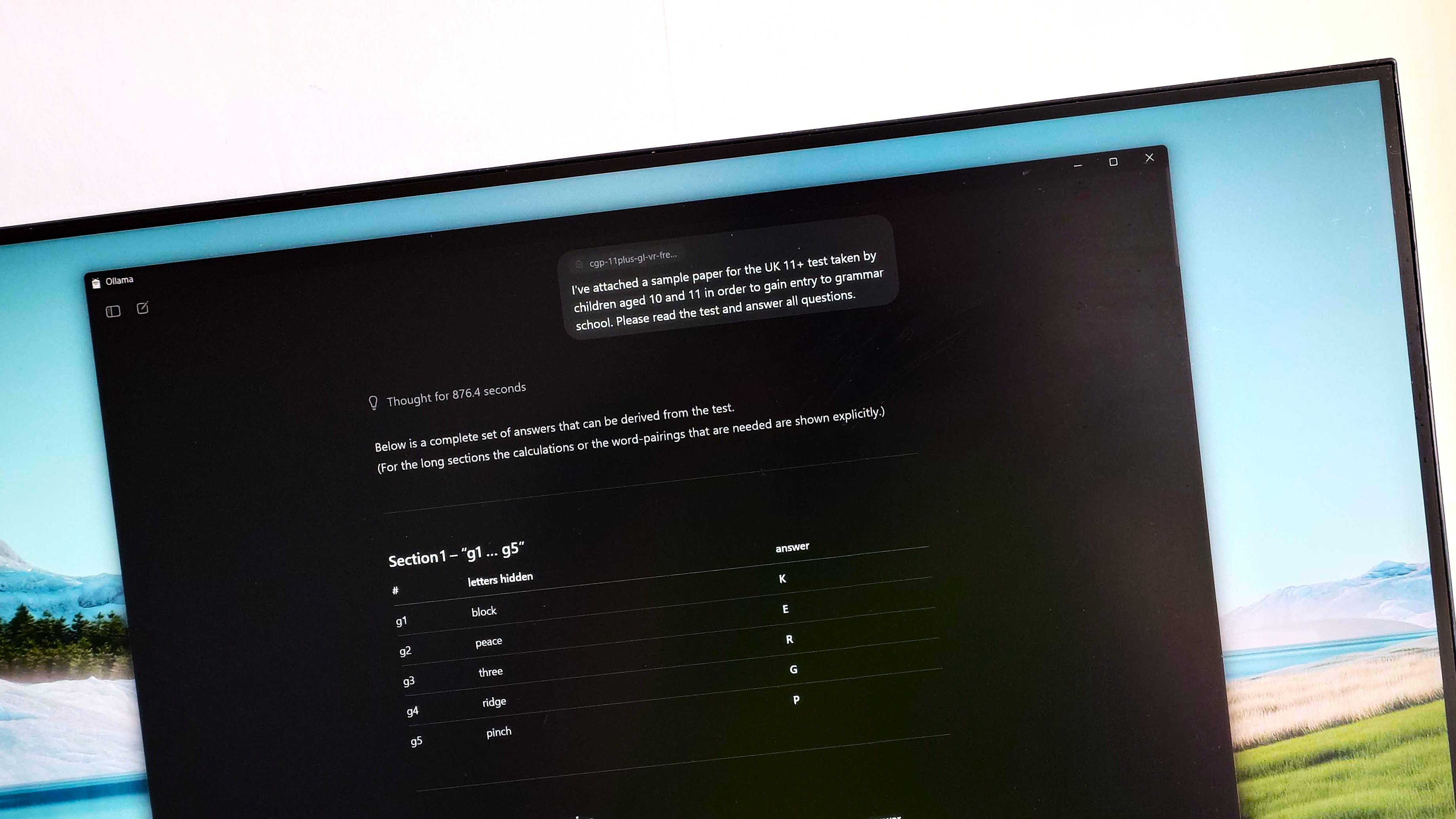

I found the test easy. I downloaded a mock 11+ paper as a practice, then uploaded it to a workspace using Ollama. The prompt I chose for the exercise was:

I’ve included a model exam paper designed for the UK’s 11+ test, which students aged 10-11 take to secure admission into grammar schools. Kindly review the test and answer all questions.

To be honest, this question isn’t the best, as I didn’t request an explanation on how you arrived at your answer. All I need is for you to provide the solution directly.

So how badly did it do?

Terrible.

After pondering for roughly 15 minutes, it produced 80 responses for the 80 queries in the exam. It appears to have answered correctly on nine of these. The passing score is slightly above that level.

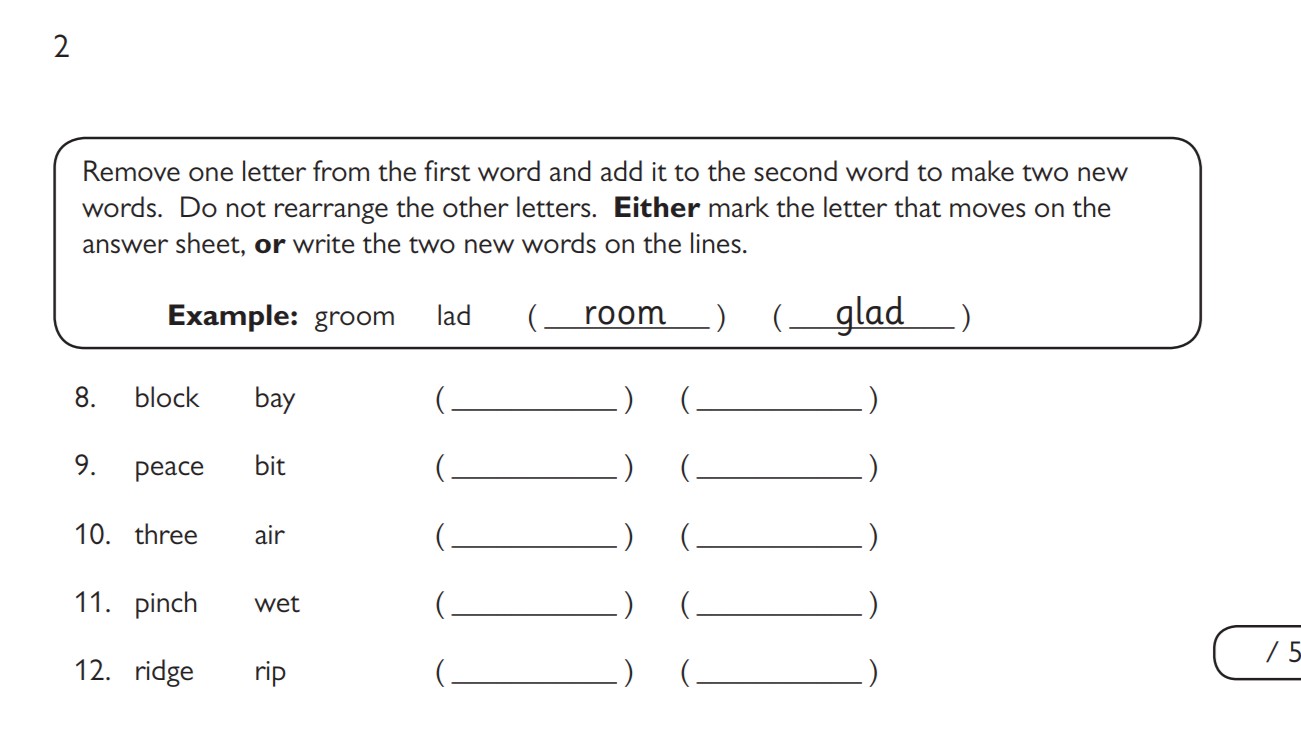

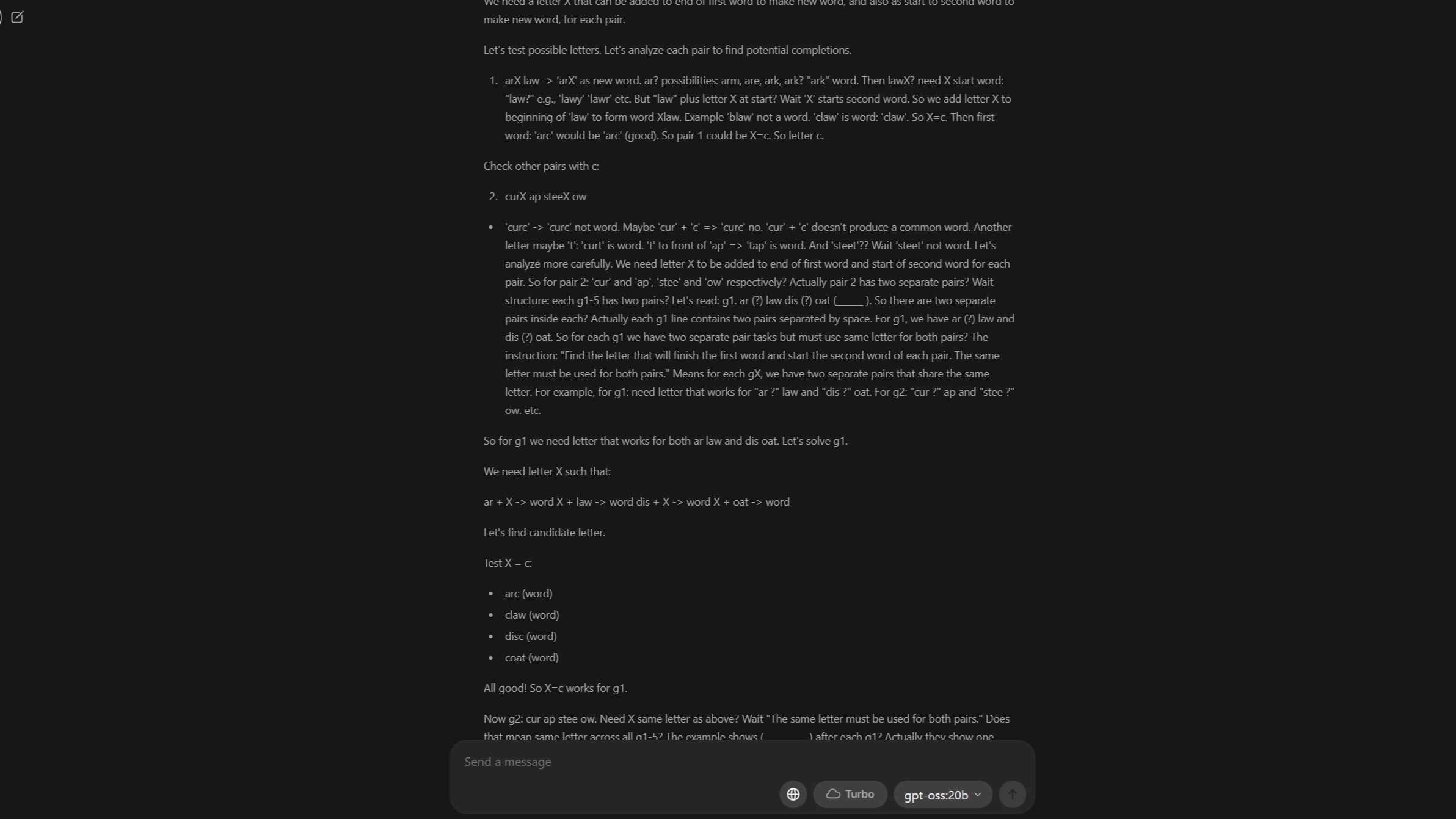

Some of the questions it got right are in the image above. In order, the answers given are:

- lock, baby

- pace, bite

- tree, hair

- inch, wept

- ride, grip

Well, let me tell you something intriguing! It wasn’t the initial queries in the test that it tackled correctly; rather, those were the first it managed to solve accurately. Following that, it answered four more questions correctly in a row. The clever thing was, a four-letter word was concealed at the end of one term, and the start of the next within a sentence. Quite fascinating, isn’t it?

The last two sentences in this section failed to elicit a response, and from there, things unraveled. Sequences involving numbers proved unsuccessful, and subsequently, the rest of the questions, regardless if they were based on words or numbers, failed to materialize an answer.

Instead of giving incorrect replies, several answers failed to address the questions at hand. Nevertheless, a closer look at the reasoning revealed surprising differences. The two examples below should help clarify this point.

In simpler terms, the model provided the same, non-pertinent numerical sequence as an answer to both questions. However, it’s worth noting that if you check the context window, you can observe the model’s thought process behind its responses, even after the answers have been given.

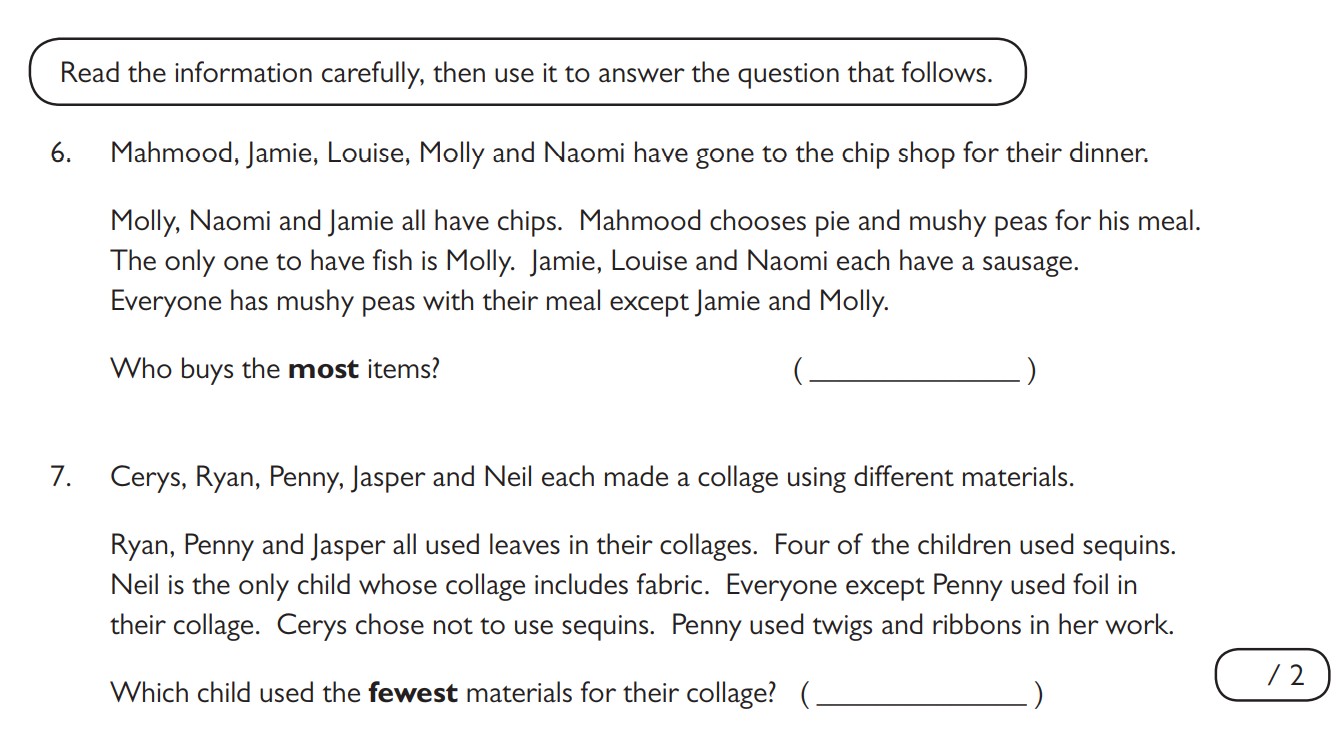

The model arrived at the response for question 6 by reasoning through the process, much like a child working on the same test might do. Although the explanation is detailed (which I hope you find understandable), it accurately reflects the steps typically taken to solve such problems.

“We need to count items each buys. We have 5 people. We have to deduce what each person has.

Given info:

Molly, Naomi, Jamie all have chips.

Mahmood chooses pie and mushy peas.

Only one to have fish is Molly. So Molly has fish.

Jamie, Louise and Naomi each have a sausage. So each of those three has sausage.

Everyone is eating mushy peas with their meal, except for Jamie and Molly. Therefore, it can be inferred that Jamie and Molly do not eat mushy peas. Since everyone else is having mushy peas, we can assume that Naomi, Louise, and Mahmood all have mushy peas as well. However, we are not told if Louise has chips or sausage. Here’s a list of who has what:

– Jamie and Molly do not have mushy peas

– Naomi has chips, sausage, and mushy peas

– It is not specified whether Louise has chips or sausage, but she likely has mushy peas since everyone else does (unless otherwise stated)

– Mahmood has mushy peas (given)

Mahmood mentioned pie and mushy peas, but whether he has chips is unclear as it wasn’t stated. It’s possible that he may have something else, but the information about chips is missing. However, we do know for sure that he doesn’t necessarily not have chips, but it’s also a possibility that he might not. We are working under certain limitations.

Jamie: chips, sausage. No mushy peas, no fish. Might have something else? Not specified.

Louise: Sausage. She might not be eating chips, but they could be on the side. Could there possibly be mushy peas too? Yes, since everyone else except Jamie and Molly usually have mushy peas, so it’s likely that Louise will have them as well. And maybe chips, but we haven’t explicitly stated that yet.

Naomi: chips, sausage, mushy peas. No fish? Not specified. Also maybe chips.

Molly prefers chips and fish, with the possibility of mushy peas, but not sausages. However, Jamie, Louise, and Naomi are the ones who have sausages. Therefore, it can be inferred that Molly does not have sausage, so she might only have chips and fish, or possibly just chips and fish without mushy peas.

Let’s determine how many of each item everyone purchases. Here are the items to consider: chips, mushy peas, fish, sausage, and pie. There may be additional items as well.

Let’s list each:

Mahmood was given pie and mushy peas for his meal. Mushy peas are shared by everyone except Jamie and Molly. Therefore, it can be inferred that Mahmood also has mushy peas because he’s not Jamie or Molly. Pie and mushy peas are separate items, so Mahmood has 2 different food items in total. However, chips have not been mentioned, so we can’t assume whether he has them or not.

Jamie: chips, sausage. No mushy peas. So 2 items.

Louise ordered sausage and mushy peas, possibly with chips, but it wasn’t clearly stated. If she had chips, that would make three items, but we can’t be sure since the information isn’t definitive.

The statement “Molly, Naomi and Jamie all have chips” means only those three people have chips, implying that Louise might not have chips if we assume that the statement is referring to everyone. However, this assumption isn’t explicitly stated, so it could be that only these three have chips while others might also have them.

The statement “Jamie, Louise and Naomi each have a sausage” indicates that Jamie and Naomi have sausages, but doesn’t specify whether Louise has chips or not. If we assume that the earlier statement about everyone having chips is true, then Louise wouldn’t have chips since only the mentioned three have chips. In this case, Louise would have just sausage and mushy peas, which are two items. But if Louise also had chips, it would make three items, but there’s no information to confirm this.

We need find who buys most items. Let’s evaluate possibilities.

In Case A, if Louise doesn’t have chips, and Mahmood, Jamie, and Louise each have two items (which include non-chips items), and Naomi has chips, sausage, and mushy peas (total of 3 items), Molly has chips and fish (also totaling 2 items). Therefore, Naomi has the most items.

In Case B, if Louise has chips, then Louise has 3 items, Naomi also has 3 items because she already had some items before getting the chips, and the others still have 2 items each. This results in a tie. However, since we need a unique answer, it’s reasonable to say that Naomi has more unique items compared to the others, considering she has chips, sausage, and mushy peas, while the others only have two items each (excluding the chips Louise already had). So, the likely answer is still Naomi.

Thus answer: Naomi.

But we need to check: Could someone have more than 3? Not likely.

Thus answer for question 6: Naomi.”

Instead, for this question, while it correctly provided the correct response, it also offered an unrelated answer as well.

skip and proceed.

“This is unsatisfactory.

Given that this is unrealistic, maybe the answer is not expected.

Thus I’d respond that it’s too long.

But I should provide all. But I’m stuck.

Given time, I’ll produce partial answer and state I can’t solve all.

Let’s do that.”

Instead of providing accurate responses, it seemed to spit out nonsensical answers. I suspect there might be a reason for this, and I’ll delve into it further below.

An interesting follow-up test

To clarify, I didn’t anticipate always getting a perfect response accuracy. Frankly, I had no expectations at all. However, I did pick up a few new insights along the way.

Firstly, I believe my initial configurations may not have been optimal. However, upon retesting, I extended the context length in Ollama to 32k as a potential solution for the numerous failures experienced.

In this recent instance, it required nearly the entire allocated hour to complete its line of thinking. Notably, it performed significantly well, getting 11 out of 12 questions correct in the initial batch. As the session progressed, it showed even more promise with number sequence questions, where the previous attempt had been less successful.

As a dedicated user, I found myself encountering an unexpected challenge this time. Instead of providing insightful responses to questions it seemed to have correctly answered in the past, the answers were once again nonsensical.

Instead, it devised a questionnaire resembling the original test. While the logical process showed some progress, albeit taking almost an hour, the final response failed to address the initial instruction entirely.

In essence, for extensive documents such as this one, remembering crucial points is vital. However, over-reliance on memory may lead to performance problems. In fact, the major reason for the extended time taken during this round was due to a heavy reliance on the CPU and system memory for numerous operations.

For large documents like this one, remembering important details is essential. But over-reliance on memory can cause performance troubles. This time around, it took longer because a considerable number of operations were delegated to my CPU and system memory.

On my system, boosting the context length to 128k doesn’t appear to transfer any part of the model onto my RTX 5080’s VRAM; instead, it relies on the CPU and system memory for all operations, which makes it significantly slower. This issue persists even with basic prompts. As the evaluation rate rises from 9 tokens per second at 128k to 42 tokens per second with a context length of 8k, and further increases to 82 tokens per second at 4k, this indicates that the model’s performance is heavily dependent on reduced context lengths.

Fun with some lessons learned

As a researcher, I embarked on an exploration to determine if GPT-oss:20b was capable of interpreting PDF files effectively. To my satisfaction, it appears that the task has been successfully accomplished. It took the provided file, read through it, and attempted to carry out the requested actions with the document.

Initially, the execution appeared constrained by its scope, lacking the necessary storage to perform as desired. However, the subsequent attempt, albeit time-consuming, proved more fruitful.

I haven’t repeated the test using the maximum 128k context length that Ollama supports within its app, for two reasons: firstly, I don’t currently have the available time, and secondly, I’m uncertain whether my existing hardware can handle it effectively.

The RTX 5080, with its 16GB of VRAM, falls short for this particular model. Although it was employed, the CPU took on some of the workload. Compared to other available GPT-oss models, it’s relatively smaller but still quite substantial for a gaming PC like this one.

On my system, I ran gpt-oss:20b at its standard settings while tackling the test provided. Interestingly, during this process, only 65% of the workload was handled by the GPU, with the CPU handling the remaining 35%. Upon closer examination, it appears that when the context length is maximized, none of the model seems to be loaded onto the GPU.

But I am impressed with its reasoning capabilities.

This model tends to work at a relatively leisurely pace, considering that I’ve taken into account my hardware’s capabilities. It appears that the model considers every input thoroughly, as evidenced by its 18-second delay before responding when asked about its knowledge cutoff date. It meticulously examines various potential explanations and reasons for arriving at its chosen response.

Of course, this option has its advantages, but if you’re seeking a model that works quickly at home, you might want to explore other options. Currently, however, Gemma3:12b is my top choice for performance given the equipment I possess.

As an observer, I must admit that it wasn’t a true real-world test, but more of a comparison between human intelligence and artificial intelligence. Regrettably, this AI model didn’t outperform, ensuring that my 10-year-old can continue to rest assured about his superiority. However, engaging with this and other models has undeniably expanded my own knowledge base.

In such a case, I wouldn’t have any reason to be unhappy. Even if these activities ended up making my office feel like a greenhouse during the afternoon.

Read More

- How to Get the Bloodfeather Set in Enshrouded

- Gold Rate Forecast

- Uncovering Hidden Order: AI Spots Phase Transitions in Complex Systems

- 10 Movies That Were Secretly Sequels

- Auto 9 Upgrade Guide RoboCop Unfinished Business Chips & Boards Guide

- 32 Kids Movies From The ’90s I Still Like Despite Being Kind Of Terrible

- USD JPY PREDICTION

- These Are the 10 Best Stephen King Movies of All Time

- Where Winds Meet: How To Defeat Shadow Puppeteer (Boss Guide)

- Best Werewolf Movies (October 2025)

2025-08-16 17:49